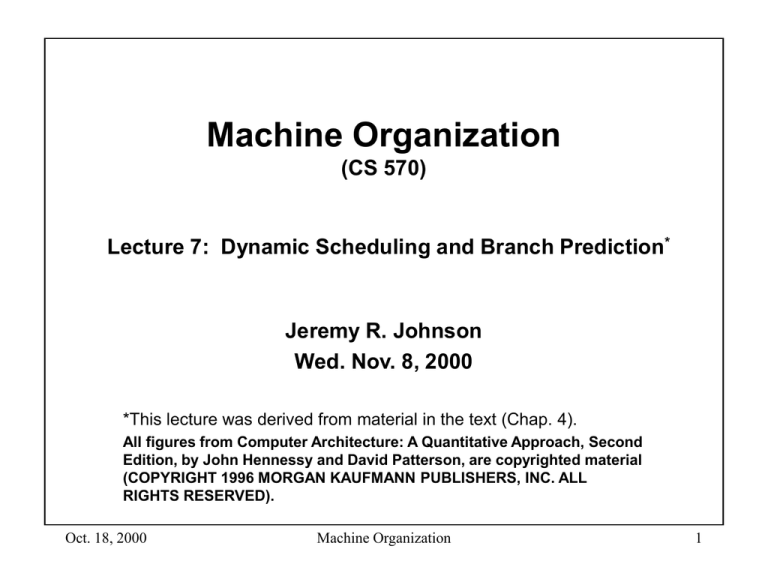

Machine Organization

(CS 570)

Lecture 7: Dynamic Scheduling and Branch Prediction*

Jeremy R. Johnson

Wed. Nov. 8, 2000

*This lecture was derived from material in the text (Chap. 4).

All figures from Computer Architecture: A Quantitative Approach, Second Edition, by John Hennessy and David Patterson, are copyrighted material (COPYRIGHT 1996 MORGAN KAUFMANN PUBLISHERS, INC. ALL RIGHTS RESERVED).

Oct. 18, 2000

Machine Organization

1

Introduction

• Objective: To understand how pipeline scheduling, loop unrolling, and branch prediction can be carried out in hardware.
  – We contrast the dynamic approaches with the compiler techniques discussed previously. Since many of these techniques become more important when multiple instructions are issued per cycle, we give a brief overview of techniques for multiple instruction issue.
  – We will also review static techniques for branch prediction.
• Topics
  – Review of static branch prediction
    • tcov profiling tool
  – Dynamic scheduling
    • scoreboard
    • Tomasulo's algorithm
  – Branch prediction
  – Multiple issue
    • superscalar
    • VLIW


Dynamic Scheduling

• Hardware rearranges instructions at runtime to reduce stalls
  – simplifies the compiler
  – catches some cases where dependences are not known at compile time
  – Scoreboard (RAW)
  – Register renaming (WAW, WAR)
• A major limitation of the pipelining techniques seen so far is that they use in-order instruction issue: if an instruction is stalled, no later instructions can proceed.

  DIVD F0,F2,F4     ; long-running instruction
  ADDD F10,F0,F8    ; stalls waiting for F0 from DIVD
  SUBD F12,F8,F14   ; no dependence on DIVD; its stall is eliminated by
                    ; no longer requiring in-order execution

Split ID Stage

• Issue: decode the instruction and check for structural hazards
• Read operands: wait until there are no data hazards, then read the operands
• This allows multiple instructions to be in execution at the same time.
• Out-of-order execution is possible, so WAR and WAW hazards may occur:
  – DIVD F0,F2,F4
  – ADDD F10,F0,F8
  – SUBD F8,F8,F14   ; WAR on F8 with ADDD; if dest = F10, WAW
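The three hazard types can be checked mechanically from each instruction's destination and source registers. The sketch below is a minimal illustration, assuming each instruction is reduced to a hypothetical (destination, sources) pair; it reports the hazards a later instruction has with an earlier one if the two are reordered or overlapped, using the example above.

```python
from typing import List, Optional, Tuple

# An instruction reduced to (destination register, source registers).
Instr = Tuple[Optional[str], List[str]]

def hazards(earlier: Instr, later: Instr) -> List[str]:
    """Return the hazards between two instructions given in program order."""
    e_dest, e_srcs = earlier
    l_dest, l_srcs = later
    found = []
    if e_dest is not None and e_dest in l_srcs:
        found.append("RAW")  # later reads a register the earlier one writes
    if l_dest is not None and l_dest in e_srcs:
        found.append("WAR")  # later writes a register the earlier one reads
    if l_dest is not None and l_dest == e_dest:
        found.append("WAW")  # both write the same register
    return found

DIVD = ("F0", ["F2", "F4"])
ADDD = ("F10", ["F0", "F8"])
SUBD = ("F8", ["F8", "F14"])

print(hazards(DIVD, ADDD))                    # ['RAW']
print(hazards(ADDD, SUBD))                    # ['WAR']
print(hazards(ADDD, ("F10", ["F8", "F14"])))  # ['WAW'] (SUBD dest changed to F10)
```

DIVD and SUBD share no registers, so `hazards(DIVD, SUBD)` is empty, which is why SUBD could run ahead of the stalled ADDD.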

Scoreboard


Pipeline Steps with Scoreboard

• Issue
  – if a functional unit is free and no other active instruction has the same destination register, issue the instruction and update the scoreboard
  – there may be a queue for fetched instructions (fetch stalls when it is full)
  – avoids WAW hazards (by stalling issue)
• Read operands
  – the scoreboard monitors the availability of source operands
  – when they are available, it tells the functional unit to read them
  – resolves RAW hazards (instructions may be sent to execution out of order)
• Execution
  – the functional unit begins execution upon receiving its operands
  – it notifies the scoreboard when the result is ready
• Write result
  – when the result is available, check for WAR hazards, stall if necessary, and then write to the register

Example (Fig. 4.4 - 4.6)


Checks and Bookkeeping

• Issue
  – wait until: not Busy[FU] and not Result[D]
  – Busy[FU] = yes; Op[FU] = op; Fi[FU] = D;
  – Fj[FU] = S1; Fk[FU] = S2; Qj[FU] = Result[S1]; Qk[FU] = Result[S2];
  – Rj[FU] = not Qj[FU]; Rk[FU] = not Qk[FU]; Result[D] = FU;
• Read operands
  – wait until: Rj[FU] and Rk[FU]
  – Rj[FU] = no; Rk[FU] = no; Qj[FU] = 0; Qk[FU] = 0;
• Execution complete
  – functional unit done
• Write result
  – wait until: for all f, (Fj[f] ≠ Fi[FU] or Rj[f] = no) and (Fk[f] ≠ Fi[FU] or Rk[f] = no)
  – for all f: if Qj[f] = FU then Rj[f] = yes; for all f: if Qk[f] = FU then Rk[f] = yes;
  – Result[Fi[FU]] = 0; Busy[FU] = no;
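The checks above can be written almost directly as code. This is a minimal sketch, not a cycle-accurate simulator: it keeps only the functional-unit status fields (Busy, Op, Fi, Fj, Fk, Qj, Qk, Rj, Rk) and the register result status, uses None where the slide uses 0, and the unit names Divide, Add1 and Add2 are hypothetical. Each method returns False when the slide says "wait until".

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class FUStatus:
    busy: bool = False
    op: Optional[str] = None
    fi: Optional[str] = None  # destination register
    fj: Optional[str] = None  # source registers
    fk: Optional[str] = None
    qj: Optional[str] = None  # unit producing Fj (None = no pending producer)
    qk: Optional[str] = None
    rj: bool = False          # Fj ready and not yet read
    rk: bool = False

class Scoreboard:
    def __init__(self, units: List[str]):
        self.fu: Dict[str, FUStatus] = {u: FUStatus() for u in units}
        self.result: Dict[str, str] = {}  # register -> unit that will write it

    def issue(self, unit: str, op: str, d: str, s1: str, s2: str) -> bool:
        # Wait until not Busy[FU] and not Result[D] (structural and WAW checks).
        if self.fu[unit].busy or d in self.result:
            return False
        qj, qk = self.result.get(s1), self.result.get(s2)
        self.fu[unit] = FUStatus(True, op, d, s1, s2, qj, qk, qj is None, qk is None)
        self.result[d] = unit
        return True

    def read_operands(self, unit: str) -> bool:
        f = self.fu[unit]
        if not (f.rj and f.rk):  # RAW: a source is still being produced
            return False
        f.rj = f.rk = False
        f.qj = f.qk = None
        return True

    def can_write(self, unit: str) -> bool:
        # WAR check: no unit may still need to read the old value of Fi[unit].
        fi = self.fu[unit].fi
        return all((g.fj != fi or not g.rj) and (g.fk != fi or not g.rk)
                   for g in self.fu.values())

    def write_result(self, unit: str) -> bool:
        if not self.can_write(unit):
            return False
        for g in self.fu.values():  # wake up units waiting on this result
            if g.qj == unit:
                g.rj = True
            if g.qk == unit:
                g.rk = True
        del self.result[self.fu[unit].fi]
        self.fu[unit] = FUStatus()  # Busy[FU] = no
        return True

sb = Scoreboard(["Divide", "Add1", "Add2"])
sb.issue("Divide", "DIVD", "F0", "F2", "F4")
sb.issue("Add1", "ADDD", "F10", "F0", "F8")
sb.issue("Add2", "SUBD", "F8", "F8", "F14")
print(sb.read_operands("Add1"))    # False: waits for F0 (RAW)
print(sb.read_operands("Add2"))    # True
print(sb.read_operands("Divide"))  # True
print(sb.write_result("Add2"))     # False: ADDD has not read F8 yet (WAR)
print(sb.write_result("Divide"))   # True: F0 is now ready for Add1
print(sb.read_operands("Add1"))    # True
print(sb.write_result("Add2"))     # True
```

The trace shows the scoreboard's main cost: SUBD finishes early but must hold its result until ADDD has read the old F8.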

Register Renaming (Tomasulo)

• Uses reservation stations to buffer instructions waiting to issue
  – operands are fetched as soon as they are available
  – eliminates the need to get an operand from a register
  – pending instructions designate the reservation station that will provide their input
  – with successive writes to a register, only the last one actually updates the register
  – register specifiers are renamed to reservation stations
  – eliminates WAW and WAR hazards
• Uses distributed control (common data bus, CDB)
• Results go directly to functional units from the reservation stations rather than through the register file
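Renaming itself can be illustrated apart from the rest of Tomasulo's algorithm. The sketch below makes simplifying assumptions: the tags RS1, RS2, … are hypothetical and handed out one per instruction in order (real hardware uses whichever reservation station is free and releases it when the result is broadcast), and completion is not modeled. Each destination is renamed to a fresh tag, and each source is replaced by the tag of its most recent pending writer, or left as a register name when its value can be read from the register file.

```python
from typing import Dict, List, Tuple

Instr = Tuple[str, str, List[str]]  # (opcode, destination, sources)

def rename(code: List[Instr]) -> Tuple[List[Instr], Dict[str, str]]:
    """Rename destinations to hypothetical reservation-station tags."""
    pending: Dict[str, str] = {}  # register -> tag of its latest pending writer
    renamed: List[Instr] = []
    for i, (op, dest, srcs) in enumerate(code, start=1):
        # Sources are resolved before this instruction's own destination is
        # renamed, so SUBD F8,F8,F14 reads the old F8.
        new_srcs = [pending.get(s, s) for s in srcs]
        tag = f"RS{i}"
        pending[dest] = tag  # only the last writer will update the register
        renamed.append((op, tag, new_srcs))
    return renamed, pending

code = [("DIVD", "F0", ["F2", "F4"]),
        ("ADDD", "F10", ["F0", "F8"]),
        ("SUBD", "F8", ["F8", "F14"])]
for instr in rename(code)[0]:
    print(instr)
```

ADDD now waits on tag RS1 instead of register F0, and SUBD writes RS3 instead of F8, so ADDD's read of F8 no longer conflicts with it (the WAR hazard is gone). If SUBD's destination were F10, F10 would end up mapped to RS3, the last writer, which is how the WAW hazard disappears.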

Hardware for Register Renaming (Fig. 4.8)

Pipeline Steps with Renaming

• Issue
  – get an instruction from the floating-point operation queue
  – if an FP reservation station is free, send the instruction to it, along with its operands if they are in the registers
  – for a load or store, issue if there is an available buffer
  – renaming is done here
• Execution
  – if one or more operands are not yet available, monitor the CDB while waiting for them to be computed
  – when the operands are available, execute the operation
  – delaying execution until the operands are available is how RAW hazards are avoided
• Write result
  – when the result is available, write it on the CDB and from there into the registers, reservation stations, and waiting store buffers

Example (Fig. 4.9 - 4.10)


Checks and Bookkeeping

• Issue
  – wait until: reservation station (or buffer) r is empty
  – if (Register[S1].Qi ≠ 0) { RS[r].Qj = Register[S1].Qi }
  – else { RS[r].Vj = S1; RS[r].Qj = 0; }
  – if (Register[S2].Qi ≠ 0) { RS[r].Qk = Register[S2].Qi }
  – else { RS[r].Vk = S2; RS[r].Qk = 0; }
  – RS[r].Busy = yes; Register[D].Qi = r;
• Execution complete
  – wait until: (RS[r].Qj = 0) and (RS[r].Qk = 0)
  – no bookkeeping: the operands are in Vj and Vk
• Write result
  – wait until: execution completed at r and CDB available
  – for all x: if (Register[x].Qi = r) then { Fx = result; Register[x].Qi = 0 }
  – for all x: if (RS[x].Qj = r) then { RS[x].Vj = result; RS[x].Qj = 0 }
  – for all x: if (RS[x].Qk = r) then { RS[x].Vk = result; RS[x].Qk = 0 }
  – for all x: if (Store[x].Qi = r) then { Store[x].V = result; Store[x].Qi = 0 }
  – RS[r].Busy = no;
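The issue and write-result bookkeeping can be sketched as follows. This is an illustrative model under simplifying assumptions: store buffers and the Store[x] update are omitted, None stands for the slide's 0, each `write_result` call is one CDB broadcast, and the station names Mult1, Add1 and Add2 and the register values are hypothetical.

```python
import operator
from dataclasses import dataclass
from typing import Dict, List, Optional

OPS = {"ADDD": operator.add, "SUBD": operator.sub,
       "MULTD": operator.mul, "DIVD": operator.truediv}

@dataclass
class Station:
    busy: bool = False
    op: Optional[str] = None
    vj: Optional[float] = None  # operand values, once known
    vk: Optional[float] = None
    qj: Optional[str] = None    # station producing the operand (None = ready)
    qk: Optional[str] = None

class Tomasulo:
    def __init__(self, stations: List[str], regs: Dict[str, float]):
        self.rs = {name: Station() for name in stations}
        self.regs = dict(regs)                                     # Fx
        self.qi: Dict[str, Optional[str]] = {r: None for r in regs}  # Register[x].Qi

    def _operand(self, reg: str):
        # Either the tag of a pending producer or the current register value.
        tag = self.qi[reg]
        return (tag, None) if tag is not None else (None, self.regs[reg])

    def issue(self, r: str, op: str, d: str, s1: str, s2: str) -> bool:
        st = self.rs[r]
        if st.busy:                       # wait until station empty
            return False
        st.busy, st.op = True, op
        st.qj, st.vj = self._operand(s1)
        st.qk, st.vk = self._operand(s2)
        self.qi[d] = r                    # rename: D now waits on station r
        return True

    def ready(self, r: str) -> bool:
        st = self.rs[r]
        return st.busy and st.qj is None and st.qk is None

    def execute(self, r: str) -> float:
        if not self.ready(r):
            raise RuntimeError(f"{r}: operands not yet available")
        st = self.rs[r]
        return OPS[st.op](st.vj, st.vk)

    def write_result(self, r: str, result: float) -> None:
        # Broadcast on the CDB to registers and waiting stations.
        for x in self.qi:
            if self.qi[x] == r:
                self.regs[x], self.qi[x] = result, None
        for st in self.rs.values():
            if st.qj == r:
                st.vj, st.qj = result, None
            if st.qk == r:
                st.vk, st.qk = result, None
        self.rs[r] = Station()            # RS[r].Busy = no

t = Tomasulo(["Mult1", "Add1", "Add2"],
             {"F0": 0.0, "F2": 8.0, "F4": 2.0, "F8": 1.0, "F10": 0.0, "F14": 0.5})
t.issue("Mult1", "DIVD", "F0", "F2", "F4")
t.issue("Add1", "ADDD", "F10", "F0", "F8")   # waits on Mult1 for F0; holds F8 = 1.0
t.issue("Add2", "SUBD", "F8", "F8", "F14")   # F8 renamed to Add2
t.write_result("Add2", t.execute("Add2"))    # F8 = 0.5; Add1 keeps the old value
t.write_result("Mult1", t.execute("Mult1"))  # F0 = 4.0, forwarded to Add1
t.write_result("Add1", t.execute("Add1"))    # F10 = 4.0 + 1.0
print(t.regs["F0"], t.regs["F8"], t.regs["F10"])  # 4.0 0.5 5.0
```

Unlike the scoreboard trace, SUBD writes F8 immediately: ADDD captured the old F8 value at issue, so there is no WAR stall.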

Dynamic Unrolling (Fig. 4.12)

Loop: LD    F0,0(R1)
      MULTD F4,F0,F2
      SD    0(R1),F4
      SUBI  R1,R1,#8
      BNEZ  R1,Loop

• If we predict that the branch is taken, using reservation stations will allow multiple executions of this loop to proceed at once (dynamic unrolling)


Dynamic Branch Prediction

• Provide hardware to dynamically predict whether a branch is taken or not
• For a taken prediction to help, the target address must be available before we would normally determine whether to take the branch
  – a branch target buffer (BTB) provides a cache of branch target addresses
• The simplest approach uses 1 bit to remember whether the branch was taken the last time or not
• In a simple loop, this leads to two mispredictions each time the loop runs: one on the last iteration (the exit) and one on the first iteration of the next run
  – A two-bit scheme improves this situation: the prediction must miss twice before it changes
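The difference between the 1-bit and 2-bit schemes on a loop branch can be checked with a short simulation. This sketch models each scheme as an n-bit saturating counter that predicts taken in its upper half (for n = 1 that is simply the last outcome); the starting state and the loop shape (10 iterations, run 3 times) are assumptions chosen for illustration.

```python
from typing import List

def mispredictions(outcomes: List[bool], bits: int) -> int:
    """Count mispredictions of one branch under an n-bit saturating counter."""
    max_count = (1 << bits) - 1
    threshold = 1 << (bits - 1)  # predict taken when counter >= threshold
    counter = max_count          # assumed start: strongly taken
    misses = 0
    for taken in outcomes:
        if (counter >= threshold) != taken:
            misses += 1
        counter = min(counter + 1, max_count) if taken else max(counter - 1, 0)
    return misses

# Loop-closing branch: taken 9 times, then not taken; the loop is run 3 times.
loop_branch = ([True] * 9 + [False]) * 3
print(mispredictions(loop_branch, bits=1))  # 5
print(mispredictions(loop_branch, bits=2))  # 3
```

The 1-bit predictor misses on every exit and again on re-entry (2 per run after the first), while the 2-bit counter misses only on each exit.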

Two-Bit Prediction Scheme


Branch Target Buffer


Frequency of Mispredictions (Fig. 4.14)

4096-entry 2-bit prediction buffer

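A prediction buffer like the 4096-entry one measured here is a small memory of 2-bit counters indexed by the low-order bits of the branch address. The sketch assumes 4-byte instructions (so the two lowest address bits are dropped before indexing) and counters that start at 0; both are illustrative choices, and the class name is hypothetical. Because the buffer holds no address tags, two branches whose addresses agree in the index bits share a counter.

```python
class PredictionBuffer:
    """Untagged table of 2-bit saturating counters indexed by branch address."""

    def __init__(self, entries: int = 4096):
        self.entries = entries
        self.counters = [0] * entries  # 0,1 predict not taken; 2,3 predict taken

    def index(self, pc: int) -> int:
        # Drop the 2 byte-offset bits of a 4-byte instruction, keep the low bits.
        return (pc >> 2) % self.entries

    def predict(self, pc: int) -> bool:
        return self.counters[self.index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self.index(pc)
        c = self.counters[i]
        self.counters[i] = min(c + 1, 3) if taken else max(c - 1, 0)

buf = PredictionBuffer()
buf.update(0x1000, True)
buf.update(0x1000, True)
print(buf.predict(0x1000))             # True
print(buf.predict(0x1000 + 4096 * 4))  # True: aliases to the same entry
print(buf.predict(0x1004))             # False: a different entry
```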

Handling Instructions with BTB (Fig. 4.23)

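The fetch-time lookup and update rules for a branch-target buffer can be sketched as below. This is a hedged illustration, assuming a buffer that stores only taken branches (an entry is added when a branch not in the buffer turns out taken, and deleted when a buffered branch turns out not taken), 4-byte instructions, and an unbounded dictionary in place of a fixed-size cache; the class and method names are hypothetical.

```python
from typing import Dict

class BranchTargetBuffer:
    def __init__(self) -> None:
        self.entries: Dict[int, int] = {}  # branch PC -> predicted target PC

    def next_pc(self, pc: int) -> int:
        """Fetch stage: use the predicted target on a hit, else fall through."""
        return self.entries.get(pc, pc + 4)

    def resolve(self, pc: int, taken: bool, target: int) -> bool:
        """Update after the branch at pc resolves.

        Returns True if the instructions fetched after it must be squashed
        (the predicted next PC was wrong)."""
        predicted = self.next_pc(pc)
        actual = target if taken else pc + 4
        if taken:
            self.entries[pc] = target   # enter (or refresh) a taken branch
        else:
            self.entries.pop(pc, None)  # delete an entry that predicted taken
        return predicted != actual

btb = BranchTargetBuffer()
print(btb.resolve(100, taken=True, target=40))   # True: not in buffer, but taken
print(btb.next_pc(100))                          # 40
print(btb.resolve(100, taken=True, target=40))   # False: correctly predicted
print(btb.resolve(100, taken=False, target=40))  # True: entry deleted
print(btb.next_pc(100))                          # 104
```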

Multiple Issue

• The previous techniques eliminate data and control stalls, allowing us to approach the ideal CPI of 1
• To improve performance further, we would like to decrease the CPI to less than 1. This cannot happen if we can issue only one instruction per cycle
• Multiple-issue processors
  – superscalar
  – VLIW

Code Example

• Latencies
  Instruction producing result   Instruction using result   Latency
  FP ALU op                      FP ALU op                  3
  FP ALU op                      Store                      2
  Load                           FP ALU op                  1
  Load                           Store                      0

Loop: LD   F0,0(R1)    ; F0 = array element
      ADDD F4,F0,F2    ; add scalar in F2
      SD   0(R1),F4    ; store result
      SUBI R1,R1,#8    ; decrement pointer; 8 bytes per double
      BNEZ R1,Loop     ; branch if R1 != zero
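The latency table determines how many stall cycles separate dependent instructions in this loop. The sketch below computes issue cycles for a single-issue, in-order pipeline under stated assumptions: latency is read as the number of stall cycles between producer and consumer, pairs not in the table (such as SUBI feeding BNEZ) are assumed to need no stall, and any branch delay is ignored. The instruction classes attached to each line are labels introduced for the example.

```python
from typing import Dict, List, Tuple

# Stall cycles between a producing and a consuming instruction class.
LATENCY: Dict[Tuple[str, str], int] = {
    ("FP ALU op", "FP ALU op"): 3,
    ("FP ALU op", "Store"): 2,
    ("Load", "FP ALU op"): 1,
    ("Load", "Store"): 0,
}

# (instruction, class, destination register, source registers)
LOOP = [
    ("LD F0,0(R1)", "Load", "F0", ["R1"]),
    ("ADDD F4,F0,F2", "FP ALU op", "F4", ["F0", "F2"]),
    ("SD 0(R1),F4", "Store", None, ["R1", "F4"]),
    ("SUBI R1,R1,#8", "Integer", "R1", ["R1"]),
    ("BNEZ R1,Loop", "Branch", None, ["R1"]),
]

def issue_cycles(code) -> List[int]:
    """In-order, single issue: each instruction issues one cycle after the
    previous one, or later if a producer's latency requires stalls."""
    writer: Dict[str, Tuple[int, str]] = {}  # reg -> (issue cycle, class) of last writer
    cycles: List[int] = []
    cycle = 0
    for _text, cls, dest, srcs in code:
        earliest = cycle + 1
        for reg in srcs:
            if reg in writer:
                w_cycle, w_cls = writer[reg]
                stall = LATENCY.get((w_cls, cls), 0)  # assumption: unlisted pairs need no stall
                earliest = max(earliest, w_cycle + 1 + stall)
        cycle = earliest
        cycles.append(cycle)
        if dest is not None:
            writer[dest] = (cycle, cls)
    return cycles

print(issue_cycles(LOOP))  # [1, 3, 6, 7, 8]
```

The result shows one stall after LD and two after ADDD, which is what loop unrolling and scheduling try to fill.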

Superscalar DLX

• Can issue two instructions per cycle
  – one integer instruction (including loads, stores, and branches)
  – one FP instruction
  – To make this worthwhile, we need either multiple FP units or pipelined FP units
  – This restriction simplifies the implementation (e.g., the opcode alone can be used to detect an issue restriction)
  – There is extra difficulty with a simultaneous load/store and FP operation (contention for the register datapath)
• Need to fetch and decode 64 bits of instructions
  – assume that they are aligned on 64-bit boundaries
  – the integer instruction comes first
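Because the pairing restriction depends only on the instruction class, the issue check can look at the opcodes alone. In this sketch the opcode sets are a hypothetical subset of DLX, the pair is assumed to be one aligned 64-bit fetch with the integer slot first, and neither data dependences within the pair nor the load/store versus FP register-datapath conflict are checked.

```python
# Hypothetical opcode subsets for the two issue slots.
INTEGER_SLOT = {"LD", "SD", "SUBI", "ADDI", "BNEZ", "BEQZ"}  # integer, ld/st, branch
FP_SLOT = {"ADDD", "SUBD", "MULTD", "DIVD"}

def opcode(instr: str) -> str:
    return instr.split()[0]

def can_dual_issue(first: str, second: str) -> bool:
    """True if an aligned pair can issue together: integer-class first, FP second."""
    return opcode(first) in INTEGER_SLOT and opcode(second) in FP_SLOT

print(can_dual_issue("LD F10,-16(R1)", "ADDD F4,F0,F2"))  # True
print(can_dual_issue("ADDD F4,F0,F2", "LD F10,-16(R1)"))  # False: wrong order
print(can_dual_issue("LD F0,0(R1)", "LD F6,-8(R1)"))      # False: two integer-class
```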

Superscalar Scheduling

Loop: LD   F0,0(R1)                      ; 1
      LD   F6,-8(R1)                     ; 2
      LD   F10,-16(R1)  ADDD F4,F0,F2    ; 3
      LD   F14,-24(R1)  ADDD F8,F6,F2    ; 4
      LD   F18,-32(R1)  ADDD F12,F10,F2  ; 5
      SD   0(R1),F4     ADDD F16,F14,F2  ; 6
      SD   -8(R1),F8    ADDD F20,F18,F2  ; 7
      SD   -16(R1),F12                   ; 8
      SUBI R1,R1,#40                     ; 9
      SD   16(R1),F16                    ; 10
      BNEZ R1,Loop                       ; 11
      SD   8(R1),F20                     ; 12

Dynamic Scheduling

It  Inst             Issue
1   LD   F0,0(R1)    1
1   ADDD F4,F0,F2    1
1   SD   0(R1),F4    2
1   SUBI R1,R1,#8    3
1   BNEZ R1,Loop     4
2   LD   F0,0(R1)    5
2   ADDD F4,F0,F2    5
2   SD   0(R1),F4    6
2   SUBI R1,R1,#8    7
2   BNEZ R1,Loop     8

Ex:  2, 4, 3, 4, 5, 6, 9, 7, 8, 5
Mem: 3
WB:  3, 6, 7, 5, 8, 8, 11, 12, 9

VLIW Scheduling

• Each VLIW instruction contains
  – two memory references
  – two FP operations
  – one integer or branch operation
• In the following example
  – 7 loop iterations complete in 9 cycles
  – 23 operations are issued (2.5 ops/cycle)
  – 60% efficiency
  – extra registers are needed

VLIW Scheduling

Memory ref 1     Memory ref 2     FP op 1           FP op 2           Int op/branch
LD F0,0(R1)      LD F6,-8(R1)
LD F10,-16(R1)   LD F14,-24(R1)
LD F18,-32(R1)   LD F22,-40(R1)   ADDD F4,F0,F2     ADDD F8,F6,F2
LD F26,-48(R1)                    ADDD F12,F10,F2   ADDD F16,F14,F2
                                  ADDD F20,F18,F2   ADDD F24,F22,F2
SD 0(R1),F4      SD -8(R1),F8     ADDD F28,F26,F2
SD -16(R1),F12   SD -24(R1),F16
SD -32(R1),F20   SD -40(R1),F24                                       SUBI R1,R1,#56
SD 8(R1),F28                                                          BNEZ R1,Loop
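The slot limits listed for each VLIW instruction can be checked against this schedule, and the operation count and issue rate recomputed. In this sketch each row of the table is a list of operation strings, and classifying an operation by its opcode (LD/SD as memory, ADDD and the other FP opcodes as FP, anything else as integer/branch) is an assumption that only covers the opcodes used here.

```python
from collections import Counter
from typing import List, Tuple

SLOT_LIMITS = {"mem": 2, "fp": 2, "int": 1}

def slot(op: str) -> str:
    name = op.split()[0]
    if name in ("LD", "SD"):
        return "mem"
    if name in ("ADDD", "SUBD", "MULTD", "DIVD"):
        return "fp"
    return "int"  # integer ALU or branch

SCHEDULE = [
    ["LD F0,0(R1)", "LD F6,-8(R1)"],
    ["LD F10,-16(R1)", "LD F14,-24(R1)"],
    ["LD F18,-32(R1)", "LD F22,-40(R1)", "ADDD F4,F0,F2", "ADDD F8,F6,F2"],
    ["LD F26,-48(R1)", "ADDD F12,F10,F2", "ADDD F16,F14,F2"],
    ["ADDD F20,F18,F2", "ADDD F24,F22,F2"],
    ["SD 0(R1),F4", "SD -8(R1),F8", "ADDD F28,F26,F2"],
    ["SD -16(R1),F12", "SD -24(R1),F16"],
    ["SD -32(R1),F20", "SD -40(R1),F24", "SUBI R1,R1,#56"],
    ["SD 8(R1),F28", "BNEZ R1,Loop"],
]

def check(schedule: List[List[str]]) -> Tuple[int, int]:
    """Raise if any VLIW instruction exceeds a slot limit; return (ops, cycles)."""
    for cycle, bundle in enumerate(schedule, start=1):
        used = Counter(slot(op) for op in bundle)
        for kind, count in used.items():
            if count > SLOT_LIMITS[kind]:
                raise ValueError(f"cycle {cycle}: {count} {kind} ops")
    ops = sum(len(bundle) for bundle in schedule)
    return ops, len(schedule)

ops, cycles = check(SCHEDULE)
print(ops, cycles, round(ops / cycles, 2))  # 23 9 2.56
```

With 7 iterations completing in those 9 cycles, the loop runs at 9/7, or about 1.29 cycles per iteration.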