The Proposed System

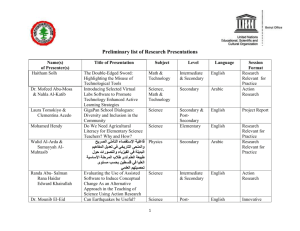


Speaker Independent Arabic Speech Recognition Using Support Vector Machine

By Eng. Shady Yehia El-Mashad
Supervised by Ass. Prof. Dr. Hala Helmy Zayed and Dr. Mohamed Ibrahim Sharawy

Agenda

- Introduction
- Characteristics of Speech Signal
- History of Speech & Previous Research
- The Proposed System
- Results and Conclusions

Introduction

Recognition

Recognition is one of the basic memory tasks. It involves identifying objects or events that have been encountered before. It is the easiest of the memory tasks: it is easier to recognize something than to come up with it on your own.

Speech Recognition System

Also known as Automatic Speech Recognition or Computer Speech Recognition.

Automatic Speech Recognition (ASR) is the process of converting captured speech signals into the corresponding sequence of words in text.

ASR systems accomplish three basic tasks:

1. Pre-processing
2. Recognition
3. Communication

How do humans do it?

• Articulation produces sound waves, which the ear conveys to the brain for processing.

How might computers do it?

Fig. labels: Acoustic Waveform, Acoustic Signal, Speech Recognition

Characteristics of Speech Signal

Types of Speech Recognition

There are two main types of speaker models:

(1) Speaker independent: speaker independent models recognize the speech patterns of a large group of people.
(2) Speaker dependent: speaker dependent models recognize speech patterns from only one person.

Speech recognition usually concerns three types of speech:

(1) Isolated Word Recognition: the simplest speech type, because it requires the user to pause between each word.
(2) Connected Word Recognition: capable of analyzing a string of words spoken together, but not at a normal speech rate.
(3) Connected Speech Recognition (Continuous Speech Recognition): allows for normal conversational speech.
Factors that affect the speech signal

- Speaker gender
- Speaker identity
- Speaker language
- Psychological conditions
- Speaking style
- Environmental conditions

Some of the difficulties related to speech recognition

- Digitization: converting the analogue signal into a digital representation
- Signal processing: separating speech from background noise
- Phonetics: variability in human speech
- Continuity: natural speech is continuous

The Three-State Representation

The three-state representation is one way to classify events in speech. The events of interest are:

• Silence (S): no speech is produced.
• Unvoiced (U): the vocal cords are not vibrating, resulting in an aperiodic or random speech waveform.
• Voiced (V): the vocal cords are vibrating periodically, resulting in a speech waveform that is quasi-periodic.

Fig. Three State Speech Representation

Applications of Speech Recognition

(1) Security
(2) Education
(3) Control
(4) Diagnosis
(5) Dictation

History of Speech & Previous Research

History of Speech

Previous Research (Arabic Speech)

| Title | Source | Technique used | Type of neural network | Scope of speech | Performance |
|---|---|---|---|---|---|
| Combined Classifier Based Arabic Speech Recognition | INFOS2008, March 27-29, 2008, Cairo-Egypt; © 2008 Faculty of Computers & Information-Cairo University | ANN | Combined classifier | 6 isolated words from the Holy Quran | 93% |
| Comparative Analysis of Arabic Vowels using Formants and an Automatic Speech Recognition System | International Journal of Signal Processing, Image Processing and Pattern Recognition, Vol. 3, No. 2; June 2010 | HMM | - | Arabic vowels (10 words) | 91.6% |
| HMM Automatic Speech Recognition System of Arabic Alphadigits | The Arabian Journal for Science and Engineering, Volume 35, Number 2C; December 2010 | HMM | - | Alpha digits, "Saudi accented" | 76% |
| Phonetic Recognition of Arabic Alphabet letters using Neural Networks | International Journal of Electric & Computer Sciences IJECS-IJENS, Vol: 11, No: 01; February 2011 | ANN | PCA | Arabic alphabet | 96% |

Previous Research (Arabic Digits)

| Title | Source | Technique used | Type of neural network | Scope of speech | Performance |
|---|---|---|---|---|---|
| Recognition of Spoken Arabic Digits Using Neural Predictive Hidden Markov Models | The International Arab Journal of Information Technology, Vol. 1; July 2004 | Neural network and hidden Markov model | MLP | Arabic digits | 88% |
| Efficient System for Speech Recognition using General Regression Neural Network | International Journal of Intelligent Systems and Technologies 1;2, © www.waset.org; Spring 2006 | ANN | General regression neural network (GRNN) | Arabic digits | 85-91% |
| Speech Recognition System of Arabic Digits based on A Telephony Arabic Corpus | Intensive Program on Computer Vision (IPCV'08), Joensuu, Finland; August 2008 | HMM | - | Arabic digits, "Saudi accented" | 93.72% |
| Radial Basis Functions With Wavelet Packets For Recognizing Arabic Speech | CSECS '10 Proceedings of the 9th WSEAS international conference on Circuits, systems, electronics, control and signal processing; 2010 | ANN | RBF | Arabic digits | 87-93% |

The Proposed System

1. Recording System

2. Data Set

Creating a speech database is an important step for researchers developing a speech recognition system.
For the English language, we do not need to create a database, because more than one has already been created to support researchers, such as sphinx1, 2, 3 & 4 and Australian English. For the Arabic language, we need to create a database of our own.

3. The Segmentation System

The segmentation process is implemented by two techniques: semi-automatic and fully automatic.

Semi-automatic technique

We set the segmentation parameters (window size, minimum amplitude, minimum frequency, maximum frequency, minimum silence, minimum speech, and minimum word) manually by trial and error. This technique achieves only 70 percent performance. That is too low to build on, because two further stages, feature extraction and recognition, depend on the segmentation output.

Fig. Segmentation example on the spoken digit sequence 0 1 6 5 3 2 0 4 0 3

Fully automatic technique

The segmentation parameters are set automatically using K-means clustering to get better performance. With this technique we achieve nearly 100 percent in the segmentation of the digits.

The K-means algorithm proceeds as follows:

• The dataset is partitioned into K clusters, and the data points are randomly assigned to the clusters.
• For each data point:
  • Calculate the distance from the data point to each cluster.
  • If the data point is closest to its own cluster, leave it where it is; otherwise move it into the closest cluster.
• Repeat the above step until a complete pass through all the data points results in no data point moving from one cluster to another.

4. Feature Extraction

When the input data to an algorithm is too large to be processed and is suspected to be highly redundant (much data, but not much information), the input data is transformed into a reduced representation set of features (also called a feature vector). Transforming the input data into the set of features is called feature extraction.

The feature vector must contain information that is useful to:

- identify and differentiate speech sounds
- identify and differentiate between speakers

Available methods include FFT, LPC, Real Cepstrum and MFCC.

Mel Frequency Cepstrum Coefficients (MFCC):

1. Take the Fourier transform of a signal.
2. Map the powers of the spectrum obtained above onto the mel scale, using triangular overlapping windows.
3. Take the logs of the powers at each of the mel frequencies.
4. Take the discrete cosine transform of the list of mel log powers, as if it were a signal.
5. The MFCCs are the amplitudes of the resulting spectrum.

5. Neural Network Classifier

There are many neural models, and each has advantages and disadvantages depending on the application. For our application we chose the Support Vector Machine (SVM).

Support Vector Machine (SVM):

The SVM is implemented using the kernel Adatron algorithm. An SVM constructs a hyperplane or set of hyperplanes in a high-dimensional space, which can be used for classification, regression or other tasks. Intuitively, a good separation is achieved by the hyperplane that has the largest distance to the nearest training data points of any class (the so-called functional margin), since in general the larger the margin, the lower the generalization error of the classifier.

Fig. H3 (green) does not separate the two classes; H1 (blue) separates the two classes, but with a small margin; H2 (red) separates the two classes with the maximum margin.
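To make the kernel Adatron idea concrete, here is a minimal two-class sketch in plain Python. It is an illustration under stated assumptions, not the implementation used in this work: the RBF kernel, the learning rate, the omitted bias term, and the toy 2-D points (standing in for MFCC feature vectors) are all chosen for the example only.

```python
import math

def rbf(a, b, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel choice)."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def train_kernel_adatron(X, y, gamma=1.0, lr=0.1, epochs=200):
    """Return one multiplier alpha_i per training sample; labels must be +1 or -1."""
    n = len(X)
    K = [[rbf(X[i], X[j], gamma) for j in range(n)] for i in range(n)]
    alpha = [0.0] * n
    for _ in range(epochs):
        for i in range(n):
            # Margin of sample i under the current multipliers (no bias term here).
            margin = y[i] * sum(alpha[j] * y[j] * K[i][j] for j in range(n))
            # Adatron update: push the margin towards 1 while keeping alpha_i >= 0.
            alpha[i] = max(0.0, alpha[i] + lr * (1.0 - margin))
    return alpha

def decision(X, y, alpha, x_new, gamma=1.0):
    """Signed score for x_new; its sign is the predicted class."""
    return sum(a * t * rbf(x, x_new, gamma) for a, t, x in zip(alpha, y, X))

# Hypothetical toy data: two well-separated groups of 2-D points.
X = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (3.0, 3.0), (3.2, 2.9), (2.9, 3.1)]
y = [+1, +1, +1, -1, -1, -1]
alpha = train_kernel_adatron(X, y)

score_a = decision(X, y, alpha, (0.1, 0.1))  # near the +1 group
score_b = decision(X, y, alpha, (3.0, 3.1))  # near the -1 group
```

A ten-digit recognizer would need one such two-class machine per digit (one-versus-rest) or a similar multi-class scheme; which scheme the original tool uses is not stated in the slides.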
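Returning to the fully automatic segmentation stage, the K-means procedure described there can be sketched in a few lines of plain Python. This is a hedged illustration only: the slides do not say which quantities are clustered, so the frame-energy values below are hypothetical, and the function name `kmeans` and its parameters are chosen for the example. The sketch assumes there are at least as many points as clusters.

```python
import random

def kmeans(points, k, seed=0, max_passes=100):
    """Cluster points (tuples of floats) into k groups; returns (labels, centroids)."""
    rng = random.Random(seed)
    # Random initial partition; shuffled round-robin keeps every cluster non-empty.
    order = list(range(len(points)))
    rng.shuffle(order)
    labels = [0] * len(points)
    for rank, idx in enumerate(order):
        labels[idx] = rank % k
    centroids = [None] * k
    for _ in range(max_passes):
        # Recompute each centroid as the mean of its current members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an emptied cluster keeps its previous centroid
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
        moved = False
        for i, p in enumerate(points):
            # Squared Euclidean distance from the point to every centroid.
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            nearest = dists.index(min(dists))
            if nearest != labels[i]:
                labels[i] = nearest
                moved = True
        if not moved:  # a full pass with no point changing cluster: stop
            break
    return labels, centroids

# Hypothetical per-frame energies: low values for silence, high values for speech.
energies = [(0.01,), (0.02,), (0.015,), (0.9,), (1.1,), (0.95,), (0.02,), (1.0,)]
labels, centroids = kmeans(energies, k=2)
```

With two clusters, the midpoint between the two centroids could serve as an automatically chosen amplitude threshold in place of one found by trial and error; whether the original system derives its parameters this way is an assumption.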
Results and Conclusions

Training and Testing

Support Vector Machine (SVM)

We use the SVM network with the following settings: no hidden layers, an output layer of 10 neurons, and a maximum of 1000 training epochs.

We have 10000 samples, divided as follows:

- Training: 70%
- Cross validation: 15%
- Testing: 15%

Results

Cross Validation Confusion Matrix of the SVM (values in percent)

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 100.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 1 | 0.00 | 96.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 4.00 | 0.00 |
| 2 | 0.00 | 0.00 | 90.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 10.00 | 0.00 |
| 3 | 0.00 | 0.00 | 0.00 | 100.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 4 | 4.00 | 0.00 | 0.00 | 0.00 | 94.00 | 0.00 | 0.00 | 0.00 | 2.00 | 0.00 |
| 5 | 7.00 | 0.00 | 0.00 | 0.00 | 0.00 | 89.00 | 0.00 | 0.00 | 0.00 | 4.00 |
| 6 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 92.00 | 0.00 | 0.00 | 8.00 |
| 7 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.00 | 4.00 | 90.00 | 0.00 | 4.00 |
| 8 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 | 0.00 |
| 9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 6.00 | 0.00 | 0.00 | 94.00 |

The Testing Confusion Matrix of the SVM (sample counts)

| Output / Desired | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 255 | 5 | 0 | 4 | 0 | 0 | 6 | 0 | 0 | 3 |
| 1 | 0 | 313 | 0 | 0 | 1 | 0 | 0 | 0 | 6 | 0 |
| 2 | 2 | 0 | 122 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 2 | 4 | 0 | 105 | 0 | 0 | 1 | 0 | 2 | 0 |
| 4 | 0 | 0 | 0 | 0 | 135 | 0 | 0 | 6 | 0 | 1 |
| 5 | 0 | 0 | 0 | 0 | 0 | 90 | 3 | 0 | 1 | 1 |
| 6 | 4 | 0 | 0 | 1 | 0 | 0 | 83 | 0 | 0 | 0 |
| 7 | 0 | 6 | 2 | 2 | 1 | 4 | 0 | 119 | 2 | 2 |
| 8 | 0 | 5 | 0 | 5 | 0 | 0 | 6 | 0 | 95 | 0 |
| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 95 |

Performance = (255 + 313 + 122 + 105 + 135 + 90 + 83 + 119 + 95 + 95) / 1500 = 1412 / 1500 = 94.13%

Conclusion

A spoken Arabic digits recognizer is designed to investigate the process of automatic digit recognition. The segmentation process is implemented by two techniques: semi-automatic and fully automatic. The features are extracted using the MFCC technique. The system is based on neural networks, uses the colloquial Egyptian dialect recorded in a noisy environment, and is carried out with the NeuroSolutions tools.
The performance of the system is nearly 94% when the SVM is used.

Thank you!