Operant versus classical conditioning: Law of Effect

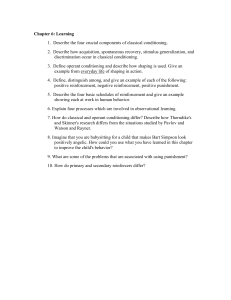

Operant Conditioning: The Law of Effect

• Thorndike (1911): Animal Intelligence
  – Experimented with cats in a puzzle box
    • Put cats in the box
    • Cats had to figure out how to pull/push/move a lever to get out; once out, they got a reward
    • The cats got faster and faster with each trial
• Law of Effect emerged from this research:
  – When a response is followed by a satisfying state of affairs, that response will increase in frequency.

E.L. Thorndike, 1874-1949

Skinner’s version of the Law of Effect

• Had two problems with Thorndike’s law:
  – Defining “satisfying state of affairs”
  – Defining “increase” in behavior
• Rewrote the law to be more specific:
  – Used the words reinforcer and punisher
  – Idea of a reinforcer is the strengthening of the relation between a response (R) and a reinforcing stimulus (Sr)
• Now defined reinforcement and punishment:
  – A reinforcer is any stimulus that increases the probability of a response when delivered contingently
  – A punisher is any stimulus that decreases the probability of a response when delivered contingently
  – Also noted that reinforcers and punishers can be delivered in TWO ways:
    • Add something: positive
    • Take away something: negative

Burrhus Frederic Skinner

Skinner box: a pigeon pecks or a rat bar-presses to receive reinforcers

Reinforcers vs. Punishers; Positive vs. Negative

• Reinforcer = rate of response INCREASES
• Punisher = rate of response DECREASES
• Positive: something is ADDED to the environment
• Negative: something is TAKEN AWAY from the environment
• Can make a 2x2 contingency table:

| | Reinforcement | Punishment |
|---|---|---|
| Positive (add stimulus) | Positive reinforcement: make bed → 10 cents | Positive punishment: hit sister → spanked |
| Negative (remove stimulus) | Negative reinforcement: make bed → Mom stops nagging | Negative punishment: hit sister → lose TV |

Parameters or Characteristics of Operant Behavior

• Strength of the response:
  – With each pairing of the R and Sr/P, the response contingency is strengthened
  – The learning curve
    • Is monotonically ascending
    • Has an asymptote
    • There is a maximum amount of responding the organism can make
• Extinction of the response:
  – Remove the R → Sr or R → P contingency
  – Now the R → 0
  – Different characteristics than with classical conditioning:
    • Animal increases behavior immediately after extinction begins: TRANSIENT INCREASE
    • Animal shows extinction-induced aggression!
    • Why?
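The acquisition and extinction parameters above can be illustrated with a small simulation. This is a minimal sketch and not a model taken from the slides: it assumes a simple linear-operator update, in which each trial moves response strength a fixed fraction of the way toward its target, and the names `simulate_response_strength`, `rate`, and `asymptote` are hypothetical. It reproduces the monotonically ascending curve with an asymptote and the decline toward zero in extinction, but it does not capture the transient increase in responding at the start of extinction.

```python
def simulate_response_strength(n_acquisition, n_extinction, rate=0.2, asymptote=1.0):
    """Return response strength after each trial.

    Assumption (not from the slides): a linear-operator learning rule.
    On reinforced trials strength moves toward `asymptote`; once the
    contingency is removed it moves toward zero.
    """
    strength = 0.0
    history = []
    # Acquisition: the R -> Sr contingency is in place.
    for _ in range(n_acquisition):
        strength += rate * (asymptote - strength)
        history.append(strength)
    # Extinction: the contingency is removed, so the target is zero.
    for _ in range(n_extinction):
        strength += rate * (0.0 - strength)
        history.append(strength)
    return history


curve = simulate_response_strength(n_acquisition=20, n_extinction=20)
acquisition, extinction = curve[:20], curve[20:]

print("end of acquisition:", round(acquisition[-1], 3))
print("end of extinction: ", round(extinction[-1], 3))
```

Plotting `curve` against trial number would show the rise toward the asymptote followed by the fall toward zero; a larger `rate` makes both phases steeper.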
More parameters:

• Generalization can occur:
  – Operant response may occur in situations similar to the one in which it was originally trained
  – Can learn to behave in many similar settings
• Discrimination can occur:
  – Operant response can be trained to very specific stimuli
  – Only exhibit the response under specific situations
• Can use a cue to teach the animal:
  – S+ or SD: contingency in place
  – S- or SΔ: contingency not in place
  – Thus: SD: R → Sr

Several ways to use Operant Conditioning

• Discrete trial procedures:
  – Has a set beginning and end: a trial
  – The experimenter controls the rate of behavior and reinforcement
• Free operant procedures:
  – Session has a start and end
  – Organism can make as many responses as it “wants” in a session
  – Organism controls how many reinforcers are earned (according to the programmed schedule)
  – Organism controls rate of responding: R’s/min

Must “train” or “teach” an operant response

• In a lab setting: magazine training
  – Train the animal to come to the feeder
  – Really is classical conditioning
  – Click of the feeder predicts food
  – No response (other than approach) required
• Shaping
  – Using successive approximations of the final response
  – Break up a response into its components or pieces
  – E.g., tying a shoe: how many steps?
  – Train so that the “steps” are put together until you have the fluid final response
• For example: Clicker Training!

Why Shaping?

• Is a fast and efficient way to develop new behavior
• Maintains the learner’s excitement and willingness to learn
• Produces behaviors that are accurately remembered, unlike coerced or lured responses
• Allows one to train individuals and behaviors not easily trained in more traditional ways
• Creates empathy in animals and allows one to read and understand the animal’s emotions
• Changes the organism being shaped from a passive recipient of information and guidance to an active learner and member of the learning team
• Is fun for both the animal and the trainer!
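Shaping by successive approximations, as described above, can be sketched in a few lines. This is an illustration under stated assumptions, not a procedure from the slides: responses are reduced to a single number (say, how high a paw is lifted), and the rule for moving to the next approximation (three reinforced responses in a row) is a hypothetical choice, as are the names `shape`, `step`, and `streak_needed`.

```python
def shape(responses, start_criterion, goal, step, streak_needed=3):
    """Reinforce responses that meet the current criterion and raise it gradually.

    Assumptions (hypothetical, for illustration only):
    - each response is a number, larger meaning closer to the final response
    - the criterion rises by `step` after `streak_needed` reinforced
      responses in a row, and never exceeds `goal`
    """
    criterion = start_criterion
    streak = 0
    log = []  # (response, criterion in force, reinforced?)
    for response in responses:
        reinforced = response >= criterion
        log.append((response, criterion, reinforced))
        if reinforced:
            streak += 1
            if streak >= streak_needed and criterion < goal:
                criterion = min(goal, criterion + step)
                streak = 0
        else:
            # Responses below the criterion are simply not reinforced.
            streak = 0
    return criterion, log


observed = [1, 1, 1, 2, 2, 2, 1, 3, 3, 3]
final_criterion, log = shape(observed, start_criterion=1, goal=3, step=1)

for response, criterion, reinforced in log:
    print(f"response={response} criterion={criterion} reinforced={reinforced}")
```

In this run the seventh response (1) falls below the criterion in force at that point (3), so it goes unreinforced, while every other response earns reinforcement.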
How to Shape Behavior

• Start with capturing a behavior or component of the behavior you want to shape
  – Allows you to selectively reinforce some of the repetitions, but not others
  – Can select bigger, better responses
  – Ignore weaker responses: these go unclicked
  – Leads to a high rate of reinforcement
• A high rate of reinforcement is CRITICAL for shaping!
• Raising the criterion:
  – Raise the criterion or rule for getting a C/T (click/treat)
  – Build the response in a series of small steps
  – Think of it as going up a staircase towards your goal.

The Shaping Staircase

Each step on the staircase is an increment or step towards the final behavior.

The Shaping Process

• Each step in the shaping process may have several requirements:
  – Every requirement within a step is called a criterion (pl. = criteria)
  – Criteria can be very general or very detailed
• For the high five:
  – The lifting of the paw is a more general criterion.
  – The touching of the paw to your hand is more specific.

Example of Shaping: Clicker Training

• Popular term for a training/teaching method based on operant conditioning
  – Can be used with any living organism
    • Goldfish
    • Dogs
    • Humans!
• Very simple process:
  – S+ → R → Src → Sr
  – Cue → response → marker → reinforcement

Clicker training or Tag Teach

• System of training/teaching that uses positive reinforcement in combination with an event marker
• The event marker (click) “marks” the response as correct

Why should you use a clicker?

• Very powerful teaching tool
• According to Karen Pryor, clicker training
  – Accelerates learning
  – Strengthens the human-animal bond
  – Produces long-term recall
  – Produces creativity and initiative
  – Forgives your mistakes
  – Generates enthusiastic learners

Examples of learning vs. environmental manipulation

• Want to keep the dog out of the kitchen:
  – Put up a gate: the dog can’t get in, so the behavior decreases
  – Does not alter the contingency of going into the kitchen
  – The dog has learned nothing
• Want you to sit in a chair:
  – I poke you behind the knees and you fall into the chair
  – You increased “chair sitting” but didn’t learn chair sitting!
  – Your behavior is not predictable when presented with the chair
  – Or worse yet, you are now afraid of the chair and avoid it!

Which consequence should we use?

• Punish the behavior?
  – Decreases the probability of the behavior
  – Can result in unstable responding, particularly with negative reinforcement
  – Can result in learned helplessness, avoidance and aggression!
  – There are often ethical limitations
• Ignore the behavior?
  – Decreases the probability of the behavior
  – Process of extinction
  – Two problems:
    • Extinction burst
    • Extinction-induced aggression
• Positively reinforce a behavior:
  – Increases the probability of the behavior
  – Can reinforce the opposite of the response you are trying to decrease!
  – Creates a “fun” learning environment
  – Data suggest that organisms trained with positive reinforcement WANT to work!
• But wait: won’t positive reinforcement make greedy organisms?
  – Initially, we use continuous reinforcement
  – Gradually we thin out the rate of reinforcement using partial schedules of reinforcement
  – More and more responding or chains of behavior are required to get a reward
    • When you were in kindergarten, you needed lots of reinforcers every day
    • Now in college you can work all semester for that final reinforcer of an “A”.

What skills are necessary to become a good shaper/trainer?
• Must be an excellent observer of behavior
  – Must be able to identify the response or component of the response
• Must be precise with your clicker
  – Must be quick and “catch” and “mark” that response
  – May introduce a “keep going” signal, too!
• Must use the clicker as a conditioned reinforcer:
  – The clicker derives value from being tightly paired with the primary reinforcer
  – Use as a bridge or a “yes, keep going” signal
  – We will call the clicker an event marker
• Must be generous with reinforcement
  – When learning a new response, the animal needs lots of feedback
  – Reinforcement variety improves the learning process
  – Reinforcers must be of value to the learner (what THEY like, not what YOU like).
  – Barney stickers or beer?
• Must be consistent!
  – The animal is learning the rule, so the rule must be consistent
  – Only when the response is solid will you move to partial reinforcement
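The move from continuous to partial reinforcement mentioned above can be sketched as a sequence of phases that each demand more responses per reinforcer. This is only an illustration: the slides do not say which partial schedule to use, so the fixed-ratio schedules here, the phase lengths, and the names `run_thinning` and `phases` are all assumptions.

```python
def run_thinning(phases):
    """Return one True/False per response: was it followed by a reinforcer?

    `phases` is a list of (n_responses, ratio) pairs. A ratio of 1 is
    continuous reinforcement; larger ratios are fixed-ratio partial
    schedules (an assumed choice; the slides only say "partial schedules").
    """
    delivered = []
    for n_responses, ratio in phases:
        count = 0  # responses since the last reinforcer in this phase
        for _ in range(n_responses):
            count += 1
            if count == ratio:
                delivered.append(True)
                count = 0
            else:
                delivered.append(False)
    return delivered


# Hypothetical plan: reinforce every response, then every 2nd, then every 5th.
phases = [(10, 1), (10, 2), (10, 5)]
delivered = run_thinning(phases)

print("responses:", len(delivered))
print("reinforcers:", sum(delivered))
```

Each later phase asks for the same number of responses but pays out fewer reinforcers, which is the sense in which the schedule is thinned.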