Discovery Informatics

Session 1: Plenary

Themes in Discovery Informatics

Science Has a Never-ending Thirst

for Technology

Computing as a substrate for science in innovative ways

Ongoing investments in cyberinfrastructure have a

tremendous impact in scientific discoveries

Shared high end instruments

High performance computing

Distributed services

Data management

Virtual organizations

These investments are extremely valuable for science, but do

not address many aspects of science

Further Science Needs

Emphasis has been on data and computation, not so much

on models

Support for model formulation and testing is missing

Models should be related to data (observed or simulated)

Emphasize insight and understanding

From correlations to causality and explanation

Developing tools for the full discovery process and using tools

for the discovery process

Tools that help you do new things vs tools that help you do

things better

Further Science Needs

Many aspects of the scientific process could be improved

Some are not addressed by CI (e.g., literature search, reasoning about models)

Others could benefit from new approaches (e.g., capturing metadata)

Effort is significant

Many scientists do not have the resources or inclination to benefit from

CI

How do you create a culture in which science stays timely in its use of

CI?

Discipline-specific services make it harder to cross bounds

Methods and process for being able to work with scientists

Further Science Needs

Integration is important and far from being a solved

problem

Integration across science domains

Integration within a domain

Connecting tools and technologies to the practice of science

Most science is done locally; we need to respond accordingly (e.g.,

how do you support your student, get tenure)

How to reduce the impedance mismatch between cognition and

practice

The “long tail” of science – most of science is not big science nor

big data

CI can transform all elements of the discovery timeline

Further Science Needs

User-centered design

Usability

Functionality

What are the metrics for success?

Adoption by others?

Characterization of domains and facets that impact discovery

informatics is still not understood

You can’t get this by asking the scientists

What are the equivalence classes of domains as they pertain to CI?

Need to treat domain scientists, social scientists, and

computer scientists on equal footing

Emerging Movement?

A movement for scientist-centered system design?

A movement to focus on the “human processor bottleneck”?

Human cognitive capacity is flat (or at best growing slightly, linearly),

while other dimensions of computing have grown exponentially

A movement for non-centralized science? (“long tail” of science (on

multiple dimensions) aka “dark matter” of science; small science vs

big; small data vs large)

A movement to improve the use of mundane technology in science

practice?

A movement to lower the learning curve in infrastructure?

There will be some curve, but it is smaller and the same no matter what

you need to access

Web infrastructure is a good example

What is Discovery Informatics

We should come back to a definition later in the meeting

Some possible defining characteristics:

Small data science still has a major role to play

Complements big data science

Much of science is largely local

Complements science at larger scales

Big data science can be seen as a movement to more centralized science

The “long tail” of scientists are still largely underserved

The “long tail” of scientific questions still has rudimentary technology

Spreadsheets are still in widespread use

Many valuable datasets are never integrated to address aggregate questions

Discovery is a social endeavor

Socio-technical systems to support ad-hoc collaborations

Enable routine unexpected or indirect interactions among scientists

e.g., unanticipated data sharing

DI: Automating and enhancing scientific processes at all levels?

DI: Empowering individual researchers through local infrastructure?

Do Scientific Discoveries Result from

Special Kinds of Scientific Activities?

Perhaps, but we do not need to address this question if we

can agree to consider discoveries in a continuum

The more the scientific processes are improved, the more the

discovery processes are improved

The more we empower scientists to cope with more complex

models (larger scope, broader coverage), the more the

discovery processes are improved

The more we open access of potential contributors to scientific

processes, the more the discovery processes are improved

Discovery Informatics: Why Now

Discovery informatics as “multiplicative science”: Investments in this

area will have multiplicative gains as they will impact all areas of

science and engineering

Multiplicative in the dimension of the “human bottleneck”

Could address current redundancy in {bio|geo|eco|…}informatics

Discovery informatics will empower the public: Society is ready to

participate in scientific activities and discovery tools can capture

scientific practices

“Personal data” will give rise to “personal science”

I study my genes, my medical condition, my backyard’s ecosystem

Volunteer donations of funds and time are now commonplace

Enable donations of more intellectual contributions and insights

Discovery informatics will enable lifelong learning and training of future

workforce in all areas of science

Focuses on usable tools that encapsulate, automate, and disseminate

important aspects of state-of-the-art scientific practice

Discovery Informatics: Why Now

Scope to include engineering, medicine

Science is too big to fit in your head all at once

Need computation to help understand it

The current process of conducting science in all areas is utterly broken, with processes often reinvented year after year

Scientists are more willing to adopt and collaborate

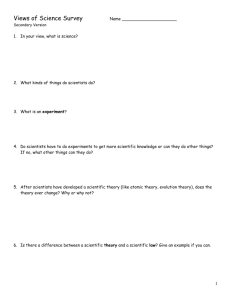

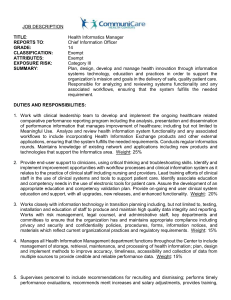

Three Major Themes in Discovery Informatics

1) Improving the discovery process

2) Learning models from science data

3) Social computing for science

IN THIS SESSION:

For each theme:

1. Why important to discuss

2. State of the art (where is it published)

3. Topics

Focus is on coming up as a group with topics that each breakout should elaborate

Bring up a topic not yet listed but do not dwell on it

THEME 1: Improving the Experimentation

and Discovery Process

Unprecedented complexity of scientific

enterprise

Is science stymied by the human bottleneck?

What aspects of the process could be improved, e.g.:

Managing publications through natural language technologies

Capturing current knowledge through ontologies and models

Multi-step data analysis through computational workflows

Process reproducibility and reuse through provenance

Data collection and analysis through integrated robotics

Data sharing through Semantic Web

Cross-disciplinary research through collaborative interfaces

Result understanding through visualization

THEME 2: Learning Models from

Science Data

Complexity of models and complexity of data analysis

Data analysis activities placed in a larger context

Comprehensive treatment of data

to models to hypotheses cycle

Using models to drive data collection activities

Preparing data in service of model formation and

hypothesis testing

Selecting relevant features for model development

Highlighting interesting behaviors and unusual

results

THEME 3: Social Computing for

Science

Multiplicative gains through broadening participation

Some challenges require it, others can significantly

benefit

Managing human contributions

What scientific tasks could be handled

How can tasks be organized to facilitate contributions

Can reusable infrastructure be developed

Can junior researchers, K-12 students, and the public

take more active roles in scientific discoveries

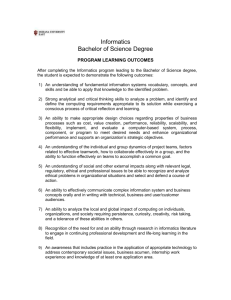

Three Major Themes

1) Improving the discovery process

2) Learning science models from data

3) Social computing for discovery

Improving the Discovery Process:

Why

Characterizing what the discovery process is

Current processes are in many ways inefficient / less effective

Manual data analysis

Reproducibility is too costly

Literature is vast and unmanageable

…

Improving the Discovery Process:

What is the State of the Art

Workflow systems

Automate many aspects of data analysis, make it

reproducible/reusable

Emerging provenance standards (OPM, W3C’s PROV)

Augmenting scientific publications with workflows

Creating knowledge bases from publications

Ontological annotations of articles including claims and evidence

Text mining to extract assertions to create knowledge bases

Reasoning with knowledge bases to suggest or check hypotheses

Visualization

3 separate fields: scientific visualization, information visualization, and

visual analytics

“design studies”

Combining visualizations with other data
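
The workflow and provenance items on this slide can be made concrete with a small sketch. The Python below is only an illustration under stated assumptions: `ProvenanceLog`, `fingerprint`, `run_workflow`, and the two analysis steps are hypothetical names, and the record fields loosely echo the "used" and "was generated by" relations of W3C PROV without being a conforming PROV serialization.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Callable


def fingerprint(value: Any) -> str:
    """Return a short, stable content hash used as an identifier for a data item."""
    payload = json.dumps(value, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ProvenanceLog:
    """Collects one record per executed step (hypothetical layout, not PROV syntax)."""
    records: list = field(default_factory=list)

    def run(self, name: str, step: Callable[[Any], Any], data: Any) -> Any:
        result = step(data)
        self.records.append({
            "activity": name,                  # which step ran
            "used": fingerprint(data),         # what it consumed
            "generated": fingerprint(result),  # what it produced
        })
        return result


def run_workflow(steps, data, log):
    """Run the named steps in order, feeding each output into the next step."""
    for name, step in steps:
        data = log.run(name, step, data)
    return data


# Two illustrative analysis steps (assumptions, not from the slides).
def drop_missing(values):
    return [v for v in values if v is not None]


def mean(values):
    return sum(values) / len(values)


log = ProvenanceLog()
steps = [("drop_missing", drop_missing), ("mean", mean)]
result = run_workflow(steps, [1.0, None, 3.0], log)
```

Because each record stores the hash of what a step consumed and produced, the chain from final result back to raw input can be followed by matching a record's `used` value to the previous record's `generated` value. That is the kind of trace that makes rerunning or reusing an analysis cheaper.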

Improving the Discovery Process:

What is the State of the Art

What is the state of the art of what’s currently used in

science?

Opening data and models

Visualization not just of data, but also models and

relationships between models

Improving the Discovery Process:

Discussion Topics (I)

Automation of discovery processes

What is possible and what is unlikely in the near and longer term

Representations are key to discovery, but it is hard to engineer a change of representation in a system

Challenge is to find the right division of labor between human

and computer

User-centered design

Automation should come with suitable explanations

Of processes, models, data, etc.

Designing tools for the individual scientist (the “long tail”)

Improving the Discovery Process:

Discussion Topics (II)

Workflows

Understand barriers to widespread practice

Have they reached the tipping point of usability vs pain?

Workflow reuse across labs, across workflow systems

Are workflows useful?

What can we learn from workflows in non-science domains?

Text extraction / generation

Annotating publications

Improving the Discovery Process:

Discussion Topics (III)

Visualization

Visualizations could help maximize the bandwidth of what humans can assimilate

Do scientists know what they want?

Scientists seem to prefer interaction, i.e., control over the visualization,

rather than automatic visualizations

Active co-creation of visualization helps scientists

Domain specification / requirements extraction

Centrality of knowledge representations (means to an end)

Data

Processes

Reuse, open access, dynamic

Enabling integrated representation, reasoning, and learning

Risk of not being pertinent to some areas of science

From Models to Data and Back Again:

Why

Need to better integrate data with models and sense-making

Semantic integration to enable reasoning

Linking claims to experimental designs to data

Interpreting data is a cognitive social process, aided by

visualizations that integrate context into the data

How do we integrate prior knowledge and the formalisms scientists use, and how do we update that knowledge and those formalisms?

Generating useful data is a bottleneck; generating lots of models is easy, and we should leverage this

Need to help scientists to evaluate models

Learning “Models” from Data:

What is the State of the Art

Cognitive science studies of discovery and insight

The role of effective problem representations

The challenges of programming representation change

Computational discovery

Model-based reasoning

Causality

Temporal dependency analysis

Design of quasi-experiments

Spatial and temporal data

Variability, multi-scale,

Sensor noise

Quality control

Sensor noise vs actual phenomena

Learning Models from Data:

Discussion Topics (I)

Better integrating models/knowledge and data

Model-guided data collection

Collect data based on goals

Observations guiding the revision of models

Explaining findings and revising models and knowledge

Visualizations that combine models and data

Deriving stuff from data

Enable causal connections across diverse data sources

Causal relations co-existing with gaps and conflicts stand in the way of more unified databases

Models / patterns / laws?

Importance of uncertainty, quality, utility

From models to use

Connecting computer simulations and model building from data

HPC, simulation, and modeling from data should be connected

Learning Models from Data:

Discussion Topics (II)

Learning models that are communicable

Potential for unifying models and associated tools for doing

so

ML has a lot of theoretical results that have not yet been

made useful more broadly

Need to be more usable/accessible

Particularly in social sciences

Not always easy to apply to big data

Learning Models from Data:

Discussion Topics (III)

Incentivizing digital resource sharing to enable discoveries

Privacy and security: data being misused or not appropriately

credited

The social sciences are a particularly promising area for discovery informatics; what would facilitate this?

Digital resource curation as a social issue

Verification (of models, conclusions, data, explanations, etc.)

Social Computing:

Why

Many valuable datasets lack appropriate metadata

Labels, data characteristics and properties, etc.

Human computation has beaten best of breed algorithms

Social agreement accelerates data sharing

Public interest in participating in scientific activity

Community assessment of models, knowledge, etc.

Concretizing elements that were mushy in the past

Mixed-initiative processes: humans exceed machines in many areas, so we need to incorporate them for the things that they do better

Harness knowledge about what makes online communities (including,

e.g., Wikipedia) work well or poorly

Role of incentives, motivation, in bringing people together to do science

Social Computing:

What is the State of the Art

Very different manifestations:

Collecting data (e.g., pictures of birds)

Labeling data (e.g., Galaxy Zoo)

Computations (e.g., Foldit)

Elaborate human processes (e.g., theorem proving)

Bringing people and computing together in complementary ways
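
For the "labeling data" manifestation, one simple way to combine many volunteer answers is a majority vote with an agreement threshold. The sketch below is an assumption-laden illustration, not a description of how Galaxy Zoo or any other project named on this slide actually aggregates labels. The function name `aggregate_labels`, the `min_agreement` parameter, and the sample votes are all hypothetical.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.6):
    """Combine volunteer labels per item by majority vote.

    votes maps an item id to the list of labels volunteers gave it.
    Returns a dict mapping each item id to (label, agreement), where label
    is None when the top label falls below the agreement threshold.
    """
    consensus = {}
    for item_id, labels in votes.items():
        if not labels:
            consensus[item_id] = (None, 0.0)
            continue
        label, count = Counter(labels).most_common(1)[0]
        agreement = count / len(labels)
        # Items without enough agreement are left unlabeled for expert review.
        consensus[item_id] = (label if agreement >= min_agreement else None, agreement)
    return consensus


# Hypothetical votes from four and two volunteers respectively.
votes = {
    "img-001": ["spiral", "spiral", "elliptical", "spiral"],
    "img-002": ["spiral", "elliptical"],
}
consensus = aggregate_labels(votes)
```

Leaving low-agreement items unlabeled, rather than forcing a label, is one way to route the hard cases to senior researchers or to automation. It is a small instance of bringing people and computing together in complementary ways.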

Social Computing:

Discussion Topics (I)

Several names: is there a distinction?

Crowdsourcing, citizen science,

Designing the system

Roles: peers, senior researchers, automation

Incentives

Training

Platforms and infrastructure (using clouds right, social web

platforms)

Incorporating semantic information and metadata

Expertise finding

New modalities for peer review, scholarly communication

Social Computing:

Discussion Topics (II)

Defining workflows with more elaborate processes that mix

human processing with computer processing

Humans to do more complex tasks

Can facilitate reproducibility

Enticing people to participate while ensuring quality

Some existing systems should be revisited to be designed as

social systems

Workflow libraries and reuse tools

Data curation tools

Open software

Social Computing:

Discussion Topics (III)

Systems that enable collaborations that are not deliberate but

ad-hoc

Opportunistic partnerships

Unexpected uses of data

Systems that support a marketplace of ideas and track credit

New ideas/discoveries are often seen as a threat to the status quo; how do we facilitate their integration?

Empower people to share ideas on a problem while being credited

Incentive structures for new models of scholarly

communication, such as blogs