
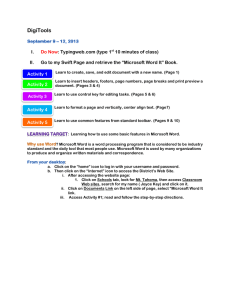


First assignment: due today
• Go to http://malt/mw
• Create an account for yourself
  – Use your Andrew ID
• Go to your user page and add:
  – Your real name and a link to your home page
  – Preferably a picture
  – Who you are and what you hope to get out of the class (let me know if you’re just auditing)
  – Any special skills you have, research interests that you have, IE-related projects you have been or might be working on, etc.

Outline
• Super-quick review of previous talk
• More on NER by token-tagging
  – Limitations of HMMs
  – MEMMs for sequential classification
• Review of relation extraction techniques
  – Decomposition one: NER + segmentation + classifying segments and entities
  – Decomposition two: NER + segmentation + classifying pairs of entities
• Some case studies
  – ACE
  – Webmaster

Quick review of previous talk

What is “Information Extraction”
As a family of techniques: Information Extraction = segmentation + classification + association + clustering

Example text:
October 14, 2002, 4:00 a.m. PT
For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
"We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
Richard Stallman, founder of the Free Software Foundation, countered saying…

Extracted segments:
* Microsoft Corporation
CEO
Bill Gates
* Microsoft
Gates
* Microsoft
Bill Veghte
* Microsoft
VP
Richard Stallman
founder
Free Software Foundation

… via token tagging

Token tagging and NER

NER by tagging tokens
Given a sentence: Yesterday Pedro Domingos flew to New York.
1) Break the sentence into tokens, and classify each token with a label indicating what sort of entity it’s part of (person name, location name, or background):
   Yesterday/background Pedro/person Domingos/person flew/background to/background New/location York/location
2) Identify names based on the entity labels:
   Person name: Pedro Domingos
   Location name: New York
3) To learn an NER system, use YFCL (your favorite classifier learner).
HMM for Segmentation of Addresses
• Simplest HMM architecture: one state per entity type
• Example emission probabilities from the figure:
  – Hall 0.15, Wean 0.03, N-S 0.02, …
  – CA 0.15, NY 0.11, PA 0.08, …
[Pilfered from Sunita Sarawagi, IIT/Bombay]

HMMs for Information Extraction
Example: … 00 : pm Place : Wean Hall Rm 5409 Speaker : Sebastian Thrun …
1. The HMM consists of two probability tables:
   • Pr(currentState=s | previousState=t) for s = background, location, speaker, …
   • Pr(currentWord=w | currentState=s) for s = background, location, …
2. Estimate these tables with a (smoothed) CPT:
   • Prob(location | location) = #(loc->loc) / #(loc->*) transitions
3. Given a new sentence, find the most likely sequence of hidden states using the Viterbi method:
   MaxProb(curr=s | position=k) = max over states t of MaxProb(curr=t | position=k-1) * Prob(word=w_{k-1} | t) * Prob(curr=s | prev=t)

“Naïve Bayes” Sliding Window vs HMMs
Domain: CMU UseNet Seminar Announcements

Example announcement:
GRAND CHALLENGES FOR MACHINE LEARNING
Jaime Carbonell
School of Computer Science
Carnegie Mellon University
3:30 pm
7500 Wean Hall
Machine learning has evolved from obscurity in the 1970s into a vibrant and popular discipline in artificial intelligence during the 1980s and 1990s. As a result of its success and growth, machine learning is evolving into a collection of related disciplines: inductive concept acquisition, analytic learning in problem solving (e.g. analogy, explanation-based learning), learning theory (e.g. PAC learning), genetic algorithms, connectionist learning, hybrid systems, and so on.

Field         F1 (sliding window)   F1 (HMM)
Speaker:      30%                   77%
Location:     61%                   79%
Start Time:   98%                   98%

Design decisions:
• What are the output symbols (states)?
• What are the input symbols?
  – Cohen => “Cohen”, “cohen”, “Xxxxx”, “Xx”, … ?
  – 8217 => “8217”, “9999”, “9+”, “number”, … ?

Symbol abstraction hierarchy (from the figure):
All
  – Numbers: 3-digits (000 .. 999), 5-digits (00000 .. 99999), Others (0..99, 0000..9999, 000000..)
  – Words: Chars (A .. z), Multi-letter (aa ..)
  – Delimiters: . , / - + ? #
Sarawagi et al: choose best abstraction level using holdout set
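The Viterbi step for an HMM tagger can be sketched as below. This is a minimal illustration under stated assumptions, not the lecture's implementation: it uses the common convention that the word at position k is emitted by the state at position k, works in log space, and uses toy tables. Only the emission values for "Hall" (0.15) and "Wean" (0.03) come from the slide; every other number, the `unk` fallback probability, and the function name `viterbi` are invented for the example.

```python
import math

def viterbi(words, states, start_p, trans_p, emit_p, unk=1e-6):
    """Return the most likely state sequence for `words` (log-space Viterbi)."""
    # best[k][s]: log-probability of the best path that ends in state s at position k
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s].get(words[0], unk))
             for s in states}]
    back = [{}]  # back[k][s]: predecessor of s on that best path
    for k in range(1, len(words)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((t, best[k - 1][t] + math.log(trans_p[t][s])) for t in states),
                key=lambda pair: pair[1])
            best[k][s] = score + math.log(emit_p[s].get(words[k], unk))
            back[k][s] = prev
    # Follow back-pointers from the best final state
    path = [max(states, key=lambda s: best[-1][s])]
    for k in range(len(words) - 1, 0, -1):
        path.append(back[k][path[-1]])
    return path[::-1]

states = ["background", "location"]
start_p = {"background": 0.9, "location": 0.1}            # made-up values
trans_p = {"background": {"background": 0.7, "location": 0.3},
           "location": {"background": 0.2, "location": 0.8}}  # made-up values
emit_p = {"background": {"Place": 0.3, ":": 0.3},          # made-up values
          "location": {"Hall": 0.15, "Wean": 0.03}}        # values from the slide
tags = viterbi(["Place", ":", "Wean", "Hall"], states, start_p, trans_p, emit_p)
print(tags)
```

In practice the two tables would be estimated from labeled data with smoothing, as in step 2 of the slide, rather than written by hand.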
What is a symbol?
Ideally we would like to use many, arbitrary, overlapping features of words:
• identity of word
• ends in “-ski”
• is capitalized
• is part of a noun phrase
• is in a list of city names
• is under node X in WordNet
• is in bold font
• is indented
• is in hyperlink anchor
• …
[Figure: a chain of states … S_{t-1}, S_t, S_{t+1} … over observations O_{t-1}, O_t, O_{t+1}; the observation O_t carries features such as: is “Wisniewski”, part of noun phrase, ends in “-ski”, …]

Lots of learning systems are not confounded by multiple, non-independent features: decision trees, neural nets, SVMs, …

Idea: replace the generative model in the HMM with a maxent model, where the state depends on:
• the observations: $\Pr(s_t \mid x_t)$
• the observations and the previous state: $\Pr(s_t \mid x_t, s_{t-1})$
• the observations and the previous state history: $\Pr(s_t \mid x_t, s_{t-1}, s_{t-2}, \ldots)$

Ratnaparkhi’s MXPOST
• Sequential learning problem: predict POS tags of words.
• Uses the MaxEnt model described above.
• Rich feature set.
• To smooth, discard features occurring < 10 times.
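To make the maxent idea concrete, here is a small sketch of a local model Pr(s_t | x_t, s_{t-1}) built from overlapping features of the current word plus the previous state. Everything specific in it is an assumption for illustration: the two-state label set, the feature names, and above all the hand-set weights, which a real maxent tagger would learn from data.

```python
import math

STATES = ["background", "person"]

def features(word, prev_state):
    """Overlapping binary features of the current word, plus the previous state."""
    feats = ["word=" + word.lower(), "prev=" + prev_state]
    if word[:1].isupper():
        feats.append("is_capitalized")
    if word.lower().endswith("ski"):
        feats.append("ends_in_ski")
    return feats

# Hypothetical hand-set weights, indexed by (feature, state); not learned
WEIGHTS = {
    ("is_capitalized", "person"): 1.5,
    ("ends_in_ski", "person"): 2.0,
    ("prev=person", "person"): 1.0,
    ("prev=background", "background"): 0.5,
}

def local_distribution(word, prev_state):
    """Pr(s_t | x_t, s_{t-1}) as a softmax over summed feature weights."""
    scores = {s: sum(WEIGHTS.get((f, s), 0.0) for f in features(word, prev_state))
              for s in STATES}
    z = sum(math.exp(v) for v in scores.values())
    return {s: math.exp(v) / z for s, v in scores.items()}

dist = local_distribution("Wisniewski", "background")
print(dist)
```

Because the model is conditional, the capitalization and suffix features can overlap freely; nothing requires them to be independent given the state.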
Conditional Markov Models (CMMs) aka MEMMs aka Maxent Taggers vs HMMs
[Figure: two chains over states … S_{t-1}, S_t, S_{t+1} … and observations O_{t-1}, O_t, O_{t+1}, one for each model]
• HMM: $\Pr(s, o) = \prod_i \Pr(s_i \mid s_{i-1}) \Pr(o_i \mid s_{i-1})$
• CMM: $\Pr(s \mid o) = \prod_i \Pr(s_i \mid s_{i-1}, o_{i-1})$

Extracting Relationships

What is “Information Extraction”
As a family of techniques: Information Extraction = segmentation + classification + association + clustering

What is “Information Extraction”
As a task: Filling slots in a database from sub-segments of text.

Example text:
23rd July 2009 05:51 GMT
Microsoft was in violation of the GPL (General Public License) on the Hyper-V code it released to open source this week.
After Redmond covered itself in glory by opening up the code, it now looks like it may have acted simply to head off any potentially embarrassing legal dispute over violation of the GPL. The rest was theater.
As revealed by Stephen Hemminger - a principal engineer with open-source network vendor Vyatta - a network driver in Microsoft's Hyper-V used open-source components licensed under the GPL and statically linked to binary parts. The GPL does not permit the mixing of closed and open-source elements.
… Hemminger said he uncovered the apparent violation and contacted Linux Driver Project lead Greg Kroah-Hartman, a Novell programmer, to resolve the problem quietly with Microsoft. Hemminger apparently hoped to leverage Novell's interoperability relationship with Microsoft.

Filled slots:
NAME                 TITLE                ORGANIZATION
Stephen Hemminger    principal engineer   Vyatta
Greg Kroah-Hartman   programmer           Novell
Greg Kroah-Hartman   lead                 Linux Driver Proj.

What is “Information Extraction”
Technique 1: NER + Segment + Classify Segments and Entities
(applied to the example text above)

Classify each segment:
• “Microsoft was in violation of the GPL … this week.”: does not contain worksAt fact
• “After Redmond covered itself in glory … The rest was theater.”: does not contain worksAt fact
• “As revealed by Stephen Hemminger … closed and open-source elements.”: does contain worksAt fact
• “Hemminger said he uncovered … relationship with Microsoft.”: does contain worksAt fact

Classify each entity in a segment that contains a worksAt fact:
Segment: “As revealed by Stephen Hemminger - a principal engineer with open-source network vendor Vyatta - a network driver in Microsoft's Hyper-V used open-source components licensed under the GPL and statically linked to binary parts. The GPL does not permit the mixing of closed and open-source elements. …”
• Stephen Hemminger: is in the worksAt fact
• principal engineer: is in the worksAt fact
• Microsoft: is not in the worksAt fact
• Vyatta: is in the worksAt fact
Result: NAME = Stephen Hemminger, TITLE = principal engineer, ORGANIZATION = Vyatta

The same approach with roles:
Segment: “Because of Stephen Hemminger’s discovery, Vyatta was soon purchased by Microsoft for $1.5 billion…”: does contain an acquired fact
• Microsoft: is in the acquired fact, role=acquirer
• Vyatta: is in the acquired fact, role=acquiree
• Stephen Hemminger: is not in the acquired fact
• $1.5 billion: is in the acquired fact, role=price

A problem for Technique 1:
Segment: “Hemminger said he uncovered the apparent violation and contacted Linux Driver Project lead Greg Kroah-Hartman, a Novell programmer, to resolve the problem quietly with Microsoft. Hemminger apparently hoped to leverage Novell's interoperability relationship with Microsoft.”
Does contain worksAt fact (actually two of them), and that’s a problem.

What is “Information Extraction”
Technique 2: NER + Segment + Classify EntityPairs from same segment
(same segment as in the problem case above)
[Figure: the entities in the segment (Hemminger, Microsoft, Linux Driver Project, programmer, Novell, lead, Greg Kroah-Hartman), with pairs of entities to be classified]

ACE: Automatic Content Extraction
A case study, or: yet another NIST bake-off

About ACE
• http://www.nist.gov/speech/tests/ace/ and http://projects.ldc.upenn.edu/ace/
• The five year mission: “develop technology to extract and characterize meaning in human language” … in newswire text, speech, and images
  – EDT: Develop NER for: people, organizations, geo-political entities (GPE), location, facility, vehicle, weapon, time, value … plus subtypes (e.g., educational organizations)
  – RDC: identify relation between entities: located, near, part-whole, membership, citizenship, …
  – EDC: identify events like interaction, movement, transfer, creation, destruction … and their arguments (entities)
Event = sentence plus a trigger word

Events, entities and mentions
• In ACE there is a distinction between an entity (a thing that exists in the Real World) and an entity mention (something that exists in the text: a substring).
• Likewise, an event is something that (will, might, or did) happen in the Real World, and an event mention is some text that refers to that event.
  – An event mention lives inside a sentence (the “extent”)
    • with a “trigger” (or anchor)
  – An event mention is defined by its type and subtype (e.g., Life:Marry, Transaction:TransferMoney) and its arguments
  – Every argument is an entity mention that has been assigned a role.
  – Arguments belong to the same event if they are associated with the same trigger.
• The entity-mention, trigger, extent, and argument are markup, and also define a possible decomposition of the event-extraction task into subtasks.
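The rule "arguments belong to the same event if they are associated with the same trigger" suggests a simple data layout, sketched below. This is not the ACE annotation format: the class names, the type tags "ORG" and "VALUE", and the use of the acquirer/acquiree/price roles (borrowed from the Vyatta example earlier in the lecture) are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class EntityMention:
    text: str         # substring of the document
    entity_type: str  # hypothetical type tag, e.g. "ORG"

@dataclass(frozen=True)
class Argument:
    mention: EntityMention
    role: str         # role assigned to the mention
    trigger: str      # trigger word this argument is attached to

def group_by_trigger(arguments):
    """Collect arguments into one event mention per trigger."""
    events = defaultdict(dict)
    for arg in arguments:
        events[arg.trigger][arg.role] = arg.mention.text
    return dict(events)

args = [
    Argument(EntityMention("Microsoft", "ORG"), "acquirer", "purchased"),
    Argument(EntityMention("Vyatta", "ORG"), "acquiree", "purchased"),
    Argument(EntityMention("$1.5 billion", "VALUE"), "price", "purchased"),
]
events = group_by_trigger(args)
print(events)
```

The sketch keeps one mention per role for brevity; an event with several arguments in the same role would need a list per role instead.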
The Webmaster Project: A Case Study
with Einat Minkov (LTI, now Haifa U), Anthony Tomasic (ISRI)
See IJCAI-2005 paper

Overview and Motivations
• What’s new:
  – Adaptive NLP components
  – Learn to adapt to changes in domain of discourse
  – Deep analysis in limited but evolving domain
• Compared to past NLP systems:
  – Deep analysis in narrow domain (Chat-80, SHRDLU, ...)
  – ...something in between...
  – Shallow analysis in broad domain (POS taggers, NE recognizers, NP-chunkers, ...)
  – Learning used as tool to develop non-adaptive NLP components
• Details:
  – Assume DB-backed website, where schema changes over time
    • No other changes allowed (yet)
  – Interaction:
    • User requests (via NL email) changes in factual content of website (assume update of one tuple)
    • System analyzes request
    • System presents preview page and editable form version of request
• Key points:
  – Partial correctness is useful
  – User can verify correctness (vs case for DB queries, q/a, ...) => source of training data

[Figure: system architecture. An email msg passes through Shallow NLP (POS tags, NP chunks, words, ...) and NER (entity1, entity2, ...). Feature Building produces features for classifiers (C), which a LEARNER trains from offline training data. The classifiers predict requestType, targetRelation, targetAttrib, and entity roles (newEntity1, ..., oldEntity1, ..., keyEntity1, ..., otherEntity1, ...). Update Request Construction uses the database and web page templates to produce a preview page and a user-editable form version of the request, which the User confirms.]

Outline
• Training data/corpus
  – look at feasibility of learning the components that need to be adaptive, using a static corpus
• Analysis steps:
  – request type
  – entity recognition
  – role-based entity classification
  – target relation finding
  – target attribute finding
  – [request building]
• Conclusions/summary

Training data
The same request, as phrased by different users (User1, User2, User3, ...):
• “Mike Roborts should be Micheal Roberts in the staff listing, pls fix it. Thanks - W”
• “On the staff page, change Mike to Michael in the listing for “Mike Roberts”.”
Training data
The same request, as phrased by different users (User1, User2, User3, ...):
• “Add this as Greg Johnson’s phone number: 412 281 2000”
• “Please add “412-281-2000” to greg johnson’s listing on the staff page.”

Training data – entity names are made distinct
• “Add this as Greg Johnson’s phone number: 412 281 2000”
• “Please add “543-341-8999” to fred flintstone’s listing on the staff page.”
Modification: to make entity-extraction reasonable, remove duplicate entities by replacing them with alternatives (preserving case, typos, etc)

Training data
[Figure: a grid of messages indexed by user (User1, User2, User3, ...) and request (Request1, Request2, Request3, ...); each cell is message(user i, req j)]

Training data – always test on a novel user?
• Train on the messages of some users, test on the messages of a user not seen in training
• Simulate a distribution of many users (harder to learn)

Training data – always test on a novel request?
• Train on the messages for some requests, test on the messages for a request not seen in training
• Simulate a distribution of many requests (much harder to learn)
• 617 emails total + 96 similar ones

Training data – limitations
• One DB schema, one off-line dataset
  – May differ from data collected on-line
  – So, no claims made for tasks where data will be substantially different (i.e., entity recognition)
  – No claims made about incremental learning/transfer
    • All learning problems considered separate
• One step of request-building is trivial for the schema considered:
  – Given entity E and relation R, to which attribute of R does E correspond?
  – So, we assume this mapping is trivial (general case requires another entity classifier)

Entity Extraction Results
• We assume a fixed set of entity types
  – no adaptivity needed (unclear if data can be collected)
• Evaluated:
  – hand-coded rules (approx cascaded FST in “Mixup” language)
  – learned classifiers with standard feature set and also a “tuned” feature set, which Einat tweaked
  – results are in F1 (harmonic avg of recall and precision)
  – two learning methods, both based on “token tagging”
    • Conditional Random Fields (CRF)
    • Voted-perceptron discriminative training for an HMM (VP-HMM)

Entity Extraction Results – v2 (CV on users)
[Figure: results chart]

Entity Classification Results
• Entity “roles”:
  – keyEntity: value used to retrieve a tuple that will be updated (“delete greg’s phone number”)
  – newEntity: value to be added to database (“William’s new office # is 5307 WH”)
  – oldEntity: value to be overwritten or deleted (“change mike to Michael in the listing for ...”)
  – irrelevantEntity: not needed to build the request (“please add .... – thanks, William”)
• Features:
  – closest preceding preposition
  – closest preceding “action verb” (add, change, delete, remove, ...)
  – closest preceding word which is a preposition, action verb, or determiner (in “determined” NP)
  – is entity followed by ’s
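The role-classification features listed above are simple enough to sketch directly. The code below is an illustration, not the Webmaster system's feature extractor: the function name `role_features`, the feature names, the preposition and determiner word lists, and the assumption that the possessive marker is its own token are all made up here. Only the four action verbs come from the slide.

```python
PREPOSITIONS = {"to", "for", "in", "on", "of", "from", "as", "with"}  # assumed list
ACTION_VERBS = {"add", "change", "delete", "remove"}                  # from the slide
DETERMINERS = {"the", "a", "an", "this", "that"}                      # assumed list

def role_features(tokens, start, end):
    """Features for the entity that occupies tokens[start:end]."""
    before = [t.lower() for t in tokens[:start]]

    def closest(vocab):
        # Scan leftwards from the entity for the nearest word in vocab
        for tok in reversed(before):
            if tok in vocab:
                return tok
        return None

    return {
        "prev_prep": closest(PREPOSITIONS),
        "prev_action_verb": closest(ACTION_VERBS),
        "prev_marker": closest(PREPOSITIONS | ACTION_VERBS | DETERMINERS),
        "followed_by_possessive": end < len(tokens)
                                  and tokens[end].lower() in {"'s", "’s"},
    }

tokens = ["delete", "greg", "'s", "phone", "number"]
feats = role_features(tokens, 1, 2)  # the entity is "greg"
print(feats)
```

For “delete greg’s phone number”, the action verb “delete” and the possessive together are the kind of evidence that would point a learned classifier toward the keyEntity role.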
Reasonable results with “bag of words” features.

Request type classification: addTuple, alterValue, deleteTuple, or deleteValue?
• Can be determined from entity roles, except for deleteTuple and deleteValue.
  – “Delete the phone # for Scott” vs “Delete the row for Scott”
• Features:
  – counts of each entity role
  – action verbs
  – nouns in NPs which are (probably) objects of action verb
  – (optionally) same nouns, tagged with a dictionary

Target attributes are similar
• Comments:
  – Very little data is available
  – Twelve words of schema-specific knowledge: dictionary of terms like phone, extension, room, office, ...

Other issues: a large pool of users and/or requests

Webmaster: the punchline

Conclusions?
• System architecture allows all schema-dependent knowledge to be learned
  – Potential to adapt to changes in schema
  – Data needed for learning can be collected from user
• Learning appears to be possible on reasonable time-scales
  – 10s or 100s of relevant examples, not thousands
  – Schema-independent linguistic knowledge is useful
• F1 in the eighties is possible on almost all subtasks.
  – Counter-examples are rarely-changed relations (budget) and distinctions for which little data is available
• There is substantial redundancy in different subtasks
  – Opportunity for learning suites of probabilistic classifiers, etc
• Even an imperfect IE system can be useful…
  – With the right interface…

[Figure: the architecture diagram again, now with a microform and a query DB step]

Webmaster: the Epilog (VIO)
Tomasic et al, IUI 2006
• Faster for request-submitter
• Zero time for webmaster
• Zero latency
• More reliable (!)
• Entity F1 ~= 84
• Micro-form selection accuracy ~= 80
• Used UI for experiments on real people (human-human, human-VIO)

Conclusions and comments
• Two case studies of non-trivial IE pipelines illustrate:
  – In any pipeline, errors propagate
  – What’s the right way of training components in a pipeline? Independently? How can (and when should) we make decisions using some flavor of joint inference?
• Some practical questions for pipeline components:
  – What’s downstream? What do errors cost?
  – Often we can’t see the end of the pipeline…
• How robust is the method?
  – new users, new newswire sources, new upstream components…
  – Do different learning methods/feature sets differ in robustness?
• Some concrete questions for learning relations between entities:
  – (When) is classifying pairs of things the right approach? How do you represent pairs of objects? How do you represent structure, like dependency parses? Kernels? Special features?