What is “Groupware”?

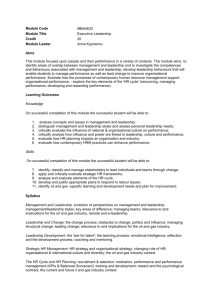

Evaluating Groupware (Evalueren van Groupware)
J.H. Erik Andriessen
Technische Universiteit Delft

Examples of ICT supported groups
• Teams of consultants in Germany
• Aviation design teams
• Home nursing in Sweden
• Unilever communities
• Ambulance ‘teams’

New work arrangements
Virtual offices
– Telework / Telecommuting
– Hotdesking / Hoteling
– Home workers
– Mobile work
Virtual groups
– Virtual fixed teams
– Virtual mobile teams
– Virtual communities

Developments in work settings
• Many modern organisations are “networking organisations”.
– Many problems/tasks require people with different skills and experiences
– Workforces are more distributed, and organisations are becoming more “virtual”
– Much work is done in groups
– Groups often work inefficiently
• Dispersed cooperation is possible, but to make such teams effective, knowledge is needed about:
– the functioning of groups in general,
– virtual groups, and
– the possibilities of ICT support

Virtual teams
So: virtual teams have advantages.
But: virtual teams also have problems:
– Interpersonal communication is more difficult: no nonverbal signals, no unplanned encounters, no context awareness
– And therefore: difficulty in all kinds of group processes: collaboration, coordination, developing trust, exchanging knowledge
– ICT tools have to match the type of group and its task, and they have to support the group processes

Global virtual teams
GVTs have even more problems:
• Time zone differences
• Language differences
• Cultural differences
Research suggests:
In individualistic cultures (EU, USA) people prefer direct expression of opinions; in virtual groups they prefer synchronous communication through telephone, video and chat.
In collectivist cultures (Asia, Africa) people are sensitive to non-verbal signals and group relations.
In virtual groups they prefer asynchronous communication such as e-mail, so that they can express themselves more carefully.

What is “Groupware”?
• Tools (hardware, software, processes) that support person-to-person collaboration
• This can include e-mail, bulletin boards, conferencing systems, decision support systems, video and workflow systems, etc.
• Some common groupware acronyms:
– Group Support Systems (GSS)
– Group Decision Support Systems (GDSS)
– Electronic Meeting Systems (EMS)
– Bulletin Board Systems (BBS)
– Group Collaboration Systems (GCS)
– Computer-Supported Cooperative Work (CSCW) systems

Three types of interaction
All collaboration technology implies:
1. Human-computer interaction
2. Human-database interaction (information seeking)
• Internet
• Intranet
• Group networks
3. Mediated interpersonal interaction (communication)

A Simple Classification of Groupware (adapted from Johansen, 1991)
• Same time, same location: GDSS; support for face-to-face (FtF) meetings
• Different time, same location: e-mail, bulletin board, computer conferencing
• Same time, different location: teleconferencing, instant messaging, chat, whiteboard, video
• Different time, different location: e-mail, bulletin board, computer conferencing, web-based CS

A GDSS Example

Video Conferencing

Groupware system

Five Basic group processes
• co-operation
• co-ordination
• communication
• learning by knowledge sharing
• social interaction, team building

Dynamic Group Interaction model
[Figure: Dynamic Group Interaction model. Labels: group characteristics (technology, persons, task, group formal structure, culture, physical setting) within an organisational environment; processes (individual interpretation and performance; group cooperation, communication, coordination, learning, social interaction); life cycles; reflection, learning, appropriation; outcomes (individual rewards, group vitality, organisational outcomes, emerging structures, changes in organisational setting).]

Basic Principles
• The effectiveness of a group can be expressed in terms of three types of outcomes, i.e. (quality and quantity of the) products, individual ‘rewards’ and vitality of the social relations.
• Effectiveness depends on the quality of the individual performance and six group processes, which have to match.
• The quality of the group processes depends on the support of six conditions, and on the interaction with the environment.
• The six aspects of the context-of-use have to fit each other.
• Groups develop, and tools are adopted and adapted, through interaction processes and feedback.
SUPPORT – MATCH – ADAPTATION

To evaluate the role of groupware tools
Evaluate:
• Technical properties
• Degree of fit to task, users, group, setting and other characteristics
• Degree of support for processes
• Effects on outcomes
• Possibilities to adapt
[Figure: model diagram centred on the tool. Labels: tool, persons, task, group formal structure, culture, physical setting; cooperation, communication, coordination, learning, social interaction; life cycles; reflection, learning, appropriation; individual rewards, group vitality, organisational outcomes.]

Evaluation issues in more detail
1. Describe the tool characteristics: reliability, portability, maintainability, network performance, costs, infrastructural quality, security/privacy, and evaluate whether these are adequate (ISO-9126).
2. Describe the functionalities.
3. Analyse the task and evaluate whether the functionalities fit the task.
4. Analyse the users and evaluate whether the tool fits the users (usability).
5. Analyse the group (structure, culture, setting) and evaluate whether the tool fits the group.
6. Evaluate whether the tool supports (or at least does not hinder) the group processes: communication, co-operation, co-ordination, learning, social interaction.
7. Evaluate whether the tool contributes to (or at least does not hinder) individual, group and organisational outcomes.
8. Evaluate to what extent the tools can be adapted to learning and new uses.

Evaluation principles
• Evaluation should be integrated in the design process from the very beginning.
• The design and the evaluation process should be iterative and stakeholder centred; critical success factors of stakeholders should be formulated.
• Evaluation can take place at three periods in the design life cycle:
– a. Concept evaluation
– b. Prototype evaluation
– c. Operational assessment

[Figure: design and evaluation process. Labels: analysis of task, context, technology, stakeholders; identification of technological and organisational options; development of future usage scenarios; design requirements; identification of stakeholders’ success factors; choice of evaluation approach; analysis of potential impacts; iterative tests of prototypes; iterative design and implementation; use, experience, adaptation of new system. Evaluation phases: concept evaluation, prototype evaluation, operational evaluation.]

Lessons learned (1)
1. Groupware is part of a social system. Design not for a tool as such but for a new socio-technical setting.
2. Design for several levels of interaction, i.e. for user-friendly human-computer interaction, adequate interpersonal communication, group co-operation and organisational functioning.
3. Design in a participative way, i.e. users and possibly other stakeholders should be part of the design process from the beginning.
4. Analyse carefully the situation of the users. Success of collaboration technology depends on the use and the users, not on the technology. Introduction should match their skills and abilities, and also their attitudes, otherwise resistance is inevitable.
5. Analyse carefully the context, since success of collaboration technology depends on the fit to that context. The more a new setting deviates from the existing one, the more time, energy and other resources should be mobilised to make it a success.

Lessons learned (2)
6. Introduce the new system carefully. Apply proper project management, find a champion, try a pilot, inform people intensively.
7. Train and support end-users extensively.
8. Measure success conditions and success criteria before, during and after the development process.
Only in this way can you learn for future developments.
9. Plan for a long process of introduction, incorporation, evaluation and adaptation. Groupware is not a quick fix.
10. Despite careful preparations, groupware is appropriated and adapted in unforeseen ways. Keep options open for new ways of working with the groupware, because this may result in creative and innovative processes.

Evaluation methods
1. Inspection methods
• Heuristic Evaluation
2. Performance analysis
• Human Reliability Analysis
3. Behaviour analysis
• Diagnostic Recorder for Usability Measurement (DRUM)
4. Effort and satisfaction
• NASA-Task Load IndeX (NASA-TLX)
• Measuring the Usability of Multi-Media Systems (MUMMS)
• MultiMedia Communication Questionnaire (MMCQ)

Evaluation Methods (2)
5. Task aspects and relations
• Extended Delft Measurement Kit
6. Network performance
7. System usage and interaction registration
• Automatic registration of the use of the system
• Coding schemes for communication content

[Figure: tool choice and use model. Labels: situational constraints; tool, person, task, setting, organisational environment; interpretation (personal goals/task and perceived usefulness of tools; social relations and norms; perceived ease of use of tools and perceived situational constraints); motivation to act and to choose and use tools; tool choice and use; task performance; outcomes; reflection, learning, appropriation.]

Diversity Hypothesis
[Figure: Diversity Hypothesis. Labels: fully dispersed; most conflict, least trust; three subgroups; two subgroups; least conflict, most trust.]
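
Returning to the effort and satisfaction methods listed under Evaluation methods: the slides name NASA-TLX but do not show how a score is obtained. The sketch below follows the scoring as it is commonly described for NASA-TLX: six subscales rated 0 to 100, and 15 pairwise comparisons that yield a weight of 0 to 5 per subscale. The function names, dictionary keys and the example ratings are hypothetical and chosen for illustration only; they do not come from the slides or from any particular tool.

```python
from itertools import combinations

# The six NASA-TLX subscales (key names are illustrative).
SUBSCALES = (
    "mental_demand", "physical_demand", "temporal_demand",
    "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Unweighted ("raw") TLX: the mean of the six 0-100 ratings."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings, pairwise_winners):
    """Weighted TLX.

    pairwise_winners maps each of the 15 subscale pairs to the subscale
    the participant judged to contribute more to workload. A subscale's
    weight is the number of comparisons it won (0 to 5).
    """
    pairs = list(combinations(SUBSCALES, 2))
    if set(pairwise_winners) != set(pairs):
        raise ValueError("expected exactly one winner for each of the 15 pairs")
    weights = {s: 0 for s in SUBSCALES}
    for pair, winner in pairwise_winners.items():
        if winner not in pair:
            raise ValueError(f"{winner!r} is not part of pair {pair!r}")
        weights[winner] += 1
    # The weights always sum to the number of pairs (15).
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / len(pairs)


# Hypothetical ratings from one participant after a video-conference task.
ratings = {
    "mental_demand": 60, "physical_demand": 15, "temporal_demand": 45,
    "performance": 30, "effort": 75, "frustration": 30,
}
# Hypothetical comparison data: the first subscale of every pair wins.
winners = {pair: pair[0] for pair in combinations(SUBSCALES, 2)}

print(raw_tlx(ratings))                 # 42.5
print(weighted_tlx(ratings, winners))   # 42.0
```

Comparing the two scores across tools or sessions only makes sense when the same variant (raw or weighted) is used throughout an evaluation.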