
University Of Rizal system

Morong, Rizal

General title: Joint and Marginal Distribution

Sub title: Joint Distribution Function

Introduction

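In the study of probability, given two random variables X and Y, the joint distribution of X and Y defines the probability of events defined in terms of both X and Y. In the case of two variables, the joint distribution function is defined by

$F_{XY}(x, y) = P(X \le x, Y \le y)$,

with marginal distribution functions $F_X(x) = \lim_{y \to +\infty} F_{XY}(x, y)$ and $F_Y(y) = \lim_{x \to +\infty} F_{XY}(x, y)$. When X and Y have a joint probability density function $f_{XY}$, the probability that the pair falls in a region C satisfies

$P[(X, Y) \in C] = \iint_{C} f_{XY}(x, y)\,dx\,dy$.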
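As a numerical illustration of the region formula (the probability of a region C is the double integral of the joint density over C), the sketch below assumes X and Y are independent Exponential(1) variables, so $f_{XY}(x, y) = e^{-x-y}$ for $x, y \ge 0$, and takes C to be the triangle $x + y \le 1$ in the first quadrant. The distribution and the region are assumptions chosen for illustration; integrating the density over C with an iterated midpoint rule should reproduce the closed form $P(X + Y \le 1) = 1 - 2/e$.

```python
import math

def joint_pdf(x: float, y: float) -> float:
    """Assumed joint density: X, Y independent Exponential(1)."""
    if x < 0 or y < 0:
        return 0.0
    return math.exp(-x - y)

def prob_triangle(n: int = 400) -> float:
    """P[(X, Y) in C] for C = {x >= 0, y >= 0, x + y <= 1}, computed as an
    iterated midpoint-rule integral of the joint density over C."""
    hx = 1.0 / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * hx          # midpoint of the i-th x-slice
        upper = 1.0 - x             # for this x, y runs over [0, 1 - x]
        hy = upper / n
        inner = sum(joint_pdf(x, (j + 0.5) * hy) for j in range(n)) * hy
        total += inner * hx
    return total

exact = 1.0 - 2.0 / math.e          # closed form of P(X + Y <= 1) for this density
approx = prob_triangle()
print(f"numerical {approx:.6f}  exact {exact:.6f}")
```

Integrating the inner variable only up to $1 - x$ keeps the integrand smooth on each slice, which is more accurate than summing an indicator of C over a square grid.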

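The joint distribution function $F_{XY}$, also called the joint cumulative distribution function (CDF) or cumulative frequency function, describes the probability that the variate X takes on a value less than or equal to a number x and, at the same time, Y takes on a value less than or equal to a number y. The joint distribution function is therefore related to a continuous joint probability density function $f_{XY}$ by

$F_{XY}(x, y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f_{XY}(u, v)\,dv\,du$,

so $f_{XY}$ (when it exists) is simply the mixed partial derivative of the joint distribution function:

$f_{XY}(x, y) = \dfrac{\partial^2 F_{XY}(x, y)}{\partial x\,\partial y}$.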
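The statement that the joint density is the mixed second partial derivative of the joint CDF can be checked numerically by estimating that derivative with a central finite difference and comparing it with f. This sketch assumes independent Exponential(1) variables, with $F_{XY}(x, y) = (1 - e^{-x})(1 - e^{-y})$ and $f_{XY}(x, y) = e^{-x-y}$ for $x, y > 0$; the step size `h` is an arbitrary choice.

```python
import math

def joint_cdf(x: float, y: float) -> float:
    """Assumed joint CDF of independent Exponential(1) variables X and Y."""
    if x <= 0 or y <= 0:
        return 0.0
    return (1.0 - math.exp(-x)) * (1.0 - math.exp(-y))

def joint_pdf(x: float, y: float) -> float:
    """Density of the same assumed distribution: e^(-x-y) for x, y >= 0."""
    if x < 0 or y < 0:
        return 0.0
    return math.exp(-x - y)

def mixed_partial(F, x: float, y: float, h: float = 1e-4) -> float:
    """Central finite-difference estimate of d^2 F / (dx dy) at (x, y)."""
    return (F(x + h, y + h) - F(x + h, y - h)
            - F(x - h, y + h) + F(x - h, y - h)) / (4.0 * h * h)

for point in [(0.5, 0.5), (1.0, 2.0), (0.3, 1.7)]:
    estimate = mixed_partial(joint_cdf, *point)
    print(point, round(estimate, 6), round(joint_pdf(*point), 6))
```

The four-term numerator in `mixed_partial` is the probability that (X, Y) lands in a small square of side 2h centred at (x, y), so dividing by the square's area $4h^2$ turns a probability into a density.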

The probability that an experiment produces a pair $(X_1, X_2)$ falling in the rectangular region with lower-left corner $(a, c)$ and upper-right corner $(b, d)$ is

$P[(a < X_1 \le b) \cap (c < X_2 \le d)] = F_{X_1X_2}(b, d) - F_{X_1X_2}(a, d) - F_{X_1X_2}(b, c) + F_{X_1X_2}(a, c)$.
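One way to convince yourself that the four-term rectangle formula is right, and that it does not rely on independence, is to compare it with a direct sum over a discrete joint pmf. The table `PMF` below is hypothetical, chosen only so that $X_1$ and $X_2$ are dependent; exact fractions avoid rounding noise.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x1, x2) on {0, 1, 2} x {0, 1, 2}; X1 and X2 are
# deliberately dependent, since the rectangle formula does not need independence.
PMF = {
    (0, 0): Fraction(1, 8),  (0, 1): Fraction(1, 16), (0, 2): Fraction(1, 16),
    (1, 0): Fraction(1, 16), (1, 1): Fraction(1, 4),  (1, 2): Fraction(1, 16),
    (2, 0): Fraction(1, 16), (2, 1): Fraction(1, 16), (2, 2): Fraction(1, 4),
}

def joint_cdf(x1, x2):
    """F(x1, x2) = P[(X1 <= x1) and (X2 <= x2)]."""
    return sum((p for (a, b), p in PMF.items() if a <= x1 and b <= x2), Fraction(0))

def rect_prob_from_cdf(a, b, c, d):
    """P[(a < X1 <= b) and (c < X2 <= d)] via the four-term CDF formula."""
    return joint_cdf(b, d) - joint_cdf(a, d) - joint_cdf(b, c) + joint_cdf(a, c)

def rect_prob_direct(a, b, c, d):
    """The same probability, summing the pmf over the rectangle directly."""
    return sum((p for (x1, x2), p in PMF.items()
                if a < x1 <= b and c < x2 <= d), Fraction(0))

print(rect_prob_from_cdf(0, 2, 0, 2), rect_prob_direct(0, 2, 0, 2))  # prints 5/8 5/8
```

The strict inequality on the lower edges ($a < X_1$, $c < X_2$) matters for discrete variables: the subtracted terms $F(a, \cdot)$ and $F(\cdot, c)$ remove exactly the points with $X_1 \le a$ or $X_2 \le c$.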

Definition of Terms

Events – sets of outcomes of an experiment, that is, subsets of the sample space.

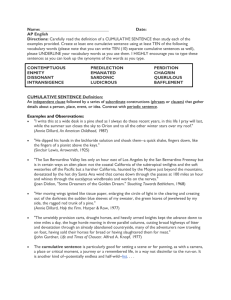

Cumulative Distribution Function – In probability theory and statistics, the cumulative distribution function (CDF), or simply the distribution function, $F_X(x) = P(X \le x)$, completely describes the probability distribution of a real-valued random variable X. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Continuous Probability Density Function – a function that describes the relative likelihood for a continuous random variable to take a value at a given point in the observation space. Probabilities are obtained by integrating the density over a region, not by evaluating it at a single point.

Example

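$F_{X_1X_2}(x_1, x_2) = P[(X_1 \le x_1) \cap (X_2 \le x_2)]$

1. $F_{X_1X_2}(-\infty, -\infty) = 0$

2. $F_{X_1X_2}(-\infty, x_2) = 0$ for any $x_2$

3. $F_{X_1X_2}(x_1, -\infty) = 0$ for any $x_1$

4. $F_{X_1X_2}(+\infty, +\infty) = 1$

5. $F_{X_1X_2}(+\infty, x_2) = F_{X_2}(x_2)$ for any $x_2$

6. $F_{X_1X_2}(x_1, +\infty) = F_{X_1}(x_1)$ for any $x_1$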
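The six properties listed above can be spot-checked on a concrete joint CDF. This sketch assumes $X_1$ and $X_2$ are independent Exponential(1) variables, so $F_{X_1X_2}(x_1, x_2) = (1 - e^{-x_1})(1 - e^{-x_2})$ for positive arguments and 0 otherwise; Python's `math.inf` stands in for $\pm\infty$, and `marginal_cdf` is a hypothetical helper for the marginal of either component.

```python
import math

def joint_cdf(x1: float, x2: float) -> float:
    """Assumed example: X1, X2 independent Exponential(1)."""
    if x1 <= 0 or x2 <= 0:
        return 0.0
    return (1.0 - math.exp(-x1)) * (1.0 - math.exp(-x2))

def marginal_cdf(x: float) -> float:
    """Marginal CDF of each Exponential(1) component."""
    return 0.0 if x <= 0 else 1.0 - math.exp(-x)

inf = math.inf
assert joint_cdf(-inf, -inf) == 0.0                  # property 1
for t in [-1.0, 0.5, 2.0, 10.0]:
    assert joint_cdf(-inf, t) == 0.0                 # property 2
    assert joint_cdf(t, -inf) == 0.0                 # property 3
    assert joint_cdf(inf, t) == marginal_cdf(t)      # property 5
    assert joint_cdf(t, inf) == marginal_cdf(t)      # property 6
assert joint_cdf(inf, inf) == 1.0                    # property 4
print("all six properties hold for this example")
```

Because `math.exp(-math.inf)` evaluates to `0.0`, the limits at infinity are reached exactly here instead of only approximately, which keeps the equality checks meaningful.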

Summary:

• We are often interested in probability statements involving several random variables (RVs).

• Example: Two people, A and B, each flip a coin twice. Let X be the number of heads obtained by A and Y the number of heads obtained by B. Find $P(X > Y)$.

• Discrete case: joint probability mass function $p(x, y) = P(X = x, Y = y)$.

  – Example: two coins, one fair and the other two-headed. A randomly chooses one and B takes the other. Let $X = 1$ if A gets a head and $X = 0$ if A gets a tail; let $Y = 1$ if B gets a head and $Y = 0$ if B gets a tail. Find $P(X \ge Y)$.

• The marginal probability mass function of X can be obtained from the joint probability mass function p(x, y): $p_X(x) = \sum_{y:\,p(x, y) > 0} p(x, y)$. Similarly, $p_Y(y) = \sum_{x:\,p(x, y) > 0} p(x, y)$.

• Continuous case: joint probability density function f(x, y), with $P\{(X, Y) \in R\} = \iint_{R} f(x, y)\,dx\,dy$.

• Marginal pdf: $f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy$ and $f_Y(y) = \int_{-\infty}^{\infty} f(x, y)\,dx$.

• Joint cumulative probability distribution function of X and Y: $F(a, b) = P\{X \le a, Y \le b\}$, $-\infty < a, b < \infty$.

• Marginal cdf: $F_X(a) = F(a, \infty)$ and $F_Y(b) = F(\infty, b)$.

• Expectation: $E[g(X, Y)] = \sum_{y} \sum_{x} g(x, y)\,p(x, y)$ in the discrete case, and $E[g(X, Y)] = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} g(x, y)\,f(x, y)\,dx\,dy$ in the continuous case.

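• Based on the joint distribution, we can derive $E[aX + bY] = aE[X] + bE[Y]$.

Extension: $E[a_1X_1 + a_2X_2 + \cdots + a_nX_n] = a_1E[X_1] + a_2E[X_2] + \cdots + a_nE[X_n]$.

Example: $E[X]$ when X is binomial with parameters n and p. Let $X_i = 1$ if the ith flip is a head and $X_i = 0$ if it is a tail. Then $X = \sum_{i=1}^{n} X_i$, and since $E[X_i] = P(X_i = 1) = p$, we get $E[X] = \sum_{i=1}^{n} E[X_i] = np$.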
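The discrete examples in the summary can be checked with exact rational arithmetic. The sketch below assumes the coin in the first example is fair and that A's and B's flips are independent (the summary does not state this); the helper `binomial_mean_by_enumeration` is a hypothetical name introduced only for this illustration. It computes $E[X]$ by brute-force enumeration rather than by linearity, so agreement with np is an independent check of the indicator argument.

```python
from fractions import Fraction
from itertools import product

half = Fraction(1, 2)

# Example 1 (assumes a fair coin and independent flips by A and B):
# X and Y are each Binomial(2, 1/2), so they share this pmf.
heads_pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
p_x_gt_y = sum(heads_pmf[x] * heads_pmf[y]
               for x in heads_pmf for y in heads_pmf if x > y)

# Example 2: one fair coin and one two-headed coin. A picks a coin at random
# and B takes the other; X = 1 if A gets a head, Y = 1 if B gets a head.
joint = {}
for a_has_fair in (True, False):       # A's choice, probability 1/2 each
    for flip in (0, 1):                 # fair coin's outcome, probability 1/2 each
        # the two-headed coin always shows a head (1)
        x, y = (flip, 1) if a_has_fair else (1, flip)
        joint[(x, y)] = joint.get((x, y), Fraction(0)) + half * half

p_x_ge_y = sum(p for (x, y), p in joint.items() if x >= y)

# Marginal pmf of X: p_X(x) = sum over y of p(x, y)
p_X = {}
for (x, y), p in joint.items():
    p_X[x] = p_X.get(x, Fraction(0)) + p

def binomial_mean_by_enumeration(n: int, p: Fraction) -> Fraction:
    """E[X] for X ~ Binomial(n, p), computed as the sum over every head/tail
    sequence of P(sequence) * (number of heads), i.e. without linearity."""
    total = Fraction(0)
    for seq in product((0, 1), repeat=n):   # seq[i] plays the role of X_i
        prob = Fraction(1)
        for xi in seq:
            prob *= p if xi == 1 else 1 - p
        total += prob * sum(seq)
    return total

print(p_x_gt_y, p_x_ge_y, p_X)
print(binomial_mean_by_enumeration(4, Fraction(1, 3)), 4 * Fraction(1, 3))
```

Under these assumptions the script gives $P(X > Y) = 5/16$ and $P(X \ge Y) = 3/4$, with marginal $p_X(0) = 1/4$, $p_X(1) = 3/4$; the enumerated mean for n = 4, p = 1/3 equals np = 4/3.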

John Clement C. Ulang

II-D1