Assessment for Learning

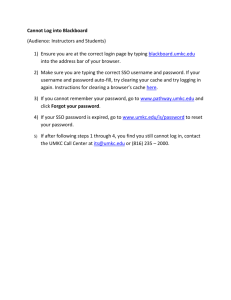

FaCET Workshop on Assessment Basics
Nathan Lindsay
September 18, 2013

Can dogs talk?

Our Vision for Assessment
• To provide sufficient support and guidance to help you realize the dividends for the time/effort invested
   ◦ Enhanced learning
   ◦ Improved programs/degrees
   ◦ Greater communication about teaching/learning among faculty
• To create a culture of learning, where striving to enrich our students’ learning is what is valued

Poll: I think that good teaching is more art than science.
1. Strongly agree
2. Agree
3. Neither agree nor disagree
4. Disagree
5. Strongly disagree
6. Not applicable
[Chart: poll responses]

Some Guiding Assumptions…
• Teaching and learning can be improved through systematic inquiry
• Assessment is always a work in progress, and it’s ok if things don’t go perfectly
• Assessment is about lessons learned in the efforts to enhance learning/teaching
• Goal of the Assessment Annual Report = To demonstrate concerted effort on the part of faculty to examine student outcomes and make appropriate adjustments to improve program

Poll: I think that the quality of student learning at UMKC is excellent.
1. Strongly agree
2. Agree
3. Neither agree nor disagree
4. Disagree
5. Strongly disagree
6. Don’t know/Not applicable
[Chart: poll responses]

Four “Big Picture” questions to ask about assessment
• How do you define a successful student?
• What have you learned about your students’ learning?
• Are you satisfied with the results?
• If not satisfied with the results, what are you going to do about it?

Assessing Our University’s (& Your Department’s) Assessment Efforts

Compliance            Commitment
External Questions    Internal Questions
Number & Amount       Quality & Utility
Reporting             Interpreting
Collecting it         Using it
Accreditation         Learning

Initial Assessment Components for Each Academic Degree
• Mission statement
• Goals (usually 2-3)
• Learning Outcomes (usually 3-7)
   ◦ Remember: SMART
   ◦ Specific
   ◦ Measurable
   ◦ Attainable
   ◦ Relevant/Results-Oriented
   ◦ Time-bound

Measurements
Complete Measurements Process
• What instrument? Why?
   ◦ Formative or summative assessment?
   ◦ Direct or indirect measure?
   ◦ If possible, it’s best to use multiple measures
• How to conduct the measurement?
   ◦ Which students? When measured? Where?
   ◦ How administered? By whom?
   ◦ Often good to use smaller samples of students; capstone courses
• How to collect and store data?
• Who analyzes data? How? When?
• Who reports?
   ◦ To faculty: how? when? where?
   ◦ To WEAVE?

Achievement Targets
• What kind of performance do you expect from your students on your learning outcomes?
• What is the desirable level of performance for your students?
   ◦ Rubrics can clarify this (see the next slides)
• What percentage of students do you expect to achieve this?

Using Rubrics
• A rubric is: “a set of criteria and a scoring scale that is used to assess and evaluate students’ work” (Campbell, Melenyzer, Nettles, & Wyman, 2000).
• Addresses performance standards in a clear and concise manner (which students appreciate!)
• Clearly articulates to students the areas of improvement needed to meet these standards
• Blackboard has a new Rubric feature that makes the process straightforward and easier
• To find examples, Google rubrics for your discipline, or see the Rubistar website http://rubistar.4teachers.org/

Example of a Rubric
UMKC Foreign Languages and Literatures Assessment Tool for Oral Proficiency Interview, adapted from “Interpersonal Mode Rubric Pre-Advanced Learner” 2003 ACTFL

Category: Comprehensibility
Who can understand this person’s meaning? How sympathetic must the listener be? Does it need to be the teacher or could a native speaker understand the speaker? How independent of teaching situation is the conversation?
• Exceeds Expectations: Easily understood by native speakers, even those unaccustomed to interacting with language learners. Clear evidence of culturally appropriate language.
• Meets Expectations: Although there may be some confusion about the message, generally understood by those unaccustomed to interacting with language learners.
• Does Not Meet Expectations: Generally understood by those accustomed to interacting with language learners.

Category: Language Control
Accuracy, form, appropriate vocabulary, degree of fluency
• Exceeds Expectations: High degree of accuracy in present, past and future time. Accuracy may decrease when attempting to handle abstract topics.
• Meets Expectations: Most accurate with connected discourse in present time. Accuracy decreases when narrating and describing in time frames other than present.
• Does Not Meet Expectations: Most accurate with connected sentence-level discourse in present time. Accuracy decreases as language becomes complex.

How to build a rubric
Answer the following questions:
• Given your broad course goals, what determines the extent of student understanding?
• What criterion counts as EVIDENCE of student learning?
• What specific characteristics in student responses, products or performances should be examined as evidence of student learning?

Developing a rubric helps you to clarify the characteristics/components of your Learning Outcomes
For example: Can our students deliver an effective Public Speech?
eye contact, style, appearance, gestures, rate, evidence, volume, poise, conclusion, sources, transitions, examples, verbal variety, organization, attention getter

Rubrics Resources at UMKC
Two new pages discussing rubrics are available on UMKC’s Blackboard Support Site.
• http://www.umkc.edu/ia/its/support/blackboard/faculty/rubrics.asp
• http://www.umkc.edu/ia/its/support/blackboard/faculty/rubrics-bb.asp

Training for Rubrics on Blackboard
For assistance with using Rubrics in Blackboard, please contact Molly Mead, Instructional Designer, E-Learning Experiences, at 235-6595 or meadmo@umkc.edu

More rubric help
• AACU Rubrics: http://www.aacu.org/value/rubrics
• Rubrics from Susan Hatfield (HLC Mentor): www.winona.edu/air/rubrics.htm
• Rubistar: http://rubistar.4teachers.org/

Findings
What do the data tell you?
Part I: specific findings
• Compare new data to achievement targets
• Did students meet or deviate from expectations?
• Important: Include specific numbers/percentages when possible
• Do not use course grades or pass rates.
• Optional: Post anonymous data or files in WEAVE Document Management section

Findings (cont.)
What do the data tell you?
Part II: general findings
• What lessons did your faculty learn from this evidence about your students?
• What broader implications do you draw about your program?
   ◦ Ex: curriculum, admissions, administration, policies, requirement, pedagogy, assessment procedures, and so on
   ◦ Conversations: The more people involved, the better!

Action Plans
Concrete Steps for Change
• List of specific innovations that you would like to introduce in AY 2013-14 to address lessons learned in AY 2012-13.
   ◦ Again, in curriculum, admissions, administration, policies, requirement, pedagogy, assessment procedures, and so on
• Resources? Time Period? Point Person?
• It is best to have documentation of the changes made through these Action Plans (e.g., in syllabi, the course catalogue, meeting minutes)

Submitting the Assessment Annual Report
Part I: Detailed Assessment Report
“Assessment Plan Content”
• All items (mission -> action plans) submitted in WEAVEonline
• To log in to WEAVE, go to https://app.weaveonline.com/umkc/login.aspx

Using WEAVE for the 2012-2013 Assessment Cycle
• Everything from previous cycles has carried over into the 2012-2013 assessment cycle
• If you are creating entirely new goals, learning outcomes, etc., don’t write these over the top of old items (this will mess up your linked associations in WEAVE). Create new ones.
• If you need to delete something in WEAVE, please contact me, and I will do it for you

Sharing Assessment Plans: Printing Reports from WEAVE
• Click on the “Reports” tab
• Under “Select cycle,” choose your cycle (the 2012-2013 cycle should be chosen if you’d like your findings listed)
• Under “Select a report,” there is a button you can select for “Assessment Data by Section” to make your report a little shorter
• Under “Select report entities,” choose the areas you would like to report

Printing Reports from WEAVE (cont.)
• Click on “Next” (on the right side of the page)
• On the second page, under “Report-Specific Parameters,” click on “Keep user-inserted formatting.”
• Click on “Run” (on the right side of the page)
• The Report will come up in a new window, and this can be copied and pasted into a Word document.

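For the Findings entries in Part I, comparing new data with an achievement target comes down to a small calculation: count the students who reached the desired rubric level and compare that percentage with the target. The sketch below is only an illustration. The three-level scale, the `summarize_finding` helper, the 80% target, and the ratings are all hypothetical and are not taken from WEAVE or from any UMKC program.

```python
from dataclasses import dataclass

# Hypothetical three-level rubric scale, ordered from lowest to highest.
LEVELS = (
    "Does Not Meet Expectations",
    "Meets Expectations",
    "Exceeds Expectations",
)


@dataclass
class Finding:
    students_assessed: int
    students_at_or_above: int
    percent_at_or_above: float
    target_percent: float
    target_met: bool


def summarize_finding(ratings, minimum_level, target_percent):
    """Compare rubric ratings for one learning outcome with an achievement target."""
    if not ratings:
        raise ValueError("at least one rating is required")
    threshold = LEVELS.index(minimum_level)
    # Count students rated at the desired level or higher.
    at_or_above = sum(1 for rating in ratings if LEVELS.index(rating) >= threshold)
    percent = 100.0 * at_or_above / len(ratings)
    return Finding(
        students_assessed=len(ratings),
        students_at_or_above=at_or_above,
        percent_at_or_above=round(percent, 1),
        target_percent=target_percent,
        target_met=percent >= target_percent,
    )


# Made-up ratings for 20 students in a capstone course (not real data).
ratings = (
    ["Exceeds Expectations"] * 5
    + ["Meets Expectations"] * 12
    + ["Does Not Meet Expectations"] * 3
)
finding = summarize_finding(ratings, "Meets Expectations", target_percent=80.0)
print(
    f"{finding.students_at_or_above} of {finding.students_assessed} students "
    f"({finding.percent_at_or_above}%) reached the desired level; "
    f"target {finding.target_percent}%; target met: {finding.target_met}"
)
```

Reporting the count, the percentage, and the target together matches the advice to include specific numbers/percentages in the findings rather than course grades or pass rates.
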
Assessment Plan Narrative
Part II: Timeline/Account of Activities
“Assessment Plan Narrative”
• In 1-2 pages, tell the story of all the work and careful consideration you and your colleagues accomplished in your assessment work this year (Ex.: meetings, mentoring, experiments, setbacks, lessons learned)
• Submit this in the Document Management section in WEAVE
• Please follow the four outlined questions (see next slide)

Four Questions for the Assessment Narrative
1) Process: Please describe the specific activities and efforts used to design, implement, and analyze your assessment plan during this academic year. This narrative might be organized chronologically, listing meetings, mentoring sessions, and experiments at each stage of the developmental process including the names of people involved in various capacities, with each event given one paragraph.
2) Positives: Please describe what was most useful about the assessment process, or what went well. What did you learn about your faculty, students, or program through this experience?
3) Challenges: Please describe the challenges you encountered in terms of the development or implementation of your assessment procedures, as well as the lessons you learned from this experience and your efforts or plans for overcoming them. This section might be organized topically.
4) Support: Please describe your program’s experience during the past year with the support and administrative structures in place at UMKC for Assessment: the Provost’s Office, the University Assessment Committee, FaCET, and so on. If there are ways in which these areas could be improved to better support your efforts in assessment, please make those suggestions here.

Avoiding “Garbage In, Garbage Out”
• An assessment plan submitted for each degree is not enough
• Focus on encouraging best practices
• Enhancing overall quality through:
   ◦ One-on-one mentoring
   ◦ Multiple drafts/iterative process
   ◦ Timely and thorough peer review given for all degrees and programs (requiring many hours!)

Submission: October 1st 2013
• Final reporting complete for the 2012-2013 assessment cycle
• No edits allowed after 1st of October
• During the fall semester, the University Assessment Committee and the Asst. VP for Assessment will give feedback on these Annual Reports

After October 1st
• Assessment entries for AY 2013-14 begin
• Assessment Cycle runs from June 1, 2013 to May 30, 2014
• Need to implement the Action Plans from last year
• Update mission statements, goals, learning outcomes, and measurements based on feedback from UAC.
• Items in WEAVE carry over from last year unless changed.
• Enter new findings and action plans.

Assessment Resources
• University Assessment website: http://www.umkc.edu/assessment/index.cfm
   ◦ Academic degree assessment
   ◦ General education assessment
   ◦ University Assessment Committee

Assessment Resources
• Assessment Handbook
   ◦ Core principles and processes regarding UMKC assessment
   ◦ WEAVE guidelines
   ◦ Assessment glossary
   ◦ 10 FAQs
   ◦ Appendices
• Available at http://www.umkc.edu/provost/academicassessment/downloads/handbook-2011.pdf

Assessment Projects from Recent Years
• UMKC Assessment Plan (see handout) and General Education Assessment Plan (http://www.umkc.edu/assessment/downloads/generaleducation-assessment-plan-6-28-12.pdf)
• Develop assessment plans for free-standing minors and certificate programs
• Use the major field exams, WEPT (now RooWriter), and ETS-Proficiency Profile to inform practices across the campus
• Conduct pilot assessments for General Education

Goals for 2011-2012, 2012-2013
Here’s what we hope to see in the WEAVE reports and narratives
• More faculty/staff involvement within each department
• Additional learning outcomes measured (so that all outcomes
are measured in a three-year cycle)
• Data showing that changes made to curriculum, pedagogy, advising, services, etc. were related to higher student learning outcomes. In other words, if scores from 2012-2013 are significantly higher than the previous year, please highlight these.
• Again, we need to have assessment findings and action plans from 100% of departments for our Higher Learning Commission requirements

Ongoing Assessment Initiatives at UMKC
• Helping faculty to develop their assessment plans for the new General Education courses
• Integrating assessment work more effectively with the Program Evaluation Committee
• Having departments post their student learning outcomes on their websites
• Encouraging departments to establish departmental level assessment committees

A Few More Areas of Assessment Progress
• Encouraging higher order thinking as students progress through the curriculum
• Using multiple types of assessments
• Assessing students’ learning in high impact experiences (internships, undergraduate research, service learning, study abroad)
• Student surveys gauging their learning/satisfaction in the department
• Making sure that the curriculum and pedagogy are more directly tied to your learning outcomes (i.e., curriculum mapping)

Lower level course outcomes
• KNOWLEDGE: Cite, Count, Define, Draw, Identify, List, Name, Point, Quote, Read, Recite, Record, Repeat, Select, State, Tabulate, Tell, Trace, Underline
• COMPREHENSION: Associate, Classify, Compare, Compute, Contrast, Differentiate, Discuss, Distinguish, Estimate, Explain, Express, Extrapolate, Interpolate, Locate, Predict, Report, Restate, Review, Tell, Translate
• APPLICATION: Apply, Calculate, Classify, Demonstrate, Determine, Dramatize, Employ, Examine, Illustrate, Interpret, Locate, Operate, Order, Practice, Report, Restructure, Schedule, Sketch, Solve, Translate, Use, Write

Advanced Course / Program outcomes
• ANALYSIS: Analyze, Appraise, Calculate, Categorize, Classify, Compare, Debate, Diagram, Differentiate, Distinguish, Examine, Experiment, Inspect, Inventory, Question, Separate, Summarize, Test
• SYNTHESIS: Arrange, Assemble, Collect, Compose, Construct, Create, Design, Formulate, Integrate, Manage, Organize, Plan, Prepare, Prescribe, Produce, Propose, Specify, Synthesize, Write
• EVALUATION: Appraise, Assess, Choose, Compare, Criticize, Determine, Estimate, Evaluate, Grade, Judge, Measure, Rank, Rate, Recommend, Revise, Score, Select, Standardize, Test, Validate

Program Level Student Learning Outcomes
[Curriculum map: outcomes 1-7 charted against courses 1xx, 2xx, 3xx, 4xx, and Capstone]
K = Knowledge/Comprehension; A = Application/Analysis; S = Synthesis/Evaluation

Questions?

Contact Information
For assistance with assessment, please contact:
• Nathan Lindsay, Assistant Vice Provost for Assessment, at 235-6084 or lindsayn@umkc.edu
• (After November 1st) Barb Glesner Fines, FaCET Mentor for Assessment, at 235-2380 or glesnerb@umkc.edu