Course Readings - School of Education

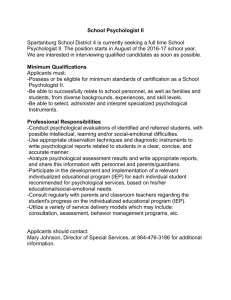

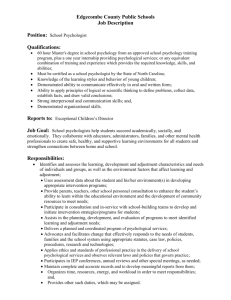

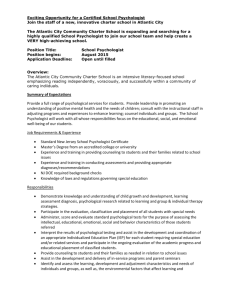


Syllabus
PIE 2030 – Experimental Design

Instructor: Clement A. Stone
Office: 5920 Posvar Hall; 624-9359; email: cas@pitt.edu

Description

The course is designed to introduce students to different methods in experimental design. Topics include characteristics of experimental research, steps for implementing an experiment, design issues as they relate to internal and external validity, classification of experimental designs, sampling, and design techniques such as blocking, analysis of covariance, and assessment of change. Other research methods will be discussed, including survey research, meta-analysis, and quasi-experimental designs.

General course objectives include:

1) Understand the goals of experimental design and causal inference
2) Understand how experimental designs differ from other research
3) Understand what specific experimental design techniques are intended to accomplish
4) Understand connections between specific experimental design techniques and statistical analyses
5) Understand different quasi-experimental designs and what the designs are intended to accomplish
6) Be able to recognize strengths and weaknesses of various research design practices, including experimental research, survey research, meta-analysis, and quasi-experimental research

Course Prerequisites

Introduction to Research Methodology (PIE2001 or equivalent)
Introductory Statistics course (PIE2018 or equivalent)

Students should have an understanding of simple statistical concepts (mean, variance, normal distribution, correlation) as well as an understanding of basic methods such as linear regression, the t-test, and hypothesis testing.

Course Readings

Required: Shadish, Cook, & Campbell. Experimental and Quasi-experimental Designs for Generalized Causal Inference. Publisher: Wadsworth, Cengage Learning (2002).
Recommended: Christensen, Johnson, & Turner. Research Methods, Design, and Analysis, 11th Edition. Publisher: Pearson (2010).
Course Evaluation

Homework (contributing 20% to the final grade) will be assigned for each topic area. For each day that answers to an exercise are submitted late, 20% of the possible points will be deducted. There will be two examinations, a midterm and a final, each contributing 40% to the final grade. Each exam will consist of short-answer and multiple-choice questions. Exams are closed book. Class participation in discussions is expected.

Schedule (tentative)

Week and topic; Readings in Shadish et al.; Readings in Christensen et al.

Ch. 1-3 Ch. 1, 2, 6, Reading
1 - Introduction to course: review of concepts. Review of Statistical Concepts (Ch. 14-15)
2 - Experimental design
3 - MLK Day
4 - Experimental design (cont.)
5 - Issues in analyzing data from experimental designs
6 - Issues in analyzing data from experimental designs (cont.)
7 - Issues in analyzing data from experimental designs (cont.)
8 - Midterm
9 - Meta-analysis
Ch. 8 Ch. 7, 8 Reading Ch. 15 Ch. 13, Readings pg. 17
10 - Spring Break
11 - Introduction to sampling Ch. 9 Ch. 5
12 - Determining sample size Reading Part of Ch. 9
13 - Quasi-experimental research and causal inference revisited pgs. 13-17, Ch. 4-7 Ch. 11
Ch. 10 Reading Ch. 12
14 - Quasi-experimental research (cont.)
15 - Survey research
16 - Final Exam

Other Useful Readings

American Educational Research Association. (2006). Standards for reporting on empirical social science research in AERA publications. Educational Researcher, 35(6), 33–40.
Aivazidis, C., Lazaridou, M., & Hellden, G. F. (2006). A comparison between a traditional and an online environmental educational program. The Journal of Environmental Education, 37(4), 45–54.
Batiuk, M. E., Boland, J. A., & Walker, N. (2004). Project trust: Breaking down barriers between middle school children. Adolescence, 39(155), 531–538.
7P:220.
Briggs, D. C., Ruiz-Primo, M. A., Furtak, E., & Shepard, L. (2012). Meta-analytic methodology and inferences about the efficacy of formative assessment. Educational Measurement: Issues and Practice, 31(4), 13–17.
de Anda, D. (2006). Baby think it over: Evaluation of an infant simulation intervention for adolescent pregnancy prevention. Health & Social Work, 31(1), 26–35.
Feinman, R. D. (2009). Intention-to-treat: What is the question? Nutrition & Metabolism, 6(1).
Han, H., Lee, J., Kim, J., Hedlin, H. K., Song, H., & Kim, M. T. (2009). A meta-analysis of interventions to promote mammography among ethnic minority women. Nursing Research, 58(4), 246–254.
Harwell, M., & LeBeau, B. (2010). Student eligibility for a free lunch as an SES measure in education research. Educational Researcher, 39(2), 120–131.
Hughes, E. K., Gullone, E., Dudley, A., & Tonge, B. (2010). A case-control study of emotion regulation and school refusal in children and adolescents. Journal of Early Adolescence, 30(5), 691–706.
Kingston, N., & Nash, B. (2011). Formative assessment: A meta-analysis and a call for research. Educational Measurement: Issues and Practice, 30(4), 28–37.
Kingston, N., & Nash, B. (2012). How many formative assessment angels can dance on the head of a meta-analytic pin: .2. Educational Measurement: Issues and Practice, 31(4), 18–19.
Leake, M., & Lesik, S. A. (2007). Do remedial English programs impact first-year success in college? An illustration of the regression-discontinuity design. International Journal of Research & Method in Education, 30(1), 89–99.
Liu, Y. (2007). A comparative study of learning styles between online and traditional students. Journal of Educational Computing Research, 37(1), 41–63.
Miller, G. A., & Chapman, J. P. (2001). Misunderstanding analysis of covariance. Journal of Abnormal Psychology, 110(1), 40–48.
Roberts, J. V., & Gebotys, R. J. (1992). Reforming rape laws: Effects of legislative change in Canada. Law and Human Behavior, 16(5), 555–573.
Robinson, T. N., Wilde, M. L., Navracruz, L. C., Haydel, K. F., & Varady, A. (2001). Effects of reducing children’s television and video game use on aggressive behavior. Archives of Pediatrics & Adolescent Medicine, 155, 17–23.
Russo, M. W. (2007). How to review a meta-analysis. Gastroenterology & Hepatology, 3(8), 637–642.
Shavelson, R. J., & Towne, L. (Eds.). (2002). Scientific research in education. Washington, DC: National Academy Press.
Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366.
Steiner, P. M., Wroblewski, A., & Cook, T. D. (2009). Randomized experiments and quasi-experimental research designs in educational research. In K. E. Ryan & J. B. Cousins (Eds.), The Sage International Handbook on Educational Evaluation. Thousand Oaks, CA: Sage.
Sterner, W. R. (2011). What is missing in counseling research? Reporting missing data. Journal of Counseling & Development, 89, 56–62.
Swern, A. S. (2010). A story of evidence-based medicine: Hormone replacement therapy and coronary heart disease in postmenopausal women. Chance, 23(3), 52–56.
Tracz, S. M., Nelson, L. L., Newman, I., & Beltran, A. (2005). The misuse of ANCOVA: The academic and political implications of type IV errors in studies of achievement and socioeconomic status. Multiple Linear Regression Viewpoints, 31(1), 16–21.
West, S. G., & Thoemmes, F. (2010). Campbell’s and Rubin’s perspectives on causal inference. Psychological Methods, 15(1), 18–37.

Other Sources

American Educational Research Association. (2000). Ethical Standards of the American Educational Research Association. Washington, DC: Author.
American Psychological Association. (2010). Publication manual of the American Psychological Association (6th ed.). Washington, DC: Author.
American Psychological Association. (2002). Ethical principles of psychologists and code of conduct. American Psychologist, 57, 1060–1073.
Armijo-Olivo, S., Warren, S., & Magee, D. (2009). Intention to treat analysis, compliance, dropouts and how to deal with missing data in clinical research: A review. Physical Therapy Reviews, 14(1), 36–49.
Berk, R. A., & Freedman, D. (2005). Statistical assumptions as empirical commitments. In T. G. Blomberg & S. Cohen (Eds.), Punishment and Social Control: Essays in Honor of Sheldon Messinger (2nd ed.; pp. 235–254). Piscataway, NJ: Aldine.
Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56(2), 81–105.
Cohen, J. (1990). Things I have learned (so far). American Psychologist, 45(12), 1304–1312.
Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49(12), 997–1003.
Cole, J. C. (2008). How to deal with missing data: Conceptual overview and details for implementing two modern methods. In J. W. Osborne (Ed.), Best practices in quantitative methods (pp. 214–238). Thousand Oaks, CA: Sage.
Cook, T. D. (2002). Randomized experiments in educational policy research: A critical examination of the reasons the educational community has offered for not doing them. Educational Evaluation and Policy Analysis, 24(3), 175–199.
Cook, T. D. (2008). Waiting for life to arrive: A history of the regression-discontinuity design in psychology, statistics and economics. Journal of Econometrics, 142, 636–654.
Cowles, M., & Davis, C. (1982). On the origins of the .05 level of statistical significance. American Psychologist, 37(5), 553–558.
DeVaney, T. A. (2001). Statistical significance, effect size, and replication: What do the journals say? The Journal of Experimental Education, 69(3), 310–320.
Freedman, D. (1999). From association to causation: Some remarks on the history of statistics. Statistical Science, 14(3), 243–258.
Hall, N. S. (2007). R. A. Fisher and his advocacy of randomization. Journal of the History of Biology, 40, 295–325.
Hall, M. V., Wilson, L. A., & Sanger, M. J. (2012). Student success in intensive versus traditional introductory college chemistry courses. Journal of Chemical Education, 89, 1109–1113.
Holland, P. W. (1986). Statistics and causal inference. Journal of the American Statistical Association, 81(396), 945–960.
Judd, C. M., McClelland, G. H., & Culhane, S. E. (1995). Data analysis: Continuing issues in the everyday analysis of psychological data. Annual Review of Psychology, 46, 433–465.
Kenny, D. A., & Judd, C. M. (1986). Consequences of violating the independence assumption in analysis of variance. Psychological Bulletin, 99(3), 422–431.
Lehrer, J. (2010, December 13). The truth wears off: Is there something wrong with the scientific method? The New Yorker. Retrieved from http://www.newyorker.com/reporting/2010/12/13/101213fa_fact_lehrer.
Lohman, D. F., & Korb, K. A. (2006). Gifted today but not tomorrow: Longitudinal changes in ability and achievement during elementary school. Journal for the Education of the Gifted, 29(4), 451–484.
Lord, F. M. (1967). A paradox in the interpretation of group comparisons. Psychological Bulletin, 68(5), 304–305.
Lord, F. M. (1969). Statistical adjustments when comparing preexisting groups. Psychological Bulletin, 72(5), 336–337.
Messick, S. (1995). Validity of psychological assessment: Validation inferences from persons’ responses and performances as scientific inquiry into score meaning. American Psychologist, 50(9), 741–749.
Osborne, J. W. (2000). Advantages of hierarchical linear modeling. Practical Assessment, Research & Evaluation, 7(1). Retrieved January 23, 2008 from http://PAREonline.net/getvn.asp?v=7&n=1.
Pearl, J. (2009). Causal inference in statistics: An overview. Statistics Surveys, 3, 96–146.
Robison, W., Boisjoly, R., Hoeker, D., & Young, S. (2002). Representation and misrepresentation: Tufte and the Morton Thiokol engineers on the Challenger. Science and Engineering Ethics, 8, 59–81.
Rodriguez, M. C. (2005). Three options are optimal for multiple-choice items: A meta-analysis of 80 years of research. Educational Measurement: Issues and Practice, 24(2), 3–13.
Rubin, D. B. (2008). For objective causal inference, design trumps analysis. The Annals of Applied Statistics, 2(3), 808–840.
Schneider, B. (2004). Building a scientific community: The need for replication. Teachers College Record, 106(7), 1471–1483.
Schooler, J. (2011). Unpublished results hide the decline effect. Nature, 470, 437.
Schreiber, J. B., & Griffin, B. W. (2004). Review of multilevel modeling and multilevel studies in The Journal of Educational Research (1992-2002). The Journal of Educational Research, 98(1), 24–33.
Shadish, W. R. (1995). Philosophy of science and the quantitative-qualitative debates: Thirteen common errors. Evaluation and Program Planning, 18(1), 63–75.
Shadish, W. R., Clark, M. H., & Steiner, P. M. (2008). Can nonrandomized experiments yield accurate answers? A randomized experiment comparing random and nonrandom assignments. Journal of the American Statistical Association, 103(484), 1334–1356.
Shadish, W. R., & Cook, T. D. (1999). Design rules: More steps toward a complete theory of quasi-experimentation. Statistical Science, 14, 294–300.
Shadish, W. R., & Cook, T. D. (2009). The renaissance of field experimentation in evaluating interventions. Annual Review of Psychology, 60, 607–629.
Shadish, W. R., Galindo, R., Wong, V. C., Steiner, P. M., & Cook, T. D. (2011). A randomized experiment comparing random and cutoff-based assignment. Psychological Methods, 16(2), 179–191.
Shea, C. (2011, November 13). Fraud scandal fuels debate over practices of social psychology. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/As-DutchResearch-Scandal/129746/.
Sheiner, L. B., & Rubin, D. (1995). Intention-to-treat analysis and the goals of clinical research. Clinical Pharmacology & Therapeutics, 57(1), 6–15.
Shields, S. P., Hogrebe, M. C., Spees, W. M., Handlin, L. B., Noelken, G. P., Riley, J. M., & Frey, R. F. (2012). A transition program for underprepared students in general chemistry: Diagnosis, implementation, and evaluation. Journal of Chemical Education, 89, 995–1000.
Smith, M. L., & Glass, G. V. (1977). Meta-analysis of psychotherapy outcomes. American Psychologist, 32, 752–760.
Thistlethwaite, D. L., & Campbell, D. T. (1960). Regression-discontinuity analysis: An alternative to the ex post facto experiment. The Journal of Educational Psychology, 51(6), 309–317.
Wainer, H. (1992). Understanding graphs and tables. Educational Researcher, 21(1), 14–23.
Wainer, H. (1999a). One cheer for null hypothesis significance testing. Psychological Methods, 4(2), 212–213.
Wainer, H. (1999b). Is the Akebono school failing its best students? A Hawaiian adventure in regression. Educational Measurement: Issues and Practice, 18(3), 26–31.
Wainer, H., & Brown, L. M. (2004). Two statistical paradoxes in the interpretation of group differences: Illustrated with medical school admission and licensing data. The American Statistician, 58(2), 117–123.
Wainer, H., & Robinson, D. H. (2003). Shaping up the practice of null hypothesis significance testing. Educational Researcher, 32(7), 22–30.
Wilkinson, L., & the Task Force on Statistical Inference. (1999). Statistical methods in psychology journals. American Psychologist, 54, 594–604.

Other Useful Books

Campbell, D. T., & Stanley, J. C. (1963). Experimental and quasi-experimental designs for research. Boston, MA: Houghton Mifflin.
Booth, W. C., Colomb, G. G., & Williams, J. M. (2003). The craft of research (2nd ed.). Chicago, IL: The University of Chicago Press.
Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design and analysis for field settings. Boston, MA: Houghton Mifflin.
Freedman, D. (2010). Statistical models and causal inference: A dialogue with the social sciences (D. Collier, J. S. Sekhon, & P. B. Stark, Eds.). New York, NY: Cambridge.
Murnane, R. J., & Willett, J. B. (2011). Methods Matter: Improving Causal Inference in Educational and Social Science Research. New York, NY: Oxford University Press.
Strunk, W., & White, E. B. (2000). The elements of style (4th ed.). Needham Heights, MA: Allyn & Bacon.
Tufte, E. R. (2001). The visual display of quantitative information (2nd ed.). Cheshire, CT: Graphics Press.