Game's

advertisement

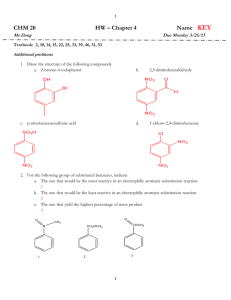

Character Artificial Intelligence CE00875-3 Introduction to Agent-based AI Lecture 2 Agents, Animats and Game Platforms • • • • • What are agents and artificial life? Considerations for game AI Goal orientation, planning and control Reactive techniques for games Reflective vs Reactive behaviour Agents • Arguably the most important development in AI of the • • • • last decade has been the notion of agents Comes from the philosophical notions of action, and the notion of Rational Economic Man An agent is a self-contained bundle of software with the characteristics of autonomy, action and rationality Related to, but more sophisticated than, the notion of an object in OOL. Higher level of abstraction, in which what counts is the choice of action in response to goals and situation. Newell’s concept of behavioural laws governing action eg An agent will choose the action most likely to lead to an outcome that matches one of its goals. • In a multi-agent system complex behaviour emerges as the result of the interactions between many, relatively simple agents according to social principles Artificial Life – Cellular automata • • A-life shares some elements with agent research, especially the multi-agent approach • A few rules control the birth, survival and death of counters on a rectangular grid, to produce growing, complex patterns over cycles Earliest forms were a class of game called cellular automata eg Conway’s Life Cells can be either live (contain counter) or dead (no counter). Each cell has 8 neighbours. To decide what will happen in a cell for each cycle, count the number of live and dead cells 1) A dead cell with exactly 3 live neighbors becomes a live cell (birth). 2) A live cell with 2 or 3 live neighbors stays alive (survival). 3) In all other cases, a cell dies or remains dead (overcrowding or loneliness). Artificial Life - Norns • However, A-life today is about making biologically plausible software models •Some of this work has resulted in extremely valuable creatures which may be used in game-playing scenarios eg Norns in Cyberlife’s Creatures • Norns have a simulated body, with a ‘digestive system’ and ‘biochemistry’ Image: Cyberlife/New Scientist Considerations for Game AI • Are the AI approaches applicable to the • software design of NPCs? ie able to provide: – control of primitive behaviours • eg picking things up, pressing switches, using objects, etc. – movement • move around the game world, dealing with obstacles and navigating to target destinations – decision-making • working out what tasks to perform, and in what order • Traditionally, these things were done by laborious, detailed, procedural programming now becoming more difficult as games evolve • From a games point of view, use of AI not important Considerations for Game AI • In nouvelle game AI, we consider how the needs of • • game designers work for (and against) AI methods In games, some NPC characters need to behave intelligently to be believable and entertaining – maybe easier to accomplish with AI programs... ...but only if the program can really perform (very fast response to new situation) - challenging for AI • Software control of actions within a game has until recently needed to be explicit – ie scripting • For each of a number of situations arising in the game, a standard behaviour response is rigidly encoded • More recently, agent and other AI technologies have enabled implicit control – NPC assesses situation, chooses its action with respect to its own goals Considerations for Game AI • Basic conflict for game design: Building in intelligence allows NPC characters to behave autonomously, yet human designers need to control them, to make the game work as expected • Eg many games follow a script like a movie, so that a certain sequence of events happens to unfold a story line. If NPCs (especially learning ones) can run their own show, they might not stick to the script! • What role would intelligent decisions have in a scripted storyline? • One idea would be implicit design – no explicit script, just the elements of an interesting world and both player and NPC improvise • Another is to alternate sequences of scripted action (in which the NPCs intelligence is switched off) with free form action (in which the intelligence is on). Might be difficult to keep on track though! Goal-orientation, planning • Goals may be defined as a representation of a state of affairs which is marked as somehow desirable, to be achieved • Maintenance goals involve making sure some state of affairs is not lost (eg keep breathing) • Achieving a goal may involve a number of actions to move the actual state of the world closer to the desired state (eg move toward a source of food or target) • It might not be clear what to do next to get closer to a goal – planning is needed to select and order individual actions • All this requires collecting and maintaining models of the world- state, comparing them to goal states and then making decisions • This can take a lot of design and a lot of computation • Planning programs exist, but they tend to be slow and complex The problem of planning • Conventional AI planning program requires • i) a list of goals represented using a knowledge representation • ii) a problem in the form of some data representing opportunities or problems • iii) a set of possible actions which the machine can choose from iv) a planning algorithm which organises actions into a optimal plan The program would then do standard information processing: 1) accepts these as data files 2) process the data 3) prints out an optimal plan, ie ordered list of actions Reflective vs Reactive Behaviour • This distinction is important to game development, mostly because of the need for fast reactions • Early AI tended to be reflective – meaning that a problem- solving algorithm would exhaustively consider all possible courses of action, then choose the optimal (in terms of goal satisfaction) one • Humans don’t really do that, though. They satisfice, which means come up with a “good enough” solution, but more quickly, especially when time is an issue. Saves mental effort • Reflective programs build representational models from input data, evaluate or reason about them, plan a course of action then output it – expensive in computing power • Reactive programs use the world as their model. They interact directly with the world, exploiting simple signals and regularities there, to get behaviour which is not perfect, but fast • Now game AI tends to be mostly reactive One does not want to be stuck in high-level planning when there’s an emergency bearing down on you <train bearing down> Image: Benjamin Hobson, The Canal Gallery Maybe it’s quicker and easier to sense the world and act directly on it, instead of building and maintaining internal models of the world This is how insects are able to do a lot of clever things without a big brain and lots of memory Image: Mike Williams Animats • To simplify writing of AI code, the environment and integrating the two, we can use a standard development platform, Unreal Development Kit, UDK • Creates embodied bots ie that have a (simulated) body, are subject to physical constraints in the (simulated) world and do not have full information about the game • Just as human game players has an avatar in the game world, so a disembodied AI software controls its own avatar • Unreal is designed to support development of an first person shooter games. • The game developer specifies the interfaces, modules and architectures of an bot in a given world, in a domain-specific language called Uscript but has a myriad of other features which support various game-specific AI concepts. Reactive Techniques for Games • Another advantage of reactive designs is that they tend to be deterministic – fully specified by inputs • That means reactive code can very simple, easy to test and be highly optimised for speed eg - lookup tables scripts rule-based systems Finite State Machines •Benefits of bots - fits in with the idea of embodiment very well - environment can be enriched which provides more information to the bot - most learning techniques are based on reactive mappings - easy to create, test and maintain Environments, simulations, platforms • According to the notion of embodiment, the best place for an AI in immersed in the world, connected to it by many sensors and actuators Eg a robot roams about a house, sensing with cameras, microphones and using motorised limbs to move • Game AI modifies this slightly, and says the ‘world’ doesn’t have to be physical – it can be a computer simulation of the real world – Advantages • Cheaper and smaller than robots in the real world • Can develop and make changes faster in simulation • Can’t do any harm if it goes wrong – Disadvantages • world may be too simple to properly challenge an AI program • world may not be realistic, or even logically consistent • may have to build - or at least configure - an artificial world; extra work • In game AI, we are more or less forced use to a simulated world. But this is still better than prepared, cut-and-dried data sets Reactive animat, search and obstacle avoidance • • • • • • • Game’s “Physical” Environment Machine Vision Representing Space in the Game World Movement in the Game World Navigation in the Game World Obstacle Avoidance A Reactive Control System Game’s “Physical” Environment • We may distinguish between two aspects of a game environment: Structure - topography of the environment as it constrains movement (physics and layout of walls, paths, obstacles etc. Detail – graphical appearance of the game world and placement of objects which don’t impede movement • What about players, NPCs and monsters? Really need to consider moving things as a third category, especially when interactions go beyond simply destroying everything you see • Humans perceive the world mostly visually through detail, while AI sees the world a as simplified data structures and must interpret these as well as it can • We can try to make an AI interpret the graphical world directly, as if it was seeing through eyes, but such machine vision has proven to be very difficult to program and expensive to compute (at least at a human level of skill) • It is an important concept of nouvelle game AI that an animat should only have local, not global, knowledge of the game (like a human) • Having complete, perfect knowledge of the world is not good for AI or games Machine Vision • Getting a machine to see is a traditional sub-discipline of AI • A typical system might involve a camera returning a digitised image, interpretive software and some kind of output arrangement • Eg handwritten letter recogniser – easy pattern recognition software digital camera raw image ASCII for ‘2’ 00110010 data representation • To get more sophisticated output information requires more complex processing. Eg. scene analysis to aid robot navigation – hard • The output for that would be information enabling the robot to identify particular objects, or find their range and bearing, to help navigate around them Face recognition from security video is now a mainstream application of machine vision Representing Space in the Game World • How space is represented is important • 2D vs 3D – how the location of objects is encoded in the structure, not how the detail makes the world appear • Discrete vs continuous – meaning whether objects are placed in a grid or matrix with a finite number of locations or not (eg chess vs marbles) • Representation of time - also discrete (turn-taking) or continuous (stream of consciousness) • Conversions – discrete vs continuous is a relative matter, since a fine enough unit size (grid or time-steps) may be considered continuous in practice • In fact, all representations in computers must ultimately be discrete, approximating continuous to a greater or lesser degree! Movement in the Game World • At present, game engines provide a locomotion layer which abstracts basic movement actions away from the strategic control of direction • Physics simulation is required to handle gravity, throwing, fire, water etc. • Low level motion might now Control (forward/backward, turns) signals from user or parameters via API from the decision-making AI be handled by the AI Collision detection Physics in the game signals a collision halting (forward) motion Image: Chris Bayliss Integration signals from environment alter behaviour as appropriate (eg falls) Simulation loop (walking, running) physics handles displacement animation handles limb cycling locomotion layer Representing Space in the Game World • In a classical AI navigation experiment, travel paths in the world model might be represented at design-time as a graph, with nodes representing rooms and arcs representing passages or doors between them • Finding an optimal path from a current location to a target was then a matter of search on the graph • There are well-studied and good search algorithms available Search - Basics • Uninformed search algorithms simply follow a pattern to examine all nodes until one containing the goal is found (aka “brute force”) • Depth-first search - start at a root and explore as far as possible along each branch before backtracking until goal is found • Breadth-first search - start at a root and explore all neighbouring nodes, then for each neighbour, explore their unexplored neighbours, and so on until goal is found On this graph, starting at A, choosing left nodes before right and remembering previously visited nodes: - Depth First Search visits nodes in this order: A,B,D,F,E,C,G -Breadth First Search visits nodes in this order: A,B,C,E,D,F,G Search – Using Domain Information • Informed search algorithms use some method to choose intelligently which • • • • • • node to search next Best-first search – modifies breadth-first method to order all current paths by a heuristic which estimates how close the end of the path is to a goal. Paths that are closest to a goal are extended first. A* search - is a best-first method that calculates a cost and an estimate for the path leading to each node: Cost is zero for the first node; for all others, cost is the cumulative sum associated with all its ancestor nodes plus the cost of the operation which reached the node (e.g. linear distance) An estimate measures how far a given node is thought to be from a goal state (e.g. intensity on a sensory gradient) Cost and estimate are summed to score each path for eligibility for exploration For more detail, view the ‘Graph Search Methods’ link on the website Navigation in the Game World • There are problems with this kind of classical search however: – Depends on global information at design-time, so – Question of realism arises – not comparable with the limited viewpoint of real biological creatures – A lot of information may overwhelm decision-making processes – Information does not update dynamically via sensors, so cannot track changes (eg moving creatures in the world) • Nouvelle game AI animats are (virtually) embodied, which implies that - They have a (simulated) limited perceptual system which updates the AI continuously - They need more plausible navigation algorithms which can work on limited information and in real time • For now, we are interested in reactive solutions • Fortunately, such solutions have been studied for the design of robots Modelling a Bot’s State in Space • For many (but not all) AI models, need a description of the animat’s position and orientation in space, as well as how it will move Animat (3,3) Object (3,2) Object (6,1) (0,0) World Origin Absolute Coordinate System Animat (0,0) Egocentric Origin Relative Coordinate System 90 deg angles any angle any distance Continuous moves and turns unit distance Discrete moves and turns Obstacle Avoidance – Basic functionality • Finding one’s way around obstacles is fundamental to • • 1. navigation Well suited to implementation by a reactive control system Begins from general principles: When no obstacle is sensed, move ahead normally (straight or wandering randomly) 4 2. If wall detected on one side, veer away slowly 3. If obstacle detected ahead, turn to one side to avoid it 4. When stuck in a corner, a more radical turn is needed 3 2 1 A Reactive Control System • A reactive system requires three elements: • 1) a set of perceptual and action functions, which apply in a particular situation • 2) a mapping showing which percepts release which behaviours. That means a theory about how the animat behave (note related to idea of behavioural laws for agents). See previous slide. • An if-else-if-else structure could be used to order calls to perceptual and motor functions – procedural • A rule-based system (later topic!) is another possibility – declarative • UDK is based on the client-server model. Requests by a client are made and do not return until the server has computed a result. Usually based on simple function calls. Commonly used for the delegation of tasks A Reactive Control System • Could also use asynchronous events. Based on something called the observer design pattern: have a set of behaviours ready to go and a set of events that will trigger them. A kind of event-based processing • These can interrupt another routine and transfer control when something comes up unexpectedly • These should operate in ‘parallel’, competing for control of the animat’s body => need for 3) arbitration in case of a tie • How are competing motor outputs combined to control the limbs? - Independent sum – different outputs connected to different effectors so that they cannot clash - Combination – some formula (eg weighted sum) combines two or more outputs to blend them into output commands - Suppression – certain components get priority over others, and can replace their output with their own (eg. subsumption architecture) - Sequential – output of different behaviours getting control at alternate times Subsumption architecture works on real robots! Motor load Random Act Bump switches Escape IR proximity Avoid Photosensors Follow Sensory triggers Cruise s s s s s Motor command Heroes of AI #3 – The Radical • The radical idea that sophisticated robot behaviour could be accomplished without high-powered computing was advanced in the 1980s by ex-patriot Australian engineer Rodney Brooks. At the time robots were slow and clumsy, using classical planning to compute their motions • Brooks argued that robots could interact directly with the world via properly designed reactive network of sensors and actuators, and created a number of behaviour-based control architectures for robots. Without the need for complex representations. In the 1990s he and his students at the MIT robotics lab demonstrated ever more ingenious robots that used his subsumption architecture. • Brooks was featured in a 1997 documentary called “Fast, Cheap and Out of Control’, the name of his paper to the British Interplanetary Society arguing that insect-like robots could be used for exploration of the solar system. • Formed a company called iRobot, which now provides pack-bot robots to the US military as well as mass producing Rhoomba floor-cleaning robots • Latest and most demanding project is Cog, a behaviour-based humanoid robot Summary • Agents are an AI development which enables software to choose actions autonomously to achieve one or more goals which it has • Artificial life is an attempt to model the more biological aspects of life, such as reproduction (Life) or the digestion of food (eg Norns) • Both these technologies can be used to make lifelike, believable and entertaining NPCs for computer games • The software control of NPCs can be explicit (eg scripts) or implicit (eg rational agent) • Good control of gameplay in interactive games is part of the art • In conventional AI, accomplishment of goals required planning, which could be complex and processor-intensive • Conventional AI planners were reflective, which means they did not excel in time critical situations (like games) • Behavioural control in games tends to be reactive, which means less logical modeling of the world and more reacting directly to stimuli Summary • Game virtual worlds generally distinguish structure and detail. Moving objects could be considered a third category • Humans see detail, but game characters generally interact via structure • Machine vision is an important but difficult sub-field of AI • The representation of space and time may be 2D or 3D, discrete or continuous • Present game engines provide a locomotion layer which abstracts basic movement actions away from the strategic control of direction. In future AI may automate basic interactions with world • Travel paths in through space may be represented as graphs. These are traditionally searched by search methods such as breadth-first, depth-first, or A* search. Such search is a general problem-solving method • Reactive control systems require 1) perceptual & action functions 2) a mapping from percepts to actions representing a theory of behaviour and 3) a method to arbitrate conflicts – to resolve which action will be taken in case of a tie • Could be implemented procedurally as if-else-if statement or declaratively as a set of rules in a RBS References • Brooks, R. & Flynn, A. Fast, Cheap and Out of Control: The Robotic Invasion of the Solar System. Journal of The British Interplanetary Society, Vol. 42, 1989, 478-485. • Newell, A. The Knowledge Level, Artificial Intelligence, 1982, 18, 87-127 • Martin Gardner. The fantastic combination of John Conway's new solitaire game of “life”. Scientific American, 1970, 223, 120-123 • Cliff D. & Grand S. The Creatures Global Digital Ecosystem. Artificial Life, 1999, 5, 1, 77-93