What processes enable us to control uncertainty?


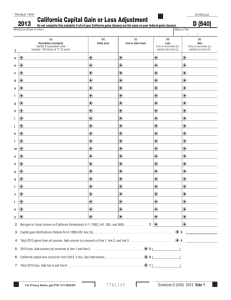

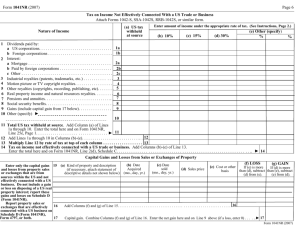

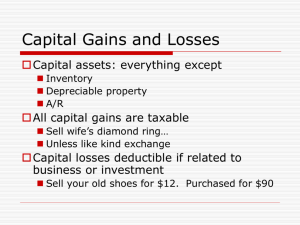

THE TAMING OF UNCERTAINTY
Magda Osman, QMUL
Head of Dynamic Learning and Decision-making Lab
www.magdaosman.co.uk
m.osman@qmul.ac.uk

The Taming of Uncertainty
Act 1: The Comedy of Gains & Losses
Act 2: The Tragedy of Unknowing
Act 3: The Triumph of Heroes

Scene setting

Decision-Making Scenarios
Meder, Le Lec & Osman (2013). Trends in Cognitive Sciences

Types of Uncertainty
Meder, Le Lec & Osman (2013). Trends in Cognitive Sciences

How are dynamic situations studied in the lab?
• Microworlds: mini computerised situations that mimic uncertain domains, in which the participant interacts with the system and then attempts to control various outcomes
1. A brief scenario is presented
2. The participant is then shown the computer-based task
3. They have a set number of trials in which to manipulate variables in order to control an outcome to criterion
4. This is followed by knowledge tests designed to assess their understanding of the rules or causal structure connecting the input variables to the output variables

Characters

Dynamic Decision-Making (DDM)
“…is a goal directed process that involves selecting actions that will reliably achieve and maintain the same outcome over time” (Brehmer, 1992)
• Where can DDM be found?
• Changes occur either endogenously and/or exogenously
• What are the defining characteristics of DDM?
• Sequential, interdependent, online
Osman (2010). Psychological Bulletin

Agency & Control
Choice involves a selection between alternatives; inherent in this is an action (mental/physical) that identifies the preferred choice
Control “…is the combination of cognitive processes needed to co-ordinate actions in order to achieve a goal on a reliable basis over time”
Agency “…is the overarching state or sustained experience that is concerned with ownership of and responsibility of observed actions”
Osman (2014). Future-minded: The psychology of agency and control

Heroes vs.
Villains
• Bounded Rationality
• Unconscious
• Reinforcement learning
• Invalidity
• Causality
• Unreliability

Plot

Advances in DDM Research
Machine learning / Animal learning / Neuroscience: Huys & Dayan (2009), Dayan (2009), Daw, Niv & Dayan (2005), Dickinson (1985), Matignon, Laurent & Fort-Piat (2006)
Dynamic control / Naturalistic decision-making tasks / Contingency learning / Management Science: Brehmer (1992), Busemeyer (1999), Edwards (1962), Kirlik, Miller & Jagacinski (1993), Sterman (1989)
So, what are the key influences on Dynamic Decision-Making?

Monitoring & Control Theory (Osman, 2008, 2010, 2011)
The agent
The agent is engaged in a goal-directed way (goals) in order to achieve a certain outcome (reward), and repeatedly behaves as if an action will achieve and maintain a certain goal (sense of agency/contingency learning)
The situation
The actions of the agent are informed by state changes in the environment and by the feedback/reward structure, in which the state changes are potentially knowable
Simple prediction
Only manipulations of Agency/Goals/Reward/Feedback/Contingency should impact decision-making performance

Synopsis

Research Programme
Learning:
- Mode of learning (prediction vs. control) (observation vs. action)
- Type of goal (Exploration vs. Exploitation)
Sense of Agency: (Low vs. High)
Contingency: (Extreme, High, Moderate, Low)
Performance feedback: (Positive, Negative, Both, Neither)
Reward: (Gains, Losses)

Preamble

Losses vs.
Gains
Lab-based Decision-making: Hedonic principle + Loss aversion = Losses are generally more salient than gains (a la Prospect theory)
So, losses should drive learning more effectively
Real-world Decision-making: During early stages of learning negative rewards lead to improved performance
So, losses should drive learning more effectively
(Brett & VandeWalle, 1999; Kahneman & Tversky, 1970; Latham & Locke, 1990; Tabernero & Wood, 1999)

Experimental Set up
Experimental set up
• 100 Learning trials
• 20 Test trials (reward is performance related): Familiar goal
• 20 Test trials (reward is performance related): Unfamiliar goal
Actions
• Intervene on input 1, input 2, input 3, to no intervention
• Value setting (1-100)
Goal of task
• Learnt to control a dynamic outcome to a specific goal
Reward set up
• Maximize gains vs. Minimize losses
• Points convert to money

Structure
Reward is based on the discrepancy between achieved and target value
• Gains +10 (maximum gain), Losses -5 (minimum loss): the outcome value is closer to the target than on the previous trial
• Gains +5 (minimum gain), Losses -10 (maximum loss): the outcome value is further from the target than on the previous trial
But point assignment is probabilistic, 80% reliable
Total points in Test 1 + Test 2 * 2.5 = final wins

Structure of Task Environment
3 inputs, 1 output (continuous variables)
$y(t) = y(t-1) + b_1 x_1(t) + b_2 x_2(t) + e_t$
• Output value = $y(t)$
• Previous output value = $y(t-1)$
• Positive input = $b_1$ = 0.65
• Negative input = $b_2$ = -0.65
• Random noise = $e_t$
(The third input is a null input)
The random noise was drawn from a normal distribution with mean of 0, SD 8 (Intermediate Noise)

Act 1: The Comedy of Gains & Losses
Learning Performance: outcome feedback every trial
Trials 1-100
N = 40 (Gains N = 20, Losses N = 20)
- No differences in learning patterns
Test Performance: outcome feedback every trial
[Figure panels: Test 1, Test 2]
Limited difference in test performance

Act 2: The Tragedy of Unknowing
Learning Performance:
Outcome feedback every 5th trial
[Figure: learning performance over trials 1-100, Gains vs. Losses groups]
N = 50 (Gains N = 25, Losses N = 25)
- Advantage for gains group
Test Performance: Outcome feedback every 5th trial
[Figure: test performance by test trial, panels Test 1 and Test 2, Gains vs. Losses groups]
Small but reliable advantage for the Gains group in test performance

Comparison of both Experiments
Learning Performance: Exp 1a, Exp 1b
Test Performance: Exp 1a, Exp 1b

Final Act: The Triumph of Heroes

Best Circumstances for DDM
Despite impoverished information (Exp 1b), DDM is robust enough that learning is possible, but only when maximizing gains
1. High quality (but simple) outcome information presented frequently, with reward signals – socially framed]
2. Incentivization schemes make a difference but only under extreme uncertainty [avoid punishment schedules under these conditions]
[Not

Strategies
When the conditions are highly unstable people
1. intervene on the system a lot
2. make dramatic changes in parameter setting
3. change multiple variables at once
When it is highly stable people
1. intervene on the system very little
2. make conservative changes in parameter setting
3. make minimal systematic changes to variables
Under both conditions people seem to stick to their choice of strategy rather than switch over time

Monitoring and Control
• Simple prediction
• Only manipulations of Agency/Goals/Reward/Feedback/Contingency should impact decision-making performance
• This has been supported in several studies
• Critically, the above factors impact on forecasting behaviour as well as DDM (control behaviour)
• Critically, there is NO evidence that these factors have differential effects on DDM/forecasting because they tap into different “systems” (i.e. System 1 vs.
System 2)

Thanks to
Researchers / Undergraduate Students
Patrycja Marta Bartoszek Zuzanna Hola Bjoern Meder Brian Glass Agata Ryterska Maarten Speekenbrink Susanne Stollewerk

Exploitation, Optimality, Variability
[Figure panels: Exploitation, Optimality, Variability]
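
The task environment and the reward rule described on the "Structure of Task Environment" and reward "Structure" slides can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the lab's actual task code. The function names `step` and `reward`, the starting value of 50, and the target of 62 are hypothetical. The slides do not say what happens on the 20% of trials where point assignment is unreliable, so the sketch assumes the points for the opposite outcome are awarded; it also assumes that a tie counts as "further".

```python
import random

B1 = 0.65        # weight of the positive input (from the slide)
B2 = -0.65       # weight of the negative input (from the slide)
NOISE_SD = 8.0   # SD of the Gaussian noise (intermediate-noise condition)

# Points per frame, depending on whether the outcome moved closer to the target.
POINTS = {
    "gains": {"closer": 10, "further": 5},
    "losses": {"closer": -5, "further": -10},
}


def step(y_prev, x1, x2, noise_sd=NOISE_SD, rng=random):
    """Return y(t) = y(t-1) + b1*x1(t) + b2*x2(t) + e_t.

    The third (null) input has no effect on the output, so it is omitted.
    """
    return y_prev + B1 * x1 + B2 * x2 + rng.gauss(0.0, noise_sd)


def reward(frame, y_prev, y_now, target, reliability=0.8, rng=random):
    """Return the points for one trial under the 'gains' or 'losses' frame."""
    # Assumption: equal distance to the target counts as "further".
    closer = abs(y_now - target) < abs(y_prev - target)
    # Assumption: on unreliable trials the opposite outcome's points are given.
    if rng.random() >= reliability:
        closer = not closer
    return POINTS[frame]["closer" if closer else "further"]


if __name__ == "__main__":
    rng = random.Random(1)            # seeded only to make the demo repeatable
    y, target, total = 50.0, 62.0, 0  # hypothetical start and target values
    for _ in range(20):
        y_new = step(y, x1=10.0, x2=0.0, rng=rng)
        total += reward("gains", y, y_new, target, rng=rng)
        y = y_new
    print("total points over 20 trials:", total)
```

Passing `noise_sd=0.0` or `reliability=1.0` removes the random components, which makes it easier to check the deterministic part of each rule by hand.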