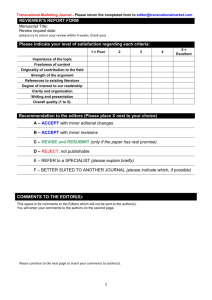

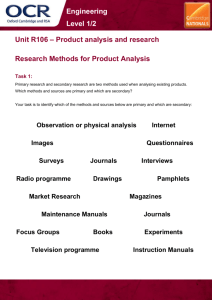

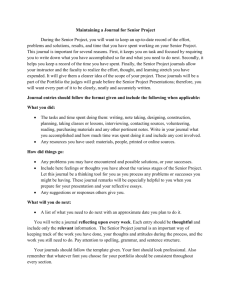

The European Journal of Political Research and DA-RT: when laggards learn from best practice
Yannis Papadopoulos, University of Lausanne and EJPR co-editor

European Journal of Political Research
• EDITORS
- Claudio Radaelli, University of Exeter (2009-2015)
- Yannis Papadopoulos, University of Lausanne (2012-2015, renewable)
- Cas Mudde, University of Georgia (2015-2018, renewable)
• EDITORIAL MANAGER
- Oliver Fritsch, University of Leeds (2009-2015?)

• 3 ECPR journals (EJPR + EPSR + EPS) + PDY + Press
• EJPR « flagship »: 2-year impact factor: 2.152; ranking 2013: 8/156 (Political Science)
• Generalist
• Since 2014: 4 issues per year, each with 11-12 articles
• 2013: 267 manuscripts submitted, rejection rate about 90%
• Turnaround time: 35 days (mean)

Gherghina, Sergiu and Alexia Katsanidou (2013), “Data availability in Political Science Journals”, European Political Science, 12(3), 333-349.
• investigates the extent to which political science journals adopt data availability policies, and the content of those policies
• sample of 120 peer-reviewed journals
• website scrutiny and emails to editors (Oct.-Dec. 2011)
• policies on website: 19 journals
• 76 journals had no policy (and no info on their plans), 7 planned to adopt a policy, 18 did not plan any
• (strongest) impact of impact factor: journals with more citations are more likely to have a policy
• EJPR responded that it had no proper policy ⇒ deviant case

« Political science replication » blog (Nicole Janz)
http://politicalsciencereplication.wordpress.com/2013/03/08/only-18-of-120-political-science-journals-have-a-replication-policy/ (accessed Oct. 10, 2014)
Post on March 8, 2013, based on Gherghina & Katsanidou (2013)
• « The good ones: journals with a policy »
• « The bad ones »… « out of these, seven have a high impact factor so that I would have expected that they take care of this: [1] European Journal Of Political Research [2]… »
⇒ naming and shaming!

• “In the biomedical domain, most results presented as statistically significant are exaggerated or frankly false (…) My major concern is to accelerate the self-correction of the scientific system (…) This is only possible through independent and rapid verification of results”. Interview with Professor John P.A. Ioannidis, Horizons (Magazine of the SNF), 100, March 2014, pp. 47-48.
• Make information about data transparent:
- to enhance scientific credibility/persuasiveness
- to allow replication (whenever applicable)
- for the improvement of research practice
- for the cumulative research process
- to facilitate evidence-based scholarly debate
• Variations in policy models depending on how much responsibility lies with the author and how much with the journal
John Ishiyama, “Replication, Research Transparency, and Journal Publications: Individualism, Community Models, and the Future of Replication Studies”, PS, January 2014, 78-83.

• Example of journal policy (AJPS):
- Submission stage: “The use of supporting information (SI) is strongly encouraged (and treated as a substitute for appendices). Such information will not be counted toward the word count noted below. SI should be aimed at a sophisticated reader with specialized field expertise. For example, formal theory may include proofs; computational models may include computer code; experiments may include the details of the protocol; empirical work may include data coding, alternative econometric specifications and additional tables and figures that elaborate the manuscript’s primary point. SI will be sent to reviewers. If the manuscript is accepted for publication the SI will be permanently posted on the publisher’s website with the electronic copy of the article.”
- Publication stage: “If a manuscript is accepted for publication, the manuscript will not be published unless the first footnote explicitly states where the data used in the study can be obtained for purposes of replication and any sources that funded the research. All replication files must be stored on the AJPS Data Archive on Dataverse. Please refer to the Accepted Article Guidelines for instructions relating to the AJPS Dataverse Archive. In addition, if Supporting Information (SI) accompanies the article, it must be ready for permanent posting. Manuscripts without data or SI are exempt.”

• First quickly and easily implemented measure by EJPR (July 2014): “We … strongly advise authors to make data and any other supporting information available in an appendix file that will be published alongside the online version of the article. These files can be submitted at the same time as article manuscripts.” (no word limit for appendices)
• EJPR articles with supporting information / online appendices:
- 2013: 1/34
- 2014: 22/46
• No guidelines for appendix content ⇒ large variation ⇒ content not always helpful for replication
⇒ limited measure: not binding, and no controls (“soft”)
⇒ need more rigorous measures, but…

• Lack of resources: initial lack of familiarity with the issue, and lack of time to catch up
- absorbed by day-to-day management of submissions + achieving new policy goals
⇒ broaden the scope of the journal
⇒ resume with special issues
• Information collection on recent (mainly) North American developments (under the aegis of NSF, APSA…) ⇒ working groups, meetings of editors of top journals, PS Symposium on transparency (January 2014):
- Commitment to adopting common standards by January 2016 (DA-RT as “default” settings): section 6 of APSA’s Guide to Professional Ethics, Rights and Freedoms (as amended in October 2012): “Researchers have an ethical obligation to facilitate the evaluation of their evidence-based knowledge claims through data access, production transparency, and analytic transparency so that their work can be tested or replicated.”
- Joint statement in support of DA-RT by editors of major US political science journals (October 6, 2014)

“Political science is a diverse discipline comprising multiple, and sometimes seemingly irretrievably insular, research communities”
Lupia, Arthur & Colin Elman, “Openness in Political Science: Data Access and Research Transparency”, PS, January 2014, 19-24
⇒ mutual recognition of divergent ways of implementing DA-RT, as appropriate to distinctive qualitative and quantitative research communities (APSA: partly different draft guidelines)
⇒ development of practical protocols through close work between quantitative and qualitative scholars (e.g. guidelines for active citation in qualitative work: complete and precise citation hyperlinked to a corresponding entry in a transparency appendix)

Diversity of problems + policy models ⇒ questions for debate:
- when should editors receive data, in what form and for what purpose? E.g. what kind of data should be communicated to reviewers, and does data access reduce the anonymity of authors?
- who is responsible for enforcing provisions, and who should verify the provided material?
- where should data be deposited? (e.g. AJPS authors must use the journal’s own site for storage of all replication files)
- how to deal with confidentiality and protection of privacy, and with data that are under ethical or proprietary constraints?

• Next steps for the journal editors…
- Data replication is one of the major issues on the agenda with the incoming editor (2015: Cas Mudde)
- Keep contact with APSA and editors of journals with “best practice”
- Topic for discussion at the “retreat” of ECPR journal editors with the ECPR publications committee (Feb. 2015)
- Roundtable at an ECPR event: major European outlets, US journals publishing quantitative and qualitative material
⇒ EJPR wants to contribute to:
- extending the debate among journal editors in Europe
- making appropriate policy choices
• Next steps beyond… Journal editors are gatekeepers (quality control) but are not responsible for the input ⇒ incorporate issues of DA-RT into training programs.