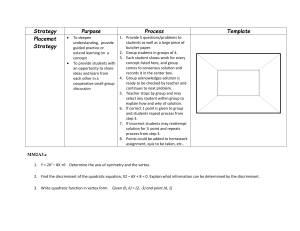

Course Outline

advertisement

Bayesian Decision Theory

(Sections 2.1-2.2)

• Decision problem posed in probabilistic terms

• Bayesian Decision Theory–Continuous Features

• All the relevant probability values are known

Probability Density

Jain CSE 802, Spring 2013

Course Outline

MODEL INFORMATION

COMPLETE

Bayes Decision

Theory

Parametric

Approach

“Optimal”

Rules

Plug-in

Rules

INCOMPLETE

Supervised

Learning

Nonparametric

Approach

Unsupervised

Learning

Parametric

Approach

Density

Geometric Rules

Estimation

(K-NN, MLP)

Mixture

Resolving

Nonparametric

Approach

Cluster Analysis

(Hard, Fuzzy)

Introduction

• From sea bass vs. salmon example to “abstract”

decision making problem

• State of nature; a priori (prior) probability

• State of nature (which type of fish will be observed next) is

unpredictable, so it is a random variable

• The catch of salmon and sea bass is equiprobable

•

P(1) = P(2) (uniform priors)

•

P(1) + P( 2) = 1 (exclusivity and exhaustivity)

• Prior prob. reflects our prior knowledge about how likely we are to

observe a sea bass or salmon; these probabilities may depend on

time of the year or the fishing area!

• Bayes decision rule with only the prior information

• Decide 1 if P(1) > P(2), otherwise decide 2

• Error rate = Min {P(1) , P(2)}

• Suppose now we have a measurement or feature

•

•

on the state of nature - say the fish lightness value

Use of the class-conditional probability density

P(x | 1) and P(x | 2) describe the difference in

lightness feature between populations of sea bass

and salmon

Amount of overlap between the densities determines the “goodness” of feature

• Maximum likelihood decision rule

• Assign input pattern x to class 1 if

P(x | 1) > P(x | 2), otherwise 2

• How does the feature x influence our attitude

(prior) concerning the true state of nature?

• Bayes decision rule

• Posteriori probability, likelihood, evidence

• P(j , x) = P(j | x)p (x) = p(x | j) P (j)

• Bayes formula

P(j | x) = {p(x | j) . P (j)} / p(x)

j2

where

P ( x ) P ( x | j )P ( j )

j 1

• Posterior = (Likelihood. Prior) / Evidence

• Evidence P(x) can be viewed as a scale factor that

•

guarantees that the posterior probabilities sum to 1

P(x | j) is called the likelihood of j with respect to x; the

category j for which P(x | j) is large is more likely to be

the true category

•

•

P(1 | x) is the probability of the state of nature being 1

given that feature value x has been observed

Decision based on the posterior probabilities is called the

Optimal Bayes Decision rule

For a given observation (feature value) X:

if P(1 | x) > P(2 | x)

if P(1 | x) < P(2 | x)

decide 1

decide 2

To justify the above rule, calculate the probability of error:

P(error | x) = P(1 | x) if we decide 2

P(error | x) = P(2 | x) if we decide 1

• So, for a given x, we can minimize te rob. Of error,

decide 1 if

P(1 | x) > P(2 | x);

otherwise decide 2

Therefore:

P(error | x) = min [P(1 | x), P(2 | x)]

• Thus, for each observation x, Bayes decision rule

•

minimizes the probability of error

Unconditional error: P(error) obtained by

integration over all x w.r.t. p(x)

• Optimal Bayes decision rule

Decide 1 if P(1 | x) > P(2 | x);

otherwise decide 2

• Special cases:

(i) P(1) = P(2); Decide 1 if

p(x | 1) > p(x | 2), otherwise 2

(ii) p(x | 1) = p(x | 2); Decide 1 if

P(1) > P(2), otherwise 2

Bayesian Decision Theory –

Continuous Features

• Generalization of the preceding formulation

• Use of more than one feature (d features)

• Use of more than two states of nature (c classes)

• Allowing other actions besides deciding on the state of

•

nature

Introduce a loss function which is more general than the

probability of error

• Allowing actions other than classification primarily

allows the possibility of rejection

• Refusing to make a decision when it is difficult to

decide between two classes or in noisy cases!

• The loss function specifies the cost of each action

• Let {1, 2,…, c} be the set of c states of nature

(or “categories”)

• Let {1, 2,…, a} be the set of a possible actions

• Let (i | j) be the loss incurred for taking

action i when the true state of nature is j

• General decision rule (x) specifies which action to take for every

possible observation x

j c

Conditional Risk

R( i | x ) ( i | j )P ( j | x )

j 1

For a given x, suppose we take the action i ; if the true state is j ,

we will incur the loss (i | j). P(j | x) is the prob. that the true

state is j But, any one of the C states is possible for given x.

Overall risk

R = Expected value of R(i | x) w.r.t. p(x)

Conditional risk

Minimizing R

Minimize R(i | x) for i = 1,…, a

Select the action i for which R(i | x) is minimum

The overall risk R is minimized and the resulting risk

is called the Bayes risk; it is the best performance that

can be achieved!

• Two-category classification

1 : deciding 1

2 : deciding 2

ij = (i | j)

loss incurred for deciding i when the true state of nature is j

Conditional risk:

R(1 | x) = 11P(1 | x) + 12P(2 | x)

R(2 | x) = 21P(1 | x) + 22P(2 | x)

Bayes decision rule is stated as:

if R(1 | x) < R(2 | x)

Take action 1: “decide 1”

This results in the equivalent rule:

decide 1 if:

(21- 11) P(x | 1) P(1) >

(12- 22) P(x | 2) P(2)

and decide 2 otherwise

Likelihood ratio:

The preceding rule is equivalent to the following rule:

P ( x | 1 ) 12 22 P ( 2 )

if

.

P ( x | 2 ) 21 11 P ( 1 )

then take action 1 (decide 1); otherwise take action 2

(decide 2)

Note that the posteriori porbabilities are scaled by the loss

differences.

Interpretation of the Bayes decision rule:

“If the likelihood ratio of class 1 and class 2

exceeds a threshold value (that is independent of

the input pattern x), the optimal action is to decide

1”

Maximum likelihood decision rule: the threshold

value is 1; 0-1 loss function and equal class prior

probability

Bayesian Decision Theory

(Sections 2.3-2.5)

• Minimum Error Rate Classification

• Classifiers, Discriminant Functions and Decision Surfaces

• The Normal Density

Minimum Error Rate Classification

• Actions are decisions on classes

If action i is taken and the true state of nature is j then:

the decision is correct if i = j and in error if i j

• Seek a decision rule that minimizes the probability

of error or the error rate

• Zero-one (0-1) loss function: no loss for correct decision

and a unit loss for any error

0 i j

( i , j )

1 i j

i , j 1 ,..., c

The conditional risk can now be simplified as:

j c

R( i | x ) ( i | j ) P ( j | x )

j 1

P( j | x ) 1 P( i | x )

j 1

“The risk corresponding to the 0-1 loss function is the

average probability of error”

• Minimizing the risk requires maximizing the

posterior probability P(i | x) since

R(i | x) = 1 – P(i | x))

• For Minimum error rate

• Decide i if P (i | x) > P(j | x) j i

• Decision boundaries and decision regions

12 22 P ( 2 )

P( x | 1 )

Let

.

then decide 1 if :

21 11 P ( 1 )

P( x | 2 )

• If is the 0-1 loss function then the threshold involves

only the priors:

0 1

1 0

then

P( 2 )

a

P( 1 )

0 2

2 P( 2 )

then

if

b

P( 1 )

1 0

Classifiers, Discriminant Functions

and Decision Surfaces

• Many different ways to represent pattern

classifiers; one of the most useful is in terms of

discriminant functions

• The multi-category case

• Set of discriminant functions gi(x), i = 1,…,c

• Classifier assigns a feature vector x to class i if:

gi(x) > gj(x) j i

Network Representation of a Classifier

• Bayes classifier can be represented in this way, but

the choice of discriminant function is not unique

• gi(x) = - R(i | x)

(max. discriminant corresponds to min. risk!)

• For the minimum error rate, we take

gi(x) = P(i | x)

(max. discrimination corresponds to max. posterior!)

gi(x) P(x | i) P(i)

gi(x) = ln P(x | i) + ln P(i)

(ln: natural logarithm!)

• Effect of any decision rule is to divide the feature

space into c decision regions

if gi(x) > gj(x) j i then x is in Ri

(Region

Ri means assign x to i)

• The two-category case

• Here a classifier is a “dichotomizer” that has two

discriminant functions g1 and g2

Let g(x) g1(x) – g2(x)

Decide 1 if g(x) > 0 ; Otherwise decide 2

•

So, a “dichotomizer” computes a single

discriminant function g(x) and classifies x

according to whether g(x) is positive or

not.

• Computation of g(x) = g1(x) – g2(x)

g( x ) P ( 1 | x ) P ( 2 | x )

P( x | 1 )

P( 1 )

ln

ln

P( x | 2 )

P( 2 )

The Normal Density

• Univariate density: N( , 2)

• Normal density is analytically tractable

• Continuous density

• A number of processes are asymptotically Gaussian

• Patterns (e.g., handwritten characters, speech signals ) can be

viewed as randomly corrupted versions of a single typical or

prototype (Central Limit theorem)

P( x )

2

1

1 x

exp

,

2

2

where:

= mean (or expected value) of x

2 = variance (or expected squared deviation) of x

• Multivariate density: N( , )

• Multivariate normal density in d dimensions:

P( x )

1

( 2 )

d/2

1/ 2

1

t

1

exp ( x ) ( x )

2

where:

x = (x1, x2, …, xd)t (t stands for the transpose of a vector)

= (1, 2, …, d)t mean vector

= d*d covariance matrix

•

•

•

|| and -1 are determinant and inverse of , respectively

The covariance matrix is always symmetric and positive semidefinite; we

assume is positive definite so the determinant of is strictly positive

Multivariate normal density is completely specified by [d + d(d+1)/2]

parameters

If variables x1 and x2 are statistically independent then the covariance

of x1 and x2 is zero.

Multivariate Normal density

Samples drawn from a normal population tend to fall in a single

cloud or cluster; cluster center is determined by the mean vector

and shape by the covariance matrix

The loci of points of constant density are hyperellipsoids whose

principal axes are the eigenvectors of

r 2 ( x )t 1 ( x )

Transformation of Normal Variables

Linear combinations of jointly normally distributed random variables are

normally distributed

Coordinate transformation can convert an arbitrary multivariate normal

distribution into a spherical one

Bayesian Decision Theory

(Sections 2-6 to 2-9)

• Discriminant Functions for the Normal Density

• Bayes Decision Theory – Discrete Features

Discriminant Functions for the

Normal Density

• The minimum error-rate classification can be

achieved by the discriminant function

gi(x) = ln P(x | i) + ln P(i)

• In case of multivariate normal densities

1

1

d

1

t

g i ( x ) ( x i ) ( x i ) ln 2 ln i ln P ( i )

2

2

2

i

• Case i = 2.I

(I is the identity matrix)

Features are statistically independent and each

feature has the same variance

g i ( x ) w x w i 0 (linear discriminant function)

t

i

where :

i

1

t

wi 2 ; wi 0

i i ln P ( i )

2

2

( i 0 is called the threshold for the ith category! )

• A classifier that uses linear discriminant functions is called

“a linear machine”

• The decision surfaces for a linear machine are pieces of

hyperplanes defined by the linear equations:

gi(x) = gj(x)

• The hyperplane separating Ri and Rj

1

2

x0 ( i j )

2

i j

2

P( i )

ln

( i j )

P( j )

is orthogonal to the line linking the means!

1

if P ( i ) P ( j ) then x0 ( i j )

2

• Case 2: i = (covariance matrices of all classes

are identical but otherwise arbitrary!)

• Hyperplane separating Ri and Rj

ln P ( i ) / P ( j )

1

x0 ( i j )

.( i j )

t

1

2

( i j ) ( i j )

• The hyperplane separating Ri and Rj is generally

not orthogonal to the line between the means!

• To classify a feature vector x, measure the

squared Mahalanobis distance from x to each of

the c means; assign x to the category of the

nearest mean

Discriminant Functions for 1D Gaussian

• Case 3: i = arbitrary

• The covariance matrices are different for each category

g i ( x ) x tWi x w it x w i 0

where :

1 1

Wi i

2

w i i 1 i

1 t 1

1

w i 0 i i i ln i ln P ( i )

2

2

In the 2-category case, the decision surfaces are

hyperquadrics that can assume any of the general forms:

hyperplanes, pairs of hyperplanes, hyperspheres,

hyperellipsoids, hyperparaboloids, hyperhyperboloids)

Discriminant Functions for the Normal Density

Discriminant Functions for the Normal Density

Discriminant Functions for the Normal Density

Decision Regions for Two-Dimensional Gaussian Data

x2 3.514 1.125 x1 0.1875x12

Error Probabilities and Integrals

• 2-class problem

• There are two types of errors

• Multi-class problem

– Simpler to computer the prob. of being correct (more

ways to be wrong than to be right)

Error Probabilities and Integrals

Bayes optimal decision boundary in 1-D case

Error Bounds for Normal Densities

•

•

The exact calculation of the error for the

general Guassian case (case 3) is extremely

difficult

However, in the 2-category case the general

error can be approximated analytically to give

us an upper bound on the error

Error Rate of Linear Discriminant Function (LDF)

• Assume a 2-class problem

p x ~ N ( , ), p x ~ N ( , )

1

1

2

2

1

gi ( x) log P( x i ) ( x i )t 1 ( x i ) log P(i )

2

• Due to the symmetry of the problem (identical

), the two types of errors are identical

• Decide x if g ( x) g ( x) or

1

1

2

1

1

t 1

( x 1 ) ( x 1 ) log P(1 ) ( x 2 )t 1 ( x 2 ) log P(2 )

2

2

or

1 t 1

t

( 2 1 ) x 1 1 2 1 2 log P(1 ) / P(2 )

2

t

1

Error Rate of LDF

• Let h( x) ( 2 1 )t 1 x 12 1t 1 1 t21 2

• Compute expected values & variances of

when x 1 & x 2

1 E h( x) x 1 ( 2 1 )t 1 E x 1

1

( 2 1 )t 1 ( 2 1 )

2

where

h( x)

1 t 1

1 1 t2 1 2

2

1

( 2 1 )t 1 ( 2 1 )

= squared Mahalanobis distance between

1 & 2

Error Rate of LDF

• Similarly

1

2

2 ( 2 1 )t 1 ( 2 1 )

12 E h( x) 1 1 E ( 2 1 )t 1 ( x 1 ) x 1

2

( 2 1 )t 1 ( 2 1 )

2

22 2

p h( x) x 1 ~ N ( , 2 )

p h( x) x 2 ~ N ( , 2 )

Error Rate of LDF

1 P g1 ( x) g 2 ( x) x 1 P h( x ) 1 dh

t

n t

2

1

2

1

e 2 d

2

1 1

erf

2 2

t

4

h( x ) ~

1

()

1

e 2

2 2

Error Rate of LDF

P (1 )

t log

P

(

)

2

2

erf (r )

1 1

2 erf

2 2

r

e

x2

dx

0

t

4

Total probability of error

Pe P (1 )1 P (2 ) 2

Error Rate of LDF

1

P 1 P 2

t0

2

1 1

1 1

1 2 erf

erf

2 2

4 2 2

( 1 2 )t 1 ( 1 2 )

2 2

(i) No Class Separation

( 1 2 )t 1 ( 1 2 ) 0

1 2

1

2

(ii) Perfect Class Separation

( 1 2 )t 1 ( 1 2 ) 0

∞

1 2 0

(erf 1)

Mahalanobis distance is a good measure of separation between classes

Chernoff Bound

• To derive a bound for the error, we need the

following inequality

Assume conditional prob. are normal

where

Chernoff Bound

Chernoff bound for P(error) is found by determining the

value of that minimizes exp(-k())

Error Bounds for Normal Densities

• Bhattacharyya Bound

• Assume = 1/2

• computationally simpler

• slightly less tight bound

• Now, Eq. (73) has the form

When the two covariance matrices are equal, k(1/2) is te

same as the Mahalanobis distance between the two means

Error Bounds for Gaussian Distributions

Chernoff Bound

P(error ) P (1 ) P1 (1 ) p ( x | 1 ) p1 ( x | 2 )dx

p

0 1

( x | 1 ) p1 ( x | 2 )dx e k ( )

k ( )

(1 )

2

1

t

( 2 1 ) [ 1 (1 ) 2 ] ( 2 1 )

1 (1 ) 2

1

ln

2

|1| |2 |1

Best Chernoff error bound is 0.008190

Bhattacharya Bound (β=1/2)

P(error ) P(1 ) P(2 ) P( x | 1 ) P( x | 2 )dx P(1 ) P (2 )e k (1/2)

k (1 / 2) 1 / 8( 2 1 )

t

2

1

2

1

2–category, 2D data

1 2

1

2

( 2 1 ) ln

2

|1 ||2 |

Bhattacharya error bound is 0.008191

True error using numerical integration = 0.0021

Neyman-Pearson Rule

“Classification, Estimation and Pattern recognition” by Young and Calvert

Neyman-Pearson Rule

Neyman-Pearson Rule

Neyman-Pearson Rule

Neyman-Pearson Rule

Neyman-Pearson Rule

Signal Detection Theory

We are interested in detecting a single weak pulse,

e.g. radar reflection; the internal signal (x) in detector

has mean m1 (m2) when pulse is absent (present)

p( x | 1 ) ~ N ( 1 , 2 )

p ( x | 2 ) ~ N ( 2 , 2 )

The detector uses a threshold

x* to determine the presence of pulse

Discriminability: ease of determining

whether the pulse is present or not

d'

| 1 2 |

For given threshold, define hit,

false alarm, miss and correct

rejection

P( x x*| x 2 ) :

P( x x*| x 1 ) :

P( x x*| x 2 ) :

P( x x*| x 1 ) :

hit

false alarm

miss

correct rejection

Receiver Operating Characteristic

(ROC)

• Experimentally compute hit and false alarm rates for

•

•

fixed x*

Changing x* will change the hit and false alarm rates

A plot of hit and false alarm rates is called the ROC

curve

Performance

shown at different

operating points

Operating Characteristic

• In practice, distributions may not be Gaussian

•

and will be multidimensional; ROC curve can still

be plotted

Vary a single control parameter for the decision

rule and plot the resulting hit and false alarm rates

Bayes Decision Theory – Discrete Features

•

•

Components of x are binary or integer valued; x can

take only one of m discrete values

v1, v2, …,vm

Case of independent binary features for 2-category

problem

Let x = [x1, x2, …, xd ]t where each xi is either 0 or 1, with

probabilities:

pi = P(xi = 1 | 1)

qi = P(xi = 1 | 2)

• The discriminant function in this case is:

d

g ( x ) w i x i w0

i 1

where :

pi ( 1 q i )

w i ln

q i ( 1 pi )

i 1 ,..., d

and :

1 pi

P( 1 )

w0 ln

ln

1 qi

P( 2 )

i 1

d

decide 1 if g(x) 0 and 2 if g(x) 0

Bayesian Decision for Three-dimensional

Binary Data

• Consider a 2-class problem with three independent binary

features; class priors are equal and pi = 0.8 and qi = 0.5, i =

1,2,3

• wi = 1.3863

• w0 = 1.2

• Decision surface g(x) = 0 is shown below

Decision boundary for 3D binary features. Left figure shows the case when pi=.8 and

qi=.5. Right figure shows case when p3=q3 (Feature 3 is not providing any

discriminatory information) so decision surface is parallel to x3 axis

Handling Missing Features

• Suppose it is not possible to measure a certain

feature for a given pattern

• Possible solutions:

• Reject the pattern

• Approximate the missing feature

•

•

Mean of all the available values for the missing feature

Marginalize over the distribution of the missing feature

Handling Missing Features

Other Topics

• Compound Bayes Decision Theory & Context

– Consecutive states of nature might not be statistically independent; in

sorting two types of fish, arrival of next fish may not be independent of

the previous fish

– Can we exploit such statistical dependence to gain improved

performance (use of context)

– Compound decision vs. sequential compound decision problems

– Markov dependence

• Sequential Decision Making

– Feature measurement process is sequential (as in medical diagnosis)

– Feature measurement cost

– Minimize the no. of features to be measured while achieving a

sufficient accuracy; minimize a combination of feature measurement

cost & classification accuracy

Context in Text Recognition