Homework Set 1 – Data Exploration and Pattern Recognition


Due date: Sunday 21/12/98

Instructions:

When asked to prove a statement or evaluate an expression, clearly state and explain your assumptions and steps so that a reader can easily follow them. (However, please try to be concise and avoid superfluous explanations.)

We recommend that homework papers be printed (in either Hebrew or English). Illegible handwritten papers will not receive credit.

Submission in pairs only.

Students called up for reserve duty (“miluim”) must arrange the submission date of their homework in advance, before the due date. Exemptions and extensions will not be approved after the due date.

Answer the following questions.

_____________________________________________________________________

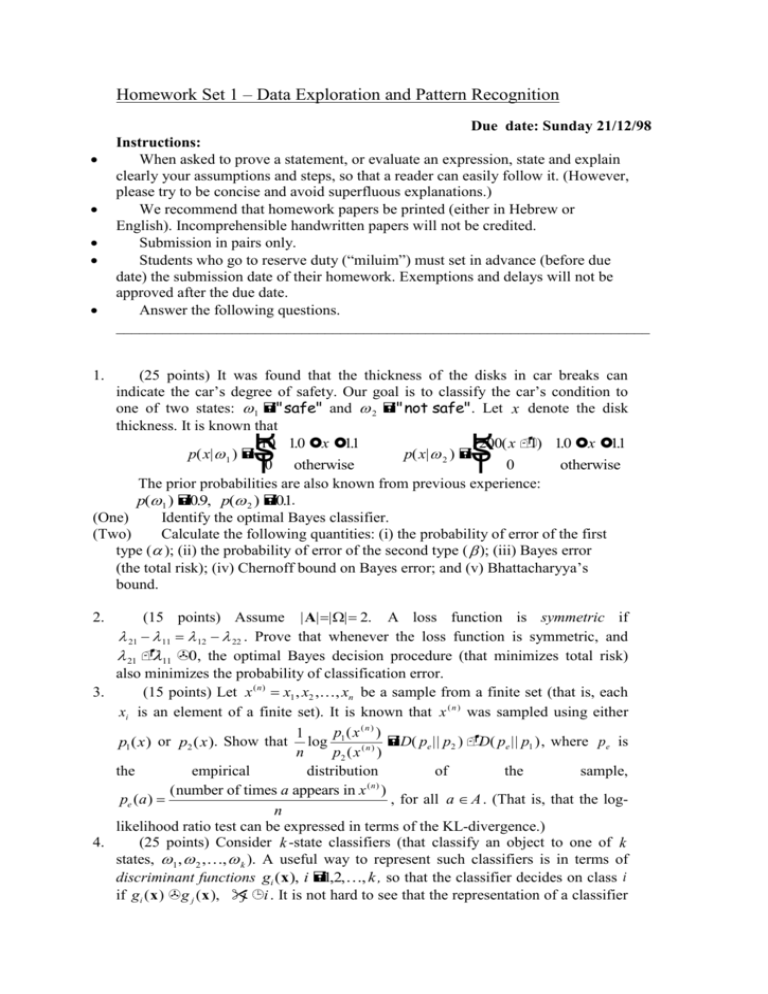

1.

(25 points) It was found that the thickness of the disks in car brakes can indicate the car's degree of safety. Our goal is to classify the car's condition into one of two states: $\omega_1$ "safe" and $\omega_2$ "not safe". Let $x$ denote the disk thickness. It is known that

$$p(x \mid \omega_1) = \begin{cases} 10 & 1.0 \le x \le 1.1 \\ 0 & \text{otherwise} \end{cases} \qquad p(x \mid \omega_2) = \begin{cases} 200(x - 1) & 1.0 \le x \le 1.1 \\ 0 & \text{otherwise} \end{cases}$$

The prior probabilities are also known from previous experience: $p(\omega_1) = 0.9$, $p(\omega_2) = 0.1$.

(a) Identify the optimal Bayes classifier.

(b) Calculate the following quantities: (i) the probability of error of the first type ($\alpha$); (ii) the probability of error of the second type ($\beta$); (iii) the Bayes error (the total risk); (iv) the Chernoff bound on the Bayes error; and (v) Bhattacharyya's bound.
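For reference, the two bounds named in (iv) and (v) are commonly stated as follows for the two-class, minimum-error case. The parameter $s$ is notation introduced here and does not appear in the assignment. Both bounds follow from the inequality $\min(a, b) \le a^{s} b^{1-s}$ for $a, b \ge 0$ and $0 \le s \le 1$.

```latex
% Chernoff bound: valid for every s in [0, 1]; the tightest bound minimizes the right-hand side over s
P(\text{error}) \le p(\omega_1)^{s}\, p(\omega_2)^{1-s} \int p(x \mid \omega_1)^{s}\, p(x \mid \omega_2)^{1-s}\, dx,
\qquad 0 \le s \le 1

% Bhattacharyya bound: the special case s = 1/2
P(\text{error}) \le \sqrt{p(\omega_1)\, p(\omega_2)} \int \sqrt{p(x \mid \omega_1)\, p(x \mid \omega_2)}\, dx
```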


2.

(15 points) Assume $|A| = |\Omega| = 2$. A loss function is symmetric if $\lambda_{21} - \lambda_{11} = \lambda_{12} - \lambda_{22}$. Prove that whenever the loss function is symmetric and $\lambda_{21} - \lambda_{11} > 0$, the optimal Bayes decision procedure (which minimizes the total risk) also minimizes the probability of classification error.

3.

(15 points) Let $x^{(n)} = x_1, x_2, \ldots, x_n$ be a sample from a finite set $A$ (that is, each $x_i$ is an element of $A$). It is known that $x^{(n)}$ was sampled using either $p_1(x)$ or $p_2(x)$. Show that

$$\frac{1}{n} \log \frac{p_1(x^{(n)})}{p_2(x^{(n)})} = D(p_e \,\|\, p_2) - D(p_e \,\|\, p_1),$$

where $p_e$ is the empirical distribution of the sample,

$$p_e(a) = \frac{\text{number of times } a \text{ appears in } x^{(n)}}{n}, \quad \text{for all } a \in A.$$

(That is, show that the log-likelihood ratio test can be expressed in terms of the KL-divergence.)
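Before attempting the proof, it can help to check the identity numerically on a small example. The sketch below is only a sanity check, not a proof. It assumes the sample points are drawn independently, so that $p(x^{(n)})$ factorizes into a product. The distributions `p1` and `p2` and the sample are hypothetical values chosen for illustration.

```python
import math
from collections import Counter

def kl(p, q):
    """Discrete KL divergence D(p || q) in nats; terms with p(a) = 0 contribute 0."""
    return sum(pa * math.log(pa / q[a]) for a, pa in p.items() if pa > 0)

def empirical(sample):
    """Empirical distribution: relative frequency of each symbol in the sample."""
    n = len(sample)
    return {a: count / n for a, count in Counter(sample).items()}

def normalized_llr(sample, p1, p2):
    """(1/n) * log(p1(x^(n)) / p2(x^(n))), assuming independent draws."""
    return sum(math.log(p1[x] / p2[x]) for x in sample) / len(sample)

# Hypothetical distributions over the finite set A = {"a", "b", "c"}.
p1 = {"a": 0.5, "b": 0.3, "c": 0.2}
p2 = {"a": 0.2, "b": 0.2, "c": 0.6}
sample = ["a", "b", "a", "c", "a", "b", "c", "a"]

pe = empirical(sample)
lhs = normalized_llr(sample, p1, p2)
rhs = kl(pe, p2) - kl(pe, p1)
print(lhs, rhs)  # the two values should agree up to floating-point rounding
```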

4.

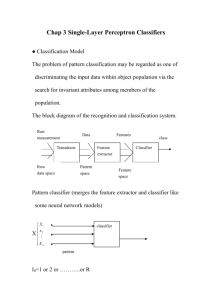

(25 points) Consider $k$-state classifiers (that classify an object into one of $k$ states, $\omega_1, \omega_2, \ldots, \omega_k$). A useful way to represent such classifiers is in terms of discriminant functions $g_i(x)$, $i = 1, 2, \ldots, k$, so that the classifier decides on class $i$ if $g_i(x) > g_j(x)$ for all $j \ne i$. It is not hard to see that the representation of a classifier in terms of discriminant functions $\{g_i\}$ is not unique, since the same classifier is obtained from $\{f(g_i)\}$ when $f$ is monotone increasing. For example, the Bayes classifier is given by $g_i(x) = p(\omega_i \mid x)$ or by $g_i(x) = \log p(\omega_i \mid x)$, etc.
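The non-uniqueness claim can be illustrated with a short sketch: applying a monotone increasing $f$ (here the natural logarithm) to every discriminant leaves the arg-max decision unchanged. The three discriminant functions below are hypothetical positive functions chosen only for illustration. They are not the Bayes discriminants of any particular problem.

```python
import math

def decide(discriminants, x):
    """Return the index i whose discriminant g_i(x) is largest."""
    scores = [g(x) for g in discriminants]
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical strictly positive discriminant functions g_0, g_1, g_2.
g = [
    lambda x: math.exp(-(x - 0.0) ** 2),
    lambda x: 0.5 * math.exp(-(x - 2.0) ** 2 / 4.0),
    lambda x: 0.8 * math.exp(-(x + 1.5) ** 2 / 2.0),
]

# The same classifier expressed through f(g_i) with f = log (monotone increasing).
log_g = [lambda x, gi=gi: math.log(gi(x)) for gi in g]

xs = [i / 10.0 for i in range(-50, 51)]
same = all(decide(g, x) == decide(log_g, x) for x in xs)
print(same)
```

A grid check like this shows agreement only at the sampled points. The general statement still needs the argument that $f$ preserves the ordering of the $g_i(x)$.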

(a) Show that the Bayes classifier can be represented by the discriminant functions $g_i(x) = \ln p(x \mid \omega_i) + \ln p(\omega_i)$.

(b) Considering the classifier of part (a), assume that the $p(x \mid \omega_i)$ are (univariate) normal distributions, $N(\mu_i, \sigma_i)$. Evaluate and simplify the discriminant functions for this case.

(c) Evaluate and further simplify the discriminant functions of part (b) for the special case $\sigma_i = \sigma$, $i = 1, \ldots, k$. Show that in this case the discriminant function is linear in $x$.

(d) Evaluate $D\big(N(\mu_1, \sigma_1) \,\|\, N(\mu_2, \sigma_2)\big)$ and $D\big(N(\mu_1, \sigma) \,\|\, N(\mu_2, \sigma)\big)$. Use the “differential KL-divergence” defined for densities:

$$D(f \,\|\, g) = \int f(x) \ln \frac{f(x)}{g(x)} \, dx$$
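A closed-form answer to the last part can be checked against a numerical approximation of the integral. The sketch below uses a simple midpoint rule over a finite interval. It assumes the second parameter of $N(\mu, \sigma)$ is the standard deviation, which the assignment does not state explicitly. The parameter values and integration limits are hypothetical choices for illustration.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma), with sigma taken to be the standard deviation (assumption)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def kl_numeric(f, g, lo, hi, steps=20000):
    """Midpoint-rule approximation of the integral of f(x) ln(f(x)/g(x)) over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h
        fx = f(x)
        if fx > 0.0:  # the integrand is taken as 0 wherever f vanishes
            total += fx * math.log(fx / g(x))
    return total * h

# Hypothetical parameters: f = N(0, 1), g = N(1, 2).
f = lambda x: normal_pdf(x, 0.0, 1.0)
g = lambda x: normal_pdf(x, 1.0, 2.0)

d_fg = kl_numeric(f, g, -10.0, 10.0)  # compare with your closed-form result
d_ff = kl_numeric(f, f, -10.0, 10.0)  # should be 0, since D(f || f) = 0
print(d_fg, d_ff)
```

The finite limits truncate the tails, so they should be wide enough that the mass of $f$ outside the interval is negligible.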

5.

(20 points) The optimal Bayesian decision function, which minimizes the total risk, is deterministic. Prove that no randomized decision algorithm can achieve a lower (expected) total risk. Guidance: use $p(a_i \mid x)$ to denote the probability with which a randomized algorithm chooses action $a_i$ (given the observation $x$), and express the total risk of the randomized algorithm in terms of it. Then prove that the total risk of the optimal deterministic algorithm is not larger.
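The guidance can be explored numerically at a single observation $x$ before writing the proof. The sketch below draws many random action distributions $p(a_i \mid x)$ and compares the resulting conditional risk with that of the best deterministic action. The loss matrix and posterior are hypothetical values. The check covers one fixed $x$ only and is not a substitute for the proof over the total risk.

```python
import random

def conditional_risks(loss, posterior):
    """R(a_i | x) = sum_j loss[i][j] * p(w_j | x) for every action a_i."""
    return [sum(l_ij * p_j for l_ij, p_j in zip(row, posterior)) for row in loss]

def randomized_risk(action_probs, risks):
    """Conditional risk of a rule that picks a_i with probability p(a_i | x)."""
    return sum(p * r for p, r in zip(action_probs, risks))

rng = random.Random(0)

# Hypothetical loss[i][j]: loss of action a_i when the true state is w_j.
loss = [[0.0, 2.0, 1.0],
        [1.0, 0.0, 3.0]]
posterior = [0.5, 0.3, 0.2]  # hypothetical p(w_j | x) at one fixed x

risks = conditional_risks(loss, posterior)
best = min(risks)  # conditional risk of the best deterministic action

never_better = True
for _ in range(1000):
    weights = [rng.random() for _ in loss]
    total = sum(weights)
    probs = [w / total for w in weights]  # a random distribution over actions
    if randomized_risk(probs, risks) < best - 1e-12:
        never_better = False
print(never_better)
```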

6.

(Bonus question: 10 points) Two different real numbers, $x$ and $y$, are picked (their distributions are unknown). You get to see one of them, say $x$, and it is known that $x$ is the larger (smaller) with probability 1/2. Construct an algorithm that can decide whether $x$ is the larger, with error probability less than 1/2, no matter what the distributions of $x$ and $y$ are. Using the Bayesian framework, prove that your algorithm is correct.
