Normal Density:

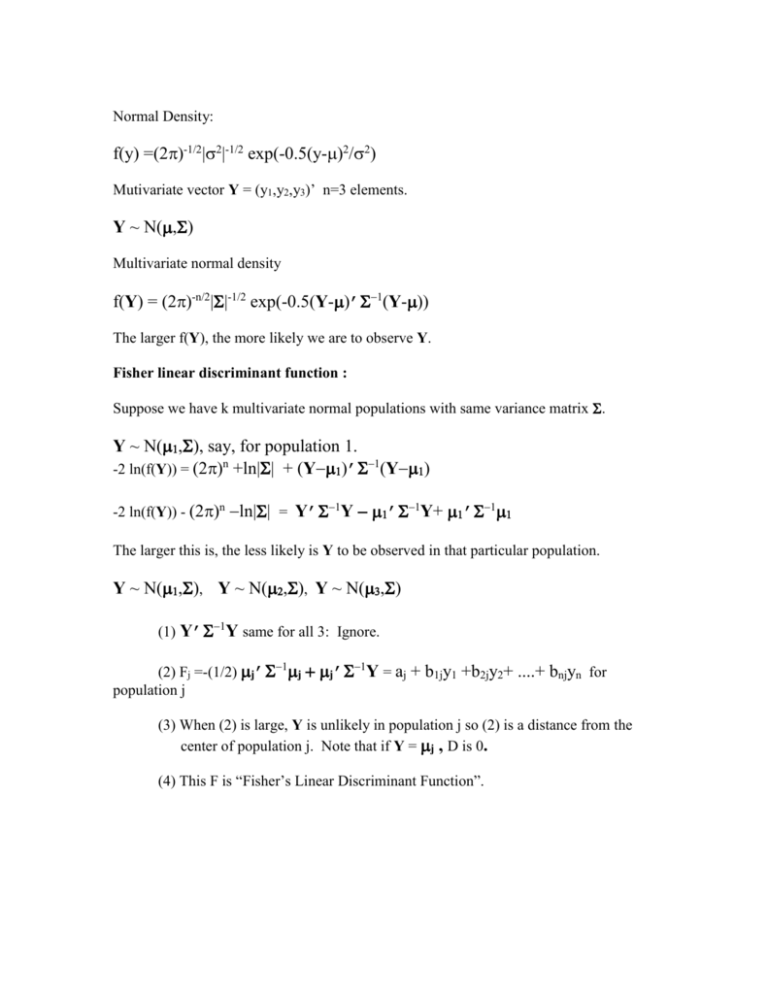

$$f(y) = (2\pi)^{-1/2}\,|\sigma^2|^{-1/2} \exp\left(-0.5\,(y-\mu)^2/\sigma^2\right)$$

Multivariate vector $Y = (y_1, y_2, y_3)'$ with n = 3 elements.

$$Y \sim N(\mu, \Sigma)$$

Multivariate normal density

$$f(Y) = (2\pi)^{-n/2}\,|\Sigma|^{-1/2} \exp\left(-0.5\,(Y-\mu)'\Sigma^{-1}(Y-\mu)\right)$$

The larger f(Y), the more likely we are to observe Y.
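As a rough illustration of the multivariate normal density, the sketch below evaluates f(Y) in plain Python when the inverse variance matrix and its determinant are supplied. The function name `mvn_density` and the identity-matrix inputs are assumptions made for this illustration; they do not come from the notes.

```python
import math

def mvn_density(y, mu, sigma_inv, sigma_det):
    """Multivariate normal density f(Y), given Sigma^{-1} and |Sigma|."""
    n = len(y)
    d = [yi - mi for yi, mi in zip(y, mu)]
    # Quadratic form (Y - mu)' Sigma^{-1} (Y - mu)
    quad = sum(d[i] * sigma_inv[i][j] * d[j] for i in range(n) for j in range(n))
    return (2 * math.pi) ** (-n / 2) * sigma_det ** (-0.5) * math.exp(-0.5 * quad)

# Hypothetical inputs: n = 2, identity variance matrix, mean at the origin.
identity = [[1.0, 0.0], [0.0, 1.0]]
at_mean = mvn_density([0.0, 0.0], [0.0, 0.0], identity, 1.0)
off_mean = mvn_density([1.0, 1.0], [0.0, 0.0], identity, 1.0)
```

Under these assumed inputs the density is highest at the mean and falls off as Y moves away from it, which is the sense in which a larger f(Y) makes Y more likely to be observed.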

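Fisher linear discriminant function:

Suppose we have k multivariate normal populations with the same variance matrix $\Sigma$.

$Y \sim N(\mu_1, \Sigma)$, say, for population 1.

$$-2 \ln(f(Y)) = n \ln(2\pi) + \ln|\Sigma| + (Y-\mu_1)'\Sigma^{-1}(Y-\mu_1)$$

$$-2 \ln(f(Y)) - n \ln(2\pi) - \ln|\Sigma| = Y'\Sigma^{-1}Y - 2\mu_1'\Sigma^{-1}Y + \mu_1'\Sigma^{-1}\mu_1$$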

The larger this is, the less likely is Y to be observed in that particular population.

$$Y \sim N(\mu_1, \Sigma), \quad Y \sim N(\mu_2, \Sigma), \quad Y \sim N(\mu_3, \Sigma)$$

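(1) $Y'\Sigma^{-1}Y$ is the same for all 3 populations: ignore.

(2) $F_j = -(1/2)\,\mu_j'\Sigma^{-1}\mu_j + \mu_j'\Sigma^{-1}Y = a_j + b_{1j}y_1 + b_{2j}y_2 + \dots + b_{nj}y_n$ for population j. This is $-1/2$ times the terms that remain after dropping (1).

(3) The squared distance of Y from the center of population j is $D_j = (Y-\mu_j)'\Sigma^{-1}(Y-\mu_j) = Y'\Sigma^{-1}Y - 2F_j$. When (2) is large, $D_j$ is small, so Y is more likely in population j. Note that if $Y = \mu_j$, $D_j$ is 0.

(4) This F is “Fisher’s Linear Discriminant Function”.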

Example:

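$$\Sigma = \begin{pmatrix} 7.5 & 7.5 & 6.25 \\ 7.5 & 25 & 12.5 \\ 6.25 & 12.5 & 31.25 \end{pmatrix}, \qquad \Sigma^{-1} = \begin{pmatrix} 0.2 & -0.05 & -0.02 \\ -0.05 & 0.0625 & -0.015 \\ -0.02 & -0.015 & 0.042 \end{pmatrix}$$

$$\mu_1 = \begin{pmatrix} 2 \\ -1 \\ 1 \end{pmatrix}, \quad \mu_2 = \begin{pmatrix} -2 \\ 0 \\ 1 \end{pmatrix}, \quad \mu_3 = \begin{pmatrix} 1 \\ -1 \\ 1 \end{pmatrix}, \qquad Y = \begin{pmatrix} 1 \\ 2 \\ 3 \end{pmatrix}$$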

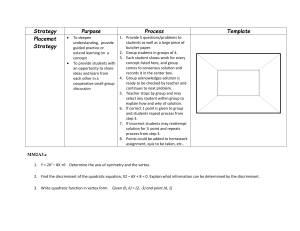

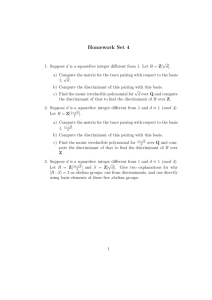

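** Class notes example **;

PROC IML;

S = {2 1 1, 0 4 2, 0 0 5}; S = S*S`;   /* S*S` is symmetric positive definite */

S = 10*S/8;   /* common variance matrix Sigma */

IN = inv(S);   /* inverse of Sigma */

m1 = {2,-1,1}; m2 = {-2,0,1}; m3 = {1,-1,1};   /* population mean vectors */

print S IN m1 m2 m3;

D1 = -0.5*m1`*IN*m1 || (m1`*IN);   /* row for population 1: intercept a1, then coefficients b1 */

D2 = -0.5*m2`*IN*m2 || (m2`*IN);

D3 = -0.5*m3`*IN*m3 || (m3`*IN);

D = D1//D2//D3;   /* one row per population */

Y = {1,2,3};   /* observed vector */

discriminant = D*({1}//Y);   /* Fj = aj + bj`Y for j = 1, 2, 3 */

print D Y discriminant;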

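| S | | | IN | | |
|---|---|---|---|---|---|
| 7.5 | 7.5 | 6.25 | 0.2 | -0.05 | -0.02 |
| 7.5 | 25 | 12.5 | -0.05 | 0.0625 | -0.015 |
| 6.25 | 12.5 | 31.25 | -0.02 | -0.015 | 0.042 |

| M1 | M2 | M3 |
|---|---|---|
| 2 | -2 | 1 |
| -1 | 0 | -1 |
| 1 | 1 | 1 |

| D | | | | Y | DISCRIMINANT |
|---|---|---|---|---|---|
| -0.52725 | 0.43 | -0.1775 | 0.017 | 1 | -0.40125 |
| -0.461 | -0.42 | 0.085 | 0.082 | 2 | -0.465 |
| -0.19725 | 0.23 | -0.1275 | 0.037 | 3 | -0.11125 |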
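The IML computation can be cross-checked outside SAS. The following is a minimal Python sketch, not the author's code, that rebuilds the rows of D and the discriminant values from the inverse variance matrix and means of the example. The helper name `discriminant_row` is introduced here for illustration only.

```python
# Inverse variance matrix, means, and observed vector copied from the example.
SIGMA_INV = [[0.2, -0.05, -0.02],
             [-0.05, 0.0625, -0.015],
             [-0.02, -0.015, 0.042]]
MEANS = [[2, -1, 1], [-2, 0, 1], [1, -1, 1]]
Y = [1, 2, 3]

def discriminant_row(mu, sigma_inv):
    """Return [a_j, b_1j, ..., b_nj] for one population (hypothetical helper)."""
    n = len(mu)
    # Coefficient vector b_j = mu_j' Sigma^{-1}
    b = [sum(mu[i] * sigma_inv[i][k] for i in range(n)) for k in range(n)]
    # Intercept a_j = -(1/2) mu_j' Sigma^{-1} mu_j
    a = -0.5 * sum(b[k] * mu[k] for k in range(n))
    return [a] + b

D = [discriminant_row(mu, SIGMA_INV) for mu in MEANS]
# F_j = a_j + b_j' Y for each population
F = [row[0] + sum(c * y for c, y in zip(row[1:], Y)) for row in D]
# Population (numbered from 1) with the largest discriminant value
closest = max(range(len(F)), key=lambda j: F[j]) + 1
```

Up to floating-point rounding, `D` and `F` should agree with the printed D and DISCRIMINANT values, and `closest` picks the population with the largest discriminant value.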

Y is least far from the third population mean, since $F_3$ is the largest of the three discriminant values. We showed $-2 \ln(f_j(Y)) = C - 2F_j$, where C is constant across all 3 populations. The pdf of Y in population j is then $\exp(F_j - C/2)$. The ratio of any two of these pdf’s, j vs. k for example, would be $\exp(F_j - F_k)$. Thus if we compute $\exp(F_j) / \sum_k \exp(F_k)$, these will be 3 probabilities that add to 1 and are in the proper ratio. If we think in Bayesian terms of equal prior probabilities that an observed vector comes from population j, then we have computed the posterior probability of being from group j given the observed Y.
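The posterior calculation can be sketched in Python. This sketch takes $F_j$ to be the printed DISCRIMINANT values, for which $\ln f_j(Y) = F_j$ plus a constant common to all populations, so that under equal priors the posterior is proportional to $\exp(F_j)$. The function name is hypothetical, and subtracting the maximum score is an added numerical safeguard, not something the notes prescribe.

```python
import math

# DISCRIMINANT values from the example output
F = [-0.40125, -0.465, -0.11125]

def posterior_equal_priors(scores):
    """Posterior probabilities proportional to exp(F_j), equal priors assumed."""
    shift = max(scores)  # subtracting the maximum leaves all ratios unchanged
    weights = [math.exp(s - shift) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]

post = posterior_equal_priors(F)
```

The three entries of `post` add to 1, and the largest belongs to population 3, in line with the statement that Y is least far from the third population mean.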

Now if the variance matrices $\Sigma_j$ differ, then we see that $\ln|\Sigma_j|$ is no longer constant and both $\ln|\Sigma_j|$ and $Y'\Sigma_j^{-1}Y$ (a quadratic form) must be re-included in $F_j$, giving Fisher’s quadratic discriminant analysis.
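One way to write the resulting quadratic score in the notation of these notes is sketched below. The symbol $Q_j$ is introduced here for illustration and does not appear in the notes. It is scaled so that $\ln f_j(Y) = Q_j - (n/2)\ln(2\pi)$.

$$Q_j = -\tfrac{1}{2}\ln|\Sigma_j| - \tfrac{1}{2}(Y-\mu_j)'\Sigma_j^{-1}(Y-\mu_j) = -\tfrac{1}{2}\ln|\Sigma_j| - \tfrac{1}{2}Y'\Sigma_j^{-1}Y + \mu_j'\Sigma_j^{-1}Y - \tfrac{1}{2}\mu_j'\Sigma_j^{-1}\mu_j$$

When all the $\Sigma_j$ equal a common $\Sigma$, the first two terms on the right are the same for every population, and $Q_j$ reduces to the linear function $-\tfrac{1}{2}\mu_j'\Sigma^{-1}\mu_j + \mu_j'\Sigma^{-1}Y$ plus a constant.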

Finally, if there are non-equal prior probabilities $p_j$ for each population, then that also must be accounted for in $F_j$.
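A common way to account for priors, shown here as a sketch and not necessarily the adjustment the author has in mind, is to add $\ln p_j$ to each score, so the posterior is proportional to $p_j \exp(F_j)$. Here $F_j$ is taken to be the printed DISCRIMINANT values, for which $\ln f_j(Y) = F_j$ plus a common constant. The prior values below are hypothetical and chosen only for illustration.

```python
import math

F = [-0.40125, -0.465, -0.11125]  # DISCRIMINANT values from the example output
PRIORS = [0.5, 0.3, 0.2]          # hypothetical priors, not from the notes

def posterior_with_priors(scores, priors):
    """Posterior probabilities proportional to p_j * exp(F_j)."""
    adjusted = [s + math.log(p) for s, p in zip(scores, priors)]
    shift = max(adjusted)  # subtracting the maximum leaves all ratios unchanged
    weights = [math.exp(a - shift) for a in adjusted]
    total = sum(weights)
    return [w / total for w in weights]

post = posterior_with_priors(F, PRIORS)
equal = posterior_with_priors(F, [1 / 3, 1 / 3, 1 / 3])
```

With these assumed priors the largest posterior moves from population 3 to population 1, which shows how strongly unequal priors can change the classification when the discriminant values are close.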