A Hybrid Task Graph Scheduler for High Performance

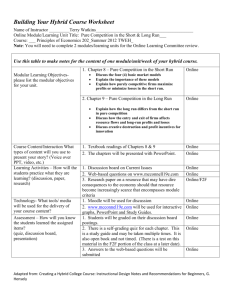

A Hybrid Task Graph Scheduler for High Performance Image Processing Workflows
TIMOTHY BLATTNER
NIST | UMBC
12/15/2015 GLOBAL CONFERENCE ON SIGNAL AND INFORMATION PROCESSING

Outline
◦ Introduction
◦ Challenges
◦ Image Stitching
◦ Hybrid Task Graph Scheduler
◦ Preliminary Results
◦ Conclusions
◦ Future Work

Credits
◦ Walid Keyrouz (NIST)
◦ Milton Halem (UMBC)
◦ Shuvra Bhattacharyya (UMD)

Introduction
Hardware landscape is changing
Traditional software approaches to extracting performance from the hardware
◦ Reaching complexity limit
◦ Multiple GPUs on a node
◦ Complex memory hierarchies
We present a novel abstract machine model
◦ Hybrid task graph scheduler
◦ Hybrid pipeline workflows
◦ Scope: Single node with multiple CPUs and GPUs
◦ Emphasis on
  ◦ Execution pipelines to scale to multiple GPUs/CPU sockets
  ◦ Memory interface to attach to hierarchies of memory
◦ Can be expanded beyond single node (clusters)

Introduction – Future Architectures
Future hybrid architecture generation
◦ Few fat cores with many more simpler cores
  ◦ Intel Knights Landing
  ◦ POWER 9 + NVIDIA Volta + NVLink
    ◦ Sierra cluster
◦ Faster interconnect
◦ Deeper memory hierarchy
Programming methods must present the right machine model to programmers so they can extract performance
Figure: NVIDIA Volta GPU (nvidia.com)

Introduction – Data transfer costs
Copying data between address spaces is expensive
◦ PCI express bottleneck
Current hybrid CPU+GPU systems contain multiple independent address spaces
◦ Unification of the address spaces
  ◦ Simplification for programmer
  ◦ Good for prototyping
  ◦ Obscures the cost of data motion
Techniques for improving hybrid utilization
◦ Have enough computation per data element
◦ Overlap data motion with computation
◦ Faster bus (80 GB/s NVLink versus 16 GB/s PCIe)
  ◦ NVLink requires multiple GPUs to reach peak performance [NVLink Whitepaper 2014]

Introduction – Complex Memory Hierarchies
Data locality is becoming more complex
◦ Non-volatile storage devices
  ◦ NVMe
  ◦ 3D XPoint (future)
  ◦ SATA SSD
  ◦ SATA HDD
◦ Volatile memories
  ◦ HBM / 3D stacked
  ◦ DDR
  ◦ GPU Shared Memory / L1, L2, L3 Cache
Need to model these memories within programming methods
◦ Effectively utilize based on size and speed
◦ Hierarchy-aware programming
Figure: Memory hierarchies speed, cost, and capacity. [Ang et al. 2014]

Key Challenges
Changing H/W landscape
◦ Hierarchy-aware programming
  ◦ Manage data locality
◦ Wider data transfer channels
  ◦ Requires multi-GPU computation
  ◦ NVLink
◦ Hybrid computing
  ◦ Utilize all compute resources
A programming and execution machine model is needed to address the above challenges
◦ Hybrid Task Graph Scheduler (HTGS) model
◦ Expands on hybrid pipeline workflows [Blattner 2013]

Hybrid Pipeline Workflows
Hybrid pipeline workflow system
◦ Schedule tasks using a multiple-producer multiple-consumer model
◦ Prototype in 2013 Master’s thesis [Blattner 2013]
  ◦ Kept all GPUs busy
  ◦ Execution pipelines, one per GPU
  ◦ Stayed within memory limits
  ◦ Overlapped data motion with computation
◦ Tailored for image stitching
◦ Required significant programming effort to implement
  ◦ Prevent race conditions, manage dependencies, and maintain memory limits
We expand on hybrid pipeline workflows
◦ Formulates a model for a variety of algorithms
◦ Will reduce programmer effort
◦ Hybrid Task Graph Scheduler (HTGS)

Hybrid Workflow Impact – Image Stitching
Image Stitching
◦ Addresses the scale mismatch between microscope field of view and a plate under study
◦ Need to ‘stitch’ overlapping images to form one large image
◦ Three compute stages
  ◦ (S1) Fast Fourier transform (FFT) of an image
  ◦ (S2) Phase correlation image alignment method (PCIAM) [Kuglin & Hines 1975]
  ◦ (S3) Cross correlation factors (CCFs)
Figure: Image stitching dataflow graph

Hybrid Workflow Impact – Image Stitching
Implementation using traditional parallel techniques (Simple-GPU)
◦ Port computationally intensive components to the GPU
◦ Copy to/from GPU as needed
◦ 1.14x speedup in end-to-end time compared to a sequential CPU-only implementation
◦ Data motion dominated the run-time
Implementation using hybrid workflow system
◦ Reuse existing compute kernels
◦ 24x speedup end-to-end compared to Simple-GPU
◦ Scales using multiple GPUs (~1.8x from one to two GPUs)
◦ Requires significant programming effort
[Blattner et al. 2014]

HTGS Motivation
Performance gains using a hybrid pipeline workflow
Figure 1: Simple-GPU Profile
Figure 2: Hybrid Workflow Profile

HTGS Motivation
Transforming dataflow graphs into task graphs

Dataflow and Task Graphs
Contain a series of vertices and edges
◦ A vertex is a task/compute function
  ◦ Implements a function applied on data
◦ An edge is data flowing between tasks
◦ Main difference between dataflow and task graphs
  ◦ Scheduling
◦ Effective method for representing MIMD concurrency
Figure: Example task graph
Figure: Example dataflow graph

HTGS Motivation
Scale to multiple GPUs
◦ Partition task graph into sub-graphs
◦ Bind sub-graphs to separate GPUs
Memory interface
◦ Represent separate address spaces
  ◦ CPU
  ◦ GPU
◦ Managing complex memory hierarchies (future)
Overlap computation with I/O
◦ Pipeline computation with I/O

Hybrid Task Graph Scheduler Model
Four primary components
◦ Tasks
◦ Data
◦ Dependency Rules
◦ Memory Rules
Construct task graphs using the four components
◦ Vertices are tasks
◦ Edges are data flow
Figure: Task graph

Hybrid Task Graph Scheduler Model
Tasks
◦ Programmer implements ‘execute’
  ◦ Defines functionality of the task
◦ Special task types
  ◦ GPU Tasks
    ◦ Binds to device prior to execution
  ◦ Bookkeeper
    ◦ Manages dependencies
◦ Threading
  ◦ Each task is bound to one or more threads in a thread pool

CUDA Task
Binds CUDA graphics card to a task
◦ Provides CUDA context and stream to the execute function
◦ 1 CPU thread launches GPU kernels with thousands or millions of GPU threads
Figure: CUDA Task

Memory Interface
Attaches to a task needing reusable memory
Memory is freed based on memory rules
◦ Programmer defined
Task requests memory from manager
◦ Blocks if no memory is available
Acts as a separate channel from dataflow
Figure: Memory Manager Interface

Hybrid Task Graph Scheduler Model
Execution Pipelines
◦ Encapsulates a sub-graph
◦ Creates duplicate instances of the sub-graph
◦ Each instance is scheduled and executed using new threads
◦ Can be distributed among available GPUs (one instance per GPU)
Figure: Execution Pipeline Task

HTGS API
Using the model, implement the HTGS API
◦ Tasks
  ◦ Default
  ◦ Bookkeeper
  ◦ Execution Pipeline
  ◦ CUDA
◦ Memory Interface
  ◦ Attaches to any task to allocate/free/update memory

Prototype HTGS API – Image Stitching
Full implementation in Java
◦ Uses image stitching as a test case
Figure: Image Stitching Task Graph

Preliminary Results
Machine specifications
◦ Two Xeon E5620 (16 logical cores)
◦ Two NVIDIA Tesla C2070s and one GTX 680
◦ Libraries: JCuda and JCuFFT
◦ Baseline implementation: [Blattner et al. 2014]
◦ Problem size: 42x59 images (70% overlap)
◦ HTGS prototype has similar runtime to the baseline, with a 23.6% reduction in code size

| HTGS | Exec Pipeline | GPUs | Runtime (s) | Lines of Code |
|------|---------------|------|-------------|---------------|
|      |               | 3    | 29.8        | 949           |
|      |               | 1    | 43.3        | 725           |
|      |               | 1    | 41.4        | 726           |
|      |               | 2    | 26.6        | 726           |
|      |               | 3    | 24.5        | 726           |

First row (3 GPUs, 29.8 s, 949 lines): baseline hybrid pipeline workflow

Conclusions
Prototype HTGS API
◦ Reduces code size by 23.6%
  ◦ Compared to the hybrid pipeline workflow implementation
◦ Speedup of 17%
◦ Enables multi-GPU execution by adding a single line of code
Coarse-grained parallelism
◦ Decomposition of algorithm and data structures
◦ Memory management
◦ Data locality
◦ Scheduling

Conclusions
The HTGS model and API
◦ Scales using multiple GPUs and CPUs
◦ Overlaps data motion
◦ Keeps processors busy
◦ Memory interface for separate address spaces
◦ Restricted to single node with multiple CPUs and multiple NVIDIA GPUs
A tool to represent complex image processing algorithms that require high performance

Future Work
Release of C++ implementation of HTGS (currently in development)
Use HTGS with other classes of algorithms
◦ Out-of-core matrix multiplication and LU factorization
Expand execution pipelines to support clusters and Intel MIC
Image Stitching with LIDE++
◦ Lightweight dataflow environment [Shen, Plishker, & Bhattacharyya 2012]
◦ Tool-assisted acceleration
◦ Annotated dataflow graphs
  ◦ Manage memory and data motion
  ◦ Enhanced scheduling
  ◦ Improved concurrency

References
[Ang et al. 2014] Ang, J. A.; Barrett, R. F.; Benner, R. E.; Burke, D.; Chan, C.; Cook, J.; Donofrio, D.; Hammond, S. D.; Hemmert, K. S.; Kelly, S. M.; Le, H.; Leung, V. J.; Resnick, D. R.; Rodrigues, A. F.; Shalf, J.; Stark, D.; Unat, D.; and Wright, N. J. 2014. Abstract machine models and proxy architectures for exascale computing. In Proceedings of the 1st International Workshop on Hardware-Software Co-Design for High Performance Computing, Co-HPC ’14, 25–32. IEEE Press.

[Blattner et al. 2014] Blattner, T.; Keyrouz, W.; Chalfoun, J.; Stivalet, B.; Brady, M.; and Zhou, S. 2014. A hybrid CPU-GPU system for stitching large scale optical microscopy images. In 43rd International Conference on Parallel Processing (ICPP), 1–9.

[Blattner 2013] Blattner, T. 2013. A Hybrid CPU/GPU Pipeline Workflow System. Master’s thesis, University of Maryland Baltimore County.

[Shen, Plishker, & Bhattacharyya 2012] Shen, C.; Plishker, W.; and Bhattacharyya, S. S. 2012. Dataflow-based design and implementation of image processing applications. In Guan, L.; He, Y.; and Kung, S.-Y., eds., Multimedia Image and Video Processing, second edition, chapter 24, 609–629. CRC Press.

[Kuglin & Hines 1975] Kuglin, C. D., and Hines, D. C. 1975. The phase correlation image alignment method. In Proceedings of the 1975 IEEE International Conference on Cybernetics and Society, 163–165.

[NVLink Whitepaper 2014] NVIDIA 2014. http://www.nvidia.com/object/nvlink.html

Thank You
Questions?
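
Appendix sketch: tasks, threads, and a blocking memory manager
The model slides describe tasks that implement an 'execute' function, are bound to one or more threads, pass data along edges, and request reusable memory from a manager that blocks when none is available. The Python sketch below illustrates those ideas only. It is not the HTGS API: the names Task, MemoryManager, run_graph, and demo are hypothetical, the graph is restricted to a linear chain, and the "memory rule" is assumed to be the simplest possible one (a buffer is released after a single use).

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of a task's input stream


class MemoryManager:
    """Fixed pool of reusable buffers; get() blocks while the pool is empty."""

    def __init__(self, pool_size):
        self._free = queue.Queue()
        for _ in range(pool_size):
            self._free.put(bytearray(8))
        self._lock = threading.Lock()
        self.in_use = 0
        self.peak = 0  # highest number of buffers held at once

    def get(self):
        buf = self._free.get()  # blocks if no memory is available
        with self._lock:
            self.in_use += 1
            self.peak = max(self.peak, self.in_use)
        return buf

    def release(self, buf):
        with self._lock:
            self.in_use -= 1
        self._free.put(buf)


class Task:
    """A graph vertex: applies `execute` to each item arriving on its inbox."""

    def __init__(self, execute, n_threads=1):
        self.execute = execute
        self.n_threads = n_threads
        self.inbox = queue.Queue()  # incoming edge
        self.outbox = None          # outgoing edge, wired by run_graph

    def worker(self):
        while True:
            item = self.inbox.get()
            if item is _DONE:
                self.inbox.put(_DONE)  # let sibling threads see it too
                return
            self.outbox.put(self.execute(item))


def run_graph(tasks, inputs):
    """Run a linear chain of tasks; return everything the last task emits."""
    results = queue.Queue()
    for upstream, downstream in zip(tasks, tasks[1:]):
        upstream.outbox = downstream.inbox
    tasks[-1].outbox = results
    pools = [[threading.Thread(target=t.worker) for _ in range(t.n_threads)]
             for t in tasks]
    for pool in pools:
        for thread in pool:
            thread.start()
    for item in inputs:
        tasks[0].inbox.put(item)
    # Shut tasks down in graph order so no item is lost in flight.
    for task, pool in zip(tasks, pools):
        task.inbox.put(_DONE)
        for thread in pool:
            thread.join()
    out = []
    while not results.empty():
        out.append(results.get())
    return out


def demo(n_items=20, pool_size=3):
    mem = MemoryManager(pool_size)

    def load(i):
        buf = mem.get()   # stalls here when all buffers are in flight
        buf[0] = i
        return (i, buf)

    def square(pair):
        i, buf = pair
        value = buf[0] ** 2
        mem.release(buf)  # assumed memory rule: free after one use
        return (i, value)

    out = run_graph([Task(load), Task(square, n_threads=2)], range(n_items))
    return sorted(out), mem.peak
```

Calling demo() pushes 20 items through a two-task chain while at most three buffers exist, so the loading task stalls until the downstream task releases memory. That is the same back-pressure the Memory Interface slide describes as a channel separate from the dataflow edges.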