Semantics

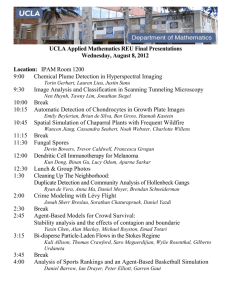

advertisement

Take-Home
• Handed out Friday 12/12, 2:30pm
• Due Monday 12/15, 2:30pm
• Points: worth 2 problem sets

© Daniel S. Weld

Tournament
• Wednesday
  - 3 rounds each (unless requested, or finals)
• Before each battle, teams describe their project
  - 6 min per group
  - Both people talk
  - Focus on specific approach, surprises, lessons
  - 2 slides max (print on transparencies)

Report
• 8-page limit; 12 pt font; 1” margins
• Model it on a conference paper
  - Include abstract and conclusions, but no introduction
  - Online and offline aspects of your agent
  - Describe your use of search (if you did)
  - Section on lessons learned / experiments
  - Short section explaining who did what
• Due 12/18, 1pm
• Points: worth 40-60% of project score

Outline
• Review of topics
• Hot applications
  - Internet "search"
  - Ubiquitous computation
  - Crosswords

573 Topics
Perception, NLP, Robotics, Multi-agent, Reinforcement Learning, MDPs, Supervised Learning, Planning, Search, Uncertainty, Knowledge Representation, Problem Spaces, Agency

State Space Search
• Input:
  - Set of states
  - Operators [and costs]
  - Start state
  - Goal state test
• Output:
  - Path from start to end
  - May require shortest path

• GUESSING ("Tree Search")
  - Guess how to extend a partial solution to a problem.
  - Generates a tree of (partial) solutions. The leaves of the tree are either "failures" or represent complete solutions.
• SIMPLIFYING ("Inference")
  - Infer new, stronger constraints by combining one or more constraints (without any "guessing").
  - Example: X + 2Y = 3 and X + Y = 1; subtracting the second from the first gives Y = 2.
• WANDERING ("Markov chain")
  - Perform a (biased) random walk through the space of (partial or total) solutions.

Some Methods
• Guessing – State Space Search
  1. BFS, DFS
  2. Iterative deepening, limited discrepancy
  3. Bidirectional
  4. Best-first search, beam
  5. A*, IDA*, SMA*
  6. Game tree
  7. Davis-Putnam (logic) + constraint satisfaction
• Simplification – Constraint Propagation
  1. Forward checking
  2. Path consistency
  3. Resolution
• Wandering – Randomized Search
  1. Hillclimbing
  2. Simulated annealing
  3. Walksat
  4. Monte-Carlo methods

Admissible Heuristics
• f(x) = g(x) + h(x)
• g: cost so far
• h: underestimate of remaining costs
Where do heuristics come from?

Relaxed Problems
• Derive an admissible heuristic from the exact cost of a solution to a relaxed version of the problem
  - For transportation planning, relax the requirement that the car has to stay on the road: Euclidean distance
  - For blocks world, distance = # move operations; heuristic = number of misplaced blocks
    What is the relaxed problem?
    # out of place = 2, true distance to goal = 3
• Cost of optimal solution to relaxed problem ≤ cost of optimal solution to real problem

CSP Analysis: Nodes Explored
[Figure: backtracking variants arranged from more nodes explored to fewer: BT = BM; BJ = BMJ = BMJ2; FC; CBJ = BM-CBJ = BM-CBJ2; FC-CBJ]

Semantics
• Syntax: a description of the legal arrangements of symbols (def. "sentences")
• Semantics: what the arrangement of symbols means in the world
[Figure: sentences in the representation, related to each other by inference, map via semantics to facts in the world]

Propositional Logic vs. First Order

| | Propositional Logic | First Order |
|---|---|---|
| Ontology | Facts (P, Q) | Objects, properties, relations |
| Syntax | Atomic sentences; connectives | Variables & quantification; sentences have structure: terms, e.g. father-of(mother-of(X)) |
| Semantics | Truth tables | Interpretations (much more complicated) |
| Inference algorithm | DPLL, GSAT; fast in practice | Unification; forward and backward chaining; Prolog, theorem proving |
| Complexity | NP-complete | Semi-decidable |

Computational Cliff
• Description logics
• Knowledge representation systems

Machine Learning Overview
• Inductive learning
  - Definition, need for bias, …
• One method: decision tree induction
  - Hill climbing through the space of DTs
  - Missing attributes
  - Multivariate attributes
  - Overfitting
• Ensembles
• Naïve Bayes classifier
• Co-learning
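
The Admissible Heuristics and Relaxed Problems slides can be made concrete with a small A* sketch. Everything specific here is an illustrative assumption rather than course code: the 4-connected grid, `#` marking walls, unit move costs, and the function names `manhattan` and `astar`. The heuristic h is Manhattan distance, which is the exact cost of the relaxed problem in which walls are ignored (the grid analogue of dropping "the car has to stay on the road" to get Euclidean distance), so it never overestimates and A* returns a shortest path.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]  # (row, column)


def manhattan(a: Pos, b: Pos) -> int:
    """Exact cost of the relaxed problem with walls ignored; never overestimates."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar(grid: List[str], start: Pos, goal: Pos) -> Optional[int]:
    """A* on a 4-connected grid where '#' is a wall and each move costs 1.

    Returns the length of a shortest path, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    best_g: Dict[Pos, int] = {start: 0}  # g(x): cheapest known cost so far
    frontier = [(manhattan(start, goal), 0, start)]  # entries are (f, g, state)
    while frontier:
        _f, g, pos = heapq.heappop(frontier)
        if pos == goal:
            return g  # h is consistent, so the first goal pop is optimal
        if g > best_g[pos]:
            continue  # stale entry: a cheaper route to pos was found later
        r, c = pos
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < best_g.get(nxt, new_g + 1):
                    best_g[nxt] = new_g
                    heapq.heappush(frontier, (new_g + manhattan(nxt, goal), new_g, nxt))
    return None


grid = ["....",
        ".##.",
        "...."]
print(astar(grid, (0, 1), (2, 1)), manhattan((0, 1), (2, 1)))  # prints 4 2
```

Going from (0, 1) to (2, 1), h = 2 but the true cost is 4 because the walls force a detour: the relaxed cost is a lower bound on the real one, as the last bullet of the Relaxed Problems slide states.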

Learning as Search through Hypothesis Space
[Figure: decision tree being grown, with candidate split attributes Humid, Outlook, Wind, Temp and a "Yes" leaf]
• On which attribute should we split?
• When should we stop growing the tree?

Ensembles of Classifiers
• Assume errors are independent; combine by majority vote
• Probability that the majority is wrong = area under the binomial distribution
  [Figure: binomial distribution; x-axis: number of classifiers in error; y-axis: probability (0.1, 0.2)]
• If the individual error rate is 0.3
• Area under the curve for ≥ 11 wrong is 0.026
• Order of magnitude improvement!

PS 3 Feedback
• Independence in ensembles
[Figure: classifiers A, B, and C each have 66% error on the examples shown; the majority-vote ensemble has 100% error]

Planning
• The planning problem
  - Simplifying assumptions
• Searching world states
  - Forward chaining (heuristics)
  - Regression
• Compilation to SAT, CSP, ILP, BDD
• Graphplan
  - Expansion (mutex)
  - Solution extraction (relation to CSPs)

Simplifying Assumptions
[Figure: agent ("What action next?") interacting with the environment through percepts and actions]
• Static vs. dynamic
• Perfect vs. noisy
• Fully observable vs. partially observable
• Instantaneous vs. durative
• Deterministic vs. stochastic
• Full vs. partial satisfaction

How to Represent Actions?
• Simplifying assumptions
  - Atomic time
  - Agent is omniscient (no sensing necessary)
  - Agent is sole cause of change
  - Actions have deterministic effects
• STRIPS representation
  - World = set of true propositions
  - Actions:
    • Precondition: (conjunction of literals)
    • Effects: (conjunction of literals)
[Figure: world states W0, W1, W2 connected by actions north11 and north12]

STRIPS Planning Actions
• Frame problem
• Qualification problem
• Ramification problem

    (:operator walk
     :parameters (?X ?Y)
     :precondition (and (at ?X) (neq ?X ?Y))
     :effect (and (at ?Y) (not (at ?X))))
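
The arithmetic on the Ensembles of Classifiers slide is a binomial tail. A minimal check, assuming 21 classifiers: the slide does not state the ensemble size, but 11 wrong votes is a majority of 21, and that size reproduces the quoted 0.026. The function name `majority_error` is hypothetical, and the computation relies on exactly the independence assumption that the PS 3 feedback slide warns about.

```python
from math import comb


def majority_error(n: int, p: float) -> float:
    """P(a strict majority of n independent classifiers is wrong),
    where each classifier errs with probability p: the upper tail of Binomial(n, p)."""
    k_min = n // 2 + 1  # fewest wrong votes that still form a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


print(round(majority_error(21, 0.3), 3))  # prints 0.026
print(majority_error(1, 0.3))             # a lone classifier errs with probability 0.3
```

With the same 0.3 error rate, a single classifier is wrong 30% of the time, so a majority vote over 21 independent classifiers cuts the error by roughly a factor of ten, which is the slide's "order of magnitude improvement". If the errors are correlated, the binomial model no longer applies.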

Markov Decision Processes
• S = set of states (|S| = n)
• A = set of actions (|A| = m)
• Pr = transition function Pr(s, a, s')
  - represented by a set of m n × n stochastic matrices (one per action); row s of the matrix for action a is a distribution over successor states s'
• R(s) = bounded, real-valued reward function
  - represented by an n-vector

Value Iteration (Bellman 1957)
• Markov property allows dynamic programming
• Value iteration
• Policy iteration

$$V^0(s) = R(s), \quad \forall s$$

Bellman backup:

$$V^k(s) = R(s) + \max_a \sum_{s'} \Pr(s, a, s')\, V^{k-1}(s')$$

$$\pi^*(s, k) = \arg\max_a \sum_{s'} \Pr(s, a, s')\, V^{k-1}(s')$$

$V^k$ is the optimal k-stage-to-go value function.

Dimensions of Abstraction
[Figure: value estimates over state variables A, B, C contrasting uniform vs. nonuniform, exact vs. approximate, and adaptive vs. fixed abstraction]

Partial Observability
• Belief states
[Figure: POMDP and MDP]

Reinforcement Learning
• Adaptive dynamic programming
  - Learns a utility function on states
• Temporal-difference learning
  - Doesn't update the value of every state
• Exploration functions
  - Balance exploration / exploitation
• Function approximation
  - Compress a large state space into a small one
  - Linear function approximation, neural nets, …

PROVERB

PROVERB
• Weaknesses

PROVERB
• Future Work

Grid Filling and CSPs

CSPs and IR
• Domain from ranked candidate list?
• Tortellini topping: TRATORIA, COUSCOUS, SEMOLINA, PARMESAN, RIGATONI, PLATEFUL, FORDLTDS, SCOTTIES, ASPIRINS, MACARONI, FROSTING, RYEBREAD, STREUSEL, LASAGNAS, GRIFTERS, BAKERIES, … MARINARA, REDMEATS, VESUVIUS, …
• Standard recall/precision tradeoff.

Probabilities to the Rescue?
• Annotate domain with the bias.

Solution Probability
• Proportional to the product of the probabilities of the individual choices.
• Can pick the solution with maximum probability.
• Maximizes the probability that the whole puzzle is correct.
• Won't maximize the number of words correct.

Trivial Pursuit™
• Race around the board, answering questions.
• Categories: Geography, Entertainment, History, Literature, Science, Sports

Wigwam
• QA via AQUA (Abney et al. 00)
  - Back off: word match in order helps score.
  - "When was Amelia Earhart's last flight?"
  - 1937, 1897 (birth), 1997 (reenactment)
  - Named entities only, 100G of web pages
• Move selection via MDP (Littman 00)
  - Estimate category accuracy.
  - Minimize expected turns to finish.

Mulder
• Question answering system
  - User asks a natural-language question: "Who killed Lincoln?"
  - Mulder answers: "John Wilkes Booth"
• KB = Web/search engines
• Domain-independent
• Fully automated

Architecture
[Figure: Mulder pipeline: Question Parsing → Question Classification → Query Formulation → Search Engine → Answer Extraction → Answer Selection → Final Answers]

Experimental Methodology
• Idea: to answer n questions, how much effort does the user have to exert?
• Implementation: a question counts as answered if
  - the answer phrases are found in the result pages returned by the service, or
  - they are found in the web pages pointed to by the results.
  This biases the comparison in favor of Mulder's opponents.

Experimental Methodology
• User effort = word distance
  - # of words read before answers are encountered
• Google/AskJeeves queried with the original question

Comparison Results
[Figure: % questions answered (0-70%) vs. user effort (word distance, in thousands of words, 0-5.0) for Mulder, Google, and AskJeeves]

Know It All
• Research project started June 2003
• Large-scale information extraction
  - Domain-independent extraction
  - PMI-IR
  - Completeness of web

Domain-independent extraction
• Cities such as X, Y, Z
  - Proper nouns
• Movies such as X, Y, Z

TOEFL Synonyms
Used in college applications.
fish: (a) scale (b) angle (c) swim (d) dredge
Turney: PMI-IR

Comprehensive Coverage
Search on: boston, seattle, paris, chicago, london

Ubiquitous Computing

Mode Prediction

Placelab
• Location-aware computing

Adapting UIs to Device (& User) Characteristics
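
The pattern on the domain-independent extraction slide ("Cities such as X, Y, Z", restricted to proper nouns) can be sketched with a single regular expression. This is a simplified illustration, not KnowItAll's extractor. The function name `extract_instances`, the rule that a proper noun is a run of capitalized words, and the set of separators handled (commas, "and", "or") are all assumptions.

```python
import re
from typing import List

# Assumption: a proper noun is one or more capitalized words ("Boston", "New York").
PROPER = r"[A-Z][a-z]+(?: [A-Z][a-z]+)*"
# Assumption: list items are separated by ",", "and", "or", ", and", or ", or".
SEPARATOR = r"(?:,| and| or|, and|, or) "


def extract_instances(text: str, class_name: str) -> List[str]:
    """Hypothetical helper: return proper nouns listed after '<class_name> such as'."""
    pattern = re.compile(
        rf"\b{re.escape(class_name)} such as ({PROPER}(?:{SEPARATOR}{PROPER})*)"
    )
    instances: List[str] = []
    for match in pattern.finditer(text):
        # Split the captured list back into individual proper nouns.
        instances.extend(re.findall(PROPER, match.group(1)))
    return instances


print(extract_instances("He toured cities such as Boston, Seattle and New York.", "cities"))
# prints ['Boston', 'Seattle', 'New York']
```

Changing `class_name` to "movies" reuses the same pattern for "Movies such as X, Y, Z", because nothing in the function is specific to cities. The capitalization test is crude. It misses names that are not simply capitalized words, and it does not judge whether an extracted name really belongs to the class.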