slides

advertisement

Characterizing the

Uncertainty of Web Data:

Models and Experiences

Lorenzo Blanco, Valter Crescenzi, Paolo Merialdo, Paolo Papotti

Università degli Studi Roma Tre

Dipartimento di Informatica ed Automazione

{blanco,crescenz,merialdo,papotti}@dia.uniroma3.it

The Web as a

Source of Information

Opportunities

−

−

−

a huge amount of information publicly available

valuable data repository can be built by aggregating

information spread over many sources

abundance of redundancy for data of many domains

The Web as a

Source of Information

Opportunities

−

−

−

a huge amount of information publicly available

valuable data repository can be built by aggregating

information spread over many sources

abundance of redundancy for data of many domains

[Blanco et al. WebDb2010, demo@WWW2011]

Sy

La

Min

Max

Vol

Open

Ibm

8

88

73

99

Cisc

1

33

44

12

2342

Appl

8

88

73

99

1998

Appl

8

88

73

99

1998

Data conflicts

HRBN max price?

20.64

Limitations

−

20.49

−

…

20.88

sources are

inaccurate,

uncertain and

unreliable

some sources

reproduce the

contents

published by

others

Popularity-based rankings

Several ranking methods for web sources

E.g. Google PageRank, Alexa Traffic Rank

Mainly based on the popularity of the sources

Several factors can compromise the quality of

data even when extracted from authoritative

sources

Errors in the editorial process

Errors in the publishing process

Errors in the data extraction process

Problem Definition

w1

w2

w3

errors

in bold

A set of sources (possibly with copiers) provide values

of several attributes for a common set of objects

Problem Definition

w1

w2

w3

w4 (Copier)

A set of sources (possibly with copiers) provide values

of several attributes for a common set of objects

We want to compute automatically

−

A score of accuracy for each web source

−

The probability distribution for each value

score (w1)?

...

score (w4)?

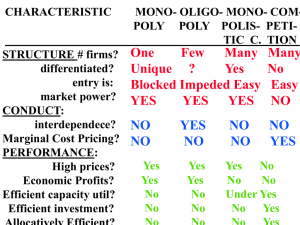

State-of-the-art

Probabilistic models to evaluate the accuracy of web

data sources

(i.e., algorithms to reconcile data from inaccurate sources)

NAIVE (voting)

ACCU [Yin et al, TKDE08; Wu&Marian, WebDb07;

Galland et al, WSDM10]

DEP [Dong et al, PVLDB09]

M-DEP [Blanco et al, Caise10; Dong et al, PVLDB10]

Goals

The goal of our work is twofold:

illustrate the state-of-the-art models

compare the result of these models on the same real

world datasets

NAIVE

Independent sources

Consider a single attribute at a time

Count the votes for each possible value

Sources

Truth

it works

it does not!

381 gets 2 votes

380 gets 1 vote

Limitations of the NAIVE Model

Real sources can exhibit different accuracies

Every source is considered equivalent

independently from its authority and accuracy

More accurate sources should weight more than

inaccurate sources

ACCU: a Model considering the

Accuracy of the Sources

The vote of a source is weighted according to

its accuracy with respect to that attribute

Sources

Accuracy 3/3

542

45

1/3

Result

Truth

1/3

Main intuition: it's difficult that sources agree on errors!

Consensus on (many) true values allows the algorithm

to compute accuracy

Source

Accuracy

Discovery

Truth

Discovery

(consensus)

Limitations of the ACCU model

Misleading majorities might be formed by copiers

Sources: Independents

Copier

Result

Truth

2/3

Accuracy

3/3 2/3

1/3

Both values (380 and 381) get 3/3 as weighted vote

Copiers have to be detected to neutralize the

“copied” portion of their votes

A Generative Model of Copiers

Source 1

Truth

e2

e

independently

produced objects

Copier

e1

Source 2

Source 3

e1

e2

copied

objects

DEP: A Model to Consider Source

Dependencies

Sources:

A source is copying 2/3 of its tuples

Independents

Copier

Result

Truth

2/3

Accuracy 3/3 2/3

3/3 3/3

“Portion” of

independent opinion

1/3

1/3

380 gets 3/3 as independent

weighted vote

381 gets 2/3 x 3/3 + 1/3 x 1/3 =

7/9 as independent weighted vote

Main intuition: copiers can be detected as they propagate

false values (i.e., errors)

Contextual Analysis of Truth,

Accuracies, and Dependencies

Truth

Discovery

Source

Accuracy

Discovery

Dependence

Detection

M-DEP: Improved Evidence from

MULTIATT Analysis

w1

w2

Truth

w3

MULTIATT(3)

w4

Copier

errors

in bold

An analysis based only on the Volume would fail in this

example: it would recognizes w2 as a copier of w1 but

it would not detect w4 as a copier of w3

actually w1 and w2 are independent sources sharing a

common format for volumes

Experiments with Web Data

Soccer players

Truth: hand crafted from official pages

Stats: 976 objects and 510 symbols (on average)

Videogames

Truth: www.esrb.com

Stats: 227 objects and 40 symbols (on average)

NASDAQ Stock Quotes

Truth: www.nasdaq.com

Stats: 819 objects, 2902 symbols (on average)

Sample Accuracies of the Sources

Sampled accuracy: the number of true values correctly

reported over the number of objects.

Pearson correlation

coefficient shows that

quality of data and

popularity do not overlap

Experiments with Models

a

Probability Concentration measures the performance in

computing probability distributions for the observed objects.

Low scores for Soccer: no authority on the Web

Differences in VideoGames: #of distinct symbols (5 vs 75)

High SA scores in Finance for every model: large #of

distinct symbols

Global Execution Times

Lessons Learned

Three dimensions to decide which technique to use:

• Characteristics of the domain

- domains where authoritative sources exist are

much easier to handle

- large number of distinct symbols help a lot too

• Requirements on the results

- on average, more complex models return better

results, especially for Probability Concentration

• Execution times

- depend on the number of objects and number of

distinct symbols. Naïve always scales well.

Thanks!