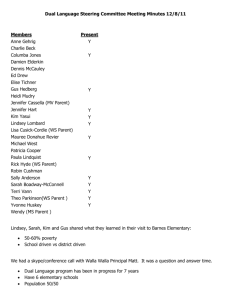


Dual Decomposition Applications

Presenters: Ben & Red

Section(s) 1, 2 and 3:

Introduction to Dual Decomposition

Abstract

Dual decomposition, and more generally Lagrangian relaxation, is a classical method for combinatorial optimization that has been applied to several inference problems in natural language processing (NLP).

A central theme of this tutorial is that Lagrangian relaxation is naturally applied in conjunction with a broad class of combinatorial algorithms.

Introduction

In many problems in statistical natural language processing, the task is to map some input x (a string) to some structured output y (a parse tree). This mapping is often defined as

$$y^* = \arg\max_{y \in \gamma} h(y)$$

where γ is a finite set of possible structures for the input x, and h: γ → R is a function that assigns a score h(y) to each y in γ.

Introduction

The problem of finding y* is referred to as the

decoding problem. The size of γ typically grows

exponentially with respect to the size of the

input x, making exhaustive search for y*

intractable.

Introduction

Dual decomposition leverages the observation

that many decoding problems can be

decomposed into two or more sub-problems,

together with linear constraints that enforce

some notion of agreement between solutions to

the different problems.

The sub-problems are chosen such that they can

be solved efficiently using exact combinatorial

algorithms.

Properties

Dual decomposition algorithms are typically simple and efficient.

They have well-understood formal properties, in

particular through connections to linear

programming relaxations.

In cases where the underlying LP relaxation is

tight, they produce an exact solution to the

original decoding problem, with a certificate of

optimality.

Dual Decomposition

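Assume some finite sets $\gamma \subset \mathbb{R}^{d}$ and $Z \subset \mathbb{R}^{d'}$. Each vector y ∈ γ and z ∈ Z has an associated score

$$f(y) = y \cdot \theta^{(1)}, \qquad g(z) = z \cdot \theta^{(2)}$$

where $\theta^{(1)}$ is a vector in $\mathbb{R}^{d}$ and $\theta^{(2)}$ is a vector in $\mathbb{R}^{d'}$.

The decoding problem is then to find

$$\arg\max_{y \in \gamma,\ z \in Z} \; y \cdot \theta^{(1)} + z \cdot \theta^{(2)}$$

such that $Ay + Cz = b$

where $A \in \mathbb{R}^{p \times d}$, $C \in \mathbb{R}^{p \times d'}$, $b \in \mathbb{R}^{p}$.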

Section 4:

Optimization of the Finite State Tagger

x: an input sentence

y: a parse tree

γ : the set of all parse trees for x

h(y): the score for any parse tree y ∈ γ

We want to find: $y^* = \arg\max_{y \in \gamma} h(y)$

How to decide h(y)

First, find the score for y under a weighted context-free grammar.

Second, find the score for the part-of-speech (POS) sequence in y under a finite-state part-of-speech tagging model.

h(y)=f(y)+g(l(y))

f(y) is the score for y under a weighted context-free grammar (WCFG).

A WCFG consists of a context-free grammar with a set of rules G and a scoring function θ: G → R that assigns a real-valued score to each rule in G.

f(y) = θ(S → NP VP) + θ(NP → N) + θ(N → United) + θ(VP → V NP) + …

The exact definition of θ is not important here. For example:

θ(a → b) = log p(a → b) in a probabilistic context-free grammar

θ(a → b) = w·φ(a → b) in a conditional random field
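As a minimal sketch of how f(y) is computed, the snippet below sums rule scores over the rules used in a parse tree. The representation of a tree as a flat list of rules, the name `wcfg_score`, and the numeric scores are all assumptions made for illustration; they do not come from the slides.

```python
# Hypothetical rule scores theta for a toy grammar (values are made up).
theta = {
    ("S", ("NP", "VP")): -0.5,
    ("NP", ("N",)): -0.25,
    ("N", ("United",)): -1.0,
    ("VP", ("V", "NP")): -0.75,
}

def wcfg_score(rules, theta):
    """Return f(y): the sum of theta over the rules used in parse tree y."""
    return sum(theta[rule] for rule in rules)

# A parse tree is represented here simply as the list of rules it contains.
rules_in_y = [
    ("S", ("NP", "VP")),
    ("NP", ("N",)),
    ("N", ("United",)),
    ("VP", ("V", "NP")),
]
f_y = wcfg_score(rules_in_y, theta)
```

With log-probabilities as scores, this sum would be the log-probability of the tree under a PCFG.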

h(y)=f(y)+g(l(y))

l(y) is a function that maps a parse tree y

to the sequence of part-of-speech tags in y

l(y)= N V D A N

h(y)=f(y)+g(l(y))

g(z) is the score for the part-of-speech tag sequence z under a k’th-order finite-state tagging model. Under this model

$$g(z) = \sum_{i=1}^{n} \theta(i, z_{i-k}, z_{i-k+1}, \ldots, z_{i})$$

where $\theta(i, z_{i-k}, \ldots, z_{i})$ is the score for the sub-sequence of tags ending at position i in the sentence.
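The sum above can be sketched as follows. The function name `tagger_score`, the start-symbol padding for positions before the sentence, and the bigram scores are assumptions of this example, not part of the original model description.

```python
def tagger_score(z, theta, k=1, start="<s>"):
    """Return g(z) = sum_i theta(i, z_{i-k}, ..., z_i) for a k'th-order model.

    Positions before the start of the sentence are padded with a start
    symbol, which is an assumption of this sketch.
    """
    padded = [start] * k + list(z)
    total = 0.0
    for i in range(1, len(z) + 1):
        window = tuple(padded[i - 1 : i + k])  # (z_{i-k}, ..., z_i)
        total += theta(i, window)
    return total

# Hypothetical first-order (bigram) scores; unseen bigrams score 0.
bigram = {("<s>", "N"): 1.0, ("N", "V"): 2.0, ("V", "D"): 0.5}

def theta(i, window):
    return bigram.get(window, 0.0)

g_z = tagger_score(["N", "V", "D", "A", "N"], theta)
```

Here the score ignores the position i, but the signature keeps it so that position-dependent scores (for example, scores that look at the word at position i) fit the same interface.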

Motivation

The scoring function h(y) = f(y) + g(l(y)) combines information from both the parsing model and the tagging model.

The POS tagger captures information about adjacent POS tags that will be missing under f(y).

This information may improve both parsing and tagging performance, in comparison to using f(y) alone.

The dual decomposition algorithm

Define T to be the set of all POS tags.

Assume the input sentence has n words.

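$$y(i,t) = \begin{cases} 1 & \text{if parse tree } y \text{ has tag } t \text{ at position } i \\ 0 & \text{otherwise} \end{cases}$$

$$z(i,t) = \begin{cases} 1 & \text{if the tag sequence } z \text{ has tag } t \text{ at position } i \\ 0 & \text{otherwise} \end{cases}$$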
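A small sketch of these indicator variables, assuming a tag sequence is given as a plain list (for a parse tree, this would be the sequence l(y) read off the tree). The helper name `indicators` and the two example sequences are hypothetical.

```python
def indicators(tags, tagset):
    """Map a tag sequence to {(i, t): 0 or 1}, with positions i starting at 1."""
    return {(i, t): int(tag == t)
            for i, tag in enumerate(tags, start=1)
            for t in tagset}

T = ["N", "V", "D", "A"]
y_ind = indicators(["N", "V", "D", "A", "N"], T)  # tags read off a parse tree
z_ind = indicators(["N", "V", "D", "N", "N"], T)  # tags proposed by a tagger

# Pairs (i, t) where the two structures disagree.
disagreements = [key for key in y_ind if y_ind[key] != z_ind[key]]
```

The two sequences differ only at position 4, so exactly two indicator pairs disagree: one for tag A and one for tag N.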

Find

$$\arg\max_{y \in \gamma,\ z \in Z} \; f(y) + g(z)$$

such that for all i ∈ {1…n}, for all t ∈ T, y(i,t) = z(i,t)

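Assumption 1

Assume that we introduce variables u(i,t) ∈ R for i ∈ {1…n} and t ∈ T. We assume that for any value of these variables, we can find

$$\arg\max_{y \in \gamma} \Big( f(y) + \sum_{i,t} u(i,t)\, y(i,t) \Big)$$

efficiently.

Assumption 2

We assume that for any value of these variables, we can also find

$$\arg\max_{z \in Z} \Big( g(z) - \sum_{i,t} u(i,t)\, z(i,t) \Big)$$

efficiently.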

The dual decomposition algorithm

for integrated parsing and tagging

Initialization: Set $u^{(0)}(i,t) = 0$ for all i ∈ {1…n}, t ∈ T

For k = 1 to K

$y^{(k)} \leftarrow \arg\max_{y \in \gamma} \big( f(y) + \sum_{i,t} u^{(k-1)}(i,t)\, y(i,t) \big)$ [Parsing]

$z^{(k)} \leftarrow \arg\max_{z \in Z} \big( g(z) - \sum_{i,t} u^{(k-1)}(i,t)\, z(i,t) \big)$ [Tagging]

If $y^{(k)}(i,t) = z^{(k)}(i,t)$ for all i, t: Return $(y^{(k)}, z^{(k)})$

Else $u^{(k)}(i,t) \leftarrow u^{(k-1)}(i,t) - \delta_k \big( y^{(k)}(i,t) - z^{(k)}(i,t) \big)$

δk for k = 1…K is the step size at the k’th iteration.
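The loop can be sketched on a toy problem. In this sketch both structures are simply tag tuples, the two argmax steps are done by brute force over small explicit candidate lists (standing in for the efficient parsing and tagging algorithms the slides assume), positions are 0-based, and the step size is fixed to 1/k. The function name `dual_decompose` and all scores are hypothetical.

```python
from itertools import product

def dual_decompose(Y, Z, f, g, T, n, K=50):
    """Subgradient loop for max f(y) + g(z) subject to y(i,t) = z(i,t)."""
    u = {(i, t): 0.0 for i in range(n) for t in T}
    y = z = None
    for k in range(1, K + 1):
        # For a tag tuple s, sum_{i,t} u(i,t) s(i,t) is the sum of u over its own tags.
        y = max(Y, key=lambda s: f(s) + sum(u[(i, t)] for i, t in enumerate(s)))
        z = max(Z, key=lambda s: g(s) - sum(u[(i, t)] for i, t in enumerate(s)))
        if y == z:
            return y, z, k, True          # agreement: provably optimal
        for i in range(n):
            for t in T:
                u[(i, t)] -= (1.0 / k) * ((y[i] == t) - (z[i] == t))
    return y, z, K, False                 # no agreement within K iterations

T = ("N", "V")
candidates = list(product(T, repeat=2))
# Made-up scores: f alone prefers (N, N), g alone prefers (N, V).
f_scores = {("N", "N"): 3.0, ("N", "V"): 2.5, ("V", "N"): 0.0, ("V", "V"): 0.0}
g_scores = {("N", "N"): 1.0, ("N", "V"): 2.0, ("V", "N"): 0.0, ("V", "V"): 0.0}

y, z, k, converged = dual_decompose(candidates, candidates,
                                    f_scores.get, g_scores.get, T, n=2)
```

In this toy instance the two models disagree at first, and the multipliers shift score between them until both select ("N", "V"), which has the best combined score 2.5 + 2.0 = 4.5.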

Relationship to decoding problem

The set γ is the set of all parses for the input sentence

The set Z is the set of all POS sequences for the input sentence.

Each parse tree y ∈ γ is represented as a vector in $\mathbb{R}^{d}$ such that $f(y) = y \cdot \theta^{(1)}$ for some $\theta^{(1)} \in \mathbb{R}^{d}$

Each tag sequence z ∈ Z is represented as a vector in $\mathbb{R}^{d'}$ such that $g(z) = z \cdot \theta^{(2)}$ for some $\theta^{(2)} \in \mathbb{R}^{d'}$

The constraints

y(i,t) = z(i,t)

for all (i,t) can be encoded through linear constraints

Ay + Cz = b

for suitable choices of A, C and b, assuming that the vectors y and z include components y(i,t) and z(i,t) respectively.

Section 5:

Formal Properties of the Finite State Tagger

Recall

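The problem we are optimizing:

$$\arg\max_{y \in \gamma,\ z \in Z} \; f(y) + g(z) \quad \text{such that } y(i,t) = z(i,t) \text{ for all } i \in \{1 \ldots n\},\ t \in T$$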

Lagrangian Relaxation and Optimization

We first define a Lagrangian eq. for the optimization.

Next, from this we derive a dual objective.

Finally, we must minimize the dual objective.

(We do this with sub-gradient optimization)

Creation of the Dual Objective

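Find the Lagrangian:

Introduce a Lagrange multiplier u(i, t) for each constraint y(i, t) = z(i, t)

From the equality constraints we get:

$$L(u, y, z) = f(y) + g(z) + \sum_{i,t} u(i,t)\,\big( y(i,t) - z(i,t) \big)$$

Next we regroup the terms by y and z, and maximize over both, to get the dual objective:

$$L(u) = \max_{y \in \gamma} \Big( f(y) + \sum_{i,t} u(i,t)\, y(i,t) \Big) + \max_{z \in Z} \Big( g(z) - \sum_{i,t} u(i,t)\, z(i,t) \Big)$$

Minimization of the Lagrangian (1)

We need to minimize the dual objective:

$$\min_{u} L(u)$$

Why? We see that for any value of u:

$$L(u) \ge f(y^*) + g(z^*)$$

The proof is elementary given the equality constraint:

$$L(u) = \max_{y, z} L(u, y, z) \;\ge\; \max_{y, z:\ y(i,t) = z(i,t)} L(u, y, z) \;=\; \max_{y, z:\ y(i,t) = z(i,t)} f(y) + g(z) \;=\; f(y^*) + g(z^*)$$

This is because if y(i, t) = z(i, t) for all (i, t) we have:

$$\sum_{i,t} u(i,t)\,\big( y(i,t) - z(i,t) \big) = 0$$

Therefore, our goal is to minimize the dual objective: every value L(u) is an upper bound on the optimal score f(y*) + g(z*), and the minimum gives the tightest such bound.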

Minimizing the Lagrangian (2)

Recall the algorithm introduced in Section 4:

(The algorithm for dual decomposition)

The algorithm converges to the tightest possible upper bound given by the dual. This is stated by the following theorem: given step sizes that satisfy

$$\lim_{k \to \infty} \delta_k = 0 \quad \text{and} \quad \sum_{k=1}^{\infty} \delta_k = \infty$$

we end up with (as the number of iterations goes to infinity)

$$\lim_{k \to \infty} L(u^{(k)}) = \min_{u} L(u)$$

Given infinite iterations, the dual is therefore guaranteed to d-converge.

This is actually a sub-gradient minimization method, which we return to later in this section.

Minimizing the Lagrangian (3)

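From the previous slide, we can define these two functions:

$$y^{(u)} = \arg\max_{y \in \gamma} \Big( f(y) + \sum_{i,t} u(i,t)\, y(i,t) \Big), \qquad z^{(u)} = \arg\max_{z \in Z} \Big( g(z) - \sum_{i,t} u(i,t)\, z(i,t) \Big)$$

So, if there exists some u such that

$$y^{(u)}(i,t) = z^{(u)}(i,t)$$

for all (i, t), then

$$f(y^{(u)}) + g(z^{(u)}) = f(y^*) + g(z^*)$$

(or, equivalently, $(y^{(u)}, z^{(u)})$ is optimal).

The proof of this is also relatively simple. From the definitions of $y^{(u)}$ and $z^{(u)}$:

$$L(u) = f(y^{(u)}) + g(z^{(u)}) + \sum_{i,t} u(i,t)\,\big( y^{(u)}(i,t) - z^{(u)}(i,t) \big) = f(y^{(u)}) + g(z^{(u)})$$

because, once again, $y^{(u)}(i,t) = z^{(u)}(i,t)$ for all (i, t).

We also know that $L(u) \ge f(y^*) + g(z^*)$ for all u, which implies:

$$f(y^{(u)}) + g(z^{(u)}) \ge f(y^*) + g(z^*)$$

However, because y* and z* are optimal, we also see:

$$f(y^{(u)}) + g(z^{(u)}) \le f(y^*) + g(z^*)$$

Therefore we have proven that the two sides are equal.

The algorithm is said to e-converge under these circumstances because we have found an iteration that reaches the optimum exactly, bounding it from above and below.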

D-convergence vs. E-convergence

D-convergence occurs in the first case.

D-convergence is guaranteed by dual decomposition provided infinite iterations.

Short for 'dual convergence'.

It refers to the convergence of the dual value to its minimum:

($\lim_{k \to \infty} L(u^{(k)}) = \min_{u} L(u)$).

E-convergence occurs in the latter case.

E-convergence is not guaranteed by dual decomposition.

Short for 'exact convergence'.

It refers to convergence to a point where ALL agreement constraints hold.

(Which implies that a guaranteed optimal solution has been found)

Sub-gradient Optimization

What are sub-gradient algorithms?

Algorithms used to minimize convex functions, including functions that are not differentiable everywhere.

They generalize gradient descent: where the gradient does not exist, a sub-gradient takes its place.

They take iterative steps in the direction opposite to a sub-gradient at the current point. For a convex function, any local minimum found this way is also a global minimum.

[Figure: illustration of gradient descent. Credits to: http://en.wikipedia.org/wiki/Gradient_descent ]

The 'zig-zag' nature of the algorithm is obvious here.

Sub-gradient Applications

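Recall the dual objective (and the goal to minimize it):

$$L(u) = \max_{y \in \gamma} \Big( f(y) + \sum_{i,t} u(i,t)\, y(i,t) \Big) + \max_{z \in Z} \Big( g(z) - \sum_{i,t} u(i,t)\, z(i,t) \Big)$$

We notice two things about this function:

It is a convex function. Formally defined, for all u, v and all λ ∈ [0, 1]:

$$L(\lambda u + (1 - \lambda) v) \le \lambda L(u) + (1 - \lambda) L(v)$$

It is non-differentiable (it is a piecewise linear function).

Because it can't be differentiated everywhere, we can't use plain gradient descent.

However, because it is a convex function, we can use a sub-gradient algorithm.

This is the property that allows us to minimize the dual efficiently.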
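To illustrate the idea on something smaller than the dual itself, here is a sketch of sub-gradient descent on a one-dimensional convex piecewise linear function, written as a maximum of linear pieces (the same shape the dual has). The function name, the example pieces, the starting point and the 1/k step size are assumptions of this example.

```python
def subgradient_descent(pieces, u0=5.0, iterations=200):
    """Minimize L(u) = max_j (a_j * u + b_j), a convex piecewise linear function.

    At each point, the slope of a maximizing piece is a valid sub-gradient.
    The best value seen is tracked because the iterates are not monotone.
    """
    u, best_u, best_val = u0, u0, float("inf")
    for k in range(1, iterations + 1):
        val, slope = max((a * u + b, a) for a, b in pieces)
        if val < best_val:
            best_u, best_val = u, val
        u -= (1.0 / k) * slope  # diminishing step size
    return best_u, best_val

# L(u) = max(-u, 2u - 3): the pieces cross at u = 1, where L(u) = -1.
pieces = [(-1.0, 0.0), (2.0, -3.0)]
best_u, best_val = subgradient_descent(pieces)
```

The iterates overshoot the kink at u = 1 from both sides, which is the zig-zag behaviour mentioned earlier; the shrinking step size makes the overshoot smaller over time.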

Section 6:

Additional Applications of Dual Decomposition

Applications of Dual Decomposition

Where are dual decomposition and Lagrangian relaxation useful?

Various NP-hard problems such as (as the paper suggests):

Language translation.

Routing problems or Traveling Salesman problems.

Syntactic parsing based on complex CFGs.

The MAP problem.

Each of these applications will be covered in the following segment.

Other examples of hard combinatorial problems that can be approached with dual decomposition:

Minimum degree/leaf spanning tree

Steiner tree problem

Chinese postman problem

Vehicle routing problem

Bipartite matching

Many other problems can be found on Wikipedia*:

http://en.wikipedia.org/wiki/List_of_NP-complete_problems

* Some of the problems on that list are not applicable (just be wary when researching, use your brain!)

Example 1: The MAP Problem

As this was covered by the previous group we will not discuss it in detail.

The goal was to find the optimization of:

We break the problem up into easier sub-problems (sub-trees of the main graph).

We then solve these 'easier' problems using an iterative sub-gradient algorithm.

This is doable because the dual is convex.

The sub-gradient algorithm for any given iteration (k):

Example 2: Traveling Salesman Problems

The Traveling Salesman Problem (TSP) is one of the most famous NP-hard problems of all time:

The goal is to find the maximum scoring tour, that is, a route that visits every node exactly once.

More formally stated it is the optimization of this problem:

$$\arg\max_{y \in Y} \sum_{e \in E} y_e\, \theta_e$$

Given that:

Y is the set of all possible tours.

E is the set of all edges.

θ_e is the weight of edge e.

y_e is 1 iff e is in the tour, 0 otherwise.

Held & Karp made this an easy problem to solve (well, “easy”).

(TSP) Held & Karp's idea

The idea of a 1-tree:

A 1-tree is a spanning tree over vertices 2…n plus two edges that include vertex 1, so it contains a single cycle.

Most importantly, notice how any possible route of the TSP is a 1-tree.

How we can use this information:

Alter the problem to be:

$$\arg\max_{y \in Y'} \sum_{e \in E} y_e\, \theta_e$$

where Y' is the set of all possible 1-trees.

(Hence, Y is a subset of Y' b/c all tours are 1-trees)

This problem is easy to solve: the first step is to find the maximum scoring spanning tree over vertices 2…n.

And the second is to add the two highest scoring edges that include vertex 1.

Lastly, we add constraints on all vertices in the form of the following:

$$\sum_{e:\ i \in e} y_e = 2 \quad \text{for all } i \in \{1, \ldots, n\}$$

This constrains the problem to 1-trees that have exactly 2 edges per vertex.

(This is the equivalent to a route and, when optimized, the best route)
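The two-step construction of a maximum scoring 1-tree can be sketched as below, followed by a check of the 2-edges-per-vertex constraint. The sketch assumes a complete graph with at least three vertices, uses a simple form of Prim's algorithm for the spanning tree, and the edge weights are made up.

```python
from collections import Counter

def max_one_tree(n, weight):
    """Return the edges of a maximum scoring 1-tree on vertices 1..n.

    weight maps frozenset({i, j}) to a score; the graph is assumed complete.
    """
    def w(i, j):
        return weight[frozenset((i, j))]

    # Step 1: maximum spanning tree over vertices 2..n (Prim's algorithm).
    in_tree, edges = {2}, []
    while len(in_tree) < n - 1:
        i, j = max(((a, b) for a in in_tree for b in range(2, n + 1)
                    if b not in in_tree), key=lambda e: w(*e))
        in_tree.add(j)
        edges.append((i, j))
    # Step 2: add the two highest scoring edges that include vertex 1.
    best_two = sorted(range(2, n + 1), key=lambda j: w(1, j), reverse=True)[:2]
    return edges + [(1, j) for j in best_two]

n = 4
weight = {frozenset(e): s for e, s in {
    (1, 2): 5, (1, 3): 1, (1, 4): 4, (2, 3): 3, (2, 4): 2, (3, 4): 6}.items()}
tree = max_one_tree(n, weight)

# A 1-tree is a tour exactly when every vertex has 2 edges.
degree = Counter(v for e in tree for v in e)
is_tour = all(degree[v] == 2 for v in range(1, n + 1))
```

For these weights the best 1-tree happens to satisfy the degree constraints already, so it is also the best tour. In general it will not, which is where the Lagrange multipliers come in.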

(TSP) Getting to the Solution

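Next, we introduce the following Lagrangian:

$$L(u, y) = \sum_{e \in E} y_e\, \theta_e + \sum_{i=1}^{n} u_i \Big( \sum_{e:\ i \in e} y_e - 2 \Big)$$

(where the $u_i$ are the Lagrange multipliers, one for each 2-edges-per-vertex constraint).

And define the dual objective as:

$$L(u) = \max_{y \in Y'} L(u, y)$$

To finally end up with the following as our sub-gradient step:

$$u_i^{(k)} = u_i^{(k-1)} - \delta_k \Big( \sum_{e:\ i \in e} y_e^{(k)} - 2 \Big)$$

If the constraints hold for $y^{(k)}$, the algorithm terminates with an exact solution (e-convergence). Otherwise, the next iteration re-solves the 1-tree problem with the edge weights adjusted by the Lagrange multipliers (not shown).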

Example 3: Phrase Based Translation

Phrase-based translation is a scheme to translate sentences from one language to another based on the scoring of certain phrases within a sentence.

It takes in an input sentence in the first language.

It pairs sequences of words in the first language with candidate sequences of words in the target language.

For example, (1, 3, we must also) means that words 1, 2 and 3 in the input could be translated as “we must also”.

Then, once we have a potential translation (all words mapped), we score it.

The scoring function for any sequence of potential tuple translations is:

Where g(y) is the log-probability of y under an n-gram language model.

(PBT) Constraining the Equation

First, we must find a constraint for the function.

The constraint ensures that any correct translation translates each word in the input exactly 1 time in the output.

Defining this formally, we introduce y(i) to represent the number of times word i is translated in the output, that is, the number of phrases (s, t, e) in y with s ≤ i ≤ t.

So, intuitively, the constraint would become:

$$Y = \{\, y \in P^* : y(i) = 1 \text{ for all } i \in \{1, \ldots, n\} \,\}$$

where P* is the set of all finite phrase sequences.

So, the goal becomes to maximize the score as follows (writing f(y) for the scoring function):

$$\arg\max_{y \in Y} f(y)$$
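The counts y(i) are easy to compute from a phrase sequence. The sketch below assumes phrases are tuples (s, t, e) with 1-based inclusive source positions, as in the (1, 3, we must also) example; the function name and the target string "hypothetical phrase" are placeholders, not real translations.

```python
def translation_counts(phrases, n):
    """Return [y(1), ..., y(n)]: how many times each source word is translated.

    Each phrase is a tuple (s, t, e) covering source words s..t (1-based, inclusive).
    """
    counts = [0] * n
    for s, t, _ in phrases:
        for i in range(s, t + 1):
            counts[i - 1] += 1
    return counts

n = 6
# Covers every source word exactly once: satisfies y(i) = 1 for all i.
valid = [(1, 3, "we must also"), (4, 6, "hypothetical phrase")]
# Translates words 1..3 twice and words 4..6 never.
invalid = [(1, 3, "we must also"), (1, 3, "we must also")]

valid_counts = translation_counts(valid, n)
invalid_counts = translation_counts(invalid, n)
```

The second sequence still translates six words in total, which is why a check on the total alone is weaker than the per-word constraint.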

(PBT) Relaxation and Solution

The relaxation for this algorithm would be:

Instead of solving the NP-hard problem directly, we find a set Y' such that Y is a subset of Y’ and maximizing over Y' is tractable.

The relaxation is based on:

$$Y' = \Big\{\, y \in P^* : \sum_{i=1}^{n} y(i) = n \,\Big\}$$

(In other words, the relaxation only checks that n source words are translated in total, not that each word is translated exactly once)

So, our goal is to ensure that the constraint y(i) = 1 is met for all i.

Thus, we introduce Lagrange multipliers u(i) for each constraint, giving us the Lagrangian (writing f(y) for the scoring function):

$$L(u, y) = f(y) + \sum_{i=1}^{n} u(i)\,\big( y(i) - 1 \big)$$

From this, we set u(i) = 0 initially to start the iteration, and then take the sub-gradient step:

$$u^{(k)}(i) = u^{(k-1)}(i) - \delta_k \big( y^{(k)}(i) - 1 \big)$$

When y(i) = 1 for all i ∈ {1,...,n}, the sub-gradient is zero and the solution is optimal.

Then, as in the TSP example, each iteration re-scores the phrases with the Lagrange multipliers. Until the constraints hold, the solution found is a member of Y' and not Y.

Section 7:

Practical Issues of Dual Decomposition

Practical Issues

Each iteration of the algorithm produces a number

of useful terms:

y(k) and z(k).

The current dual value L(u(k))

When we have a function l:γ->Z that maps

each structure y∈γ to a structure l(y)∈Z ,

we also have

A primal solution y(k) , l(y(k))

A primal value f(y(k))+g(l(y(k)))

An Example run of the algorithm

Because L(u) provides an upper bound on f(y*)+g(z*) for any value of u, we have

L(u(k)) >= f(y(k)) + g(l(y(k)))

at every iteration.

We have e-convergence to an exact solution when L(u(k)) = f(y(k)) + g(z(k)), with (y(k), z(k)) guaranteed to be optimal.

The dual values L(u(k)) are not monotonically decreasing.

The primal value f(y(k)) + g(l(y(k))) fluctuates.

Useful quantities

$L(u^{(k)}) - L(u^{(k-1)})$ is the change in the dual value from one iteration to the next.

$L^*_k = \min_{k' \le k} L(u^{(k')})$ is the best dual value found so far.

$p^*_k = \max_{k' \le k} f(y^{(k')}) + g(l(y^{(k')}))$ is the best primal value found so far.

$L^*_k - p^*_k$ is the gap between the best dual and best primal solution found so far by the algorithm.
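These running quantities can be tracked with a few lines of code. The sketch below assumes the dual and primal values of each iteration have already been recorded in two lists; the function name and the numbers are made up for illustration.

```python
def track_gap(dual_values, primal_values):
    """Return (L*_k, p*_k, L*_k - p*_k) for every iteration k."""
    history, best_dual, best_primal = [], float("inf"), float("-inf")
    for dual, primal in zip(dual_values, primal_values):
        best_dual = min(best_dual, dual)        # best (lowest) dual so far
        best_primal = max(best_primal, primal)  # best (highest) primal so far
        history.append((best_dual, best_primal, best_dual - best_primal))
    return history

duals = [10.0, 8.0, 9.0, 7.5]    # hypothetical values of L(u^(k))
primals = [5.0, 7.0, 6.0, 7.5]   # hypothetical values of f(y^(k)) + g(l(y^(k)))
history = track_gap(duals, primals)
```

A gap of zero means the best primal solution found so far is provably optimal; a small positive gap bounds how far it can be from optimal, which is useful when stopping early.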

Choice of the step sizes δk

$$\delta_k = \frac{c}{t + 1}$$

c > 0 is a constant

t is the number of iterations prior to k where the dual value increases
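A minimal sketch of this step size rule, assuming the dual values observed before iteration k are kept in a list. The function name and the example values are hypothetical.

```python
def step_size(dual_history, c=1.0):
    """Return c / (t + 1), where t counts how often the dual value increased so far."""
    t = sum(1 for prev, cur in zip(dual_history, dual_history[1:]) if cur > prev)
    return c / (t + 1)

first = step_size([])                             # no history yet: t = 0
later = step_size([10.0, 8.0, 9.0, 7.5, 7.6])     # two increases: t = 2
```

The step size only shrinks when the dual value goes up, so the algorithm keeps taking large steps for as long as they keep improving the bound.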

[Figure: the dual value L(u) versus the number of iterations k, for different fixed step sizes.]

Small step size: convergence is smooth

Large step size: the dual fluctuates erratically

Recovering Approximate Solutions

Early Stopping

Section 8:

Linear Programming Relaxations

(A Basic Introduction & Summary)

Recall

The equations from Sections 3, 4 and 5:

Defining the LP Relaxation

We first define two sets, one for y the other for z:

From this, we can define the new optimization to be:

This optimization is now a Linear Program.

Next, we would define y' (and z' in a similar manner) as:

Both sets are simplexes, corresponding to the sets of probability distributions over γ and over Z respectively.

Such that y is a subset of y' (Sounds familiar, right?)

So, finally we get the optimization problem to be:

Defining the LP Relaxation (2)

Finally, we end up with the Lagrangian (Note that it is identical to the one in section 5):

The dual objective is also rather similar:

We notice that all of these linear programming principles are VERY close to the Lagrangian relaxation that we see in dual decomposition.

In addition, tighter linear programs are often solved to obtain e-convergence for dual decomposition algorithms.

Because e-convergence is not guaranteed, linear programs can often 'fix' the problems where dual decomposition only d-converges.

We can do this by constraining the linear program further.

Summary of LP Relaxation

The dual L(u) from Sections 4 and 5 is the same as the dual for the LP relaxation M(u, a, b).

Tightening the LP relaxation is used as a method to improve convergence.

It does this by selectively adding more constraints.

LP solvers exist and therefore LP problems can be automatically solved by software.

This can be useful for debugging hard dual decomposition problems.

Section 9:

Conclusion

Conclusion

Dual Decomposition...

Is useful in solving various machine learning problems.

Is useful in solving various inference / statistical NLP problems.

Uses combinatorial algorithms combined with constraints.

Lagrangian Relaxation...

Introduces constraints using Lagrange multipliers.

Iterative methods are then used to solve the resulting LR.

The dual objective always gives an upper bound on the optimal score.

Is similar to linear programming relaxation.

(Most importantly) has the potential to produce the exact solution.

Therefore, dual decomposition and LR can often produce optimal solutions more efficiently than many exact methods or other approximation methods.

Questions?

Perhaps… Answers?