116_Presentation for CS 257 (006530706)

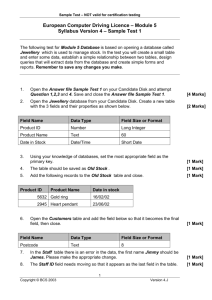

PRESENTATION FOR

CS 257

DATABASE PRINCIPLES

1

Submitted to : Dr T.Y Lin

Prepared By: Ronak Shah(116)

(006530706)

CHAPTERS COVERED

Chapter 13. Secondary storage

Chapter 15. Query execution

Chapter 16. Query compiler

Chapter 18. Concurrency control

Chapter 21. Information integration

2

CHAPTER 13

SECONDARY STORAGE

13.1 Secondary Storage Management

13.2 Disks

13.3 Accelerating Access to Secondary Storage

13.4 Recovery from Disk Crashes

13.5 Arranging data on disk

13.6 Representing Block and Record Addresses

13.7 Variable length data and record

13.8 Record Modification

3

13.1 SECONDARY STORAGE

MANAGEMENT

Database systems always involve secondary storage: disks and other devices that store large amounts of data that persist over time.

4

MEMORY HIERARCHY

A typical computer system has several different

components in which data may be stored.

These components have data capacities ranging

over at least seven orders of magnitude and also

have access speeds ranging over seven or more

orders of magnitude.

5

DIAGRAM OF MEMORY

HIERARCHY

6

CACHE MEMORY

The lowest level of the hierarchy is a cache. On-board cache is found on the same chip as the microprocessor itself, and additional level-2 cache is found on another chip.

Data and instructions are moved to cache

from main memory when they are needed

by the processor.

Cache data can be accessed by the

processor in a few nanoseconds.

7

MAIN MEMORY

At the center of the action is the computer's main memory. We may think of everything that happens in the computer (instruction executions and data manipulations) as working on information that is resident in main memory.

Typical times to move data from main memory to the processor or cache are in the 10-100 nanosecond range.

8

SECONDARY STORAGE

Essentially every computer has some sort of

secondary storage, which is a form of storage that

is both significantly slower and significantly

more capacious than main memory.

The time to transfer a single byte between disk

and main memory is around 10 milliseconds.

9

TERTIARY STORAGE

As capacious as a collection of disk units can be, there are databases much larger than what can be stored on the disk(s) of a single machine, or even of a substantial collection of machines.

Tertiary storage devices have been

developed to hold data volumes measured

in terabytes.

Tertiary storage is characterized by

significantly higher read/write times than

secondary storage, but also by much

larger capacities and smaller cost per byte

than is available from magnetic disks.

10

TRANSFER OF DATA BETWEEN

LEVELS

Normally, data moves between adjacent

levels of the hierarchy.

At the secondary and tertiary levels, accessing the desired data or finding the desired place to store data takes a great deal of time, so each level is organized to transfer large amounts of data to or from the level below whenever any data at all is needed.

The disk is organized into disk blocks, and entire blocks are moved to or from a contiguous section of main memory called a buffer.

11

VOLATILE AND NONVOLATILE

STORAGE:

A volatile device "forgets" what is stored in it when the power goes off.

A nonvolatile device, on the other hand, is

expected to keep its contents intact even

for long periods when the device is turned

off or there is a power failure.

Magnetic and optical materials hold their

data in the absence of power. Thus,

essentially all secondary and tertiary

storage devices are nonvolatile. On the

other hand main memory is generally

volatile.

12

VIRTUAL MEMORY

When we write programs, the data we use (variables of the program, files read, and so on) occupies a virtual memory address space.

Many machines use a 32-bit address space; that is, there are 2^32 bytes, or 4 gigabytes.

The Operating System manages virtual

memory, keeping some of it in main

memory and the rest on disk.

Transfer between memory and disk is in

units of disk blocks

13

13.2 DISKS

The use of secondary storage is one of the

important characteristics of a DBMS, and

secondary storage is almost exclusively based on

magnetic disks

14

MECHANICS OF DISKS

The two principal moving pieces of a disk drive are a disk assembly and a head assembly.

The disk assembly consists of one or more circular platters that rotate around a central spindle.

The upper and lower surfaces of the

platters are covered with a thin layer of

magnetic material, on which bits are

stored.

15

DIAGRAM FOR DISK MANAGEMENT

16

0’S AND 1’S ARE REPRESENTED BY DIFFERENT PATTERNS IN THE MAGNETIC MATERIAL.

A COMMON DIAMETER FOR THE DISK PLATTERS IS 3.5 INCHES. THE DISK IS ORGANIZED INTO TRACKS, WHICH ARE CONCENTRIC CIRCLES ON A SINGLE PLATTER. THE TRACKS THAT ARE AT A FIXED RADIUS FROM THE CENTER, AMONG ALL THE SURFACES, FORM ONE CYLINDER.

TRACKS ARE ORGANIZED INTO SECTORS, WHICH ARE SEGMENTS OF THE CIRCLE SEPARATED BY GAPS THAT ARE NOT MAGNETIZED TO REPRESENT EITHER 0’S OR 1’S.

THE SECOND MOVABLE PIECE, THE HEAD ASSEMBLY, HOLDS THE DISK HEADS.

17

THE DISK CONTROLLER

One or more disk drives are controlled by a disk

controller, which is a small processor capable of:

Controlling the mechanical actuator that moves the

head assembly to position the heads at a particular

radius.

Transferring bits between the desired sector and the

main memory.

Selecting a surface from which to read or write, and

selecting a sector from the track on that surface that is

under the head.

18

An example of a single-processor system is shown on the next slide.

SIMPLE COMPUTER ARCHITECTURE

SYSTEM

19

DISK ACCESS

CHARACTERISTICS

Seek Time: The disk controller positions the head

assembly at the cylinder containing the track on

which the block is located. The time to do so is

the seek time.

Rotational Latency: The disk controller waits

while the first sector of the block moves under

the head. This time is called the rotational

latency.

Transfer Time: All the sectors and the gaps

between them pass under the head, while the

disk controller reads or writes data in these

sectors. This delay is called the transfer time.

20

The sum of the seek time, rotational latency, and transfer time is the latency of the disk.

13.3 ACCELERATING ACCESS TO

SECONDARY STORAGE

Several approaches for more-efficiently

accessing data in secondary storage:

Place blocks that are accessed together on the same cylinder.

Divide the data among multiple disks.

Mirror disks.

Use disk-scheduling algorithms.

Prefetch blocks into main memory.

Scheduling Latency – added delay in accessing data caused by a disk-scheduling algorithm.

Throughput – the number of disk accesses per second that the system can accommodate.

THE I/O MODEL OF COMPUTATION

The number of block accesses (disk I/O’s) is a good approximation of the running time of an algorithm, so it should be minimized.

Ex 13.3: You want to have an index on R to

identify the block on which the desired tuple

appears, but not where on the block it resides.

For the Megatron 747 (M747) example, it takes 11ms to read a 16K block.

A standard microprocessor can execute millions of instructions in 11ms, making any delay in searching for the desired tuple within the block negligible.

22

ORGANIZING DATA BY CYLINDERS

If we read all blocks on a single track or

cylinder consecutively, then we can neglect all

but first seek time and first rotational latency.

Ex 13.4: We request 1024 blocks of M747.

If data is randomly distributed, average latency is 10.76ms by Ex 13.2, making total latency about 11s.

If all blocks are stored consecutively on 1 cylinder:

6.46ms (1 average seek) + 8.33ms (time per rotation) * 16 (# rotations) ≈ 139.7ms

23

USING MULTIPLE DISKS

If we have n disks, read/write performance can increase by up to a factor of n.

Striping – distributing a relation across multiple

disks following this pattern:

Data on disk R1: R1, R1+n, R1+2n,…

Data on disk R2: R2, R2+n, R2+2n,…

…

Data on disk Rn: Rn, Rn+n, Rn+2n, …

Ex 13.5: We request 1024 blocks with n = 4.

6.46ms (1 average seek) + 8.33ms (time per rotation) * (16/4) (# rotations) ≈ 39.8ms
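The arithmetic in Ex 13.4 and Ex 13.5 can be checked with a few lines of Python. This is only a sketch that plugs in the figures quoted on the slides (10.76ms average latency, 6.46ms average seek, 8.33ms per rotation, 16 rotations); the function and constant names are illustrative and do not come from the textbook.

```python
# Figures quoted on the slides for the Megatron 747 example.
AVG_BLOCK_LATENCY_MS = 10.76  # average latency of one random block access (Ex 13.2)
AVG_SEEK_MS = 6.46            # one average seek
ROTATION_MS = 8.33            # time for one full rotation


def random_read_ms(num_blocks: int) -> float:
    """Total time when every block pays the full average latency."""
    return num_blocks * AVG_BLOCK_LATENCY_MS


def cylinder_read_ms(rotations: float, num_disks: int = 1) -> float:
    """One average seek, then the rotations, split evenly across striped disks."""
    return AVG_SEEK_MS + ROTATION_MS * (rotations / num_disks)


if __name__ == "__main__":
    print(f"random placement: {random_read_ms(1024) / 1000:.1f} s")
    print(f"one cylinder:     {cylinder_read_ms(16):.1f} ms")
    print(f"striped, n = 4:   {cylinder_read_ms(16, num_disks=4):.1f} ms")
```

The striped case assumes the four disks work fully in parallel, which is the same idealization the slide makes.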

MIRRORING DISKS

Mirroring Disks – having 2 or more disks hold identical copies of data.

Benefit 1: If n disks are mirrors of each other, the system can survive a crash of n-1 disks.

Benefit 2: If we have n disks, read performance can increase by up to a factor of n.

Performance increases further by having the controller select, for each read, the disk whose head is closest to the desired data block.

25

DISK SCHEDULING AND THE

ELEVATOR PROBLEM

Disk controller will run this algorithm to select which

of several requests to process first.

Pseudo code:

requests[]    // array of all non-processed data requests

upon receiving new data request:
    requests[].add(new request)

while (requests[] is not empty):
    move head to next location
    if (head location is at data in requests[]):
        retrieve data
        remove data from requests[]
    if (head reaches end):
        reverse head direction

26

DISK SCHEDULING AND THE

ELEVATOR PROBLEM (CON’T)

Events:

Time    Event
0       Head starting point
0       Request data at 8000
0       Request data at 24000
0       Request data at 56000
4.3     Get data at 8000
10      Request data at 16000
13.6    Get data at 24000
20      Request data at 64000
26.9    Get data at 56000
30      Request data at 40000
34.2    Get data at 64000
45.5    Get data at 40000
56.8    Get data at 16000

[Figure: head position over time, on a cylinder axis running from 8000 to 64000]

DISK SCHEDULING AND THE

ELEVATOR PROBLEM (CON’T)

Elevator Algorithm        FIFO Algorithm
data       time           data       time
8000..     4.3            8000..     4.3
24000..    13.6           24000..    13.6
56000..    26.9           56000..    26.9
64000..    34.2           16000..    42.2
40000..    45.5           64000..    59.5
16000..    56.8           40000..    70.8
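The two schedules above can be reproduced with a short simulation. The sketch below rests on assumptions that are not stated on the slides but are consistent with every time shown: the head starts at cylinder 8000, a seek costs 1ms plus 1ms per 4000 cylinders travelled (nothing if the head does not move), and rotational latency plus transfer add a flat 4.3ms per access. The request arrival times (0, 10, 20, 30) are read off the event list. All names are illustrative.

```python
# Assumed cost model: seek = 1 ms + 1 ms per 4000 cylinders (0 if no movement),
# plus a flat 4.3 ms for rotational latency and transfer.
def access_ms(head: int, target: int) -> float:
    distance = abs(target - head)
    seek = 0.0 if distance == 0 else 1.0 + distance / 4000.0
    return seek + 4.3


# (arrival time, cylinder) pairs, in arrival order.
REQUESTS = [(0.0, 8000), (0.0, 24000), (0.0, 56000),
            (10.0, 16000), (20.0, 64000), (30.0, 40000)]


def fifo(requests, head=8000):
    """Serve requests strictly in arrival order."""
    now, done = 0.0, []
    for arrival, cylinder in requests:
        now = max(now, arrival) + access_ms(head, cylinder)
        head = cylinder
        done.append((cylinder, round(now, 1)))
    return done


def elevator(requests, head=8000):
    """Keep sweeping in one direction; reverse only when nothing lies ahead."""
    pending, now, direction, done = list(requests), 0.0, +1, []
    while pending:
        arrived = [r for r in pending if r[0] <= now]
        if not arrived:                      # idle until the next request arrives
            now = min(r[0] for r in pending)
            continue
        ahead = [r for r in arrived if (r[1] - head) * direction >= 0]
        if not ahead:                        # nothing left in this direction
            direction = -direction
            continue
        nxt = min(ahead, key=lambda r: abs(r[1] - head))
        now += access_ms(head, nxt[1])
        head = nxt[1]
        pending.remove(nxt)
        done.append((head, round(now, 1)))
    return done


if __name__ == "__main__":
    print("elevator:", elevator(REQUESTS))
    print("fifo:    ", fifo(REQUESTS))
```

The simulation picks the next request only when the previous access finishes, which is a simplification of a real controller but is enough to match the completion times in the table.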

28

PREFETCHING AND LARGE-SCALE

BUFFERING

If at the application level, we can predict the order

blocks will be requested, we can load them into main

memory before they are needed.

29

13.4 RECOVERY FROM DISK CRASHES

Ways to recover data

The most serious mode of failure for disks is a “head crash”, in which data is permanently destroyed.

To reduce the risk of data loss from disk crashes, there are a number of schemes known as RAID (Redundant Arrays of Independent Disks) schemes.

Each scheme starts with one or more disks that hold the data (the data disks) and adds one or more disks that hold information completely determined by the contents of the data disks (the redundant disks).

30

MIRRORING /REDUNDANCY

TECHNIQUE

The mirroring scheme is referred to as RAID level 1 protection against data loss.

In this scheme we mirror each disk.

One of the disks is called the data disk and the other the redundant disk.

In this case the only way data can be lost is if there

is a second disk crash while the first crash is being

repaired.

31

PARITY BLOCKS

RAID level 4 scheme uses only one redundant disk

no matter how many data disks there are.

In the redundant disk, the ith block consists of the

parity checks for the ith blocks of all the data

disks.

That is, the jth bits of the ith blocks of all the disks, data and redundant together, must contain an even number of 1’s; the redundant disk's bit is chosen to make this condition true.

32

PARITY BLOCKS – READING DISK

Reading a block from a data disk is the same as reading a block from any disk.

Alternatively, we could read the corresponding block from each of the other disks and compute the block of the disk we want to read by taking the modulo-2 sum.

disk 2: 10101010

disk 3: 00111000

disk 4: 01100010

If we take the modulo-2 sum of the bits in each column, we

get

disk 1: 11110000

33

PARITY BLOCK - WRITING

When we write a new block of a data disk, we need to change the corresponding block of the redundant disk as well.

One approach is to read the corresponding blocks of the other data disks, compute the modulo-2 sum together with the new block, and write the result to the redundant disk.

With n data disks, this approach requires n-1 reads of the other data blocks, one write of the data block, and one write of the redundant disk block.

Total = n+1 disk I/Os

34

CONTINUED………………

A better approach requires only four disk I/Os:

1. Read the old value of the data block being

changed.

2. Read the corresponding block of the redundant

disk.

3. Write the new data block.

4. Recalculate and write the block of the

redundant disk.
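The four-I/O update works because the new parity block equals the old parity block, modulo-2 summed with the difference between the old and new data blocks. The sketch below illustrates this with the one-byte blocks used in the neighbouring slides (disk 2 holding 10101010, redundant disk 4 holding 01100010); the new value 11001100 and the function names are made up for the illustration.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Bitwise modulo-2 sum of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def updated_parity(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Step 4 of the update: flip exactly the parity bits whose data bits changed."""
    changed_bits = xor_blocks(old_data, new_data)
    return xor_blocks(old_parity, changed_bits)


if __name__ == "__main__":
    old_data = bytes([0b10101010])    # step 1: read old block of disk 2
    old_parity = bytes([0b01100010])  # step 2: read block of the redundant disk
    new_data = bytes([0b11001100])    # step 3: hypothetical new block for disk 2
    new_parity = updated_parity(old_data, old_parity, new_data)
    print(format(new_parity[0], "08b"))
```

None of the other data disks has to be read, which is why the cost stays at four I/Os regardless of n.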

35

PARITY BLOCKS – FAILURE

RECOVERY

If any one of the data disks crashes, we just have to compute the modulo-2 sum of the remaining disks to recover it.

Suppose that disk 2 fails. We need to recompute each block of the replacement disk. We are given the corresponding blocks of the first and third data disks and the redundant disk, so the situation looks like:

disk 1: 11110000

disk 2: ????????

disk 3: 00111000

disk 4: 01100010

If we take the modulo-2 sum of each column, we deduce

that the missing block of disk 2 is : 10101010
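The recovery above is a column-wise XOR of the surviving blocks. A minimal sketch, using the same one-byte blocks as the slide (the function name is illustrative):

```python
from functools import reduce


def modulo2_sum(blocks):
    """Column-wise modulo-2 sum (XOR) of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


if __name__ == "__main__":
    disk1 = bytes([0b11110000])
    disk3 = bytes([0b00111000])
    disk4 = bytes([0b01100010])  # redundant disk
    recovered = modulo2_sum([disk1, disk3, disk4])
    print("disk 2:", format(recovered[0], "08b"))
```

The same function computes the redundant block in the first place: the modulo-2 sum of disks 1, 2, and 3 gives disk 4.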

36

AN IMPROVEMENT: RAID 5

RAID 4 is effective in preserving data unless there are

two simultaneous disk crashes.

Whatever scheme we use for updating the disks, we need

to read and write the redundant disk's block. If there are

n data disks, then the number of disk writes to the

redundant disk will be n times the average number of

writes to any one data disk.

However we do not have to treat one disk as the

redundant disk and the others as data disks. Rather, we

could treat each disk as the redundant disk for some of

the blocks. This improvement is often called RAID level 5.

37

CONTINUE : AN IMPROVEMENT: RAID 5

For instance, if there are n + 1 disks numbered 0

through n, we could treat the ith cylinder of disk j as

redundant if j is the remainder when i is divided by n+1.

For example, n = 3 so there are 4 disks. The first disk,

numbered 0, is redundant for its cylinders numbered 4,

8, 12, and so on, because these are the numbers that

leave remainder 0 when divided by 4.

The disk numbered 1 is redundant for cylinders numbered 1, 5, 9, and so on; disk 2 is redundant for cylinders 2, 6, 10, ...; and disk 3 is redundant for cylinders 3, 7, 11, ....
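The assignment rule is a single remainder operation. A small sketch for the 4-disk example (the function name is illustrative):

```python
def redundant_disk(cylinder: int, num_disks: int) -> int:
    """Disk (numbered 0 .. num_disks-1) that holds parity for this cylinder."""
    return cylinder % num_disks


if __name__ == "__main__":
    for disk in range(4):
        cylinders = [c for c in range(1, 13) if redundant_disk(c, 4) == disk]
        print(f"disk {disk} is redundant for cylinders {cylinders}")
```

Because the redundant role rotates across all disks, parity writes no longer concentrate on one disk as they do in RAID 4.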

38

COPING WITH MULTIPLE DISK CRASHES

The theory of error-correcting codes, in particular the Hamming code, leads to RAID level 6.

With this strategy, two simultaneous crashes are correctable.

In the example with data disks 1-4 and redundant disks 5-7:

The bits of disk 5 are the modulo-2 sum of the corresponding bits of disks 1, 2, and 3.

The bits of disk 6 are the modulo-2 sum of the corresponding bits of disks 1, 2, and 4.

The bits of disk 7 are the modulo-2 sum of the corresponding bits of disks 1, 3, and 4.

39

COPING WITH MULTIPLE DISK CRASHES

– READING/WRITING

We may read data from any data disk normally.

To write a block of some data disk, we compute the modulo-2 sum of the new and old versions of that block. These bits are then added, in a modulo-2 sum, to the corresponding blocks of all those redundant disks that have 1 in a row in which the written disk also has 1.
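The write rule can be sketched as follows. The `COVERS` table encodes which data disks feed each redundant disk, exactly as listed on the previous slide; the one-byte block values (in particular the value for disk 4) and the function names are illustrative assumptions, not taken from the slides.

```python
# Data disks (1-4) that contribute to each redundant disk (5-7).
COVERS = {5: (1, 2, 3), 6: (1, 2, 4), 7: (1, 3, 4)}


def redundant_blocks(disks):
    """Compute disks 5-7 as modulo-2 sums of the data disks they cover."""
    return {r: disks[a] ^ disks[b] ^ disks[c] for r, (a, b, c) in COVERS.items()}


def write_block(disks, disk, new_value):
    """Write a data disk and patch only the redundant disks that cover it."""
    delta = disks[disk] ^ new_value       # modulo-2 sum of old and new versions
    disks[disk] = new_value
    for r, members in COVERS.items():
        if disk in members:
            disks[r] ^= delta


if __name__ == "__main__":
    disks = {1: 0b11110000, 2: 0b10101010, 3: 0b00111000, 4: 0b01000001}
    disks.update(redundant_blocks(disks))
    write_block(disks, 2, 0b00001111)     # touches disks 5 and 6, not 7
    for number in sorted(disks):
        print(number, format(disks[number], "08b"))
```

Recovering from two simultaneous crashes is not shown here; this sketch covers only the read/write path described on this slide.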

40

13.5 ARRANGING DATA ON DISK

Data elements are represented as records, which are stored in consecutive bytes in the same disk block.

Basic layout techniques of storing data :

Fixed-Length Records

Allocation criteria - data should start at word boundary.

Fixed Length record header

A pointer to record schema.

The length of the record.

Timestamps to indicate last modified or last read.

41

EXAMPLE

CREATE TABLE employee(

name CHAR(30) PRIMARY KEY,

address VARCHAR(255),

gender CHAR(1),

birthdate DATE

);

Data should start at word boundary and contain header and

four fields name, address, gender and birthdate.
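To make the word-boundary rule concrete, the sketch below lays out the employee record. Several numbers here are assumptions, not taken from the slide: fields start at multiples of 4 bytes, the header is 12 bytes (schema pointer, record length, and timestamp at 4 bytes each), the VARCHAR(255) address is reserved at its maximum size of 256 bytes, and the DATE is stored as a 10-character string.

```python
WORD = 4  # assumed alignment: every field starts at a multiple of 4 bytes


def align(offset: int, word: int = WORD) -> int:
    """Round an offset up to the next word boundary."""
    return (offset + word - 1) // word * word


def layout(fields, header_bytes: int = 12):
    """Return ({field name: starting offset}, total record length)."""
    offsets, position = {}, align(header_bytes)
    for name, size in fields:
        offsets[name] = position
        position = align(position + size)
    return offsets, position


# Assumed field sizes in bytes for the employee table.
EMPLOYEE_FIELDS = [("name", 30), ("address", 256), ("gender", 1), ("birthdate", 10)]

if __name__ == "__main__":
    offsets, total = layout(EMPLOYEE_FIELDS)
    print(offsets, total)
```

Under these assumptions the padding after name, gender, and birthdate is what keeps each following field on a word boundary.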

42

PACKING FIXED-LENGTH RECORDS INTO BLOCKS

Records are stored in blocks on the disk and are moved into main memory when we need to access or update them.

A block header is written first, followed by a series of records.

43

BLOCK HEADER CONTAINS THE FOLLOWING

INFORMATION

Links to one or more blocks that are part of a

network of blocks.

Information about the role played by this block

in such a network.

Information about the relation, the tuples in

this block belong to.

A "directory" giving the offset of each record in

the block.

Time stamp(s) to indicate time of the block's

last modification and/or access.

44

EXAMPLE

Along with the header, we pack as many records as will fit in one block, as shown in the figure; the remaining space is unused.

45

13.7 VARIABLE LENGTH DATA AND

RECORD

A simple but effective scheme is to put all fixed-length fields ahead of the variable-length fields. We then place in the record header:

1. The length of the record.

2. Pointers to (i.e., offsets of) the beginnings of

all the variable-length fields. However, if the

variable-length fields always appear in the

same order then the first of them needs no

pointer; we know it immediately follows the

fixed-length fields.

46

RECORDS WITH REPEATING FIELDS

A similar situation occurs if a record contains a variable number of occurrences of a field F, but the field itself is of fixed length. It is sufficient to group all occurrences of field F together and put in the record header a pointer to the first.

We can locate all the occurrences of the field F as follows. Let the number of bytes devoted to one instance of field F be L. We then add to the offset for the field F all integer multiples of L, starting at 0, then L, 2L, 3L, and so on. Eventually, we reach the offset of the field following F, whereupon we stop.
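The offset rule just described amounts to stepping from the first occurrence in strides of L. A tiny sketch with made-up offsets (the function name and numbers are illustrative):

```python
def occurrence_offsets(first_offset: int, next_field_offset: int, length: int):
    """Offsets of every occurrence of F: first, first + L, first + 2L, ..."""
    return list(range(first_offset, next_field_offset, length))


if __name__ == "__main__":
    # Hypothetical record: F starts at byte 40, each occurrence is 8 bytes,
    # and the field following F starts at byte 64.
    print(occurrence_offsets(40, 64, 8))
```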

47

An alternative representation is to keep the record of fixed length, and put the variable-length portion (be it fields of variable length or fields that repeat an indefinite number of times) on a separate block. In the record itself we keep:

– Pointers to the place where each repeating field

begins, and

– Either how many repetitions there are, or where the

repetitions end.

48

STORING VARIABLE-LENGTH FIELDS

SEPARATELY FROM THE RECORD

49

VARIABLE-FORMAT RECORDS

The simplest representation of variable-format records is a sequence of tagged fields, each of which consists of:

1. Information about the role of this field,

such as:

(a) The attribute or field name,

(b) The type of the field, if it is not apparent

from the field name and some readily available

schema information, and

(c) The length of the field, if it is not apparent

from the type.

2. The value of the field.

50

There are at least two reasons why tagged fields would make

sense.

1. Information integration applications - Sometimes, a relation has been constructed from several earlier sources, and these sources have different kinds of information. For instance, our movie star information may have come from several sources, one of which records birthdates, some give addresses, others not, and so on. If there are not too many fields, we are probably best off leaving NULL those values we do not know.

2. Records with a very flexible schema - If many fields of a

record can repeat and/or not appear at all, then even if we

know the schema, tagged fields may be useful. For instance,

medical records may contain information about many tests,

but there are thousands of possible tests, and each patient

has results for relatively few of them.

51

A RECORD WITH TAGGED FIELDS

52

RECORDS THAT DO NOT FIT IN A BLOCK

These large values have a variable length, but even if the length is fixed for all values of the type, we need some special techniques to represent them. In this section we consider a technique called “spanned records” that can be used to manage records that are larger than blocks.

Spanned records are also useful in situations where records are smaller than blocks, but packing whole records into blocks wastes significant amounts of space.

For both these reasons, it is sometimes desirable to allow records to be split across two or more blocks. The portion of a record that appears in one block is called a record fragment.

If records can be spanned, then every record and

record fragment requires some extra header

information:

1. Each record or fragment header must contain a bit

telling whether or not it is a fragment.

2. If it is a fragment, then it needs bits telling whether

it is the first or last fragment for its record.

3. If there is a next and/or previous fragment for the

same record, then the fragment needs pointers to

these other fragments.

54

Storing spanned records across blocks

BLOBS

• Binary, Large OBjectS = BLOBS

• BLOBS can be images, movies, audio files and other very large

values that can be stored in files.

• Storing BLOBS

– Stored in several blocks.

– Preferable to store them consecutively on a cylinder or

multiple disks for efficient retrieval.

• Retrieving BLOBS

– A client retrieving a 2 hour movie may not want it all at the

same time.

– Retrieving a specific part of the large data requires an index

structure to make it efficient. (Example: An index by seconds

on a movie BLOB.)

55

COLUMN STORES

An alternative to storing tuples as records is to store each column as a record. Since an entire column of a relation may occupy far more than a single block, these records may span many blocks, much as long files do.

If we keep the values in each column in the same order, then we can reconstruct the relation from the column records.

56

13.6 REPRESENTING BLOCK AND

RECORD ADDRESSES

INTRODUCTION

Address of a block and Record

In Main Memory

Address of the block is the virtual memory address

of the first byte

Address of the record within the block is the

virtual memory address of the first byte of the

record

In secondary memory: a sequence of bytes describes the location of the block in the overall system, e.g. the device ID for the disk, the cylinder number, etc.

57

ADDRESSES IN CLIENT-SERVER SYSTEMS

The addresses in address space are represented

in two ways

Physical Addresses: byte strings that determine the

place within the secondary storage system where the

record can be found.

Logical Addresses: arbitrary string of bytes of some

fixed length

Physical Address bits are used to indicate:

Host to which the storage is attached

Identifier for the disk

Number of the cylinder

Number of the track

Offset of the beginning of the record

58

ADDRESSES IN CLIENT-SERVER SYSTEMS

(CONTD..)

Map Table relates logical addresses to physical

addresses.

[Figure: map table with a logical-address column and a physical-address column]

59

LOGICAL AND STRUCTURED ADDRESSES

Purpose of logical address?

Gives more flexibility, when we

Move the record around within the block

Move the record to another block

Gives us an option of deciding what to do when a record is deleted

[Figure: a block containing a header, an offset table, unused space, and records 4, 3, 2, and 1]

60

POINTER SWIZZLING

Having pointers is common in object-relational database systems

Important to learn about the management of

pointers

Every data item (block, record, etc.) has two

addresses:

database address: address on the disk

memory address, if the item is in virtual

memory

61

POINTER SWIZZLING (CONTD…)

Translation Table: Maps database address to

memory address

[Figure: translation table with a database-address (Dbaddr) column and a memory-address (Mem-addr) column]

All addressable items in the database have

entries in the map table, while only those items

currently in memory are mentioned in the

translation table

62

POINTER SWIZZLING (CONTD…)

Pointer consists of the following two fields

Bit indicating the type of address

Database or memory address

Example 13.17

[Figure: Block 1 on disk and its copy in memory, showing one swizzled pointer within Block 1 and one unswizzled pointer to Block 2]

63

EXAMPLE 13.7

Block 1 has a record with pointers to a second

record on the same block and to a record on

another block

If Block 1 is copied to the memory

The first pointer which points within Block 1 can be

swizzled so it points directly to the memory address

of the target record

Since Block 2 is not in memory, we cannot swizzle

the second pointer

64

POINTER SWIZZLING (CONTD…)

Three types of swizzling

Automatic Swizzling

As soon as a block is brought into memory, swizzle all relevant pointers.

Swizzling on Demand

Only swizzle a pointer if and when it is actually followed.

No Swizzling

Pointers are not swizzled; they are accessed using the database address.
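Swizzling on demand can be sketched with a pointer that carries the type bit and a translation table held as a dictionary. Everything named here (`Pointer`, `follow`, the string database addresses) is a hypothetical illustration, not an interface from the textbook or any DBMS.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class Pointer:
    swizzled: bool  # the bit indicating the type of address
    address: Any    # database address, or an in-memory reference once swizzled


def follow(pointer: Pointer, translation_table: Dict[str, Any]) -> Any:
    """Swizzling on demand: translate the pointer the first time it is followed."""
    if pointer.swizzled:
        return pointer.address
    if pointer.address not in translation_table:
        # The target block is not in memory; a real system would load it first.
        raise LookupError(f"{pointer.address} is not in memory")
    pointer.address = translation_table[pointer.address]
    pointer.swizzled = True
    return pointer.address


if __name__ == "__main__":
    record = {"name": "target record"}
    table = {"block1:rec2": record}           # only Block 1 is in memory
    inside = Pointer(False, "block1:rec2")    # points within Block 1
    print(follow(inside, table), inside.swizzled)
```

Automatic swizzling would instead call the translation step on every pointer as soon as the block is loaded, and no swizzling would perform the table lookup on every access without updating the pointer.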

65

PROGRAMMER CONTROL OF SWIZZLING

Unswizzling

When a block is moved from memory back to disk, all

pointers must go back to database (disk) addresses

Use translation table again

Important to have an efficient data structure for the

translation table

66

PINNED RECORDS AND BLOCKS

A block in memory is said to be pinned if it

cannot be written back to disk safely.

If block B1 has swizzled pointer to an item in

block B2, then B2 is pinned

To unpin a block, we must unswizzle any pointers to it

Keep in the translation table the places in memory holding swizzled pointers to that item

Unswizzle those pointers (use the translation table to replace the memory addresses with database (disk) addresses)

67

13.8 RECORD MODIFICATION

When a data manipulation operation is performed on a record, it is called record modification

Example: Record Structure for a person Table

CREATE TABLE PERSON ( NAME CHAR(30), ADDRESS CHAR(256)

, GENDER CHAR(1), BIRTHDATE CHAR(10));

68

TYPES OF RECORDS

Fixed-Length Records

CREATE TABLE SJSUSTUDENT(STUDENT_ID INT(9) NOT NULL, PHONE_NO INT(10) NOT NULL);

Variable-Length Records

CREATE TABLE SJSUSTUDENT(STUDENT_ID INT(9) NOT NULL, NAME CHAR(100), ADDRESS CHAR(100), PHONE_NO INT(10) NOT NULL);

Record Modification

Insert, update & delete

69

STRUCTURE OF A BLOCK & RECORDS

Various Records are clubbed together and stored

together in memory in blocks

Structure of a Block

70

BLOCKS & RECORDS

If records need not be in any particular order, then just find a block with enough empty space

We keep track of all records/tuples in a relation/table using index structures and file-organization concepts

71

INSERTING NEW RECORDS

If records are not required to be in a particular order, just find a block with empty space and place the record in it, e.g. heap files.

What if the records are to be kept in a particular order (e.g. sorted by primary key)?

Locate the appropriate block and check whether space is available in it; if so, place the record in the block.

72

INSERTING NEW RECORDS

We may have to slide the Records in the Block to place

the Record at an appropriate place in the Block and

suitably edit the block header.

73

WHAT IF THE BLOCK IS FULL ?

We need to Keep the record in a particular block but

the block is full. How do we deal with it ?

We find room outside the Block

There are 2 approaches to finding the room for the

record.

I. Find Space on Nearby Block

II. Create an Overflow Block

74

APPROACHES TO FINDING ROOM FOR RECORD

Find a space on nearby block

Block B1 has no space

If space is available on block B2, move records of B1 to B2

If there are external pointers to records of B1 that moved to B2, leave a forwarding address in the offset table of B1

75

APPROACHES TO FINDING ROOM FOR

RECORD

Create Overflow block

Each block B has in its header a pointer to an overflow block where additional records of B can be placed

76

DELETION

Try to reclaim the space made available in a block after a record is deleted

If an offset table is used for storing information about records for the block, then rearrange/slide the remaining records.

If sliding of records is not possible, then maintain a SPACE-AVAILABLE LIST to keep track of the space available in the block.

77

TOMBSTONE

What about pointers to deleted records?

A tombstone is placed in place of each deleted record

A tombstone is a bit placed at first byte of deleted record

to indicate the record was deleted ( 0 – Not Deleted 1 –

Deleted)

A tombstone is permanent

78

UPDATING RECORDS

For fixed-length records, there is no effect on the storage system

For variable-length records:

• If length increases, like insertion, “slide the records”

• If length decreases, like deletion, we update the space-available list, recover the space, or eliminate the overflow blocks.

79

CHAPTER 15

QUERY EXECUTION

15.1 Introduction to Physical-Query-Plan Operators

15.2 One-Pass Algorithms for Database Operations

15.3 Nested-Loop Joins

15.4 Two-Pass Algorithms Based on Sorting

15.5 Two-Pass Algorithms Based on Hashing

15.6 Index-Based Algorithms

15.7 Buffer Management

15.8 Algorithms Using More Than Two Passes

15.9 Parallel Algorithms for Relational Operations

80

QUERY COMPILATION

Query compilation is divided into 3 major steps:

Parsing, in which a parse tree representing the query and its structure is constructed.

Query rewrite, in which the parse tree is converted to an initial query plan, which is an algebraic representation of the query.

Physical plan generation, in which the abstract query plan is converted into a physical query plan.

81

QUERY COMPILATION

82

15.1 INTRODUCTION TO PHYSICAL-QUERY-PLAN OPERATORS

Physical query plans are built from operators, each of which implements one step of the plan.

Physical operators can be implementations of the operators of relational algebra.

However, they can also be operators outside relational algebra, like the ‘scan’ operator used for scanning tables.

83

FOR SCANNING A TABLE

We have two different approaches

Table scan

Relation R is stored in secondary memory with its

tuples arranged in blocks. It is possible to get the

blocks one by one

Index scan

if there is an index on any attribute of relation R,

then we can use this index to get all the tuples of R.

84

SORTING IS THE MAJOR TOPIC

WHILE SCANNING THE TABLE

Reasons why we need sorting while scanning tables

Various algorithms for relational-algebra operations require one or both of their arguments to be sorted relations.

The query could include an ORDER BY clause, requiring that a relation be sorted.

A physical-query-plan operator sort-scan takes a relation R and a specification of the attributes on which the sort is to be made, and produces R in that sorted order.

If we are to produce a relation R sorted by attribute a, and if there is a B-tree index on a, then an index scan is used.

If relation R is small enough to fit in main memory, then we can retrieve its tuples using a table scan and sort them in main memory.

85

PARAMETERS FOR MEASURING COSTS

Parameters that mainly affect the performance of a query are:

1. The size of the blocks on disk and the size of main memory.

2. Buffer space available in main memory at the time the query executes.

3. Size of the input and the size of the output generated.

These are the numbers of disk I/O’s needed for each of the scan operators:

1. If a relation R is clustered, then the number of disk I/O’s is approximately B, where B is the number of blocks on which R is stored.

2. If R is clustered but requires a two-phase multiway merge sort, then the total number of disk I/O’s required is 3B.

3. If R is not clustered, then the number of required disk I/O’s is generally much higher.

86

IMPLEMENTATION OF PHYSICAL

OPERATOR

The three major functions are open(), getnext(), and close():

Open() starts the process of getting tuples; it initializes the data structures needed to perform the operation.

Getnext() returns the next tuple in the result.

Close() ends the iteration after all tuples have been obtained.
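The three functions can be sketched as a table-scan iterator over a relation held as a list of blocks. This is an illustrative sketch only: the class name, the in-memory list standing in for disk blocks, and the use of `None` to signal that no tuples remain are all assumptions.

```python
class TableScan:
    """Iterator-style table scan over a relation stored as a list of blocks."""

    def __init__(self, blocks):
        self._blocks = blocks      # each block is a list of tuples
        self._block_index = 0
        self._tuple_index = 0

    def open(self):
        """Initialize the scan position at the first tuple of the first block."""
        self._block_index = 0
        self._tuple_index = 0

    def get_next(self):
        """Return the next tuple, or None when the relation is exhausted."""
        while self._block_index < len(self._blocks):
            block = self._blocks[self._block_index]
            if self._tuple_index < len(block):
                result = block[self._tuple_index]
                self._tuple_index += 1
                return result
            self._block_index += 1   # move on to the next block
            self._tuple_index = 0
        return None

    def close(self):
        """Nothing to release here; a real scan would free its buffers."""
        self._block_index = len(self._blocks)


if __name__ == "__main__":
    scan = TableScan([[(1, "a"), (2, "b")], [], [(3, "c")]])
    scan.open()
    while (row := scan.get_next()) is not None:
        print(row)
    scan.close()
```

Because every operator exposes the same three calls, operators can be stacked so that one operator's `get_next` pulls tuples from the operator below it.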

87

15.2 ONE-PASS ALGORITHMS FOR

DATABASE OPERATIONS

To transform a logical query plan into a physical query plan, an algorithm is required.

Main classes of algorithms:

Sorting-based methods

Hash-based methods

Index-based methods

Division based on degree of difficulty and cost:

1-pass algorithms

2-pass algorithms

3 or more pass algorithms

88

ONE-PASS ALGORITHM METHODS

One-Pass Algorithms for Tuple-at-a-Time

Operations (Unary operation) such as Selection &

projection.

read the blocks of R one at a time into an input

buffer

perform the operation on each tuple

move the selected tuples or the projected

tuples to the output buffer

The disk I/O requirement for this process

depends only on how the argument relation R is

provided.

If R is initially on disk, then the cost is

whatever it takes to perform a table-scan or

index-scan of R.

89

BLOCK DIAGRAMS OF MEMORY FOR ONE-PASS ALGORITHMS

Figure(1): memory use for a tuple-at-a-time operation

Figure(2): memory use for duplicate elimination

Ref: Database Systems: The Complete Book, by Hector Garcia-Molina, Jeffrey D. Ullman, Jennifer Widom

ONE-PASS ALGORITHMS FOR UNARY,
FULL-RELATION OPERATIONS

Duplicate Elimination

To eliminate duplicates, we can read each block of R one at a time, but for each tuple we need to make a decision as to whether:

1. It is the first time we have seen this tuple, in which case we copy it to the output, or
2. We have seen the tuple before, in which case we must not output this tuple.

One memory buffer holds one block of R's tuples, and the remaining M - 1 buffers can be used to hold a single copy of every tuple seen so far.

91

DUPLICATION ELIMINATION

CONTINUED……….

When a new tuple from R is considered, we compare it with all tuples seen so far.

If it is not equal to any of them, we copy it to the output and add it to the in-memory list of tuples we have seen.

If there are n tuples in main memory and we search them as a plain list, each new tuple takes processor time proportional to n, so the complete operation takes processor time proportional to n^2.

We therefore need a main-memory structure that supports each of these operations efficiently:
Add a new tuple, and
Tell whether a given tuple is already there

Note: Basic data structures used for this kind of searching are
Hash table
Balanced binary search tree
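A minimal sketch of one-pass duplicate elimination, assuming a Python `set` (a hash table) as the main-memory structure and a list of lists standing in for the blocks of R. The function name `one_pass_distinct` is hypothetical.

```python
def one_pass_distinct(blocks):
    """Return the tuples of R without duplicates, in first-seen order."""
    seen = set()   # plays the role of the M - 1 buffers holding one copy of each tuple
    out = []
    for block in blocks:       # one block of R at a time in the input buffer
        for t in block:
            if t not in seen:  # first time we see t: output it and remember it
                seen.add(t)
                out.append(t)
    return out
```

With a hash table, both "add a new tuple" and "is this tuple already there" take expected constant time, so the whole pass is roughly linear in the number of tuples rather than quadratic.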

92

ONE-PASS ALGORITHMS FOR

UNARY, FULL-RELATION

OPERATIONS

Grouping

The grouping operation gives us zero or more grouping

attributes and presumably one or more aggregated attributes.

If we create in main memory one entry for each group then we

can scan the tuples of R, one block at a time. The entry for a

group consists of values for the grouping attributes and an

accumulated value or values for each aggregation.

Typical aggregations: MIN(), MAX(), AVG(), COUNT(), SUM()
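The per-group entry can be sketched as a dictionary keyed by the grouping value. This is an illustrative sketch only: the function name `one_pass_group` and the choice of a single grouping attribute and a single aggregated attribute are assumptions.

```python
def one_pass_group(blocks, key_index, value_index):
    """Group tuples by one attribute and accumulate COUNT, SUM, MIN, MAX, AVG."""
    groups = {}
    for block in blocks:                      # scan R one block at a time
        for t in block:
            k, v = t[key_index], t[value_index]
            g = groups.get(k)
            if g is None:                     # first tuple of a new group
                groups[k] = {"count": 1, "sum": v, "min": v, "max": v}
            else:                             # update the accumulated values
                g["count"] += 1
                g["sum"] += v
                g["min"] = min(g["min"], v)
                g["max"] = max(g["max"], v)
    # AVG cannot be accumulated directly; derive it from SUM and COUNT at the end.
    return {k: {**g, "avg": g["sum"] / g["count"]} for k, g in groups.items()}
```

Note that no output can be produced until all of R has been read, since any later tuple may still change a group's aggregates.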

Binary operations include

Union

Intersection

Difference

Product

Join

93

BINARY OPERATION

We read S into M - 1 buffers of main memory and build a search structure where the search key is the entire tuple. We then read each block of R into the Mth buffer, one at a time.

Set Union: All tuples of S are copied to the output. For each tuple t of R, see if t is in S, and if not, we copy t to the output. If t is also in S, we skip t.

Set Intersection: Read each block of R, and for each tuple t of R, see if t is also in S. If so, copy t to the output, and if not, ignore t.

Set Difference: For R - S, read each block of R, and for each tuple t of R, copy t to the output only if t is not in S. For S - R, delete from the in-memory copy of S every tuple t of R that is also in S, then copy the remaining tuples of S, those that are not in R, to the output.

94

CONTINUED………………

Bag Difference

S -B R, read tuples of S into main memory & count no. of

occurrences of each distinct tuple

Then read R; check each tuple t to see whether t occurs in S,

and if so, decrement its associated count. At the end, copy to

output each tuple in main memory whose count is positive, &

no. of times we copy it equals that count.

To compute R -B S, read tuples of S into main memory & count

no. of occurrences of distinct tuples.

Think of a tuple t with a count of c as c reasons not to copy t to

the output as we read tuples of R.

Read a tuple t of R; check if t occurs in S. If not, then copy t to

the output. If t does occur in S, then we look at current count c

associated with t. If c = 0, then copy t to output. If c > 0, do not

copy t to output, but decrement c by 1.
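Both directions of bag difference can be sketched with `collections.Counter` holding the per-tuple counts of S. The function names are hypothetical, and plain lists stand in for the relations.

```python
from collections import Counter


def bag_diff_s_minus_r(S, R):
    """S -B R: count S in memory, decrement for each matching tuple of R."""
    counts = Counter(S)
    for t in R:
        if counts[t] > 0:
            counts[t] -= 1
    out = []
    for t, c in counts.items():   # emit each tuple as many times as its remaining count
        out.extend([t] * c)
    return out


def bag_diff_r_minus_s(R, S):
    """R -B S: a count of c for t means c reasons not to copy t to the output."""
    counts = Counter(S)
    out = []
    for t in R:
        if counts[t] > 0:         # one reason used up: suppress this copy of t
            counts[t] -= 1
        else:                     # t not in S, or its count has reached 0
            out.append(t)
    return out
```

In both cases only S is held in memory, so the one-pass requirement is on the size of S, not of R.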

CONTINUED….

Product

Read S into M - 1 buffers of main memory. Then read each block of R, and

for each tuple t of R concatenate t with each tuple of S in main memory.

Output each concatenated tuple as it is formed.

Natural Join

To compute the natural join of R(X,Y) and S(Y,Z), do the following:

1. Read all tuples of S and form them into a main-memory search structure with the attributes of Y as the search key. A hash table or a balanced tree are good examples of such structures. Use M - 1 blocks of memory for this purpose.

2. Read each block of R into the one remaining main-memory buffer. For each tuple t of R, find the tuples of S that agree with t on all attributes of Y, using the search structure. For each matching tuple of S, form a tuple by joining it with t, and move the resulting tuple to the output.
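The two steps can be sketched as follows, assuming R has schema (x, y) and S has schema (y, z), with a dictionary of lists as the search structure. The function name `one_pass_natural_join` is hypothetical.

```python
from collections import defaultdict


def one_pass_natural_join(R_blocks, S):
    """Join R(x, y) with S(y, z) on y, holding all of S in memory."""
    # Step 1: build the search structure on the join attribute y of S.
    index = defaultdict(list)
    for (y, z) in S:
        index[y].append(z)
    # Step 2: stream R one block at a time and probe the structure.
    out = []
    for block in R_blocks:
        for (x, y) in block:
            for z in index.get(y, []):
                out.append((x, y, z))
    return out
```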

96

15.3 NESTED LOOPS JOINS

Tuple-Based Nested-Loop Join

The simplest variation of nested-loop join has loops

that range over individual tuples of the relations

involved. In this algorithm, which we call tuple-based nested-loop join, we compute the join as

follows

For each tuple s in S DO

For each tuple r in R Do

if r and s join to make a tuple t THEN

output t;

97

If we are careless about how we buffer the blocks of relations R and S, then this algorithm could require as many as T(R)T(S) disk I/O's. However, there are many situations where this algorithm can be modified to have much lower cost.

One case is when we can use an index on the join attribute or attributes of R to find the tuples of R that match a given tuple of S, without having to read the entire relation R.

The second improvement looks much more carefully at the way tuples of R and S are divided among blocks, and uses as much of the memory as it can to reduce the number of disk I/O's as we go through the inner loop. We shall consider this block-based version of nested-loop join.

AN ITERATOR FOR TUPLE-BASED NESTED-LOOP JOIN

Open() {
    R.Open();
    S.Open();
    s := S.GetNext();
}

GetNext() {
    REPEAT {
        r := R.GetNext();
        IF (r = NotFound) { /* R is exhausted for the current s */
            R.Close();
            s := S.GetNext();
            IF (s = NotFound) RETURN NotFound;
            /* both R and S are exhausted */
            R.Open();   /* restart the scan of R for the new s */
            r := R.GetNext();
        }
    }
    UNTIL (r and s join);
    RETURN the join of r and s;
}

Close() {
    R.Close();
    S.Close();
}

99

A BLOCK-BASED NESTED-LOOP JOIN

ALGORITHM

The block-based algorithm improves on the tuple-based one by:

1. Organizing access to both argument relations by blocks.
2. Using as much main memory as we can to store tuples belonging to the relation S, the relation of the outer loop.

Algorithm

FOR each chunk of M-1 blocks of S DO BEGIN

read these blocks into main-memory buffers;

organize their tuples into a search structure whose

search key is the common attributes of R and S;

FOR each block b of R DO BEGIN

read b into main memory;

FOR each tuple t of b DO BEGIN

find the tuples of S in main memory that

join with t ;

output the join of t with each of these tuples;

END ;

END ;

END ;

100

ANALYSIS OF NESTED-LOOP JOIN

Assuming S is the smaller relation, the number of chunks, or iterations of the outer loop, is B(S)/(M - 1). At each iteration, we read M - 1 blocks of S and B(R) blocks of R. The number of disk I/O's is thus

(B(S)/(M - 1)) × (M - 1 + B(R)), or

B(S) + B(S)B(R)/(M - 1)

Assuming all of M, B(S), and B(R) are large, but M is the smallest of these, an approximation to the above formula is B(S)B(R)/M. That is, the cost is proportional to the product of the sizes of the two relations, divided by the amount of available main memory.

101

EXAMPLE

B(R) = 1000, B(S) = 500, M = 101

Important Aside: 101 buffer blocks is not as unrealistic

as it sounds. There may be many queries at the same

time, competing for main memory buffers.

Outer loop iterates 5 times

At each iteration we read M-1 (i.e. 100) blocks of S and all

of R (i.e. 1000) blocks.

Total time: 5*(100 + 1000) = 5500 I/O’s

Question: What if we reversed the roles of R and S?

We would iterate 10 times, and in each we would read

100+500 blocks, for a total of 6000 I/O’s.

Compare with one-pass join, if it could be done!

We would need 1500 disk I/O’s, but only if B(S) ≤ M-1
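The arithmetic of this example can be checked with a small helper. The function name `nested_loop_io` is an assumption; it counts one read of the outer relation plus one full read of the inner relation per chunk of M - 1 outer blocks, which matches the slide's formula whenever B(outer) is a multiple of M - 1.

```python
import math


def nested_loop_io(b_outer, b_inner, m):
    """Disk I/O's for block-based nested-loop join with m memory buffers."""
    chunks = math.ceil(b_outer / (m - 1))   # iterations of the outer loop
    return b_outer + chunks * b_inner       # outer read once, inner read per chunk


print(nested_loop_io(500, 1000, 101))   # S as the outer relation
print(nested_loop_io(1000, 500, 101))   # roles of R and S reversed
print(1000 + 500)                       # one-pass join, possible only if B(S) <= M - 1
```

Using the smaller relation in the outer loop gives the lower cost, as the example states.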

102

CONTINUED………

1. The cost of the nested-loop join is not much greater than the cost of a one-pass join, which is 1500 disk I/O's for this example. In fact, if B(S) ≤ M - 1, the nested-loop join becomes identical to the one-pass join algorithm of Section 15.2.3.

2. Nested-loop join is generally not the most efficient join algorithm.

103

15.4 TWO-PASS ALGORITHMS BASED ON SORTING

INTRODUCTION

In two-pass algorithms, data from the operand relations is read into main memory, processed in some way, written out to disk again, and then reread from disk to complete the operation.

In this section, we consider sorting as a tool for implementing relational operations. The basic idea is as follows: if we have a large relation R, where B(R) is larger than M, the number of memory buffers we have available, then we can repeatedly

1. Read M blocks of R into main memory.
2. Sort these M blocks in main memory, using an efficient main-memory sorting algorithm.
3. Write the sorted list into M blocks of disk. We refer to the contents of these blocks as one of the sorted sublists of R.

105

DUPLICATE ELIMINATION USING SORTING

To perform the δ(R) operation in two passes, we sort the tuples of R in sublists. Then we use the available memory to hold one block from each sorted sublist, and repeatedly copy the least remaining tuple to the output and ignore all tuples identical to it.

106

The number of disk I/O's performed by this algorithm is:

1). B(R) to read each block of R when creating the sorted sublists.
2). B(R) to write each of the sorted sublists to disk.
3). B(R) to read each block from the sublists at the appropriate time.

So the total cost of this algorithm is 3B(R).
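The two phases can be sketched in memory as follows. This is a simplified illustration: the function name `two_pass_distinct` is hypothetical, each list element stands in for a whole block, and `heapq.merge` from the standard library plays the role of the merge over one buffer per sublist.

```python
import heapq


def two_pass_distinct(tuples, m):
    """Sort-based duplicate elimination with at most m items sorted at a time."""
    # Phase 1: create sorted sublists of at most m items each.
    sublists = [sorted(tuples[i:i + m]) for i in range(0, len(tuples), m)]
    # Phase 2: merge the sublists; equal tuples arrive next to each other,
    # so we output one copy and ignore the tuples identical to it.
    out = []
    for t in heapq.merge(*sublists):
        if not out or out[-1] != t:
            out.append(t)
    return out
```

A side effect of the sort-based method is that the output comes out in sorted order.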

107

GROUPING AND AGGREGATION USING SORTING

1. Read the tuples of R into memory, M blocks at a time. Sort each M blocks, using the grouping attributes of L as the sort key. Write each sorted sublist to disk.
2. Use one main-memory buffer for each sublist, and initially load the first block of each sublist into its buffer.
3. Repeatedly find the least value of the sort key present among the first available tuples in the buffers. This value identifies the next group, whose aggregates are accumulated from all tuples with that key.

As for the δ algorithm, this two-phase algorithm for γ takes 3B(R) disk I/O’s and will work as long as B(R) <= M^2.

108

A SORT BASED

UNION ALGORITHM

When bag union is wanted, the one-pass algorithm, in which we simply copy both relations, works regardless of the size of the arguments, so there is no need to consider a two-pass algorithm for bag union.

The one-pass algorithm for set union (∪S) only works when at least one relation is smaller than the available main memory, so we should consider a two-phase algorithm for set union. To compute R ∪S S, we do the following steps:

1. Repeatedly bring M blocks of R into main memory, sort their tuples, and write the resulting sorted sublists back to disk.
2. Do the same for S, to create sorted sublists for relation S.
3. Use one main-memory buffer for each sublist of R and S. Initialize each with the first block from the corresponding sublist.
4. Repeatedly find the first remaining tuple t among all buffers. Copy t to the output, and remove from the buffers all copies of t.

110

A SIMPLE SORT-BASED JOIN ALGORITHM

Given relation R(x,y) and S(y,z) to join, and given M

blocks of main memory for buffers,

1. Sort R, using a two phase, multiway merge sort,

with y as the sort key.

2. Sort S similarly

3. Merge the sorted R and S. Generally we use only

two buffers, one for the current block of R and the

other for current block of S. The following steps

are done repeatedly.

a. Find the least value y of the join attributes Y

that is currently at the front of the blocks for R

and S.

b. If y doesn’t appear at the front of the other

relation, then remove the tuples with sort key y.

c. Otherwise identify all the tuples from both

relation having sort key y

d. Output all the tuples that can be formed by

joining tuples from R and S with a common Y

value y.

e. If either relation has no more unconsidered

tuples in main memory reload the buffer for

that relation.

The simple sort join uses 5(B(R) + B(S)) disk I/O’s.

It requires B(R) <= M^2 and B(S) <= M^2 to work.
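The merge step (step 3) can be sketched in memory as below. This is an illustration under simplifying assumptions: Python's built-in `sorted` replaces the two-phase multiway merge sort, R has schema (x, y), S has schema (y, z), and the function name `sort_merge_join` is hypothetical.

```python
def sort_merge_join(R, S):
    """Join R(x, y) with S(y, z) by sorting both on y and merging."""
    R = sorted(R, key=lambda t: t[1])   # steps 1 and 2: sort both relations on y
    S = sorted(S, key=lambda t: t[0])
    out, i, j = [], 0, 0
    while i < len(R) and j < len(S):
        yr, ys = R[i][1], S[j][0]
        if yr < ys:          # y at the front of R has no partner in S: skip it
            i += 1
        elif yr > ys:        # same for the front of S
            j += 1
        else:
            # Identify all tuples on both sides that share this y value.
            i_end = i
            while i_end < len(R) and R[i_end][1] == yr:
                i_end += 1
            j_end = j
            while j_end < len(S) and S[j_end][0] == yr:
                j_end += 1
            # Output every combination of the two groups.
            for (x, y) in R[i:i_end]:
                for (_, z) in S[j:j_end]:
                    out.append((x, y, z))
            i, j = i_end, j_end
    return out
```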

112

SUMMARY OF SORT-BASED ALGORITHMS

Main memory and disk I/O requirements for sort based algorithms

113

15.5 TWO-PASS ALGORITHMS

BASED ON HASHING

Hashing is done if the data is too big to

store in main memory buffers.

Hash all the tuples of the argument(s)

using an appropriate hash key.

For all the common operations, there is a

way to select the hash key so all the

tuples that need to be considered together

when we perform the operation have the

same hash value.

This reduces the size of the operand(s) by

a factor equal to the number of buckets.

114

PARTITIONING RELATIONS BY

HASHING

Algorithm:

initialize M-1 buckets using M-1 empty buffers;

FOR each block b of relation R DO BEGIN

read block b into the Mth buffer;

FOR each tuple t in b DO BEGIN

IF the buffer for bucket h(t) has no room for t THEN

BEGIN

copy the buffer to disk;

initialize a new empty block in that buffer;

END;

copy t to the buffer for bucket h(t);

END ;

END ;

FOR each bucket DO

IF the buffer for this bucket is not empty THEN

write the buffer to disk;
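A Python rendering of the partitioning algorithm above, as a sketch. The names `partition_by_hash`, `block_size`, and the list `disk` are assumptions made for the illustration; "writing to disk" is simulated by appending a full buffer to a per-bucket list of blocks.

```python
def partition_by_hash(blocks, m, block_size, h=hash):
    """Partition the tuples of R into m - 1 buckets using hash function h."""
    n = m - 1
    buffers = [[] for _ in range(n)]   # one in-memory buffer per bucket
    disk = [[] for _ in range(n)]      # blocks already written out, per bucket
    for block in blocks:               # each block is read into the Mth buffer
        for t in block:
            i = h(t) % n
            if len(buffers[i]) == block_size:   # no room: flush and start a new block
                disk[i].append(buffers[i])
                buffers[i] = []
            buffers[i].append(t)
    for i in range(n):                 # flush the non-empty buffers at the end
        if buffers[i]:
            disk[i].append(buffers[i])
    return disk
```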

115

DUPLICATE ELIMINATION

For the operation δ(R), hash R to M-1 buckets.

Note: two copies of the same tuple t will hash to the same bucket.

Do duplicate elimination on each bucket Ri independently, using the one-pass algorithm.

The result is the union of δ(Ri), where Ri is the portion of R that hashes to the ith bucket.

116

REQUIREMENTS

Number of disk I/O's: 3*B(R)

In order for this to work, we need:
the hash function h to distribute the tuples evenly among the buckets
each bucket Ri to fit in main memory (to allow the one-pass algorithm)

The two-pass, hash-based algorithm will work only if B(R) < M(M-1), i.e., roughly B(R) ≤ M^2.

117

UNION, INTERSECTION, AND

DIFFERENCE

For a binary operation we use the same hash function to hash tuples of both arguments.

R ∪ S: we hash both R and S to M-1 buckets each
R ∩ S: we hash both R and S to 2(M-1) buckets in total
R - S: we hash both R and S to 2(M-1) buckets in total

Requires 3(B(R)+B(S)) disk I/O’s.

The two-pass hash-based algorithm requires min(B(R), B(S)) ≤ M^2.

118

HASH-JOIN ALGORITHM

Use the same hash function for both relations; the hash function should depend only on the join attributes.

Hash R to M-1 buckets R_1, R_2, …, R_{M-1}
Hash S to M-1 buckets S_1, S_2, …, S_{M-1}
Do a one-pass join of R_i and S_i, for all i

Cost: 3*(B(R) + B(S)) disk I/O's; requires min(B(R), B(S)) ≤ M^2
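The hash-join steps can be sketched in memory as follows, assuming R has schema (x, y), S has schema (y, z), and Python's built-in `hash` as the hash function on the join attribute. The function name `hash_join` is hypothetical, and the buckets are kept in lists rather than written to disk.

```python
from collections import defaultdict


def hash_join(R, S, m):
    """Two-pass hash join of R(x, y) and S(y, z) using m - 1 buckets."""
    n = m - 1
    r_buckets = [[] for _ in range(n)]
    s_buckets = [[] for _ in range(n)]
    # Pass 1: partition both relations with the same hash function on y.
    for (x, y) in R:
        r_buckets[hash(y) % n].append((x, y))
    for (y, z) in S:
        s_buckets[hash(y) % n].append((y, z))
    # Pass 2: one-pass join of each pair of corresponding buckets.
    out = []
    for r_i, s_i in zip(r_buckets, s_buckets):
        index = defaultdict(list)
        for (y, z) in s_i:
            index[y].append(z)
        for (x, y) in r_i:
            for z in index.get(y, ()):
                out.append((x, y, z))
    return out
```

Tuples that can join always land in buckets with the same index, so no pair is missed by joining only R_i with S_i.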

119

SORT BASED VS HASH BASED

For Binary operations, hash-based only limits

size to min of arguments, not sum

Sort-based can produce output in sorted order,

which can be helpful

Hash-based depends on buckets being of equal

size

Sort-based algorithms can experience reduced

rotational latency or seek time

120

15.6 INDEX-BASED ALGORITHMS

Clustering and Non-Clustering Indexes

Clustered relation: Tuples are packed into roughly as few blocks as can possibly hold those tuples.

Clustering index: An index on an attribute or attributes such that all the tuples with a fixed value for the search key of this index appear on roughly as few blocks as can hold them.

A relation that isn’t clustered cannot have a clustering index.

A clustered relation can have non-clustering indexes.

121

INDEX-BASED SELECTION

For a selection σC(R), suppose C is of the form

a=v, where a is an attribute

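For a clustering index on R.a:
The number of disk I/O’s will be about B(R)/V(R,a)

The actual number may be somewhat higher because:
The index is not kept entirely in main memory
The tuples with a = v may be spread over more blocks than the minimum
The tuples may not be packed as tightly as possible into blocks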

122

EXAMPLE

B(R)=1000, T(R)=20,000. Number of I/O’s required:

R clustered, no index used: 1000
R not clustered, no index used: 20,000
V(R,a)=100, index is clustering: 1000/100 = 10
V(R,a)=10, index is nonclustering: 20,000/10 = 2,000
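The four cases of this example can be reproduced with two small helpers. The function names are hypothetical, and the estimates ignore the I/O's needed to read the index itself.

```python
import math


def scan_io(b_r, t_r, clustered):
    """I/O's to read all of R without an index."""
    return b_r if clustered else t_r


def index_selection_io(b_r, t_r, v, clustering_index):
    """Estimated I/O's for a selection a = v answered through an index on a."""
    return math.ceil((b_r if clustering_index else t_r) / v)


print(scan_io(1000, 20000, clustered=True))                        # 1000
print(scan_io(1000, 20000, clustered=False))                       # 20000
print(index_selection_io(1000, 20000, 100, clustering_index=True)) # 10
print(index_selection_io(1000, 20000, 10, clustering_index=False)) # 2000
```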

123

JOINING BY USING AN INDEX

Natural join R(X, Y) ⋈ S(Y, Z), with an index on Y for S

Number of I/O’s to read R
Clustered: B(R)
Not clustered: T(R)

Number of I/O’s to fetch the matching tuples of S for all tuples t of R
Clustering index: T(R)B(S)/V(S,Y)
Nonclustering index: T(R)T(S)/V(S,Y)

124

EXAMPLE

R(X,Y): 1000 blocks; S(Y,Z): 500 blocks

Assume 10 tuples in each block, so T(R)=10,000 and T(S)=5000

V(S,Y)=100

If R is clustered, and there is a clustering index on Y for S:
the number of I/O’s for R is 1000
the number of I/O’s for S is 10,000*500/100 = 50,000
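The same calculation as a small sketch. The function name `index_join_io` and its parameter names are assumptions; it returns the cost of reading R and the cost of the index lookups into S as two separate numbers, using the formulas of the previous slide.

```python
def index_join_io(b_r, t_r, b_s, t_s, v_s_y, r_clustered, s_index_clustering):
    """Return (I/O's to read R, I/O's to fetch matching S tuples)."""
    read_r = b_r if r_clustered else t_r
    # Each tuple of R matches about T(S)/V(S,Y) tuples of S, which occupy
    # about B(S)/V(S,Y) blocks if the index on Y is clustering.
    per_tuple = (b_s if s_index_clustering else t_s) / v_s_y
    return read_r, t_r * per_tuple


print(index_join_io(1000, 10000, 500, 5000, 100, True, True))
print(index_join_io(1000, 10000, 500, 5000, 100, True, False))
```

The second call shows how much worse a nonclustering index on S would be for the same relations.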

125

JOINS USING A SORTED INDEX

Natural join R(X, Y) ⋈ S(Y, Z) with a sorted index on Y for either R or S

Extreme case: zig-zag join, when both relations have a sorted index on Y

Example:
relations R(X,Y) and S(Y,Z) with an index on Y for both relations
search keys (Y-values) for R: 1,3,4,4,5,6
search keys (Y-values) for S: 2,2,4,6,7,8
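The zig-zag traversal of the two key sequences can be sketched as below. The function name `zigzag_matches` is hypothetical; it works on the sorted key lists only and reports, for each Y-value present in both, how many index entries each relation has, which is what tells us which tuples must actually be fetched.

```python
def zigzag_matches(r_keys, s_keys):
    """Return (y, count in R, count in S) for each key found in both sorted lists."""
    out, i, j = [], 0, 0
    while i < len(r_keys) and j < len(s_keys):
        if r_keys[i] < s_keys[j]:      # advance in R's index
            i += 1
        elif r_keys[i] > s_keys[j]:    # advance in S's index
            j += 1
        else:
            y = r_keys[i]
            r_run = 0
            while i < len(r_keys) and r_keys[i] == y:
                r_run += 1
                i += 1
            s_run = 0
            while j < len(s_keys) and s_keys[j] == y:
                s_run += 1
                j += 1
            out.append((y, r_run, s_run))
    return out


print(zigzag_matches([1, 3, 4, 4, 5, 6], [2, 2, 4, 6, 7, 8]))
```

For the keys on this slide only the tuples with Y-values 4 and 6 need to be retrieved; tuples with keys 1, 3, 5 in R and 2, 7, 8 in S are never read.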

126

15.7 BUFFER MANAGEMENT

The Buffer Manager manages the memory required for read/write requests, with minimum delay.

[Figure: read/write requests go to the Buffer Manager, which manages the buffers.]

127

BUFFER MANAGEMENT

ARCHITECTURE

Two types of architecture:

Buffer Manager controls main memory directly

Buffer Manager allocates buffer in Virtual Memory

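In each method, the Buffer Manager should limit the number of buffers in use so that they fit in the available main memory.

When the Buffer Manager controls main memory directly, it selects a buffer to empty by returning its contents to disk. If the buffered block has not been changed, it may simply be erased from main memory.

When the Buffer Manager allocates buffers in virtual memory and more buffers are in use than fit in main memory, blocks are swapped back and forth to disk; if all the buffers are really in use, very little useful work gets done.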

128

BUFFER MANAGEMENT STRATEGIES

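LRU (Least Recently Used)
It frees the buffer holding the block that has not been read or written for the longest time.

FIFO (First In First Out)
It frees the buffer that has been occupied by the same block for the longest time and assigns it to the new request.

The “Clock” Algorithm
[Figure: buffers arranged in a circle, each with a flag of 0 or 1; a rotating hand looks for a buffer whose flag is 0.]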
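The slide only names the clock algorithm, so the following is a sketch of the usual "second chance" formulation rather than the presenter's own code. The class name `ClockBuffer` and its method `access` are assumptions, and pinned or dirty blocks are not modeled.

```python
class ClockBuffer:
    """Buffer pool with clock (second-chance) replacement."""

    def __init__(self, n):
        self.blocks = [None] * n   # block held by each buffer
        self.flags = [0] * n       # 1 = recently used, 0 = candidate for eviction
        self.hand = 0              # position of the clock hand

    def access(self, block):
        """Return the index of the buffer holding block, loading it if needed."""
        if block in self.blocks:
            i = self.blocks.index(block)
            self.flags[i] = 1      # a hit sets the flag back to 1
            return i
        while True:
            i = self.hand
            self.hand = (self.hand + 1) % len(self.blocks)
            if self.blocks[i] is None or self.flags[i] == 0:
                self.blocks[i] = block   # free or unflagged buffer: reuse it
                self.flags[i] = 1
                return i
            self.flags[i] = 0      # flagged buffer gets a second chance


buf = ClockBuffer(3)
for b in ["A", "B", "C", "D", "B", "E"]:
    buf.access(b)
print(buf.blocks)
```

The clock algorithm approximates LRU while needing only one flag per buffer instead of a full ordering by last use.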

129

THE RELATIONSHIP BETWEEN PHYSICAL

OPERATOR

SELECTION AND BUFFER MANAGEMENT

The query optimizer will eventually select a set of

physical operators that will be used to execute a

given query.

the buffer manager may not be able to guarantee

the availability of the buffers when the query is

executed.

130

CHAPTER 16

QUERY COMPILER

16.1 Parsing

16.2 Algebraic Laws for Improving Query Plans

16.3 From Parse Trees to Logical Query Plans

16.4 Estimating the Cost of Operations

16.5 Introduction to Cost-Based Plan Selection

16.6 Choosing an Order for Joins

16.7 Completing the Physical-Query-Plan.

131

16.1 PARSING

Query compilation is divided into three steps:

1. Parsing: Parse the SQL query into a parse tree.
2. Logical query plan: Transform the parse tree into an expression tree of relational algebra.
3. Physical query plan: Transform the logical query plan into a physical query plan.

The physical query plan indicates:
The operations performed
The order of the operations
The algorithm used for each operation
The way in which stored data is obtained and passed from one operation to another.

132

[Figure: Parser → Preprocessor → Logical query plan generator → Query rewrite → Preferred logical query plan]

133

SYNTAX ANALYSIS AND PARSE TREE

The parser takes the SQL query and converts it to a parse tree.

Nodes of the parse tree:

#Atoms: lexical elements such as keywords, constants, parentheses, operators, and other schema elements.

#Syntactic categories: names for subparts of a query that play a similar role, written in angle brackets, e.g. <Query>, <Condition>.

134

SIMPLE GRAMMAR

<Query> ::= <SFW>

<Query> ::= (<Query>)

<SFW> ::= SELECT <SelList> FROM <FromList> WHERE <Condition>

<SelList> ::= <Attribute>,<SelList>

<SelList> ::= <Attribute>

<FromList> ::= <Relation>, <FromList>

<FromList> ::= <Relation>

<Condition> ::= <Condition> AND <Condition>

<Condition> ::= <Tuple> IN <Query>

<Condition> ::= <Attribute> = <Attribute>

<Condition> ::= <Attribute> LIKE <Pattern>

<Tuple> ::= <Attribute>

Atoms(constants), <syntactic categories>(variable),

::= (can be expressed/defined as)

135

QUERY AND PARSE TREE

StarsIn(title,year,starName)

MovieStar(name,address,gender,birthdate)

Query:

Give titles of movies that have at least one star

born in 1960

SELECT title FROM StarsIn WHERE starName IN

(

SELECT name FROM MovieStar WHERE birthdate

LIKE '%1960%'

);

SELECT title

FROM StarsIn, MovieStar

WHERE starName = name AND birthdate LIKE '%1960%'

;

136

137

PARSE TREE

138

PREPROCESSOR

Functions of Preprocessor

. If a relation used in the query is a virtual view, then each use of this relation in the FROM-list must be replaced by a parse tree that describes the view.

. It is also responsible for semantic checking:

1. Check relation uses: Every relation mentioned in a FROM clause must be a relation or a view in the current schema.
2. Check and resolve attribute uses: Every attribute mentioned in the SELECT or WHERE clause must be an attribute of some relation in the current scope.
3. Check types: All attributes must be of a type appropriate to their uses.

139

PREPROCESSING QUERIES INVOLVING

VIEWS

When an operand in a query is a virtual view, the

preprocessor needs to replace the operand by a piece

of parse tree that represents how the view is

constructed from base table.

Base Table: Movies(title, year, length, genre, studioName, producerC#)

View definition:
CREATE VIEW ParamountMovies AS
SELECT title, year FROM Movies
WHERE studioName = 'Paramount';

Example based on view:
SELECT title FROM ParamountMovies WHERE year = 1979;

140

16.2 ALGEBRAIC LAWS FOR IMPROVING

QUERY PLANS

Optimizing the Logical Query Plan

The translation rules converting a parse

tree to a logical query tree do not always

produce the best logical query tree.

It is often possible to optimize the logical

query tree by applying relational algebra

laws to convert the original tree into a

more efficient logical query tree.

Optimizing a logical query tree using

relational algebra laws is called heuristic

optimization

141

RELATIONAL ALGEBRA LAWS

These laws often involve the properties of:

Commutativity - operator can be applied to

operands independent of order.

E.g. A + B = B + A - The “+” operator

is commutative.

Associativity - operator is independent of operand

grouping.

E.g.

A + (B + C) = (A + B) + C - The

“+” operator is associative.

142

ASSOCIATIVE AND COMMUTATIVE

OPERATORS

The relational algebra operators of cross-product (×), join (⋈), union (∪), and intersection (∩) are all associative and commutative.

Commutative          Associative
R × S = S × R        (R × S) × T = R × (S × T)
R ⋈ S = S ⋈ R        (R ⋈ S) ⋈ T = R ⋈ (S ⋈ T)
R ∪ S = S ∪ R        (R ∪ S) ∪ T = R ∪ (S ∪ T)
R ∩ S = S ∩ R        (R ∩ S) ∩ T = R ∩ (S ∩ T)

143

LAWS INVOLVING SELECTION

Complex selections involving AND or OR can be broken into two or more selections (splitting laws):

σC1 AND C2 (R) = σC1( σC2 (R))
σC1 OR C2 (R) = ( σC1 (R) ) ∪S ( σC2 (R) ), which holds only if R is a set

Example showing why the OR law fails for bags:
R = {a,a,b,b,b,c}
p1 satisfied by a,b; p2 satisfied by b,c
σp1∨p2 (R) = {a,a,b,b,b,c}
σp1(R) = {a,a,b,b,b}
σp2(R) = {b,b,b,c}
σp1 (R) ∪B σp2 (R) = {a,a,b,b,b,b,b,b,c}, which is not σp1∨p2 (R)
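The example can be checked with `collections.Counter` as a bag, where `+` on counters adds multiplicities and so behaves like bag union. The helper `select` and the predicates `p1` and `p2` are hypothetical names for this sketch.

```python
from collections import Counter


def select(bag, pred):
    """Bag selection: keep each tuple that satisfies pred, with its multiplicity."""
    return Counter({t: c for t, c in bag.items() if pred(t)})


R = Counter("aabbbc")            # the bag {a,a,b,b,b,c}
p1 = lambda t: t in "ab"
p2 = lambda t: t in "bc"

or_selection = select(R, lambda t: p1(t) or p2(t))
bag_union = select(R, p1) + select(R, p2)        # b is counted 3 + 3 times
and_split = select(select(R, p2), p1)            # cascade of two selections
and_direct = select(R, lambda t: p1(t) and p2(t))

print(or_selection == bag_union)   # False: the OR law fails with bag union
print(and_split == and_direct)     # True: the AND law holds for bags
```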

144

CONTINUED………………….

Selection is pushed through both arguments for union:
σC(R ∪ S) = σC(R) ∪ σC(S)

Selection is pushed to the first argument and optionally the second for difference:
σC(R - S) = σC(R) - S
σC(R - S) = σC(R) - σC(S)

All other operators require selection to be pushed to only one of the arguments. For joins, we may not be able to push the selection to both arguments if one argument does not have the attributes the selection requires. Assuming R has all the attributes mentioned in C:
σC(R × S) = σC(R) × S
σC(R ∩ S) = σC(R) ∩ S
σC(R ⋈ S) = σC(R) ⋈ S
σC(R ⋈D S) = σC(R) ⋈D S

145

LAWS INVOLVING PROJECTION

It is also possible to push projections down the logical query tree.

However, the performance gained is less than selections because

projections just reduce the number of attributes instead of

reducing the number of tuples.

Laws for pushing projections with joins, where M and N are the attributes of R and S, respectively, that are in L or are needed for the join:
πL(R × S) = πL(πM(R) × πN(S))
πL(R ⋈ S) = πL(πM(R) ⋈ πN(S))
πL(R ⋈D S) = πL(πM(R) ⋈D πN(S))

Laws for pushing projections with set operations:
Projection can be performed entirely before bag union.
πL(R ∪B S) = πL(R) ∪B πL(S)

Projection can be pushed below selection as long as we also keep all attributes needed for the selection (M = L ∪ attr(C)).
πL ( σC (R)) = πL( σC (πM(R)))

146

LAWS INVOLVING JOIN

1. Joins are commutative and associative.
2. Selection can be distributed into joins.
3. Projection can be distributed into joins.

147

LAWS INVOLVING DUPLICATE

ELIMINATION

The duplicate elimination operator (δ) can be pushed through many operators, but not all. For example, if R has two copies of tuple t and S has one copy of t, then under bag union
δ(R ∪ S) = one copy of t
δ(R) ∪ δ(S) = two copies of t

Laws for pushing the duplicate elimination operator (δ):
δ(R × S) = δ(R) × δ(S)
δ(R ⋈ S) = δ(R) ⋈ δ(S)
δ(R ⋈D S) = δ(R) ⋈D δ(S)
δ(σC(R)) = σC(δ(R))

The duplicate elimination operator (δ) can also be pushed through bag intersection, but not across bag union, bag difference, or projection in general.
δ(R ∩ S) = δ(R) ∩ δ(S)

148

LAWS INVOLVING GROUPING

The grouping operator (γ) laws depend on the aggregate operators used.

There is one general rule, however, that grouping subsumes duplicate elimination:
δ(γL(R)) = γL(R)

The laws depend on the aggregates because some aggregate functions are unaffected by duplicates (MIN and MAX) while other functions are affected (SUM, COUNT, and AVG).

149

16.3 FROM PARSE TREES TO LOGICAL

QUERY PLANS

Parsing

Goal is to convert a text string containing a query

into a parse tree data structure:

Leaves form the text string (broken into lexical elements)

Internal nodes are syntactic categories

Uses standard algorithmic techniques from compilers

Given a grammar for the language (e.g., SQL), process the

string and build the tree

150

CONVERT PARSE TREE TO RELATIONAL

ALGEBRA

The complete algorithm depends on the specific grammar, which determines the forms of the parse trees.

Here is a flavor of the approach. Suppose there are no subqueries.

SELECT att-list FROM rel-list WHERE cond

is converted into

PROJ att-list (SELECT cond (PRODUCT (rel-list))), or

π att-list (σ cond (× (rel-list)))

151

SELECT movieTitle
FROM StarsIn, MovieStar
WHERE starName = name AND birthdate LIKE '%1960';

Parse tree:

<Query>
  <SFW>
    SELECT
    <SelList>
      <Attribute>: movieTitle
    FROM
    <FromList>
      <RelName>: StarsIn
      ,
      <FromList>
        <RelName>: MovieStar
    WHERE
    <Condition>
      <Condition>
        <Attribute>: starName
        =
        <Attribute>: name
      AND
      <Condition>
        <Attribute>: birthdate
        LIKE
        <Pattern>: '%1960'

EQUIVALENT ALGEBRAIC EXPRESSION

TREE

π movieTitle
  σ starName = name AND birthdate LIKE '%1960'
    ×
      StarsIn
      MovieStar

153

Query:

SELECT title
FROM StarsIn
WHERE starName IN (
SELECT name
FROM MovieStar
WHERE birthdate LIKE '%1960'
);

Use an intermediate format called two-argument selection.

154

EXAMPLE: TWO-ARGUMENT

SELECTION

π title
  σ (two-argument selection)
    StarsIn
    <condition>
      <tuple>
        <attribute>: starName
      IN
      π name
        σ birthdate LIKE '%1960'
          MovieStar

155

CONVERTING TWO-ARGUMENT

SELECTION

To continue the conversion, we need rules for

replacing two-argument selection with a

relational algebra expression

Different rules depending on the nature of the

sub query

Here is shown an example for IN operator and

uncorrelated query (sub query computes a

relation independent of the tuple being tested)

156

IMPROVING THE LOGICAL QUERY

PLAN

There are numerous algebraic laws concerning

relational algebra operations

By applying them to a logical query plan

judiciously, we can get an equivalent query plan

that can be executed more efficiently

157

EXAMPLE: IMPROVED LOGICAL QUERY

PLAN

π title
  ⋈ starName = name
    StarsIn
    π name
      σ birthdate LIKE '%1960'
        MovieStar

158

Associative and

Commutative Operations

• Product
• Natural join
• Set and Bag union
• Set and Bag intersection

Associative: (A op B) op C = A op (B op C)

Commutative: A op B = B op A

159

Laws Involving Selection

• Selections usually reduce the size of the relation

• Usually good to do selections early, i.e., "push them down the tree"

• Also can be helpful to break up a complex selection into parts

Selection Splitting

σ C1 AND C2 (R) = σ C1 (σ C2 (R))

σ C1 OR C2 (R) = (σ C1 (R)) ∪set (σ C2 (R)), if R is a set

σ C1 (σ C2 (R)) = σ C2 (σ C1 (R))

160

Selection and Binary Operators

• Must push selection to both arguments:
– σC (R ∪ S) = σC (R) ∪ σC (S)
• Must push to first arg, optional for 2nd:
– σC (R - S) = σC (R) - S
– σC (R - S) = σC (R) - σC (S)
• Push to at least one arg with all attributes mentioned in C:
– product, natural join, theta join, intersection
– e.g., σC (R × S) = σC (R) × S, if R has all the atts in C

161

Pushing Selection Up the

Tree

• Suppose we have relations

– StarsIn(title,year,starName)

– Movie(title,year,len,inColor,studioName)

• and a view

– CREATE VIEW MoviesOf1996 AS

SELECT *

FROM Movie

WHERE year = 1996;

• and the query

– SELECT starName, studioName

FROM MoviesOf1996 NATURAL JOIN StarsIn;

162

The Straightforward Tree

π starName,studioName
  ⋈
    σ year=1996
      Movie
    StarsIn

Remember the rule σC(R ⋈ S) = σC(R) ⋈ S?

163

The Improved Logical Query

Plan

1. Original tree: π starName,studioName (σ year=1996 (Movie) ⋈ StarsIn)

2. Push selection up the tree: π starName,studioName (σ year=1996 (Movie ⋈ StarsIn))

3. Push selection down the tree: π starName,studioName (σ year=1996 (Movie) ⋈ σ year=1996 (StarsIn))

164

Grouping Assoc/Comm

Operators

• Groups together adjacent joins, adjacent unions, and

adjacent intersections as siblings in the tree

• Sets up the logical QP for future optimization when

physical QP is constructed: determine best order for

doing a sequence of joins (or unions or intersections)

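[Figure: a tree of nested binary operators over relations A, B, C, D, E, F is regrouped so that adjacent associative and commutative operators share a single node with many children.]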

16.4 ESTIMATING THE COST OF

OPERATIONS

A physical plan specifies:

An order and grouping for associative-and-commutative operations like joins and unions.
An algorithm for each operator in the logical plan, e.g. whether nested-loop join or hash join is to be used.
Additional operators that are needed for the physical plan but that were not present explicitly in the logical plan, e.g. scanning, sorting.
The way in which arguments are passed from one operator to the next.

166

ESTIMATING SIZES OF INTERMEDIATE

RELATIONS

Rules for estimating the number of tuples in an intermediate relation should:

1. Give accurate estimates
2. Be easy to compute
3. Be logically consistent

The objective of estimation is to select the best physical plan, the one with least cost.

Estimating the Size of a Projection

The projection is different from the other operators, in that the size of the result is computable. Since a projection produces a result tuple for every argument tuple, the only change in the output size is the change in the lengths of the tuples.

167

ESTIMATING THE SIZE OF A SELECTION(1) &

SELECTION(2)

Let S = σA=c(R), where A is an attribute of R and c is a constant. Then we recommend as an estimate:
T(S) = T(R)/V(R,A)
The rule above surely holds if all values of attribute A occur equally often in the database.

If S = σa<c(R), then our estimate for T(S) is: T(S) = T(R)/3

If S = σa≠c(R), we may use T(S) = T(R)(V(R,a) - 1)/V(R,a) as an estimate.

When the selection condition C is the AND of several equalities and inequalities, we can treat the selection as a cascade of simple selections, each of which checks for one of the conditions.

168

ESTIMATING THE SIZE OF A

SELECTION(3)

A less simple, but possibly more accurate estimate of the size of S = σC1 OR C2(R) is to assume that C1 and C2 are independent. If R has n tuples, m1 of which satisfy C1 and m2 of which satisfy C2, we would estimate the number of tuples in S as

n(1 - (1 - m1/n)(1 - m2/n))

In explanation, 1 - m1/n is the fraction of tuples that do not satisfy C1, and 1 - m2/n is the fraction that do not satisfy C2. The product of these numbers is the fraction of R’s tuples that are not in S, and 1 minus this product is the fraction that are in S.
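The estimate n(1 - (1 - m1/n)(1 - m2/n)) is easy to evaluate numerically. The function name `estimate_or` and the numbers in the call are made up for illustration and do not come from the slides.

```python
def estimate_or(n, m1, m2):
    """Estimated size of a selection C1 OR C2, assuming C1 and C2 are independent."""
    not_c1 = 1 - m1 / n    # fraction of tuples that do not satisfy C1
    not_c2 = 1 - m2 / n    # fraction of tuples that do not satisfy C2
    return n * (1 - not_c1 * not_c2)


# Hypothetical relation: 10,000 tuples, 50 satisfy C1, 1,000 satisfy C2.
print(estimate_or(10000, 50, 1000))
```

The result is slightly below m1 + m2, because tuples that satisfy both conditions are counted only once.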

169

ESTIMATING THE SIZE OF A JOIN

Two simplifying assumptions:

1. Containment of Value Sets
If R and S are two relations with attribute Y and V(R,Y) <= V(S,Y), then every Y-value of R will be a Y-value of S.

2. Preservation of Value Sets
If we join a relation R with another relation S, and attribute A is in R but not in S, then all distinct values of A are preserved and not lost: V(S ⋈ R, A) = V(R, A)

Under these assumptions, we estimate
T(R ⋈ S) = T(R)T(S)/max(V(R,Y), V(S,Y))

170

NATURAL JOINS WITH MULTIPLE JOIN

ATTRIBUTES

Of the T(R),T(S) pairs of tuples from R and S, the

expected number of pairs that match in both y1 and

y2 is:

T(R)T(S)/max(V(R,y1), V(S,y1)) max(V(R, y2), V(S,

y2))

In general, the following rule can be used to

estimate the size of a natural join when there are

any number of attributes shared between the two

relations.

The estimate of the size of R ⋈ S is computed by multiplying T(R) by T(S) and dividing by the larger of V(R,y) and V(S,y) for each attribute y that is common to R and S.
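The general rule for any number of shared attributes might be coded as below. This is a sketch under the assumption that each relation's statistics are available as a dictionary mapping attribute names to V values; that representation and the sample numbers are invented for this example.

```python
def est_natural_join(t_r, t_s, v_r, v_s):
    """Estimate T(R join S) for a natural join on all shared attributes.

    v_r, v_s -- dicts mapping attribute name -> number of distinct values
                (hypothetical representation of V(R,y) and V(S,y)).
    """
    size = t_r * t_s
    # Divide by the larger V for each attribute common to R and S.
    for y in v_r.keys() & v_s.keys():
        size /= max(v_r[y], v_s[y])
    return size


# Hypothetical statistics for R(x, y1, y2) and S(y1, y2, z).
v_r = {"x": 500, "y1": 20, "y2": 100}
v_s = {"y1": 50, "y2": 50, "z": 400}
join_size = est_natural_join(1000, 2000, v_r, v_s)
print(join_size)
```

Here the product T(R)T(S) = 2,000,000 is divided by max(20, 50) = 50 for y1 and by max(100, 50) = 100 for y2.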

JOINS OF MANY RELATIONS(1) & (2)

Rule for estimating the size of any join

Start with the product of the number of tuples in each

relation. Then, for each attribute A appearing at least twice,

divide by all but the least of V(R,A)’s.

We can estimate the number of values that will remain for

attribute A after the join. By the preservation-of-value-sets

assumption, it is the least of these V(R,A)’s.

Based on the two assumptions (containment and preservation of value sets):

No matter how we group and order the terms in a natural join of n relations, the estimation rules, applied to each join individually, yield the same estimate for the size of the result. Moreover, this estimate is the same as the one we get if we apply the rule for the join of all n relations as a whole.
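The order-independence claim can be checked numerically. The sketch below applies the multiway rule to three relations at once and then applies the pairwise rule in two different groupings, carrying forward the smaller V for each shared attribute. The relation statistics and the (T, V-dictionary) representation are assumptions made for illustration.

```python
from math import prod


def est_multiway_join(relations):
    """Multiway rule: product of sizes, then for each attribute appearing at
    least twice, divide by all but the least of its V values.

    relations -- list of (T, {attribute: V}) pairs (hypothetical layout).
    """
    size = prod(t for t, _ in relations)
    counts_by_attr = {}
    for _, v in relations:
        for attr, count in v.items():
            counts_by_attr.setdefault(attr, []).append(count)
    for counts in counts_by_attr.values():
        if len(counts) >= 2:
            for c in sorted(counts)[1:]:   # skip the least V
                size /= c
    return size


def est_pair_join(left, right):
    """Pairwise rule; the result keeps the smaller V for each shared attribute."""
    (t_l, v_l), (t_r, v_r) = left, right
    size = t_l * t_r
    merged = {**v_l, **v_r}
    for attr in v_l.keys() & v_r.keys():
        size /= max(v_l[attr], v_r[attr])
        merged[attr] = min(v_l[attr], v_r[attr])
    return size, merged


# Hypothetical statistics for R(a,b,c), S(b,c,d), U(b,e).
R = (1000, {"a": 100, "b": 20, "c": 200})
S = (2000, {"b": 50, "c": 100, "d": 400})
U = (5000, {"b": 200, "e": 500})

whole = est_multiway_join([R, S, U])
left_first = est_pair_join(est_pair_join(R, S), U)[0]    # (R join S) join U
right_first = est_pair_join(R, est_pair_join(S, U))[0]   # R join (S join U)
print(whole, left_first, right_first)
```

All three computations agree for these numbers, which is what the rule predicts.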

ESTIMATING SIZES FOR OTHER

OPERATIONS

• Union: the average of the sum and the larger, i.e. the larger plus half the smaller.

• Intersection:

approach 1: take the average of the extremes (0 and the size of the smaller relation), which is half the smaller.

approach 2: intersection is an extreme case of the natural join; use the formula T(R ⋈ S) = T(R)T(S)/max(V(R,Y), V(S,Y))

• Difference: T(R) - (1/2)*T(S)

• Duplicate Elimination: take the smaller of (1/2)*T(R) and the product of all the V(R, ai)’s.

• Grouping and Aggregation: upper-bound the number of groups by a product of V(R,A)’s, where attribute A ranges over only the grouping attributes of L. An estimate is the smaller of (1/2)*T(R) and this product.
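These rules of thumb translate directly into small helper functions. The sketch below is illustrative only: the function names and sample sizes (T(R) = 1000, T(S) = 400) are assumptions, and the intersection uses approach 1.

```python
from math import prod


def est_union(t_r, t_s):
    """Average of the sum and the larger of the two sizes."""
    return ((t_r + t_s) + max(t_r, t_s)) / 2


def est_intersection(t_r, t_s):
    """Approach 1: half the size of the smaller relation."""
    return min(t_r, t_s) / 2


def est_difference(t_r, t_s):
    """T(R) - (1/2) * T(S)."""
    return t_r - t_s / 2


def est_distinct(t_r, v_counts):
    """Duplicate elimination: smaller of T(R)/2 and the product of the V's."""
    return min(t_r / 2, prod(v_counts))


def est_groups(t_r, grouping_v_counts):
    """Grouping: smaller of T(R)/2 and the product of the V's of the
    grouping attributes only."""
    return min(t_r / 2, prod(grouping_v_counts))


# Hypothetical sizes: T(R) = 1000, T(S) = 400.
print(est_union(1000, 400))          # larger plus half the smaller
print(est_intersection(1000, 400))
print(est_difference(1000, 400))
print(est_distinct(1000, [10, 20]))  # V product 200 is below T(R)/2 = 500
print(est_groups(1000, [40, 30]))    # V product 1200 is capped by T(R)/2
```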

16.5 INTRODUCTION TO COST-BASED PLAN SELECTION

The "cost" of evaluating an expression is approximated well

by the number of disk I/O's performed.

The number of disk I/O’s, in turn, is influenced by:

1. The particular logical operators chosen to implement the

query, a matter decided when we choose the logical query

plan.

2. The sizes of intermediate results (whose estimation we

discussed in Section 16.4)

3. The physical operators used to implement logical operators, e.g., the choice of a one-pass or two-pass join, or the choice to sort or not sort a given relation.

4. The ordering of similar operations, especially joins.

5. The method of passing arguments from one physical operator to the next.

Whether selecting a logical query plan or

constructing a physical query plan from a logical

plan, the query optimizer needs to estimate the

cost of evaluating certain expressions.

We shall assume that the "cost" of evaluating an

expression is approximated well by the number of

disk I/O's performed.

ESTIMATES FOR SIZE PARAMETERS

The formulas of Section 16.4 were predicated on knowing certain important parameters, especially T(R), the number of tuples in a relation R, and V(R, a), the number of different values in the column of relation R for attribute a.

A modern DBMS generally allows the user or administrator to explicitly request the gathering of statistics, such as T(R) and V(R, a). These statistics are then used in subsequent query optimizations to estimate the cost of operations.

By scanning an entire relation R, it is straightforward to count

the number of tuples T(R) and also to discover the number of

different values V(R, a) for each attribute a.

The number of blocks in which R can fit, B(R), can be estimated either by counting the actual number of blocks used (if R is clustered), or by dividing T(R) by the number of tuples per block.
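A single scan that gathers these statistics could look like the sketch below. It assumes a relation is given as an iterable of dictionaries (one per tuple) and that the number of tuples per block is known; both are assumptions made for this example, as is rounding B(R) up to a whole block.

```python
from math import ceil


def gather_statistics(rows, tuples_per_block):
    """Scan a relation once and return (T(R), {a: V(R,a)}, B(R)).

    rows             -- iterable of dicts, one per tuple (hypothetical layout)
    tuples_per_block -- assumed known from the tuple and block sizes
    """
    t = 0
    distinct = {}
    for row in rows:
        t += 1
        for attr, value in row.items():
            distinct.setdefault(attr, set()).add(value)
    v = {attr: len(values) for attr, values in distinct.items()}
    # Divide T(R) by tuples per block; rounding up is an assumption here,
    # since a partly filled block still occupies a whole block.
    b = ceil(t / tuples_per_block)
    return t, v, b


# Tiny made-up relation R(a, b).
rows = [
    {"a": 1, "b": "x"},
    {"a": 1, "b": "y"},
    {"a": 2, "b": "x"},
]
t_r, v_r, b_r = gather_statistics(rows, tuples_per_block=2)
print(t_r, v_r, b_r)
```

Keeping a set of seen values per attribute is the simplest way to count V(R, a) exactly, though it uses memory proportional to the number of distinct values.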

COMPUTATION OF STATISTICS