Price and Incentives I

Pricing and Incentives II

KSE 801

Uichin Lee

The Labor Economics of Paid Crowdsourcing

John J. Horton and Lydia B. Chilton

EC’10

Labor-Supply of Crowdsourcing

• How do workers decide whether or not to participate in a crowdsourcing project?

• How do workers decide how much to produce, conditional on participating?

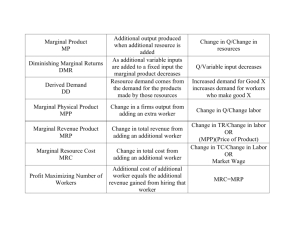

Theory

• Every time-consuming activity has an “opportunity cost”

– The opportunity cost of doing A is the forgone net benefit one would have obtained from the next-best option B

• A person will work only when the net benefits from working exceed the hypothetical net benefits from their next-best alternative (e.g., another job, leisure, or a renewed job search)

– The value of the “next-best alternative” is characterized as a reservation wage

Theory

• The reservation wage is difficult to estimate in practice

– All jobs offer a mixture of non-monetary benefits, costs, amenities/disamenities, etc.

• E.g., the non-monetary differences between working as a coal miner and working as an ice-cream taster

– Observing someone working tells us only that total benefits exceed total costs, not what the total cost actually is

• Example:

– A job offers wage w and a stream of amenities and disamenities, a and d respectively

– If a worker works for time t, they receive the benefit (w+a)*t and bear the cost d*t

– If the worker has a reservation wage of w̲ (w with an underbar, distinct from the offered wage w), then observing someone working tells us this: (w+a-d)*t >= w̲*t

Theory

• To identify the reservation wage w̲, we need to find the worker’s indifference point w*, where w*+a-d = w̲

• To push a worker down to their indifference point, we lower the wage by small amounts “until they choose to quit”

– This happens when they are “indifferent” between continuing to work and taking their next-best alternative (earning their reservation wage)

• “Decreasing the wage” is less practical in traditional labor relationships, but in online labor marketplaces it can easily be done for “small, piece-rate tasks”

– This works when the process is explained up front and workers have little emotional investment in their seconds-old “job”
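The wage-lowering procedure can be sketched as a simple loop. This is a minimal illustration, assuming a hypothetical worker who keeps working as long as the offered wage plus amenities minus disamenities is at least their reservation wage; the function name and all numbers are made up for the example and do not come from the paper.

```python
def find_indifference_wage(start_wage, step, amenity, disamenity, reservation_wage):
    """Lower the offered wage in small steps until the simulated worker quits.

    Returns the last wage the worker accepted, which approximates (within one
    step) the indifference wage w* where w* + a - d equals the reservation wage.
    Returns None if the worker refuses even the starting wage.
    """
    wage = start_wage
    last_accepted = None
    # The worker keeps working while the net benefit covers the reservation wage.
    while wage >= 0 and wage + amenity - disamenity >= reservation_wage:
        last_accepted = wage
        wage -= step
    return last_accepted


# Hypothetical values in cents per hour: a = 50, d = 20, reservation wage = 150.
w_star = find_indifference_wage(start_wage=300, step=5, amenity=50,
                                disamenity=20, reservation_wage=150)
print("approximate indifference wage:", w_star)
print("implied reservation wage:", w_star + 50 - 20)
```

A smaller step gives a tighter estimate at the cost of more rounds of work before the worker quits.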

Theory

• Workers choose some non-negative, continuous quantity to produce, y >= 0

• Worker’s maximization problem:

$$\max_{y} \; P(y) - C(y) \quad \text{s.t.} \quad y \ge 0$$

– P(y) is strictly increasing, P’(y) > 0, and concave, P’’(y) <= 0

– It costs the worker C(y) to complete y tasks

• The first-order condition is P’(y*) = C’(y*): the marginal benefit of working equals the marginal cost

Theory

• The first-order condition identifies a maximum only when P(y) – C(y) is concave

• Marginal cost C’(y) can be:

– Increasing: C’’(y) > 0, say if the task is very tiring

– Decreasing: C’’(y) < 0, say if the worker gains experience

– Constant: C’’(y) = 0 (C is linear), or a mixture of these

• If P(y) is linear, i.e., P(y) = π*y, then P(y) – C(y) is strictly concave only when –C(y) is strictly concave, i.e., C(y) is strictly convex

• What if the marginal cost C’(y) is not increasing (e.g., constant at w*t)?

– If π/t >= w, the worker completes all available tasks

– Otherwise, the worker performs no tasks
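The all-or-nothing outcome follows from the sign of the marginal net benefit. A short derivation, assuming a linear payment P(y) = πy, a constant marginal cost wt, and an upper bound Y on the number of available tasks (Y is introduced here only for illustration):

```latex
\frac{d}{dy}\bigl[P(y) - C(y)\bigr] = \pi - wt
\quad\Longrightarrow\quad
y^* =
\begin{cases}
Y & \text{if } \pi/t \ge w,\\
0 & \text{otherwise.}
\end{cases}
```

Because the derivative does not depend on y, there is no interior optimum: the worker either takes every task or none.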

Theory

• Assume C’(y) = w*t(y), where w is the worker’s reservation wage and t(y) is the marginal completion time

– t(y) is actually constant (as measured in the experiment), so the marginal cost is constant and the cost is linear: C’(y) = wt

– Thus, –C(y) is concave (though not strictly)

• If P(y) is strictly concave, then P(y) – C(y) is strictly concave, and the solution of the worker's maximization problem satisfies P’(y*) = wt

• A worker’s reservation wage can be estimated directly from their output choice:

– If worker i completes yi* tasks (i.e., quits after that many tasks), then wi = P’(yi*)/ti, where ti is worker i’s average completion time

– Discrete tasks: wi = {p(yi*) + p(yi*+1)} / (2*ti)

• Here, p(y) = P(y) – P(y-1)
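The discrete estimator can be written in a few lines. This is a minimal sketch: the function names are illustrative, and the concave payment schedule used in the example is hypothetical rather than the one from the experiment.

```python
def marginal_payment(P, y):
    """p(y) = P(y) - P(y - 1): the pay earned for completing the y-th task."""
    return P(y) - P(y - 1)


def reservation_wage(P, tasks_completed, avg_seconds_per_task):
    """Discrete estimate w_i = [p(y*) + p(y* + 1)] / (2 * t_i).

    Averages the last marginal payment the worker accepted and the first one
    they declined, then divides by the time one task takes.
    The result is in pay units per second.
    """
    if tasks_completed < 1 or avg_seconds_per_task <= 0:
        raise ValueError("need at least one completed task and a positive time")
    accepted = marginal_payment(P, tasks_completed)
    declined = marginal_payment(P, tasks_completed + 1)
    return (accepted + declined) / (2 * avg_seconds_per_task)


# Hypothetical concave schedule in cents (illustration only, not from the paper).
def example_schedule(y):
    return 10 * y - 0.25 * y ** 2


wage_cents_per_second = reservation_wage(example_schedule, tasks_completed=8,
                                         avg_seconds_per_task=30)
wage_dollars_per_hour = wage_cents_per_second * 3600 / 100
print(f"estimated reservation wage: ${wage_dollars_per_hour:.2f}/hr")
```

Averaging p(y*) and p(y*+1) brackets the true indifference point, since the worker accepted the first payment and turned down the second.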

Experimental Setup

• Two ways of lowering wages are tested:

– Experiment A: increasing the output workers must produce to earn the same pay (i.e., easy vs. difficult tasks)

– Experiment B: lowering the pay while keeping the required amount of work constant

• User interface test: Fitts’ law test

– Difficulty is measured by the distance

– Block: 10 back-and-forth clicks

[Figure: Fitts’ law task. Bars have a fixed width; the distance between them is 100px (easy) or 600px (difficult); the bar moves after each click.]

Experimental Setup

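• Payment function:

– Up-front display of payment in each block

– k is configured so that P(10) = ½ of the maximum payment; with a maximum payment of 10, k = 1/10*ln2

– Marginal payment for a block, e.g., p(3) = P(3)-P(2) = 1.87-1.29 = 0.58

[Figure: payment P(y) vs. y = # of blocks for a maximum payment of 10, with labeled points P(5) = 2.9, P(10) = 5, and P(25) = 8.2]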
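The labeled values on this slide are consistent with a saturating exponential schedule, P(y) = Pmax * (1 - exp(-k*y)). That functional form is an assumption inferred from the numbers, not something stated in the text, so the sketch below should be read as a plausibility check rather than the paper's definition.

```python
import math


def make_payment_schedule(max_payment, half_point=10):
    """Return (P, k) with P(y) = max_payment * (1 - exp(-k * y)).

    k is chosen so that P(half_point) is half of max_payment,
    which gives k = ln(2) / half_point. The exponential form is assumed.
    """
    k = math.log(2) / half_point

    def P(y):
        return max_payment * (1 - math.exp(-k * y))

    return P, k


def marginal_payment(P, y):
    """p(y) = P(y) - P(y - 1): payment for the y-th block alone."""
    return P(y) - P(y - 1)


P, k = make_payment_schedule(max_payment=10)
for y in (2, 3, 5, 10, 25):
    print(f"P({y}) = {P(y):.2f}")
print(f"p(3) = {marginal_payment(P, 3):.2f}")
```

Under this assumed form, each extra block pays less than the previous one, which is what lets the output choice reveal the reservation wage.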

Δ Difficulty (EASY vs. HARD)

• Time between clicks and output

[Figure: distributions of time between clicks (group means 6.04s and 10.93s) and of output (group means 20.08 and 19.83) for EASY vs. HARD]

Δ Difficulty (EASY vs. HARD)

• Reservation wage: $1.49/hr (easy) vs. $0.89/hr (hard)

– HARD tasks yield a lower estimated reservation wage than EASY tasks

[Figure: density of log(reservation wage), EASY vs. HARD]

Δ Price (LOW vs. HIGH)

• Max payment:

– LOW = $0.10

– HIGH = $0.30

• HIGH has a slightly larger number of blocks completed (mean 24.07 vs. 21.26 in LOW)

[Figure: counts of subjects by blocks completed, LOW vs. HIGH]

Δ Price (LOW vs. HIGH)

• Reservation wage: $0.71/hr (low) vs. $1.57/hr (high)

– Being in LOW lowers a worker’s reservation wage?

[Figure: density of log(reservation wage), LOW vs. HIGH]

Discussion

• Results:

– Low pay reduces output

– But the finding that being in LOW lowers a worker’s estimated reservation wage implies that output in LOW is not as low as it should be

• Why does a worker’s reservation wage for the same task differ?

– Systematic misinterpretation of the payment schedule (less likely)

• Early marginal payments bleed over and affect the perception of future marginal payments; this is less likely because the marginal payment is displayed up-front

– Increasing marginal cost? (less likely)

– Target earners trying to reach self-imposed earnings goals rather than responding to the currently offered wage (more likely)

Departure from the rational model

• Workers create earnings targets that influence their output decisions

• In the absence of income effects, past earnings are irrelevant to the decision they must make at the margin

– Without income effects, only the substitution effect remains: when wage π rises, the price of leisure becomes higher, and the individual will choose less leisure (i.e., more work will be done)

• A kind of sunk-cost fallacy: when wages are high, a target earner works less, whereas a rational worker works more
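The opposite responses of the two worker types can be illustrated numerically. This is a sketch under stated assumptions: a saturating payment schedule P(y) = Pmax * (1 - exp(-K*y)) with K = ln(2)/10, a hypothetical cost of 0.1 cents per block for the rational worker, and a hypothetical 8-cent earnings target. None of these specifics are given on the slide.

```python
import math

K = math.log(2) / 10  # assumed curvature: half of the maximum is reached at y = 10


def rational_output(max_payment, cost_per_block):
    """Blocks chosen by a worker who stops when marginal pay equals marginal cost.

    Solves P'(y) = max_payment * K * exp(-K * y) = cost_per_block for y, where
    cost_per_block stands for w * t (reservation wage times time per block).
    """
    return max(0.0, math.log(max_payment * K / cost_per_block) / K)


def target_output(max_payment, target):
    """Blocks needed for cumulative pay P(y) to reach a self-imposed target."""
    if target >= max_payment:
        return math.inf  # the target can never be reached under this schedule
    return math.ceil(-math.log(1 - target / max_payment) / K)


for label, max_payment in (("LOW", 10), ("HIGH", 30)):
    print(label,
          "| rational worker:", round(rational_output(max_payment, 0.1), 1),
          "| target earner:", target_output(max_payment, target=8))
```

Raising the maximum payment moves the rational worker's stopping point outward, while the target earner hits the same goal sooner and stops earlier.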

Departure from the rational model

• Rational workers: bimodal

• Target earners: workers try to earn the full amount possible and quit only when they realize this goal is unattainable

[Figure: earnings in the HIGH group; x-axis: floor of earnings in cents; y-axis: # subjects (198 subjects in total); annotation at 29 cents]

Summary

• Experiment A (EASY/HARD) confirms a simple rational model (estimated reservation wage: $1.49/hr for the easy task vs. $0.89/hr for the hard task)

• Experiment B (LOW/HIGH) shows irrational behavior: some workers work to targets

– Designers should consider this propensity when designing incentive schemes and give people natural targets that will increase output

– Yet they should also consider that such schemes might seem manipulative, could backfire, and are potentially unethical

Designing Incentives for Inexpert Human Raters

Aaron D. Shaw, John J. Horton, Daniel L. Chen

CSCW ’11

Inexpert Human Raters

• Compelling research questions often require the quantification of complex constructs such as trustworthiness, beauty, or aggression

• Researchers often need to look at primary source material and then classify it according to some coding scheme (also called content analysis)

• These qualitative coding tasks often require human judgment, but not expert knowledge

Inexpert Human Raters

• But these tasks are often tedious and time-consuming, and finding research assistants to perform them may be difficult or expensive

• M-Turk can be used for content analysis, but it is difficult to elicit and synthesize high-quality judgments from non-expert raters collaborating remotely

• Goal: compare the effects of various incentive schemes on the quality of judgments

Task

• Performing content analysis of “Kiva.org”

Task

• Identifying whether (1) a privacy policy and (2) “avatars” or other visual representations of user identities were present on the site (multiple-choice questions)

– For both of these questions, an “uncertain” answer choice was also available

• Questions (3) and (4) asked subjects to assess how frequently members of the site engaged in specific behaviors (ranking or rating (3) content and (4) other users)

– Using a five-point scale ranging from “Very frequently” to “Very rarely or never”

• Identifying whether the site has specific features related to (5) social networking and (6) revenue creation (YES/NO)

– Subjects could check boxes to select any combination of answer choices from a pre-defined list

Experimental Setup

• Gold standard: the tasks were given to research assistants (high inter-rater agreement)

• M-Turk task components:

– Pre-treatment question: Q#1 (privacy policy)

– Post-treatment questions: Q#2~#6

– Demographic questions:

• Age, gender, country of residence, education level, language skills,

employment status, household size, internet skills

• M-Turk:

– A worker can complete only a single task, which pays $0.30

– The experiment ran from July 2 to Sept. 23, 2009

– 2159 subjects in total (2055 completions + 104 dropouts)

Treatment Setup

• Control conditions:

– Control: workers were presented with pre-treatment, post-treatment, and demographic questions

– Demographic: workers were presented with pre-treatment and demographic questions

Treatment Setup

• Incentive framing with social and financial factors

• Social treatment conditions:

– Tournament scoring

– Cheap Talk – Surveillance (just warning)

– Cheap Talk – Normative (emphasizing its importance)

– Solidarity (hybrid)

– Humanization (thank you!)

– Trust

– Normative priming questions (attitude)

Treatment Setup

• Financial treatment conditions:

– Reward accuracy (10% bonus if accurate)

– Reward agreement (10% bonus if agreeing with

others)

– Punishment accuracy (10% punishment if wrong)

– Punishment agreement (10% punishment if

disagreeing with the majority)

– Promise of future work

– Bayesian truth serum (BTS): asking “what would others who complete the task answer to this question?” (bonus if agreeing)

– Betting on results
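The two agreement-based conditions can be sketched as payment rules. This is an illustration only: it assumes the 10% adjustment is taken from the $0.30 base payment and that "agreement" means matching the most common answer among other workers; the function and constant names are hypothetical.

```python
from collections import Counter

BASE_PAY_CENTS = 30      # the task paid $0.30
ADJUSTMENT_CENTS = 3     # assumed: 10% of the base payment


def majority_answer(other_answers):
    """Most common answer among the other workers (ties go to the first seen)."""
    return Counter(other_answers).most_common(1)[0][0]


def pay_cents(answer, other_answers, scheme):
    """Payment under 'reward_agreement' or 'punishment_agreement'."""
    agrees = answer == majority_answer(other_answers)
    if scheme == "reward_agreement":
        return BASE_PAY_CENTS + (ADJUSTMENT_CENTS if agrees else 0)
    if scheme == "punishment_agreement":
        return BASE_PAY_CENTS - (0 if agrees else ADJUSTMENT_CENTS)
    raise ValueError(f"unknown scheme: {scheme}")


others = ["yes", "yes", "no"]
for scheme in ("reward_agreement", "punishment_agreement"):
    for answer in ("yes", "no"):
        print(scheme, answer, pay_cents(answer, others, scheme))
```

The two rules differ only in framing: the gap between agreeing and disagreeing is the same 3 cents, presented as a gain in one case and a loss in the other.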

Results (Q#2-#6)

[Figure: correct answers by condition, with the number of workers per condition (group size ranges between 113 and 167 subjects)]

Discussion

• Why do BTS and Punishment disagreement perform better than the others?

• BTS:

– Confusion about how exactly they are evaluated

– Cognitive demand of thinking carefully about the responses of other subjects

• Punishment disagreement:

– Rejection (bad reputation?)

• Residence in India, web skills, and household size are strongly associated with performance

What's the Right Price? Pricing Tasks for Finishing on Time

Siamak Faridani, Bjorn Hartmann, Panagiotis G. Ipeirotis

HCOMP 2011

Survival Curve and Task Completion

• Survival curve for crowdsourcing tasks

http://www.ieor.berkeley.edu/~faridani/papers/csdm2011.pdf

Survival Curve and Task Completion

• It is possible to find a reward value based on the expected completion time
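A survival curve S(t) gives the share of tasks still uncompleted at time t. The sketch below uses the generic Kaplan-Meier estimator on hypothetical completion times; it is meant only to show how such a curve is built and is not necessarily the model used in the paper.

```python
from itertools import groupby


def kaplan_meier(durations, completed):
    """Kaplan-Meier estimate of S(t), the share of tasks still open at time t.

    durations: observed time for each task (e.g., hours since posting)
    completed: True if the task was finished at that time; False if it was
               still open when observation stopped (a censored observation)
    Returns a list of (time, survival) pairs, one per distinct completion time.
    """
    observations = sorted(zip(durations, completed))
    at_risk = len(observations)   # tasks still open just before the current time
    survival = 1.0
    curve = []
    for time, group in groupby(observations, key=lambda obs: obs[0]):
        group = list(group)
        finished = sum(1 for _, done in group if done)
        if finished:
            survival *= 1 - finished / at_risk
            curve.append((time, survival))
        at_risk -= len(group)     # completed and censored tasks both leave the risk set
    return curve


# Hypothetical observations: five tasks, one still open when observation stopped.
curve = kaplan_meier(durations=[1, 2, 2, 3, 4],
                     completed=[True, True, False, True, True])
for time, survival in curve:
    print(f"t = {time}h: {survival:.2f} of tasks still open")
```

Estimating one such curve per reward level would show how quickly tasks finish at each price, which is the kind of comparison needed to pick a reward for a given deadline.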