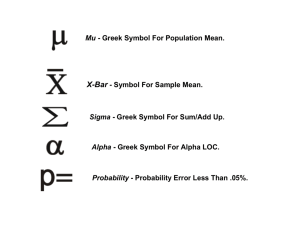


8.2 Testing a Proportion (Hypothesis Testing)

Objectives

- To understand the logic of a significance test for a proportion.
- To know the vocabulary of significance tests.
- To learn how to do a significance test for a proportion.
- To compute and interpret a P-value.
- To know the meanings of Type I and Type II errors and how to reduce their probability.
- To know the meaning of the power of a test and how to increase it.

Confidence intervals are one of the two most common types of statistical inference. They are used when your goal is to estimate a population parameter. The second common type of inference is the significance test, also called a hypothesis test. It has a different goal: to assess the evidence provided by sample data about some claim concerning a population.

First we will look at some general concepts of significance tests, and then we will look specifically at significance tests for a proportion.

Demo: "Fair and Unfair Dice": testing hypotheses empirically.

Logic of a Significance Test

A hypothesis is a claim or statement about one or more values of a population characteristic. E.g. p < 0.01, where p is the proportion of email messages that are undeliverable.

A significance test or hypothesis test is a method that uses sample data to decide between two competing hypotheses about a population characteristic.

The null hypothesis, denoted by H0, is a claim about a population characteristic that is initially assumed to be true (the status quo). The alternative hypothesis, denoted by Ha, is the competing claim.

As in the judicial system, the sample data (the evidence) is considered. If it strongly suggests that H0 is false, then H0 is rejected in favor of Ha; otherwise H0 is not rejected. Therefore the two possible conclusions of a significance test are: reject H0 or fail to reject H0.
The form of a null hypothesis is:

H0: population characteristic (p) = hypothesized value (p0)

The hypothesized value is a specific number (the status quo) determined by the problem context.

The alternative hypothesis will have one of the following three forms:

- Ha: population characteristic > hypothesized value
- Ha: population characteristic < hypothesized value
- Ha: population characteristic ≠ hypothesized value

Notes

- Because Ha expresses the effect we are hoping to find evidence for, it is often easier to begin by writing Ha, and then set up H0.
- A significance test is only capable of showing strong support for the alternative hypothesis. When the null hypothesis is not rejected, it does not mean we accept H0; it means only that there is a lack of strong evidence against it.

Large Sample Significance Tests for a Population Proportion

The large sample test procedure is based on the same properties of the sampling distribution of p̂ that were used previously to obtain a confidence interval for p:

1. $\mu_{\hat{p}} = p$
2. $\sigma_{\hat{p}} = \sqrt{\dfrac{p(1-p)}{n}}$, which equals $\sqrt{\dfrac{p_0(1-p_0)}{n}}$ when H0: p = p0 is true.
3. When n is large enough that both np and n(1 - p) are at least 10, the sampling distribution of p̂ is approximately normal.

The standardized variable (z-score)

$$z = \frac{\text{statistic} - \text{parameter}}{\text{standard deviation of statistic}} = \frac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}}$$

has approximately a standard normal distribution when n is large and H0 is true.

This z-score is called the test statistic. It tells you how many standard errors the sample proportion p̂ lies from the null hypothesized value p0, and it is used to decide whether or not to reject the null hypothesis.

The P-value (also sometimes called the observed significance level) is a measure of inconsistency between the hypothesized value for a population characteristic and the observed sample. It is the probability, assuming that H0 is true, of obtaining a test statistic value at least as inconsistent with H0 as what actually resulted.
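As a minimal sketch of the test statistic defined above, the following Python snippet computes p̂ and z for a one-proportion test. The function name `one_prop_z` and the sample numbers (60 successes in 100 trials, p0 = 0.5) are made up for illustration and do not come from the course material; the large-sample condition is checked with the hypothesized value p0.

```python
from math import sqrt

def one_prop_z(successes, n, p0):
    """Return (p_hat, z) for a test of H0: p = p0 (hypothetical helper)."""
    # Large-sample condition, evaluated at the hypothesized value p0.
    if n * p0 < 10 or n * (1 - p0) < 10:
        raise ValueError("need both n*p0 and n*(1 - p0) to be at least 10")
    p_hat = successes / n                 # sample proportion
    se = sqrt(p0 * (1 - p0) / n)          # standard deviation of p_hat under H0
    z = (p_hat - p0) / se                 # (statistic - parameter) / std. dev.
    return p_hat, z

# Illustrative data only: 60 successes in n = 100 trials, H0: p = 0.5.
p_hat, z = one_prop_z(successes=60, n=100, p0=0.5)
print(p_hat, round(z, 2))  # prints: 0.6 2.0
```

Here the sample proportion lies about two standard errors above the hypothesized value.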
Interpretation: if H0 were true and you repeatedly drew samples of the same size from the population, you would get a test statistic at least as inconsistent with H0 as the one actually observed in a proportion P of the samples, and a less extreme one in a proportion 1 - P of the samples.

Note: 1 - P is not the probability of the H0 hypothesis being true.

Find the P-Value when the Test Statistic is z

1. Upper-tailed test, Ha: p > hypothesized value. The P-value is the area under the standard normal curve to the right of the calculated z.
2. Lower-tailed test, Ha: p < hypothesized value. The P-value is the area under the standard normal curve to the left of the calculated z.
3. Two-tailed test, Ha: p ≠ hypothesized value. The P-value is twice the area in the tail beyond the calculated z, that is, twice the area to the right of |z|.

This P-value is compared to the chosen level of significance of the test, α:

- H0 should be rejected if P-value ≤ α.
- H0 should not be rejected if P-value > α.

The following sequence of steps is recommended when carrying out a hypothesis test (PHANTOMS):

1. Describe the population characteristic about which hypotheses are to be tested (parameter).
2. State H0 and Ha.
3. Check that any assumptions required for the test are met.

   Bernoulli trials (experiment)
   1. The trials are independent.
   2. Both np and n(1 - p) are at least 10, computed with the hypothesized value p0 (these calculations must be shown).

   Sampling without replacement
   1. The sample is random.
   2. The sample is less than 10% of the population.
   3. Both np and n(1 - p) are at least 10, computed with the hypothesized value p0 (these calculations must be shown).
4. Name the test: 1-proportion z-test.
5. Calculate the test statistic (z-score).
6. Use the test statistic to find the P-value.
7. Compare the chosen level of significance, α, with the P-value, make a decision (reject or cannot reject H0), and write a clear conclusion in English sentences. This conclusion should be stated in the context of the problem, and the level of significance should be included.

Example: P27 p.511

Errors in Hypothesis Testing

There are two types of errors that can occur in hypothesis testing. The only way to guarantee that neither type of error occurs is to base the decision on a census of the entire population. The risk of error is the price researchers pay for basing an inference on a sample.
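Before looking at the two error types in detail, the P-value rules and the decision step from the test procedure above can be sketched in Python. This is only an illustration under stated assumptions: the function names `p_value` and `decide`, the labels `"greater"`, `"less"` and `"two-sided"`, and the value z = 2.0 are hypothetical choices, not part of the course material. The standard normal areas come from `statistics.NormalDist` in the Python standard library.

```python
from statistics import NormalDist

def p_value(z, alternative):
    """P-value for a z test statistic (hypothetical helper).

    alternative: "greater" (Ha: p > p0), "less" (Ha: p < p0),
                 or "two-sided" (Ha: p != p0).
    """
    cdf = NormalDist().cdf            # area to the left under the standard normal curve
    if alternative == "greater":
        return 1 - cdf(z)             # area to the right of z
    if alternative == "less":
        return cdf(z)                 # area to the left of z
    if alternative == "two-sided":
        return 2 * (1 - cdf(abs(z)))  # twice the area beyond |z|
    raise ValueError("alternative must be 'greater', 'less' or 'two-sided'")

def decide(p, alpha):
    """Apply the rule: reject H0 when P-value <= alpha."""
    return "reject H0" if p <= alpha else "fail to reject H0"

z = 2.0  # illustrative test statistic
print(round(p_value(z, "greater"), 4))     # prints: 0.0228
print(round(p_value(z, "less"), 4))        # prints: 0.9772
print(round(p_value(z, "two-sided"), 4))   # prints: 0.0455
print(decide(p_value(z, "two-sided"), alpha=0.05))  # prints: reject H0
print(decide(p_value(z, "two-sided"), alpha=0.01))  # prints: fail to reject H0
```

The same data can lead to different decisions at different significance levels, which is why the conclusion should always state the level of significance used.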
We need to understand what the two types of error are and how to influence the chances of these errors.

| Your decision | H0 is actually true | H0 is actually false |
|---|---|---|
| Don't reject H0 (negative) | Correct decision | Type II error |
| Reject H0 (positive) | Type I error | Correct decision (power = 1 - β) |

Type I Error (false positive result)

A Type I error means we have concluded that there is a difference when none exists: H0 is rejected when it is actually true. In many practical applications this type of error is the more serious one, so care is usually taken to reduce the probability of a Type I error.

E.g. In a criminal trial a Type I error means an innocent person is convicted.

Probability (Type I error) = α = level of significance of the test

To decrease the probability of a Type I error, make α smaller. Changing the sample size has no effect on the probability of a Type I error.

Type II Error (false negative result)

A Type II error means we have failed to observe a difference when a difference exists: H0 is not rejected when it is actually false.

E.g. In a criminal trial a Type II error means a guilty person is not convicted.

E.g. In a clinical trial of a new drug a Type II error means no difference between the two drugs is concluded when in fact the new drug is better.

Probability (Type II error) = β

Using a smaller α results in a larger β. The exact probability of a Type II error is generally unknown.

Power of a Test

The power of a hypothesis test is the probability that it will correctly lead to a rejection of the null hypothesis. A test lacking statistical power could easily result in a costly study that produces no significant findings.

Power can be expressed in different ways, and one of these ways may "click" with you:

- Power is the probability of rejecting the null hypothesis when in fact it is false.
- Power is the probability of making a correct decision (to reject the null hypothesis) when the null hypothesis is false.
- Power is the probability that a test of significance will pick up an effect that is present.
- Power is the probability that a test of significance will detect a deviation from the null hypothesis, should such a deviation exist.

There are four things that affect the power of a test:

1. The significance level of the test, α. As α increases, so does the power of the test. This is because a larger α means a larger rejection region for the test and thus a greater probability of rejecting the null hypothesis. That translates to a more powerful test. The price of this increased power is that as α goes up, so does the probability of a Type I error should the null hypothesis in fact be true.
2. The sample size, n. As n increases, so does the power of the significance test. This is because a larger sample size narrows the distribution of the test statistic. The hypothesized distribution of the test statistic and the true distribution of the test statistic (should the null hypothesis in fact be false) become more distinct from one another as they become narrower, so it becomes easier to tell whether the observed statistic comes from one distribution or the other. The price paid for this increase in power is the higher cost in time and resources required for collecting more data. There is usually a "point of diminishing returns" up to which it is worth the cost of the data to gain more power, but beyond which the extra power is not worth the price.
3. The inherent variability in the measured response variable. As the variability increases, the power of the test of significance decreases.
4. The difference between the hypothesized value of a parameter and its true value. The bigger the difference, the greater the power of the test. Intuitively: the bigger the difference, the easier it is to detect.

Note: You only need to understand the concept of power and what features influence it; you do not need to know how to calculate it.

Graphical Representation of Power
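Although calculating power is not required, a small numerical sketch can make the factors listed above concrete. The Python snippet below approximates the power of an upper-tailed one-proportion z-test using the normal approximation. The function name `power_upper_tailed` and all numbers used are hypothetical, and the true proportion `p_true` is something you would not know in practice; it is supplied here only to explore "what if" scenarios. For a proportion, the variability is determined by p and n, so factor 3 is not varied separately.

```python
from math import sqrt
from statistics import NormalDist

def power_upper_tailed(p0, p_true, n, alpha):
    """Approximate power of the test H0: p = p0 vs Ha: p > p0 (hypothetical helper).

    Uses the normal approximation to the sampling distribution of p_hat.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)           # reject H0 when z >= z_crit
    # Smallest sample proportion that leads to rejecting H0.
    p_hat_crit = p0 + z_crit * sqrt(p0 * (1 - p0) / n)
    # Spread of p_hat when the true proportion is p_true.
    se_true = sqrt(p_true * (1 - p_true) / n)
    # Power = probability that p_hat lands in the rejection region.
    return 1 - std_normal.cdf((p_hat_crit - p_true) / se_true)

base = power_upper_tailed(p0=0.5, p_true=0.6, n=100, alpha=0.05)
print("baseline:          ", round(base, 3))
print("larger alpha:      ", round(power_upper_tailed(0.5, 0.6, 100, 0.10), 3))
print("larger n:          ", round(power_upper_tailed(0.5, 0.6, 400, 0.05), 3))
print("larger difference: ", round(power_upper_tailed(0.5, 0.7, 100, 0.05), 3))
```

Each of the last three lines changes one factor from the baseline, and in each case the computed power goes up. When `p_true` equals `p0`, the function returns α, which is the probability of a Type I error.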