Lecture 11

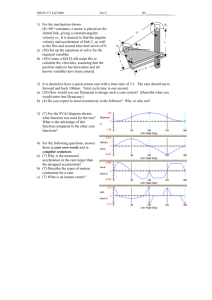

advertisement

Associative Memory • “Remembering” ? – Associating something with sensory cues • Cues in terms of text, picture or anything • Modeling the process of memorization • The minimum requirements of a content addressable memory Associative Memory • Able to store multiple independent patterns • Able to recall patterns with reasonable accuracy • Should be able to recall partial or noisy input patterns • Other expectations – Speed and biological similarities Associative Memory Modeling Memory • • • • Analogy with physical systems Systems that flow towards locally stable points Ball in a bowl Think in terms of “remembering” the bottom of bowl • Initial position is now our sensory cue Modeling Memory • Surface with several stable points -> storing several patterns • Depending on initial position (sensory cue) the ball will end up in one of the stable points (stored pattern) • Stable point closest to initial position is reached Modeling Memory • Two important points – Stores a set patterns and recalls the one closest to initial position – Minimum energy state is reached • Properties of network – Described by state vectors – Has a set of stable states – Network evolves to reach stable state closest to initial state resulting in decrease of energy notes from http://www.cs.ucla.edu/~rosen/ Hopfield Networks • A recurrent neural network which can function as a associative memory • A node is connected to all other nodes (except with itself) • Each node behaves as an input as well as output node • Network’s current state defined by outputs of nodes Hopfield Networks • Nodes are not self connected wii = 0 • Strength of connections are symmetric meaning wji = wij Image Source : http://www.comp.leeds.ac.uk/ai23 Classification and Search Engines • Classification engine receives streams of packets as its input. • It applies a set of application-specific sorting rules and policies continuously on the packets. • It ends up compiling a series of new parallel packet streams in queues of packets.ored. • For classification the NP should consult a memory bank, a lookup table or even a data base where the rules are stored. • Search engines are used for consultation of a lookup table or a database based on rules and policies for the correct classification. Search engines are mostly based on associative memory, which is also known as CAM What is CAM? • Content Addressable Memory is a special kind of memory! • Read operation in traditional memory: Input is address location of the content that we are interested in it. Output is the content of that address. • In CAM it is the reverse: Input is associated with something stored in the memory. Output is location where the associated content is stored. 00 1 0 1 X X 01 0 1 1 0 X 10 0 1 1 X X 11 1 0 0 1 1 0 1 1 0 X 0 1 Traditional Memory 00 1 0 1 X X 01 0 1 1 0 X 10 0 1 1 X X 11 1 0 0 1 1 01 0 1 1 0 1 Content Addressable Memory CAM for Routing Table Implementation • CAM can be used as a search engine. • We want to find matching contents in a database or Table. • Example Routing Table Simplified CAM Block Diagram The input to the system is the search word. The search word is broadcast on the search lines. Match line indicates if there were a match btw. the search and stored word. Encoder specifies the match location. If multiple matches, a priority encoder selects the first match. Hit signal specifies if there is no match. The length of the search word is long ranging from 36 to 144 bits. Table size ranges: a few hundred to 32K. Address space : 7 to 15 bits. Source: K. Pagiamtzis, A. Sheikholeslami, “Content-Addressable Memory (CAM) Circuits and Architectures: A Tutorial and Survey,” IEEE J. of Solid-state circuits. March 2006 CAM Memory Size • Largest available around 18 Mbit (single chip). • Rule of thumb: Largest CAM chip is about half the largest available SRAM chip. A typical CAM cell consists of two SRAM cells. • Exponential growth rate on the size Source: K. Pagiamtzis, A. Sheikholeslami, “Content-Addressable Memory (CAM) Circuits and Architectures: A Tutorial and Survey,” IEEE J. of Solid-state circuits. March 2006 CAM Basics • The search-data word is loaded into the search-data register. • All match-lines are pre-charged to high (temporary match state). • Search line drivers broadcast the search word onto the differential search lines. • Each CAM core compares its stored bit against the bit on the corresponding search-lines. • Match words that have at least one missing bit, discharge to ground. Source: K. Pagiamtzis, A. Sheikholeslami, “Content-Addressable Memory (CAM) Circuits and Architectures: A Tutorial and Survey,” IEEE J. of Solid-state circuits. March 2006 Type of CAMs • Binary CAM (BCAM) only stores 0s and 1s – Applications: MAC table consultation. Layer 2 security related VPN segregation. • Ternary CAM (TCAM) stores 0s, 1s and don’t cares. – Application: when we need wilds cards such as, layer 3 and 4 classification for QoS and CoS purposes. IP routing (longest prefix matching). • Available sizes: 1Mb, 2Mb, 4.7Mb, 9.4Mb, and 18.8Mb. • CAM entries are structured as multiples of 36 bits rather than 32 bits. CAM Advantages • They associate the input (comparand) with their memory contents in one clock cycle. • They are configurable in multiple formats of width and depth of search data that allows searches to be conducted in parallel. • CAM can be cascaded to increase the size of lookup tables that they can store. • We can add new entries into their table to learn what they don’t know before. • They are one of the appropriate solutions for higher speeds. CAM Disadvantages • They cost several hundred of dollars per CAM even in large quantities. • They occupy a relatively large footprint on a card. • They consume excessive power. • Generic system engineering problems: – Interface with network processor. – Simultaneous table update and looking up requests. CAM structure Output Port Control Mixable with 72 bits x 16384 144 bits x 8192 288 bits x 4096 576 bits x 2048 Flag Control • 72 bits 131072 CAM (72 bits x 16K x 8 structures) Priority Encoder • CAM control Empty Bit • Global mask registers Decoder • Control & status registers I/O Port Control • • The comparand bus is 72 bytes wide bidirectional. The result bus is output. Command bus enables instructions to be loaded to the CAM. It has 8 configurable banks of memory. The NPU issues a command to the CAM. CAM then performs exact match or uses wildcard characters to extract relevant information. There are two sets of mask registers inside the CAM. Pipeline execution control (command bus) • CAM structure Output Port Control I/O Port Control Control & status registers Global mask registers Flag Control Mixable with 72 bits x 16384 144 bits x 8192 288 bits x 4096 576 bits x 2048 Priority Encoder Decoder 72 bits 131072 CAM (72 bits x 16K x 8 structures) Empty Bit CAM control Pipeline execution control (command bus) There is global mask registers which can remove specific bits and a mask register that is present in each location of memory. The search result can be one output (highest priority) Burst of successive results. The output port is 24 bytes wide. Flag and control signals specify status of the banks of the memory. They also enable us to cascade multiple chips. CAM Features • CAM Cascading: – We can cascade up to 8 pieces without incurring performance penalty in search time (72 bits x 512K). – We can cascade up to 32 pieces with performance degradation (72 bits x 2M). • Terminology: – Initializing the CAM: writing the table into the memory. – Learning: updating specific table entries. – Writing search key to the CAM: search operation • Handling wider keys: – Most CAM support 72 bit keys. – They can support wider keys in native hardware. • Shorter keys: can be handled at the system level more efficiently. CAM Latency • • • • • Clock rate is between 66 to 133 MHz. The clock speed determines maximum search capacity. Factors affecting the search performance: – Key size – Table size For the system designer the total latency to retrieve data from the SRAM connected to the CAM is important. By using pipeline and multi-thread techniques for resource allocation we can ease the CAM speed requirements. Source: IDT Associative-Memory Networks Input: Pattern (often noisy/corrupted) Output: Corresponding pattern (complete / relatively noise-free) Process 1. Load input pattern onto core group of highly-interconnected neurons. 2. Run core neurons until they reach a steady state. 3. Read output off of the states of the core neurons. Inputs Input: (1 0 1 -1 -1) Outputs Output: (1 -1 1 -1 -1) Associative Network Types 1. Auto-associative: X = Y *Recognize noisy versions of a pattern 2. Hetero-associative Bidirectional: X <> Y BAM = Bidirectional Associative Memory *Iterative correction of input and output Associative Network Types (2) 3. Hetero-associative Input Correcting: X <> Y *Input clique is auto-associative => repairs input patterns 4. Hetero-associative Output Correcting: X <> Y *Output clique is auto-associative => repairs output patterns Hebb’s Rule Connection Weights ~ Correlations ``When one cell repeatedly assists in firing another, the axon of the first cell develops synaptic knobs (or enlarges them if they already exist) in contact with the soma of the second cell.” (Hebb, 1949) In an associative neural net, if we compare two pattern components (e.g. pixels) within many patterns and find that they are frequently in: a) the same state, then the arc weight between their NN nodes should be positive b) different states, then ” ” ” ” negative Matrix Memory: The weights must store the average correlations between all pattern components across all patterns. A net presented with a partial pattern can then use the correlations to recreate the entire pattern. Correlated Field Components • Each component is a small portion of the pattern field (e.g. a pixel). • In the associative neural network, each node represents one field component. • For every pair of components, their values are compared in each of several patterns. • Set weight on arc between the NN nodes for the 2 components ~ avg correlation. a a ?? ?? b b Avg Correlation wab a b Quantifying Hebb’s Rule Compare two nodes to calc a weight change that reflects the state correlation: Auto-Association: w jk i pk i pj Hetero-Association: w jk i pk o pj * When the two components are the same (different), increase (decrease) the weight i = input component o = output component Ideally, the weights will record the average correlations across all patterns: P Auto: w jk i pk i pj P Hetero: p 1 w jk i pk o pj p 1 Hebbian Principle: If all the input patterns are known prior to retrieval time, then init weights as: Auto: 1 P w jk i pk i pj P p 1 Hetero: 1 P w jk i pk o pj P p 1 Weights = Average Correlations Matrix Representation Let X = matrix of input patterns, where each ROW is a pattern. So xk,i = the ith bit of the kth pattern. Let Y = matrix of output patterns, where each ROW is a pattern. So yk,j = the jth bit of the kth pattern. Then, avg correlation between input bit i and output bit j across all patterns is: 1/P (x1,iy1,j + x2,iy2,j + … + xp,iyp,j) = wi,j To calculate all weights: Hetero Assoc: W = XTY Auto Assoc: W = XTX XT X P1 Dot product P2 Pp Out P1: y1,1.. y1,j……y1,n In Pattern 1: x1,1..x1,n In Pattern 2: x2,1..x2,n : In Pattern p: x1,1..x1,n Y X1,i X2,i .. Xp,i Out P2: y2,1.. y2,j……y2,n : Out P3: yp,1.. yp,j ……yp,n Auto-Associative Memory 1. Auto-Associative Patterns to Remember 1 2 1 2 3 4 3 4 Comp/Node value legend: dark (blue) with x => +1 dark (red) w/o x => -1 light (green) => 0 2. Distributed Storage of All Patterns: -1 1 2 1 3 4 • 1 node per pattern unit • Fully connected: clique • Weights = avg correlations across all patterns of the corresponding units 3. Retrieval 1 2 3 4 1 2 3 4 1 2 3 4 1 2 3 4 Hetero-Associative Memory 1. Hetero-Associative Patterns (Pairs) to Remember 1 1 a 2 a 2 b 3 b 3 2. Distributed Storage of All Patterns: -1 1 1 a 2 b 3 • 1 node per pattern unit for X & Y • Full inter-layer connection • Weights = avg correlations across all patterns of the corresponding units 3. Retrieval Hopfield Networks • • • • • Auto-Association Network Fully-connected (clique) with symmetric weights State of node = f(inputs) Weight values based on Hebbian principle Performance: Must iterate a bit to converge on a pattern, but generally much less computation than in back-propagation networks. Input Output (after many iterations) n Discrete node update rule: x pk (t 1) sgn( wkj x pj (t ) I pk ) j 1 Input value Hopfield Network Example 1. Patterns to Remember p1 p2 3. Build Network p3 1/3 1 2 1 1 2 2 1 2 [-] 1/3 3 4 3 4 3 4 -1/3 -1/3 [+] 1/3 4 3 2. Hebbian Weight Init: -1 Avg Correlations across 3 patterns p1 p2 p3 Avg W12 W13 1 1 1 -1 -1 -1 1/3 -1/3 1 2 W14 -1 1 1 1/3 3 4 W23 1 -1 1 1/3 W24 -1 1 -1 -1/3 W34 -1 -1 -1 -1 4. Enter Test Pattern 1/3 +1 1/3 -1/3 -1/3 1/3 0 -1 -1 Hopfield Network Example (2) 5. Synchronous Iteration (update all nodes at once) Inputs Node 1 2 3 4 From discrete output rule: sign(sum) 1 2 3 4 1 1/3 -1/3 1/3 0 0 0 0 0 0 0 0 -1/3 1/3 1 -1 Output 1 1 1 -1 Values from Input Layer 1/3 p1 1/3 = -1/3 -1/3 1/3 -1 Stable State 1 2 3 4 Using Matrices Goal: Set weights such that an input vector Vi, yields itself when multiplied by the weights, W. X = V1,V2..Vp, where p = # input vectors (i.e., patterns) So Y=X, and the Hebbian weight calculation is: W = XTY = XTX 1 1 -1 1 1 1 -1 1 1 1 X= 1 1 -1 1 X T= 1 -1 1 -1 1 1 -1 -1 1 -1 3 1 -1 1 XTX = 1 3 1 -1 -1 1 3 -3 1 -1 -3 3 Common index = pattern #, so this is correlation sum. w2,4 = w4,2 = xT2,1x1,4 + xT2,2x2,4 + xT2,3x3,4 Matrices (2) • The upper and lower triangles of the product matrix represents the 6 weights wi,j = wj,i • Scale the weights by dividing by p (i.e., averaging) . Picton (ANN book) subtracts p from each. Either method is fine, as long we apply the appropriate thresholds to the output values. • This produces the same weights as in the non-matrix description. • Testing with input = ( 1 0 0 -1) (1 0 0 -1) 3 1 -1 1 1 3 1 -1 -1 1 3 -3 = (2 2 2 -2) 1 -1 -3 3 Scaling* by p = 3 and using 0 as a threshold gives: (2/3 2/3 2/3 -2/3) => (1 1 1 -1) *For illustrative purposes, it’s easier to scale by p at the end instead of scaling the entire weight matrix, W, prior to testing. Hopfield Network Example (3) 4b. Enter Another Test Pattern 1/3 1 2 3 4 Spurious Outputs 1/3 • Input pattern is stable, but not one of the original patterns. -1/3 -1/3 1/3 -1 • Attractors in node-state space can be whole patterns, parts of patterns, or other combinations. 5b. Synchronous Iteration Inputs Node 1 2 3 4 1 2 3 4 1 1/3 -1/3 1/3 1/3 1 1/3 -1/3 0 0 0 0 0 0 0 0 Output 1 1 0 0 Hopfield Network Example (4) 4c. Enter Another Test Pattern 1/3 1 2 3 4 1/3 -1/3 -1/3 Asynchronous Updating is central to Hopfield’s (1982) original model. 1/3 -1 5c. Asynchronous Iteration (One randomly-chosen node at a time) Update 3 Update 4 1/3 1/3 -1/3 1/3 -1 1/3 1/3 1/3 -1/3 Update 2 1/3 -1/3 -1/3 1/3 -1 Stable & Spurious -1/3 -1/3 1/3 -1 Hopfield Network Example (5) 4d. Enter Another Test Pattern 1/3 1 2 3 4 1/3 -1/3 -1/3 1/3 -1 5d. Asynchronous Iteration Update 3 Update 4 1/3 1/3 -1/3 1/3 -1 1/3 -1/3 -1/3 1/3 -1 Stable Pattern p3 1/3 1/3 1/3 -1/3 Update 2 -1/3 -1/3 1/3 -1 Hopfield Network Example (6) 4e. Enter Same Test Pattern 1/3 1 2 3 4 1/3 -1/3 -1/3 1/3 -1 5e. Asynchronous Iteration (but in different order) Update 2 Update 3 or 4 (No change) 1/3 1/3 1/3 1/3 -1/3 -1/3 1/3 -1 -1/3 -1/3 1/3 -1 Stable & Spurious Associative Retrieval = Search p3 p1 p2 Back-propagation: • Search in space of weight vectors to minimize output error Associative Memory Retrieval: • Search in space of node values to minimize conflicts between a) node-value pairs and average correlations (weights), and b) node values and their initial values. • Input patterns are local (sometimes global) minima, but many spurious patterns are also minima. • High dependence upon initial pattern and update sequence (if asynchronous) Energy Function Basic Idea: Energy of the associative memory should be low when pairs of node values mirror the average correlations (i.e. weights) on the arcs that connect the node pair, and when current node values equal their initial values (from the test pattern). E a wkj x j xk b I k xk k j When pairs match correlations, wkjxjxk > 0 k When current values match input values, Ikxk > 0 Gradient Descent A little math shows that asynchronous updates using the discrete rule: n x pk (t 1) sgn( wkj x pj (t ) I pk ) j 1 yield a gradient descent search along the energy landscape for the E defined above. Storage Capacity of Hopfield Networks Capacity = Relationship between # patterns that can be stored & retrieved without error to the size of the network. Capacity = # patterns / # nodes or # patterns / # weights • If we use the following definition of 100% correct retrieval: When any of the stored patterns is entered completely (no noise), then that same pattern is returned by the network; i.e. The pattern is a stable attractor. • A detailed proof shows that a Hopfield network of N nodes can achieve 100% correct retrieval on P patterns if: P < N/(4*ln(N)) In general, as more patterns are added to a network, the avg correlations will be less likely to match the correlations in any particular pattern. Hence, the likelihood of retrieval error will increase. => The key to perfect recall is selective ignorance!! N Max P 10 1 100 5 1000 36 10000 271 1011 109