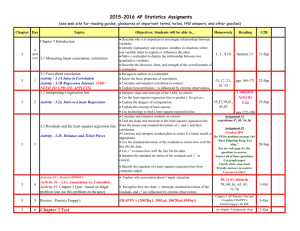

Chapter 13

Chapter 15
Describing Relationships: Regression, Prediction, and Causation

Linear Regression
• Objective: To quantify the linear relationship between an explanatory variable and a response variable. We can then predict the average response for all subjects with a given value of the explanatory variable.
• Regression equation: $y = a + bx$
– x is the value of the explanatory variable
– y is the average value of the response variable
– note that a and b are just the intercept and slope of a straight line
– note that r and b are not the same thing, but their signs will agree

Thought Question 1
How would you draw a line through the points? How do you determine which line ‘fits best’?
[Scatterplot; both axes labeled "Axis Title"]

Linear Equations
[Figure labeled "High School Teacher": the line Y = mX + b, where m = slope = (change in Y) / (change in X) and b = Y-intercept]

The Linear Model
• Remember from Algebra that a straight line can be written as: $y = mx + b$
• In Statistics we use a slightly different notation: $\hat{y} = b_0 + b_1 x$
• We write ŷ to emphasize that the points that satisfy this equation are just our predicted values, not the actual data values.

Fat Versus Protein: An Example
• The following is a scatterplot of total fat versus protein for 30 items on the Burger King menu:

Residuals
• The model won’t be perfect, regardless of the line we draw.
• Some points will be above the line and some will be below.
• The estimate made from a model is the predicted value (denoted as ŷ).

Residuals (cont.)
• The difference between the observed value and its associated predicted value is called the residual.
• To find the residuals, we always subtract the predicted value from the observed one: $\text{residual} = \text{observed} - \text{predicted} = y - \hat{y}$

Residuals (cont.)
• A negative residual means the predicted value is too big (an overestimate).
• A positive residual means the predicted value is too small (an underestimate).
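The sign convention for residuals (observed minus predicted) can be checked with a few lines of Python. This is only a sketch: the three data points and the line ŷ = 1.0 + 2.0x are hypothetical values chosen for illustration, not data from the chapter, and the function name `residuals` is likewise made up.

```python
# Hypothetical line and data, used only to illustrate the sign convention.
def residuals(xs, ys, b0, b1):
    """Return observed minus predicted for each point, with y-hat = b0 + b1 * x."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]


xs = [1.0, 2.0, 3.0]
ys = [3.5, 4.0, 7.5]
res = residuals(xs, ys, b0=1.0, b1=2.0)

for x, y, e in zip(xs, ys, res):
    if e > 0:
        label = "prediction too small (underestimate)"
    elif e < 0:
        label = "prediction too big (overestimate)"
    else:
        label = "prediction exact"
    print(f"x={x}: observed={y}, residual={e:+.1f}, {label}")
```

A point above the line gets a positive residual and a point below the line gets a negative one, matching the bullets above.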
“Best Fit” Means Least Squares
• Some residuals are positive, others are negative, and, on average, they cancel each other out.
• So, we can’t assess how well the line fits by adding up all the residuals.
• Similar to what we did with deviations, we square the residuals and add the squares.
• The smaller the sum, the better the fit.
• The line of best fit is the line for which the sum of the squared residuals is smallest.

Least Squares
• Used to determine the “best” line
• We want the line to be as close as possible to the data points in the vertical (y) direction (since that is what we are trying to predict)
• Least Squares: use the line that minimizes the sum of the squares of the vertical distances of the data points from the line

The Linear Model (cont.)
• We write $b_1$ and $b_0$ for the slope and intercept of the line. The b’s are called the coefficients of the linear model.
• The coefficient $b_1$ is the slope, which tells us how rapidly ŷ changes with respect to x. The coefficient $b_0$ is the intercept, which tells where the line hits (intercepts) the y-axis.

The Least Squares Line
• In our model, we have a slope ($b_1$):
– The slope is built from the correlation and the standard deviations: $b_1 = r \frac{s_y}{s_x}$
– Our slope is always in units of y per unit of x.
– The slope has the same sign as the correlation coefficient.

The Least Squares Line (cont.)
• In our model, we also have an intercept ($b_0$).
– The intercept is built from the means and the slope: $b_0 = \bar{y} - b_1 \bar{x}$
– Our intercept is always in units of y.

Example
Fill in the missing information in the table below:

Interpretation of the Slope and Intercept
• The slope indicates the amount by which ŷ changes when x changes by one unit.
• The intercept is the value of ŷ when x = 0. It is not always meaningful.

Example
The regression line for the Burger King data is $\widehat{\text{fat}} = 6.8 + 0.97 \times \text{protein}$. Interpret the slope and the intercept.
Slope: For every one-gram increase in protein, the predicted fat content increases by 0.97 g.
Intercept: A BK item that has 0 g of protein is predicted to contain 6.8 g of fat.

Thought Question 2
From a long-term study on several families, researchers constructed a scatterplot of the cholesterol level of a child at age 50 versus the cholesterol level of the father at age 50. You know the cholesterol level of your best friend’s father at age 50. How could you use this scatterplot to predict what your best friend’s cholesterol level will be at age 50?

Predictions
In predicting a value of y based on some given value of x ...
1. If there is not a linear correlation, the best predicted y-value is $\bar{y}$, the mean of the observed y-values.
2. If there is a linear correlation, the best predicted y-value is found by substituting the x-value into the regression equation.

Fat Versus Protein: An Example
The regression line for the Burger King data fits the data well:
– The equation is $\widehat{\text{fat}} = 6.8 + 0.97 \times \text{protein}$
– The predicted fat content for a BK Broiler chicken sandwich that contains 30 g of protein is 6.8 + 0.97(30) = 35.9 grams of fat.

Prediction via Regression Line
Husband and Wife: Ages
(Data: Hand, et al., A Handbook of Small Data Sets, London: Chapman and Hall)
The regression equation is $\hat{y} = 3.6 + 0.97x$
– ŷ is the average age of all husbands who have wives of age x
For all women aged 30, we predict the average husband age to be 32.7 years: 3.6 + (0.97)(30) = 32.7 years
Suppose we know that an individual wife’s age is 30. What would we predict her husband’s age to be?

The Least Squares Line (cont.)
• Since regression and correlation are closely related, we need to check the same conditions for regressions as we did for correlations:
– Quantitative Variables Condition
– Straight Enough Condition
– Outlier Condition

Guidelines for Using The Regression Equation
1. If there is no linear correlation, don’t use the regression equation to make predictions.
2. When using the regression equation for predictions, stay within the scope of the available sample data.
3. A regression equation based on old data is not necessarily valid now.
4. Don’t make predictions about a population that is different from the population from which the sample data were drawn.

Definitions
Marginal Change – refers to the slope; the amount the response variable changes when the explanatory variable changes by one unit.
Outlier – A point lying far away from the other data points.
Influential Point – An outlier that has the potential to change the regression line.

Residuals Revisited
• Residuals help us to see whether the model makes sense.
• When a regression model is appropriate, nothing interesting should be left behind.
• After we fit a regression model, we usually plot the residuals in the hope of finding…nothing.

Residual Plot Analysis
If a residual plot does not reveal any pattern, the regression equation is a good representation of the association between the two variables.
If a residual plot reveals some systematic pattern, the regression equation is not a good representation of the association between the two variables.

Residuals Revisited (cont.)
• The residuals for the BK menu regression look appropriately boring:
[Residual plot]

Coefficient of Determination ($R^2$)
• Measures usefulness of regression prediction
• $R^2$ (or $r^2$, the square of the correlation): measures the percentage of the variation in the values of the response variable (y) that is explained by the regression line
– r = 1: $R^2$ = 1: regression line explains all (100%) of the variation in y
– r = .7: $R^2$ = .49: regression line explains almost half (50%) of the variation in y

$R^2$ (cont.)
• Along with the slope and intercept for a regression, you should always report $R^2$ so that readers can judge for themselves how successful the regression is at fitting the data.
• Statistics is about variation, and $R^2$ measures the success of the regression model in terms of the fraction of the variation of y accounted for by the regression.

A Caution: Beware of Extrapolation
• Sarah’s height was plotted against her age
• Can you predict her height at age 42 months?
• Can you predict her height at age 30 years (360 months)?
[Scatterplot: height (cm) versus age (months)]

A Caution: Beware of Extrapolation
• Regression line: $\hat{y} = 71.95 + 0.383x$
• height at age 42 months? ŷ = 88 cm.
• height at age 30 years? ŷ = 209.8 cm.
– She is predicted to be 6' 10.5" at age 30.
[Plot: height (cm) versus age (months), with the regression line extended out to 390 months]

Correlation Does Not Imply Causation
Even very strong correlations may not correspond to a real causal relationship.

Evidence of Causation
• A properly conducted experiment establishes the connection
• Other considerations:
– A reasonable explanation for a cause and effect exists
– The connection happens in repeated trials
– The connection happens under varying conditions
– Potential confounding factors are ruled out
– Alleged cause precedes the effect in time

Evidence of Causation
• An observed relationship can be used for prediction without worrying about causation as long as the patterns found in past data continue to hold true.
• We must make sure that the prediction makes sense.
• We must be very careful of extreme extrapolation.
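The extrapolation caution for Sarah’s height can be reproduced numerically. The sketch below uses the coefficients quoted on the slide (71.95 and 0.383). The age range of 30 to 65 months is an assumption read off the axis of the first plot, since the exact range of the data is not stated, and the function names are made up for this illustration.

```python
# Coefficients quoted on the slide: y-hat = 71.95 + 0.383 * age (months).
B0, B1 = 71.95, 0.383

# ASSUMPTION: the data cover roughly 30 to 65 months, read off the axis of
# the first plot; the exact range of Sarah's data is not given.
AGE_RANGE_MONTHS = (30, 65)


def predict_height_cm(age_months):
    """Predicted height in cm from the regression line."""
    return B0 + B1 * age_months


def is_extrapolation(age_months, age_range=AGE_RANGE_MONTHS):
    """True when the age lies outside the range the line was fitted on."""
    low, high = age_range
    return not (low <= age_months <= high)


for age in (42, 360):
    height = predict_height_cm(age)
    note = "extrapolation" if is_extrapolation(age) else "within the data"
    print(f"age {age} months: predicted height {height:.1f} cm ({note})")
```

The arithmetic is equally valid at 42 and at 360 months; only the range check signals that the second prediction should not be trusted.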
Reasons Two Variables May Be Related (Correlated)
• Explanatory variable causes change in response variable
• Response variable causes change in explanatory variable
• Explanatory variable may be a cause, but is not the sole cause of changes in the response variable
• Confounding variables may exist
• Both variables may result from a common cause
– such as both variables changing over time
• The correlation may be merely a coincidence

Response causes Explanatory
• Explanatory: Hotel advertising dollars
• Response: Occupancy rate
• Positive correlation?
– more advertising leads to increased occupancy rate?
Actual correlation is negative: lower occupancy leads to more advertising

Explanatory is not Sole Contributor
• Explanatory: Consumption of barbecued foods
• Response: Incidence of stomach cancer
Barbecued foods are known to contain carcinogens, but other lifestyle choices may also contribute.

Common Response (both variables change due to common cause)
• Explanatory: Divorce among men
• Response: Percent abusing alcohol
Both may result from an unhappy marriage.

Both Variables are Changing Over Time
• Both divorces and suicides have increased dramatically since 1900.
• Are divorces causing suicides?
• Are suicides causing divorces?
• The population has increased dramatically since 1900 (causing both to increase).
Better to investigate: Has the rate of divorce or the rate of suicide changed over time?

The Relationship May Be Just a Coincidence
We will see some strong correlations (or apparent associations) just by chance, even when the variables are not related in the population.

Coincidence (?)
Vaccines and Brain Damage
• A required whooping cough vaccine was blamed for seizures that caused brain damage
– led to reduced production of vaccine (due to lawsuits)
• Study of 38,000 children found no evidence for the accusations (reported in New York Times)
– “people confused association with cause-and-effect”
– “virtually every kid received the vaccine…it was inevitable that, by chance, brain damage caused by other factors would occasionally occur in a recently vaccinated child”

Key Concepts
• Least Squares Regression Equation
• $R^2$
• Correlation does not imply causation
• Confirming causation
• Reasons variables may be correlated
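As a recap of the first two key concepts, the least squares slope and intercept ($b_1 = r \frac{s_y}{s_x}$, $b_0 = \bar{y} - b_1 \bar{x}$) and $R^2$ can be computed together. This is a minimal sketch using only the Python standard library. The five data points are hypothetical and are not the Burger King or husband-and-wife data, and the function name `least_squares` is made up for this illustration.

```python
from statistics import mean, stdev


def least_squares(xs, ys):
    """Return (b0, b1, r) for the least squares line y-hat = b0 + b1 * x."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    s_x, s_y = stdev(xs), stdev(ys)  # sample standard deviations
    # Correlation: sum of products of deviations over (n - 1) * s_x * s_y.
    r = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ((n - 1) * s_x * s_y)
    b1 = r * s_y / s_x        # slope, in units of y per unit of x
    b0 = y_bar - b1 * x_bar   # intercept, in units of y
    return b0, b1, r


# Hypothetical data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

b0, b1, r = least_squares(xs, ys)
resid = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

# R^2 as the fraction of the variation in y explained by the line.
sse = sum(e ** 2 for e in resid)
sst = sum((y - mean(ys)) ** 2 for y in ys)
r_squared = 1 - sse / sst

print(f"y-hat = {b0:.2f} + {b1:.2f} x")
print(f"r = {r:.3f}, r^2 = {r ** 2:.3f}, R^2 from residuals = {r_squared:.3f}")
print(f"sum of residuals = {sum(resid):.6f}")
```

The two routes to $R^2$ (squaring r, or comparing the squared residuals to the total variation in y) give the same number, and the residuals of the least squares line sum to zero up to rounding, which is why the raw sum of residuals cannot be used to judge fit.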