Experimental Design for Linguists

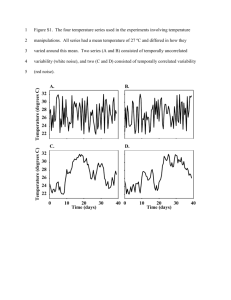

Charles Clifton, Jr.
University of Massachusetts Amherst
Slides available at http://people.umass.edu/cec/teaching.html

Goals of Course
► Why should linguists do experiments?
► How should linguists do experiments? Part 1: General principles of experimental design
► How should linguists do experiments? Part 2: Specific techniques for (psycho)linguistic experiments
Schütze, C. (1996). The empirical basis of linguistics. Chicago: University of Chicago Press.
Cowart, W. (1997). Experimental syntax: Applying objective methods to sentence judgments. Thousand Oaks, CA: Sage Publications Inc.
Myers, J. L., & Well, A. D. (in preparation). Research design and statistical analysis (3rd ed.). Mahwah, NJ: Erlbaum.

1. Acceptability judgments
► Check theorists’ intuitions about acceptability of sentences
  Acceptability, grammaticality, naturalness, comprehensibility, felicity, appropriateness…
► Aren’t theorists’ intuitions solid?

Example of acceptability judgment: Cowart, 1997
► Subject extraction: I wonder who you think (that) likes John.
► Object extraction: I wonder who you think (that) John likes.
[Figure: Mean judged acceptability (z-score), No-That vs. That, for Subject Extraction and Object Extraction]

Stability of ratings (Cowart, 1997)

2. Sometimes linguists are wrong…
► Superiority effects
  I’d like to know who hid it where.
  *I’d like to know where who hid it.
► Ameliorated by a third wh-phrase?
  ?I’d like to know where who hid it when.
  …maybe.

Paired-comparison preference judgments
a. I’d like to know who hid it where.
b. (*)I’d like to know where who hid it.
c. (*)I’d like to know where who hid it when.
d. I’d like to know who hid it where when.
a-b (86% vs. 14%): basic superiority violation
b-c (76% vs. 24%): head-on comparison; the extra wh-phrase “when” hurts, doesn’t help
c-d (49% vs. 51%): the “ameliorated” superiority violation, c, seems good when compared to its non-superiority-violation counterpart
Clifton, C. Jr., Fanselow, G., & Frazier, L. (2006). Amnestying superiority violations: Processing multiple questions. Linguistic Inquiry, 37, 51-68.

Another instance…
Question: is the antecedent of an ellipsis a syntactic or a semantic object? Why is (a) good and (b) bad?
(a) The problem was to have been looked into, but obviously nobody did.
(b) #The problem was looked into by John, and Bob did too.
Andrew Kehler’s suggestion: semantic objects for cause-effect discourse relations, syntactic objects for resemblance relations. Corpus data bear his suggestion out.

But an experimental approach…
Kim looked into the problem even though Lee did. (causal, syntactic parallel)
Kim looked into the problem just like Lee did. (resemblance)
The problem was looked into by Kim even though Lee did. (causal, nonparallel)
The problem was looked into by Kim just like Lee did. (resemblance)
[Figure: Mean Acceptability Rating (5 = good), Causal vs. Resemblance, for Parallel and NonParallel antecedents]
Frazier, L., & Clifton, C. J. (2006). Ellipsis and discourse coherence. Linguistics and Philosophy, 29, 315-346.

Context effects
► Linguists: think of minimal pairs
► The contrast between a pair may affect judgments
► Hirotani: Production of Japanese sentences
  The experimental context in which sentences are produced affects their prosody

Hirotani experiment
a. Embedded wh-question (ka associated to na’ni-o) (# = Major phrase boundary)
  Mi’nako-san-wa  Ya’tabe-kun-ga  na’ni-o   moyasita’ka (#)  gumon-sita’-nokai?
  Minako-Ms.-TOP  Yatabe-Mr.-NOM  what-ACC  burned-Q         stupid question-did-Q (-wh)
  ‘Did Minako ask stupidly what Yatabe burned?’ (‘Yes, it seems (she) asked such a question.’)
b. Matrix wh-question (ndai associated to na’ni-o)
  Mi’nako-san-wa  Ya’tabe-kun-ga  na’ni-o   moyasita’ka (#)  gumon-sita’-ndai?
  Minako-Ms.-TOP  Yatabe-Mr.-NOM  what-ACC  burned-Q         stupid question-did-Q (+wh)
  ‘What did Minako ask stupidly whether Yatabe burned?’ (‘The letters (he) received from (his) ex-girlfriend.’)

Hirotani results
Percentage of insertion of MaP before phrase with question particle

| | Initial Block (pure) | Final Block (pair contrast) |
|---|---|---|
| Embedded question | 100% | 100% |
| Matrix question | 57% | 15% |

Hirotani, Mako. (submitted). Prosodic phrasing of wh-questions in Japanese.

3. Unacceptable grammaticality
► Old multiple self-embedding sentence experiments
  Miller & Isard 1964: sentence recall, right-branching vs. self-embedded (1-4)
  ► She liked the man that visited the jeweler that made the ring that won the prize that was given at the fair.
  ► The prize that the ring that the jeweler that the man that she liked visited made won was given at the fair.
  ► Median trial of first perfect recall: 2.25 vs. never
  Stolz 1967, clausal paraphrases: subjects never understood the self-embedded sentences anyway
Miller, G. A., & Isard, S. (1964). Free recall of self-embedded English sentences. Information and Control, 4, 292-303.
Stolz, W. (1967). A study of the ability to decode grammatically novel sentences. Journal of Verbal Learning and Verbal Behavior, 6, 867-873.

3’. Acceptable ungrammaticality
Speeded acceptability judgment and acceptability rating

| Condition | Sentence | %OK | Rating |
|---|---|---|---|
| A. OK | None of the astronomers saw the comet, but John did. | 83% | 4.36 |
| B. Embedded VP | Seeing the comet was nearly impossible, but John did. | 66% | 3.71 |
| C. VP w/ trace | The comet was nearly impossible to see, / but John did. | 44% | 3.27 |
| D. Neg adj | The comet was nearly unseeable, / but John did. | 17% | 2.21 |

Arregui, A., Clifton, C. J., Frazier, L., & Moulton, K. (2006). Processing elided verb phrases with flawed antecedents: The recycling hypothesis. Journal of Memory and Language, 55, 232-246.

4. Provide additional evidence about linguistic structure
► A direct experimental reflex of structure would be nice
  But we don’t have one
► Are traces real?
  Filled gap effect: reading slowed at us in
    My brother wanted to know who Ruth will bring (t) us home to at Christmas.
  Compared to
    My brother wanted to know if Ruth will bring us home to at Christmas.
Stowe, L. (1986). Parsing wh-constructions: Evidence for on-line gap location. Language and Cognitive Processes, 1, 227-246.

Are traces real, cont.
► Pickering and Barry: “no.”
► Possible evidence
  That’s the pistol with which the heartless killer shot the hapless man yesterday afternoon t.
  That’s the garage with which the heartless killer shot the hapless man yesterday afternoon t.
  Reading disrupted at shot in the second example, far before the trace position
► But who’s to say that the parser has to wait to project the trace?
Pickering, M., & Barry, G. (1991). Sentence processing without empty categories. Language and Cognitive Processes, 6, 229-259.
Traxler, M. J., & Pickering, M. J. (1996). Plausibility and the processing of unbounded dependencies: An eye-tracking study. Journal of Memory and Language, 35, 454-475.

5. Is grammatical knowledge used?
► Serious question early on
  “psychological reality” experiments
► Direct experimental attack did not succeed
  Derivational theory of complexity
► Indirect experimental attack has succeeded
  Build experimentally-based theory of processing

6. Test theories of how grammatical knowledge is used
► Moving beyond modularity debate – more articulated questions about real-time use of grammar
► Phillips: parasitic gaps, self-paced reading
  The superintendent learned which schools/students the plan to expand _ … overburdened _.
  (slowed at expand after students – plausibility effect)
  The superintendent learned which schools/students the plan that expanded _ … overburdened _.
  (no differential slowing at expand – no plausibility effect)
Phillips, C. (2006). The real-time status of island phenomena. Language, 82, 795-823.

II: How to do experiments.
Part 1, General design principles
► Dictum 1: Formulate your question clearly
► Dictum 2: Keep everything constant that you don’t want to vary
► Dictum 3: Know how to deal with unavoidable extraneous variability
► Dictum 4: Have enough power in your experiment
► Dictum 5: Pay attention to your data, not just your statistical tests

Dictum 1: Formulate your question clearly
► Independent variable: variation controlled by the experimenter, not by what the subject does
► Dependent variable: variation observed in subject’s behavior, perhaps dependent on IV
► Operationalization of variables

Formulate your question
► Question: Do you identify a focused word faster than a non-focused word?
  Must clarify: Syntactic focus? Prosodic focus? Semantic focus?
  Must operationalize
  ► Syntactic focus – Clefting? Fronting? Other device?
  ► Prosodic focus – Natural speech? Manipulated speech? Synthetic speech? Target word or context?

Formulate your question
► Question: does discourse context guide or filter parsing decisions?
  Clarify question: does discourse satisfy reference? establish plausibility? set up pragmatic implications? create syntactic structure biases?
  Operationalize IV: Lots of choices here
  ► But also have to worry about dependent variable…

Choose appropriate task, DV
► Question about focus: need measure of speed of word identification
  Conventional possibilities: lexical decision, naming, phoneme detection, reading time
► Question about “guide vs. filter”: probably need explicit theory of your task
  Tanenhaus: linking hypothesis
  E.g. eye movements in reading: tempting to think that “guide” implicates “early measures,” “filter” implicates “late measures.”
  ► But what’s early, what’s late? Need model of eye movement control in parsing.

Subdictum A: Never leave your subjects to their own devices
► It may not matter a lot
  Cowart example: 5-point acceptability rating
  ► A. “…base your responses solely on your gut reaction”
  ► B. “…would you expect the professor to accept this sentence [for a term paper in an advanced English course]?”
► But sometimes it does matter…
Cowart 1997

Dictum 2: Try to keep everything constant except what you want to vary
► Try to hold extraneous variables constant through norms, pretests, corpora…
► When you can’t hold them constant, make sure they are not associated (confounded) with your IV

An example: Staub, in press
Eyetracking: does the reader honor intransitivity?
Compare unaccusative (a), unergative (b), and optionally transitive (c)
a. When the dog arrived the vet₁ and his new assistant took off the muzzle₂.
b. When the dog struggled the vet₁ and his new assistant took off the muzzle₂.
c. When the dog scratched the vet₁ and his new assistant took off the muzzle₂.
Critical regions: held constant (the vet…; took off the muzzle).
Manipulated variable (verb): conditions equated on average length and average word frequency of occurrence.
Better: match on additional factors (number of stressed syllables, concreteness, plausibility as intransitive, …)
Better: don’t just have overall match, but match the items in each triple.
Staub, A. (in press). The parser doesn't ignore intransitivity, after all. Journal of Experimental Psychology: Learning, Memory and Cognition.

Another example: NP vs S-comp bias
Kennison (2001), eyetracking during reading of sentences like:
a. The athlete admitted/revealed (that) his problem worried his parents…
b. The athlete admitted/revealed his problem because his parents worried…
Conflicting results from previous research (Ferreira & Henderson, 1990; Trueswell, Tanenhaus, & Kello, 1993): does a bias toward use as S-complement (admit) reduce the disruption at the disambiguating word worried?
Problems in previous research: plausibility of direct object analysis not controlled (e.g., Trueswell et al., ambiguous NP (his problem) rated as implausible as direct object of S-biased verb)
Kennison: normed material, equated plausibility of subject-verb-object fragment for NP- and S-comp biased verbs; found reading disrupted equally at disambiguating verb worried for both types of verbs.
Kennison, S. M. (2001). Limitations on the use of verb information during sentence comprehension. Psychonomic Bulletin & Review, 8, 132-137.

What happens when there is unavoidable variation?
► Subdictum B: When in doubt, randomize
  Random assignment of subjects to conditions
  Questionnaire: order of presentation of items?
  ► Single randomization: problems
  ► Different randomization for each subject
  ► Constrained randomizations
► Equate confounds by balancing and counterbalancing
  Alternative to random assignment of subject to conditions: match squads of subjects

Counterbalancing of materials
► Counterbalancing
  Ensure that each item is tested equally often in each condition.
  Ensure that each subject receives an equal number of items in each condition.
► Why is it necessary?
  Since items and subjects may differ in ways that affect your DV, you can’t have some items (or subjects) contribute more to one level of your IV than another level.

Sometimes you don’t have to counterbalance
► If you can test each subject on each item in each condition, life is sweet
► E.g., Ganong effect (identification of consonant in context)
  Vary VOT in 8 5-ms steps
  ► /dais/ - /tais/
  ► /daip/ - /taip/
  Classify initial segment as /d/ or /t/
  ► Present each of the 80 items to each subject 10 times
  ► Ganong effect: biased toward /t/ in “type,” /d/ in “dice”
Connine, C. M., & Clifton, C., Jr. (1987). Interactive use of information in speech perception. Journal of Experimental Psychology: Human Perception and Performance, 13, 291-299.
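The randomization and counterbalancing rules above (a different random order for each subject; each item tested equally often in each condition; each subject seeing an equal number of items per condition) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `counterbalanced_versions` and `presentation_order` are hypothetical, conditions are numbered from 0, and simple rotation of items through conditions is an assumed scheme, not something prescribed by these slides.

```python
import random


def counterbalanced_versions(n_items, n_conditions):
    """Rotate items through conditions (hypothetical helper).

    Returns one list per questionnaire version; entry i is the condition
    (numbered from 0) in which item i appears in that version.
    """
    if n_items % n_conditions != 0:
        raise ValueError("number of items must be a multiple of the number of conditions")
    return [
        [(item + version) % n_conditions for item in range(n_items)]
        for version in range(n_conditions)
    ]


def presentation_order(n_items, subject_id):
    """Give each subject a different but reproducible random item order."""
    order = list(range(n_items))
    random.Random(subject_id).shuffle(order)  # seeded by subject, so repeatable
    return order


versions = counterbalanced_versions(n_items=8, n_conditions=4)
for number, assignment in enumerate(versions, start=1):
    print("version", number, assignment)
print("order for subject 1:", presentation_order(8, subject_id=1))
```

Across the versions, every item appears once in every condition, and within a version every condition gets the same number of items. Assigning equal numbers of subjects to each version then satisfies both counterbalancing requirements.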
If you have to counterbalance…
► Simple example
  Questionnaire, 2 conditions, N items
  Need 2 versions, each with N items, N/2 in condition 1, remaining half in condition 2
  ► Versions 1 and 2, opposite assignment of items to conditions
► More general version
  M conditions, need some multiple of M items, and need M different versions
  ► Embarrassing if you have 15 items, 4 conditions…
  ► That means that some subjects contributed more to some conditions than others did; bad, if there are true differences among subjects

Counterbalancing things besides items
► Order of testing
  Don’t test all Ss in one condition, then the next condition…
  At least, cycle through one condition before testing a second subject
  Fancier, latin square
  ► Avoid minor confound if always test cond 1 before cond 2 etc.
  ► N x n square, sequence x squad, containing condition numbers, such that each condition occurs once in each column, each order
► Location of testing
  E.g., 2 experiment stations

Slides available at http://people.umass.edu/cec/teaching.html and at http://coursework.stanford.edu

So how do you randomize?
► E-mail me (cec@psych.umass.edu) and I’ll send you a powerful program
► But for most purposes, check out http://www-users.york.ac.uk/~mb55/guide/randsery.htm or http://www.randomizer.org/index.htm

Factor out confounds
► Factorial design
  An example, discussed earlier: Arregui et al., 2006
  Initial experiment contained a confound; corrected in second experiment by adding a second factor

Arregui et al., rating study
Acceptability rating

| Condition | Sentence | Rating | Rating clause 1 |
|---|---|---|---|
| A. OK | None of the astronomers saw the comet, but John did. | 4.36 | 4.53 |
| B. Embedded VP | Seeing the comet was nearly impossible, but John did. | 3.71 | 4.41 |
| C. VP w/ trace | The comet was nearly impossible to see, but John did. | 3.27 | 4.81 |
| D. Neg adj | The comet was nearly unseeable, but John did. | 2.21 | 4.39 |

Arregui, A., Clifton, C. J., Frazier, L., & Moulton, K. (2006). Processing elided verb phrases with flawed antecedents: The recycling hypothesis. Journal of Memory and Language, 55, 232-246.

Factorial Design

| First clause | Ellipsis absent | Ellipsis present |
|---|---|---|
| Syntactically OK | None of the astronomers saw the comet. | ..but John did. |
| Embedded VP | Seeing the comet was nearly impossible. | ..but John did. |
| VP with trace | The comet was nearly impossible to see. | ..but John did. |
| Nominalization | The comet was nearly unseeable. | ..but John did. |

Factor 1: syntactic form of initial clause (4 levels)
Factor 2: presence or absence of ellipsis (2 levels)

An interaction
Interaction: The size of the effect of one factor differs among the different levels of the other factor.
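The definition of an interaction can be made concrete with the Arregui et al. mean ratings given above. The sketch below computes the effect of ellipsis separately at each level of the first-clause factor. It assumes that the "Rating clause 1" column is the ellipsis-absent condition of the factorial design; the variable and function names are illustrative only.

```python
# Mean ratings from the Arregui et al. (2006) table above.
# Assumption: "Rating clause 1" is the ellipsis-absent condition.
ratings = {
    "OK":          {"ellipsis_absent": 4.53, "ellipsis_present": 4.36},
    "Embedded VP": {"ellipsis_absent": 4.41, "ellipsis_present": 3.71},
    "VP w/ trace": {"ellipsis_absent": 4.81, "ellipsis_present": 3.27},
    "Neg adj":     {"ellipsis_absent": 4.39, "ellipsis_present": 2.21},
}


def ellipsis_effect(cell):
    """Drop in mean rating when the elliptical clause is added."""
    return round(cell["ellipsis_absent"] - cell["ellipsis_present"], 2)


effects = {form: ellipsis_effect(cell) for form, cell in ratings.items()}
for form, effect in effects.items():
    print(f"{form:12s} cost of ellipsis = {effect:.2f}")

# With no interaction, the four costs would be roughly equal.
spread = round(max(effects.values()) - min(effects.values()), 2)
print("range of the ellipsis effect across clause types:", spread)
```

The cost of ellipsis grows from 0.17 to 2.18 rating points across the four clause types, which is the descriptive pattern an interaction refers to. Whether the differences are reliable is a separate question that needs a statistical test on the underlying data.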
[Figure: Mean Acceptability Rating by first-clause type (OK, Embed VP, VP trace, Nominal), Ellipsis absent vs. Ellipsis present]

Factorial Designs in Hypothesis Testing
► Cowart (1997), that-trace effect
  Question: is it bad to extract a subject over that?
  ► ?I wonder who you think (that) t likes John.
  Acceptability judgment: worse with that
► But: underlying theory talks just about extracting a subject. Does acceptability suffer with extraction of object over that?
  ► I wonder who you think (that) John likes t.
  Need to do factorial experiment
  ► Factor 1: presence vs. absence of that
  ► Factor 2: subject vs. object extraction

The results (from before)
[Figure: Mean judged acceptability (z-score), No-That vs. That, for Subject Extraction and Object Extraction]
A clear interaction.

A worry about scales
► Interactions of the form “the effect of Factor A is bigger at Level 1 than at Level 2 of Factor B.”
  Cowart, effect of that bigger at subject than object extraction
► Types of scales
  Ratio: true zero, equal intervals, can talk about ratios (time, distance, weight)
  Interval: equal intervals, but no true zero (temperature, dates on a calendar)
  Ordinal: only more or less (ratings on rating scale, measures of acceptability, measures of difficulty)
[Figure: the same data plotted on the original scale and on a log scale; Factor 1-1 and Factor 1-2 at Factor 2-1 and Factor 2-2]
Is there really an interaction?

Disordinal and crossover interactions
[Figure: two panels, Factor 1-1 and Factor 1-2 at Factor 2-1 and Factor 2-2]

An example of an important but problematic experiment: Frazier & Rayner, 1982
Closure:
  LC: Since Jay always jogs a mile and a half this seems like a short distance to him. (40, 40 ms/ch)
  EC: Since Jay always jogs a mile and a half seems like a very short distance to him. (35, 54 ms/ch)
Attachment:
  MA: The lawyers think his second wife will claim the entire family inheritance. (36 ms/ch)
  NMA: The second wife will claim the entire family inheritance belongs to her. (37, 51 ms/ch)
Data shown: ms/character first pass times for the colored regions.
Problems???
Frazier, L., & Rayner, K. (1982). Making and correcting errors during sentence comprehension: Eye movements in the analysis of structurally ambiguous sentences. Cognitive Psychology, 14, 178-210.

Dictum 3: Know how to deal with unavoidable extraneous variability
► i.e., know some statistics
► Measures of central tendency (“typical”)
  Mean (average, sum/N)
  Median (middle value)
  Mode (most frequent value)
► Measures of variability
  Variance (Average squared deviation from mean)
  Average deviation (Average absolute deviation from median)

Computation of Variance

Distr 1

| X | Mean-X | Sq’d |
|---|---|---|
| 7 | 9 | 81 |
| 10 | 6 | 36 |
| 30 | -14 | 196 |
| 17 | -1 | 1 |
| Sum 64 | | 314 |

Mean 16, Variance 78.5

Distr 2

| X | Mean-X | Sq’d |
|---|---|---|
| 12 | 4 | 16 |
| 14 | 2 | 4 |
| 21 | -5 | 25 |
| 17 | -1 | 1 |
| Sum 64 | | 46 |

Mean 16, Variance 11.5

Variance in an experiment
► Systematic variance: variability due to manipulation of IV and other variables you can identify
► Random variance: variability whose origin you’re ignorant of
► Point of inferential statistics: is there really variability associated with IV, on top of other variability? Is there a signal in the noise?

Best way to deal with extraneous variability: Minimize it!
► Keep everything constant
  Reduce experimental noise
  ► See the signal easier
  Keep environment, instructions, distractions, experimenter, response manipulanda, etc. constant
  Pretest subjects and select homogeneous ones, if that suits your purposes

One way to minimize extraneous variance: Within-subject designs
► Subjects differ… a lot, in some measures, e.g., reading speed, reaction time
► Present all levels of your IV to each subject
  Assume the subject effect is a constant across all the levels.
  Differences among conditions thus abstracted from subject differences
► Counterbalancing necessary
  Test each item in each condition for an equal number of subjects.
► Worry about experience changing what your subject did
  E.g., will reading an unreduced relative clause (The horse that was raced past the barn fell) affect reading of a reduced relative clause sentence?

Statistical tests/statistical inference
► Never expect observed condition means to be exactly the same
  Just noise? Or signal + noise?
► Statistical inference: is there really a signal?
  p value: the probability you’d obtain a difference among the means that is as large as what you observed, if the true signal is zero
  “null hypothesis” test

Basic logic of statistical tests (t, F, etc.)
► Get one estimate of the variability due to noise + any signal
  Estimate from the variation among the observed mean values in the different conditions
► Get another estimate of the variability due to noise alone
  Estimate from how much variation there is among subjects, within a condition
► If signal = 0, the ratio of the two estimates is expected to be 1
  If it’s enough bigger than 1, then the signal is likely to be non-zero

Underlying model
► Subjects are a random sample from some population
► You can make inferences about variability in the population from the observed variability in the sample
► Logical inference: “if the size of the signal in the population is zero, the probability of getting a difference among the means that is as big as we observed is p,” where p is the level of significance
  If p is small enough, reject the proposal that the population signal is zero

Between-subject design
► Estimate of signal + noise: variability among the condition means
► Estimate of noise alone: variability among the subject means in each condition
► F = MS(between conditions) / MS(within condition)
  MS, not exactly variance; must divide sum of squares by df, not by N

Within-subject design
► Estimate of signal + noise: variability between the condition means
► Estimate of noise alone
  Get a measure of the variability among condition means for each subject
  Calculate the variability among these measures
  Subjects x treatment interaction
  ► How much the size of the treatment effect differs among subjects is an estimate of error variability.
► F = MS(between conditions) / MS(subjects x treatments)

Advanced topics
► Multi-factor designs, tests for interactions
► Treat counterbalancing factors as factors in ANOVA
  E.g., if you have 4 conditions and 4 counterbalancing groups, differing in assignment of items to conditions, you can treat groups as a between-subject factor and pull out variability due to items from the subjects x treatment error term
► Statistical accommodation of extraneous variation
  Analysis of covariance
  Multi-level, hierarchical designs
Pollatsek, A., & Well, A. D. (1995). On the use of counterbalanced designs in cognitive research: A suggestion for a better and more powerful analysis. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 785-794.
Forthcoming special issue of the Journal of Memory and Language on new and alternative data analyses.

Dictum 4: Have enough power to overcome extraneous variability
► Add more data!
  Minimizes noise component of differences among condition means
► Law of large numbers
  The larger the sample size, the more probable it is that the sample mean comes arbitrarily close to the population mean
  If you’re (almost) looking at population means, any differences have to be real – not sampling error

Law of large numbers
► Imagine a population with a variance v².
► Imagine you take a bunch of independent samples from this population, each sample of size N.
► Each sample will have a mean value.
► These mean values will have a variance, which turns out to be v²/N.
► This variance will be smaller as N gets larger.
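The claim that sample means have variance v²/N can be checked with a small simulation. The sketch below is illustrative only: it assumes a normally distributed population with standard deviation 10 (so v² = 100), and the function name `variance_of_sample_means`, the number of simulated samples, and the random seed are arbitrary choices, not part of the slides.

```python
import random
import statistics


def variance_of_sample_means(n, pop_sd=10.0, n_samples=4000, seed=1):
    """Draw many samples of size n and return the variance of their means."""
    rng = random.Random(seed)
    means = []
    for _ in range(n_samples):
        sample = [rng.gauss(0.0, pop_sd) for _ in range(n)]
        means.append(statistics.fmean(sample))
    return statistics.pvariance(means)


for n in (5, 25):
    observed = variance_of_sample_means(n)
    predicted = 10.0 ** 2 / n  # v squared divided by N
    print(f"N = {n:2d}: observed {observed:6.2f}, predicted {predicted:6.2f}")
```

The observed values should land close to the predicted 20 (N = 5) and 4 (N = 25), with the remaining gap reflecting the finite number of simulated samples.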
A sampling simulation
► The effect of sample size on the variability of sample means
  Bigger samples, smaller variability
  Standard deviation = square root of variance
[Figure: simulated distributions of sample means for N = 5 and N = 25, from http://onlinestatbook.com/stat_sim/index.html]

Means from larger Ns have less noise
► Holds for subject means
  More subjects, means reflect vagaries of sample less; means have less noise
► Holds for item means too
  More items, means less affected by peculiarity of individual items
► OK, you can have too many items and burn out your subjects

Have enough power…
► Back to holding everything constant
  First reason: don’t want variables confounded with our independent variable
  Second reason: minimize noise. Less noise, more power.

Dictum 5: Pay attention to your data, not just your statistical tests
► Look at your data, graph them, try to make sense out of them
  Don’t just look for p < .05!
► Examine confidence intervals
► Look at your data distributions
  Stem and leaf graphs
  By subjects…

Confidence intervals
► Confidence intervals (of means over items and subjects)
  If you have a sample mean and you know σM, the true standard deviation of the sample mean, you can say that there is a 95% chance that the true population mean is within +/- 1.96 * σM of your sample mean.
  But of course you don’t know σM, so you have to estimate it from your data and use the t distribution.
  But then you can present your means as X +/- CI
► A simulation: http://onlinestatbook.com/stat_sim/index.html

Confidence Intervals
► Do you want to look at individual item data?
  Don’t make too much of the tea leaves
  Consider getting a confidence interval on the individual item means

Example: Self-paced reading time
► Cond 1: This table is slightly dirty and the manager wants it removed. (minimum standard adjective)
► Cond 2: This table is slightly clean and the manager wants it removed.
(maximum standard adjective)
► Reading time, clause 2, slower for maximum than minimum standard adjective
Are some items more effective than others?

Confidence intervals, individual items
► Each item has 12 different observations (different subjects) in each condition.
► Can measure the variability among these subject data points for max std and min std adjective
  And from that, estimate the variability of the difference, and from that, the confidence interval of the difference

| Max std adj | Min std adj | Mean Max | Mean Min | Diff | 95% CI Diff |
|---|---|---|---|---|---|
| Clean | Dirty | 1960 | 1486 | 474 | +/- 662 |
| Safe | Dangerous | 1572 | 1258 | 314 | +/- 303 |
| Healthy | Sick | 1635 | 1164 | 471 | +/- 485 |
| Dry | Wet | 1130 | 1229 | -99 | +/- 427 |
| Complete | Incomplete | 1196 | 1617 | -421 | +/- 621 |

Dictum 5: Pay attention to your data, not just your statistical tests
► Graph your data
► Examine confidence intervals
► Look at the distributions of your means
  Stem and leaf graphs
  By subjects… and by items

By subjects

Maria asked Bob to invite Fred or Sam to the barbecue. She didn't have enough room to invite both.
  Frequency   Stem & Leaf
   3.00       0 . 778
  17.00       1 . 01111222333334444
  15.00       1 . 556677788889999
  11.00       2 . 00011122333
   1.00       2 . 7
   1.00       Extremes (>=3286)
  Stem width: 1000.00
  Each leaf: 1 case(s)

Maria asked Bob not to invite Fred or Sam to the barbecue. She didn't have enough room to invite both.
  Frequency   Stem & Leaf
   1.00       0 . 9
  13.00       1 . 1112223334444
  22.00       1 . 5555555666677777777788
   9.00       2 . 000111344
   1.00       2 . 7
   2.00       Extremes (>=3120)
  Stem width: 1000.00
  Each leaf: 1 case(s)

By items

Maria asked Bob to invite Fred or Sam to the barbecue. She didn't have enough room to invite both.
  Frequency   Stem & Leaf
   7.00       1 . 1122234
  12.00       1 . 556666667788
   4.00       2 . 1123
   1.00       Extremes (>=2629)
  Stem width: 1000
  Each leaf: 1 case(s)

Maria asked Bob not to invite Fred or Sam to the barbecue. She didn't have enough room to invite both.
  Frequency   Stem & Leaf
   1.00       Extremes (=<950)
   4.00       1 . 3334
  14.00       1 . 56666777777888
   4.00       2 . 1222
   1.00       Extremes (>=2562)
  Stem width: 1000.00
  Each leaf: 1 case(s)

Variation among items
► Treat items as a random sample from some population.
  Just like we treat subjects as a random sample
► Then do statistical tests to generalize to this population of items.
  “F1” and “F2”
► Criticisms
  Should generalize simultaneously to subjects and items, using F’.
  ► But must estimate F’ unless you have data from every subject on every condition of every item (min F’; Clark, 1973)
  We’re fooling ourselves when we view items as anything like a random sample from a population.

Alternatives to F1 and F2
► Some conventional ANOVA designs do permit generalization to subjects and items without full data
  But generally lack power
► Coming trend: multilevel, hierarchical designs
  Complex regression-based analyses of individual data points, not subject- or item-means.

But what if you recognize that random sampling from a population of items is nutty?
► What you really want is to show that your effects hold for most or all of your items and aren’t due to a couple of oddballs
  F2 tests are a crude attempt to do this.
► People are struggling to find a better way.
  One possibility, from Ken Forster: plot effect size vs. effect rank, see if it is pleasingly regular.

Forster, “What is F2 good for”
► Plot effect size (difference between two conditions) against rank of effect size (suggested by Peter Killeen)
  Both cases: a 5 msec mean effect size
  Left panel: a limited effect (add 100 ms to 5 items)
  Right panel: a general effect (add 5 ms to 100 items)
[Figure: two panels plotting EFFECT SIZE against RANK; the fitted lines are labeled R2 = 0.9502 and R2 = 0.4491]
Forster, K. (2007). What is F2 good for? Round 2. Unpublished ms, University of Arizona.

Bogartz, 2007
► Effect size vs. rank effect size, Clifton et al. JML 2003
  Effect of ambiguity (absence of relative pronoun) on sentences with relative clauses (The man [who was] paid by the parents was unreasonable)
  Contrasted with Monte Carlo data based on same mean and variance as experimental data
Bogartz, R. (2007). Fixed vs. random effects, extrastatistical inference, and multilevel modeling. Unpublished manuscript, University of Massachusetts.

III. How to do experiments, Part 2: Experimental procedures
► Acceptability judgment
► Interpretive choices
► Stops making sense
► Self-paced reading
► Eyetracking during reading
► ERP
► Secondary tasks
► Speed-accuracy tradeoff tasks
► Eyetracking during listening (“visual world”)

Choose task that is appropriate for your question
► Is this really a sentence of English?
► Does some variable affect how a sentence is understood?
► Is there some difficulty in understanding this sentence?
► Just where in the sentence does the difficulty appear?
► Where in processing does the difficulty appear?
► Can we observe consequences of processing other than difficulty?
► and more…

Acceptability judgment
► Simple written questionnaire
  See Schütze, Cowart for lots of examples
  Worry about instructions
  Rating scales
  ► Is seven the magical number?
► Magnitude estimation
  Basis in psychophysics – attempt to build an interval scale

Magnitude estimation: an example
Which man did you wonder when to meet?
Assign an arbitrary number to that item, greater than zero. Now, for each of the following items, assign a number. If the item is better than the first one, use a larger number; if it’s worse, smaller. Make the number proportional to how much better or worse the item is than the original – if twice as good, make the number 2x the start; if 1/3 as good, make the number 1/3 as big as the start.

Magnitude estimation: an example
► Which man did you wonder when to meet?
  Assign an arbitrary number, greater than 0, to this first item.
Now, for each successive item, assign a number: bigger if the item is better, smaller if worse, and proportional. If the item is 2x as good, make the number 2x the original; if ¼ as good, make the number ¼ as big as the original.
► Which book would you recommend reading?
► When do you know the man whom Mary invited?
► This is a paper that we need someone who understands.
► With which pen do you wonder when to write.
► Who did Bill buy the car to please?
Bard, E. G., Robertson, D., & Sorace, A. (1996). Magnitude estimation of linguistic acceptability. Language, 72.

On-line and web-based questionnaires
► WebExp: http://www.webexp.info
► Subject scheduling systems option
► Advantages: Big N, easy, broader population
► Disadvantages: you have to worry about control

Speeded acceptability judgment
► Time pressure; discourage navel-examining
► Measure reaction time and acceptability
► Example: is given-new order more acceptable than new-given?
    Maybe so. Maybe not always.

Given-New: DefNP-IndefNP
a. All the players were watching an umpire. The pitcher threw the umpire a ball.
New-Given: IndefNP-DefNP
b. The catcher tossed a ball to the mound. The pitcher threw an umpire the ball.
Given-New: DefNP-IndefPP
c. The catcher tossed a ball to the mound. The pitcher threw the ball to an umpire.
New-Given: IndefNP-DefPP
d. All the players were watching an umpire. The pitcher threw a ball to the umpire.

[Figure: two panels, Reaction Time (ms) and Percent Accepted, each comparing Given-New and New-Given orders for NP-NP and NP-PP structures]
Clifton, C. J., & Frazier, L. (2004). Should given information come before new? Yes and no. Memory & Cognition, 32, 886-895.
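The magnitude-estimation instructions earlier in this part ask for numbers proportional to a freely chosen starting number, so raw responses from different participants are not directly comparable. The sketch below shows one common way to put them on a shared scale: divide each estimate by the participant's own reference number and take the logarithm. This normalization is an assumption for illustration, not something the slides specify, and the function name and data are hypothetical.

```python
import math


def normalize_magnitude_estimates(reference, estimates):
    """Return log10 ratios of each estimate to the participant's reference number.

    A value of 0 means "as good as the reference item"; positive values mean
    better, negative values mean worse. (Assumed analysis step, not from the slides.)
    """
    if reference <= 0:
        raise ValueError("reference must be greater than zero")
    normalized = []
    for value in estimates:
        if value <= 0:
            raise ValueError("estimates must be greater than zero")
        normalized.append(math.log10(value / reference))
    return normalized


# Hypothetical participant: reference item given 10; later items judged
# twice as good, equally good, and one quarter as good.
scores = normalize_magnitude_estimates(10.0, [20.0, 10.0, 2.5])
```

With the log ratio, "twice as good" and "half as good" come out as equal distances on either side of zero, whatever starting number the participant picked.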
Choice of interpretation
► Paper and pencil or speeded
► Multiple-choice or paraphrase
► Example: interpretation of ellipsis
    Full stop effect
► Auditory questionnaire
    Relative size of intonational phrase boundary
► Strengths: does indicate whether a variable has an effect or not
► Weaknesses: don’t know when the effect operates
    Worst case: subject says sentence to self, mulls it over, reacts to the prosody s/he happened to impose

Example of interpretation questionnaire: VPE
John said Fred went to Europe and Mary did too. What did Mary do?
    …went to Europe 60%
    …said Fred went to Europe 40%
John said Fred went to Europe. Mary did too. What did Mary do?
    …went to Europe 45%
    …said Fred went to Europe 55%
Frazier, L., & Clifton, C. Jr. (2005). The syntax-discourse divide: Processing ellipsis. Syntax, 8, 154-207.

Who arrived? Johnny and Sharon’s[ip] inlaws. (0 ip)
Who arrived? Johnny[ip] and Sharon’s[ip] inlaws (ip ip)
Who arrived? Johnny[IPh] and Sharon’s[ip] inlaws (IPh ip)
Alternative answers: Sharon’s inlaws and Johnny; Sharon’s and Johnny’s inlaws
Clifton, C. J., Carlson, K., & Frazier, L. (2002). Informative prosodic boundaries. Language and Speech, 45, 87-114.

Stops-making-sense task
► Word-by-word, self-paced, but at each word the reader makes one of two responses: OK, BAD
► Get cumulative proportion of BAD responses and OK RT
► Sensitive to point of difficulty in a sentence

Example of SMS
Which client/prize did the salesman visit while in the city? (transitive)
Which child/movie did your brother remind to watch the show? (object control)
Boland, J., Tanenhaus, M., Garnsey, S., & Carlson, G. (1995). Verb argument structure in parsing and interpretation: Evidence from wh-questions. Journal of Memory and Language, 34, 774-806.
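The "cumulative proportion of BAD responses" measure mentioned for the stops-making-sense task can be sketched as follows. The data layout is an assumption for illustration: each trial is recorded as the word position at which the reader pressed BAD, or None if every word was judged OK. The function name and the example trials are hypothetical.

```python
def cumulative_bad_proportion(bad_positions, n_words):
    """Cumulative proportion of trials rejected at or before each word position.

    bad_positions: one entry per trial; the 1-based word position of the BAD
                   response, or None if the reader never responded BAD.
    n_words:       number of word positions in the sentence frame.
    """
    n_trials = len(bad_positions)
    if n_trials == 0:
        raise ValueError("need at least one trial")
    curve = []
    for position in range(1, n_words + 1):
        rejected = sum(
            1 for p in bad_positions if p is not None and p <= position
        )
        curve.append(rejected / n_trials)
    return curve


# Hypothetical condition with 8 trials of a 6-word sentence.
trials = [None, 4, 4, 5, None, 6, 4, None]
curve = cumulative_bad_proportion(trials, 6)
```

Plotting one such curve per condition shows the word position at which the conditions start to diverge, which is what makes the task sensitive to the point of difficulty.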
Stops-making-sense task
► Strengths
    Begins to address processing dynamics questions
    Can get both time and choice as relevant data
► Weaknesses
    Very slow reading time, typically 500 to 900 ms/word
    Permits more analysis than is done in normal reading

Self-paced reading
► Word by word self-paced reading
    Generally noncumulative
    Sometimes in place (“RSVP”), sometimes moving across screen
    Time strongly affected by length of word, frequency of word
    ► Can statistically adjust
► Variant: phrase by phrase self-paced reading.

SPR methods
► Computer programs
    E-prime (www.pstnet.com)
    Dmastr/DMDX (http://www.u.arizona.edu/~kforster/dmastr/dmastr.htm)
    Others (PsyScope, Superlab, various homemade systems)

SPR Evaluation
► Cheap and effective
    Don Mitchell, trailblazing technique
► Slower than normal reading
    Perhaps 180 words per minute reading
    Unless reader clicks fast and buffers….
► Often get effect on word following critical word
    Spillover
► Phrase-by-phrase: overcomes these difficulties, but you lose precision
Mitchell, D. C. (2004). On-line methods in language processing: Introduction and historical review. In M. Carreiras & C. J. Clifton (Eds.), The on-line study of sentence comprehension: Eyetracking, ERPs, and beyond. Brighton, UK: Psychology Press.

More SPR evaluation
► Does SPR hide subtle details?
    Maybe: Clifton, Speer, & Abney 1991 JML; Schütze & Gibson 1999 JML
    ► Verb attachment: The man expressed his interest in a hurry during the storewide sale… (VP adjunct)
    ► NP attachment: The man expressed his interest in a wallet during the storewide sale… (NP argument)
    Clifton et al: eyetracking, slow first-pass time in NP-attached PP (followed by faster reading for argument than adjunct)
    Schütze & Gibson, word by word SPR, only the argument advantage
    ► Better materials
    ► Worse technique

Even more SPR evaluation
► Does SPR introduce unnatural effects?
    Maybe: Tabor, Galantucci, Richardson, 2004, local coherence effects
    The coach smiled at the player tossed/thrown the frisbee by the…
    ► Result: slowed reading at tossed, as if reader considering grammatically illegal main clause interpretation of “the player tossed the…”
    But: scuttlebutt, may not show up in eyetracking
    ► Global SPR reading speed, 412 ms/word, 145 wpm

Eyetracking during reading
► Eye movement measurement
    Fixations and saccades
    Reading time affected by word length, frequency, other lexical factors

Word-based measures of eye movements (ms)
Most cowboys hate to live in houses so they
Fixations (number: duration in ms), in left-to-right position: 1: 223; 2: 235; 3: 178; 4: 301; 6: 179; 5: 267; 7: 199
cowboys  SFD: none    FFD: 235 ms  GAZE: 413 ms  Go-P: 413 ms
hate     SFD: 301 ms  FFD: 301 ms  GAZE: 301 ms  Go-P: 301 ms
houses   SFD: 267 ms  FFD: 267 ms  GAZE: 267 ms  Go-P: 436 ms

Region-based measures
While Mary/ was mending/ the sock/ fell off/
[Figure: nine numbered fixations marked over the sentence; durations shown: 277, 213, 233, 277, 445, 289, 401, 233, 314 ms]
Two regions are scored on the slide:
First pass:  510 ms   445 ms
Second pass: 401 ms   547 ms
Go-Past:     510 ms   1393 ms
Total Time:  911 ms   992 ms

Interpretation of the measures
► “Early” vs. “late” measures
    Debates about modularity
    Some measures clearly late: second pass time
    But early: need explicit model of eye movement control
► Rayner, Pollatsek, Reichle, colleagues: E-Z Reader
    Good model of lexical effects
    Says little or nothing about parsing & interpretation

ERP (event-related potentials)
► Measure electrical activity on scalp
    Reflect electrical activity of bundles of cortical neurons
    Good time resolution, questionable spatial resolution
► Standard effects: LAN, N400, P600
    Typical peak time, polarity

“Standard” ERP effects (Osterhout, 2004)
N400: The cat will EAT vs. The cat will BAKE
P600: The cat will EAT vs. *The cat will EATING
Osterhout, L. et al. (2004). Sentences in the brain…. In M. Carreiras and C. Clifton, Jr., The on-line study of sentence comprehension. New York: Psychology Press, pp 271-308.
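Returning to the word-based eye-movement measures from the eyetracking slides (first fixation, gaze, go-past, total time): the sketch below shows how they can be computed from a temporally ordered fixation record. The data format, function name, and example values are hypothetical. The sketch also simplifies standard practice by not checking that the word was first fixated before any word to its right, which first-pass measures usually require.

```python
def word_measures(fixations, target):
    """Compute reading-time measures for one word.

    fixations: list of (word_index, duration_ms) tuples in temporal order.
    target:    index of the word of interest.
    Returns None if the word was never fixated (skipped).
    """
    first = next(
        (i for i, (word, _) in enumerate(fixations) if word == target), None
    )
    if first is None:
        return None

    # Gaze duration: consecutive fixations on the target from first entry.
    gaze = 0
    i = first
    while i < len(fixations) and fixations[i][0] == target:
        gaze += fixations[i][1]
        i += 1

    # Go-past time: all fixations from first entry until a word to the right
    # is fixated, including regressions leftward and returns to the target.
    go_past = 0
    j = first
    while j < len(fixations) and fixations[j][0] <= target:
        go_past += fixations[j][1]
        j += 1

    # Total time: every fixation on the target, whenever it occurred.
    total = sum(duration for word, duration in fixations if word == target)

    return {
        "first_fixation": fixations[first][1],
        "gaze": gaze,
        "go_past": go_past,
        "total": total,
    }


# Hypothetical record: word 1 gets two fixations; word 5 is fixated,
# followed by a regression to word 3 and a return; word 4 is skipped.
fixations = [(0, 200), (1, 240), (1, 180), (2, 300),
             (5, 260), (3, 170), (5, 210), (6, 190)]
early_word = word_measures(fixations, 1)
late_word = word_measures(fixations, 5)
skipped = word_measures(fixations, 4)
```

For word 5, gaze duration stops at the regression while go-past time keeps accumulating until the eyes move on to word 6, which is why go-past is often treated as a later measure than gaze.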
Secondary tasks: Load effects
► Limited capacity models
► Desire: measure of auditory processing difficulty
► Phoneme monitoring
    E.g., Cutler & Fodor, 1979
    ► Which man was wearing the hat? The man on the corner was wearing the blue hat.
    ► Which hat was the man wearing? The man on the corner was wearing the blue hat.
    ► Target: /k/ or /b/; when target started focused word, 360 ms; when started non-focused word, 403 ms.
    Interpretive difficulties

Secondary tasks: Load effects II
► Lexical decision (or naming, or semantic decision, or….)
    Word unrelated to sentence; measure of available capacity
    Piñango et al., auditory presentation, visual probe
    ► The man examined the little bundle of fur for a long time aspect to see if it was… 743 ms
    ► The man kicked the little bundle of fur for a long time aspect to see if it was… 782 ms
Piñango, M. M., Zurif, E., & Jackendoff, R. (1999). Real-time processing implications of enriched composition at the syntax-semantics interface. Journal of Psycholinguistic Research, 28, 395-414.

Secondary tasks: Probe for activation
► Auditory (or visual) presentation
    Probe semantically related to word in sentence whose activation you want to measure
► E.g., activation of “filler” at “gap” in long-distance dependency
    The policeman saw the boy who the crowd at the party[1] accused[2] of the crime.
    ► Present probe girl or matched unrelated word at point 1 or 2; girl faster at 2.
    Worries, criticisms…
Nicol, J., Swinney, D., Love, T., & Hald, L. (2006). The on-line study of sentence comprehension: An examination of dual task paradigms. Journal of Psycholinguistic Research, 35, 215-231.

Speed-accuracy tradeoff
► Present sentence (usually RSVP), subject to make judgment (grammaticality, etc.)
► But judgment is made in response to a signal that is presented some time after a critical point.
► Accuracy increases with time after the critical point.
► Note: the current procedure uses multiple signals and multiple responses per trial, e.g., every 350 ms
    Early procedure: just one signal, one response, per trial
McElree, B., Pylkkanen, L., Pickering, M., & Traxler, M. (2006). A time course analysis of enriched composition. Psychonomic Bulletin & Review, 13, 53-59.

McElree et al. data
Best fit: coercion lowered asymptote and lowered rate of approach to asymptote.

Visual World (“head-mounted eyetracking”)
► Measure where you look when you are listening to speech.
    Cooper, 1974. About 40% probability of fixating on referent, 30% fixating on related picture
    ► About 10% in control group.
► Permits on-line measure of processing during listening.
    Not just difficulty: actual content
    Both incidental looks and controlled reaching
Cooper, R. M. (1974). The control of eye fixation by the meaning of spoken language: A new methodology for the real-time investigation of speech perception, memory, and language processing. Cognitive Psychology, 6, 84-107.

Cooper, 1974
While on a photographic safari in Africa, I managed to get a number of breathtaking shots of the wild terrain. These included pictures of rugged mountains and forests as well as muddy streams winding their way through big game country. One of my best shots though was ruined by my scatterbrained dog Scotty. Just as I had slowly wormed my way on my stomach to within range of a flock….

Allopenna, Magnuson & Tanenhaus (1998)
[Figure: head-mounted eyetracker with eye camera and scene camera; spoken instruction: “Pick up the beaker”]
Allopenna, P. D., Magnuson, J. S., & Tanenhaus, M. K. (1998). Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language, 38, 419-439.

Allopenna et al. Results
200 ms after coarticulatory information in vowel

Thanks!
Enjoy the Institute!