Quizzing with WebCT

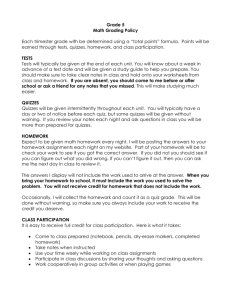

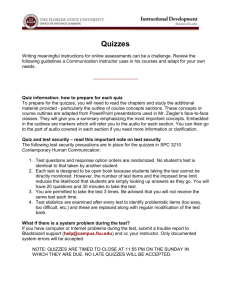

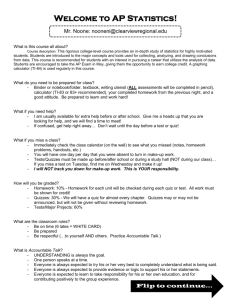

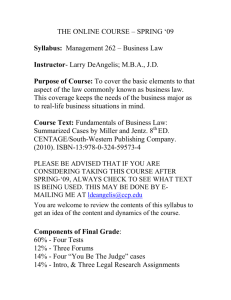

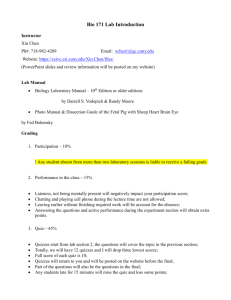

Online Learning Tools (workshop)
• John O'Byrne (Sydney)
  – Mastering physics software: 40 min
• Alex Merchant (RMIT)
  – Online assignments: 20 min
• Geoff Swan (ECU)
  – WebCT Quizzes: 20 min
Key Issues in Learning and Teaching in Undergraduate Physics – A National Workshop (16:00 to 17:30, Sept 28, 2005, University of Sydney).

WebCT Quizzes: Points of view
• Student viewpoint: ACTIVITY
  – Do the autc05 quiz at least twice, checking grades and individual question feedback
  – Use student logins & passwords (autc1 to autc12)
  – http://thomson4.webct.com/public/swanserwaycowan/index.html
• Staff viewpoint: show
  – Quizzes & individual questions (eg ECU example)
    • Creating new questions and quizzes?
  – Course management
    • Results (graph) & download
  – Other?
THE END

Key to Participation
Geoff Swan
Physics Program, School of Engineering and Mathematics
Faculty of Computing, Health and Science
Edith Cowan University, Perth, AUSTRALIA
AIP Congress, Canberra ACT (Feb 1, 2005)

Outline
• PART 1: Setting the scene
  – Background
  – Online quizzing environment
  – 2003 trial
• PART 2: Online quizzes in 2004
  – Major adjustments from trial
  – Results
    • Participation rates
    • Unit grades
    • Student evaluation
    • Open response issues
• PART 3: Discussion

Background
• Setting: Physics of Motion
  – First-year, first-semester physics unit
  – Engineering, Aviation, Physics major & Education students
  – Assessment: 60% exam, 20% labs, 20% test and/or assignments?
  – Homework: selected end-of-chapter problems (Serway & Beichner/Jewett). Not assessed. Students have worked solutions.
• Problem (anecdotal): struggling students do insufficient physics at home.
  – Time: competing demands of study vs work vs family etc
  – Motivation (affected by many factors)
• A Solution?
  Provide regular online quizzes to:
  – Engage students in regular problem solving
  – Help students keep up with the curriculum
  – Aim at struggling students: increase confidence
  – Encourage good study practices early (ie sem 1, year 1)
  – Provide early & continuous feedback (positive and negative)
  – Improve students' overall performance

Motion Quizzes Trial, Sem 1, 2003
Quiz  Topic(s)                                            Week set  Assessment
0     ECUGUEST: check out ID & Password + quiz site       1         None
1     Motion in one dimension, Vectors                    2         Highest
2     Motion in two dimensions                            3         Average
3     Newton's Laws                                       4         Average
4     Circular motion dynamics, Work & Kinetic Energy     6         Average
5     Conservation of Energy, Momentum                    7         Average
------------------------------ mid-semester break ------------------------------
6     Rotational Motion                                   9         Average
7     Static Equilibrium, Elasticity, Oscillatory Motion  11        Average
8     Gravitation, Fluids                                 12        Average
Notes: 12-week term. Max 70 minutes for each quiz (4-6 questions). Each quiz available after the topic material is covered. About 8 days allowed to complete up to 2 attempts.

Student orientated 1
• Flexible delivery
  – Attempt: any place (home or ECU or ?) & any time
    • Fit in with other commitments
  – Guest quiz
• Rewards
  – Intrinsic: better understanding
  – Assessment: use to improve final grade (if better than mid-semester test: 20%)
  – Revision: have extra revision questions and answers for exam
• No access at all if online quiz not attempted within timeframe

Student orientated 2 (Feedback)
• Feedback
  – General (how am I going in this physics unit?): grades
  – Specific (how do I answer this question?): detailed feedback!
    • NO detailed feedback provided by publisher's test bank
• Immediate feedback (within seconds!)
  – Grades and detailed feedback
• Any time and any place
• Can act straight away on detailed feedback
  – Attempt a similar but different quiz (in 2003, after a 30-minute wait)
    • Strike while the iron is hot!
    • Can improve grade
    • Formative assessment
• Significant advantages exist with an online environment

Online advantages for the Lecturer
• Save TIME? (for large classes)
  – Existing publisher-supplied WebCT test bank (1000 questions but no detailed feedback!) and server
  – Automatic marking
  – Results easily transferred to Excel mark sheet
• Reliable assessment
  – Individual tests (similar but different)
    • Alternate multiple choice questions
    • Calculated questions (truth tables, usually 20 variations)
    • Individual quizzes = harder to cheat, plus 2nd attempt option
• Flexible settings
  – Questions, assessment, access and timing options
Belief: both pedagogical and practical advantages to using the online environment

WebCT Question types
• Multiple choice
  – 1000-question test bank (no detailed feedback)
• Calculated
  – Use formula to create truth table
    • ie same question but (say) 20 alternate sets of numbers
  – Supported math operators:
    • ( ), +, -, *, /, **, sqrt, sin(x), cos(x), exp, log (base e, ie ln)
• Also available (but not used):
  – Paragraph (tutor marked)
  – Matching
  – Short answer

Example: Masses on strings 1
Example: Masses on strings 2

FLAG – Your opinion?
• RATE the detailed/general feedback
  – Consider a borderline first-year, first-semester physics student trying to SOLVE this question FOR THEMSELVES in a second attempt. Is the feedback:
    1. Too much detail
    2. About right
    3. Not enough detail
• Compare with the person next to you.
• How does this compare with a worked solution?

2003 online quizzes trial - RECAP
• Online quizzes: Physics of Motion trial in sem 1, 2003
  – Engage students with regular problem solving
  – 8 quizzes. Two attempts allowed (average mark normally used)
  – Optional assessment (if better than mid-sem test)
  – WebCT server (supplied by publisher)
• Two types of questions
  – Calculated
    • Use formula to create truth table
      – ie same question but (say) 20 alternate sets of numbers
    • Supported math operators:
      – ( ), +, -, *, /, **, sqrt, sin(x), cos(x), exp, log (base e, ie ln)
  – Multiple choice
    • Based on 1000+ multiple choice question test bank (no detailed feedback)
• Detailed feedback written for each question

2003 Results - SUMMARY
• Students LIKED the quizzes
  – 85%+: quizzes easy to access, relevant, improved my understanding of physics, helped me develop problem solving skills etc (N=29 with at least one quiz)
  – 80%: detailed feedback about right (20% not detailed enough)
• Participation was POOR! (of N=63 students who attempted the exam)
  – 17% attempted 6, 7 or 8 quizzes
    • No fails in this group! One student passed directly due to quizzes!!
  – 21% attempted 4 or 5 quizzes, 32% attempted 2 or 3 quizzes, 6% attempted 1 quiz
  – 24% attempted ZERO quizzes
• Four times more likely to fail?
  – The 19 students who completed zero or one quiz accounted for two thirds of the unit's fail & borderline pass grades (ie 63% of these students, compared with 14% of students who completed more than one quiz)
• Why zero quiz attempts? Lack of TIME!
  – Main factors given were (N=7): bloody hard, laziness, problems with internet connection (difficult to complete quizzes), time, don't have time/hate physics anyway, hard to allocate a solid 90 (sic) minutes in the first few weeks and never got into it, work.
• MUST increase participation rate in 2004!
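The "four times more likely to fail" question on the 2003 results slide can be checked with a little arithmetic. The slide gives only percentages, so the counts below are back-calculated assumptions: of the 19 students who did zero or one quiz, 12 received a fail or borderline pass, and of the remaining 44 exam sitters, 6 did. These are the only whole-number counts that reproduce the stated 63%, 14% and two-thirds figures. A minimal Python sketch:

```python
from fractions import Fraction

# Counts back-calculated from the slide's percentages (an assumption; the
# slides report only 63%, 14% and "two thirds", N=63 exam sitters in 2003).
low_n, low_poor = 19, 12    # zero or one quiz: 12 fail/borderline of 19
high_n, high_poor = 44, 6   # more than one quiz: 6 fail/borderline of 44


def pct(part, whole):
    """Percentage rounded to the nearest whole number, as on the slide."""
    return round(100 * part / whole)


low_rate = Fraction(low_poor, low_n)
high_rate = Fraction(high_poor, high_n)

# Share of all fail/borderline grades coming from the low-participation group.
share_of_poor_grades = Fraction(low_poor, low_poor + high_poor)

# Ratio of the two rates ("how many times more likely").
rate_ratio = low_rate / high_rate

print(pct(low_poor, low_n), pct(high_poor, high_n))  # 63 14
print(share_of_poor_grades)                           # 2/3
print(round(float(rate_ratio), 2))                    # 4.63
```

On these assumed counts the ratio of rates is about 4.6 (63% / 14% gives about 4.5 from the rounded figures), so "four times" is a fair summary.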
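The calculated question type listed under "WebCT Question types" (one formula, about 20 alternate sets of numbers, answers generated from the formula) can be illustrated with a short Python sketch. This is not WebCT's implementation: the kinematics question, the value ranges, the seeded generator, the random choice of one row per attempt and the 1% marking tolerance are all assumptions made for illustration. The answer formula uses only operators from the supported list (+, *, **).

```python
import random

# Hypothetical calculated question (not one of the ECU quiz questions):
# "A cart moving at u m/s accelerates at a m/s^2 for t s. How far does it travel?"
# The answer formula uses only operators on WebCT's supported list (+, *, **).
def answer(u, a, t):
    return u * t + 0.5 * a * t ** 2


def build_table(n_rows=20, seed=1):
    """Build a 'truth table': n_rows alternate sets of numbers, each with its answer.

    The value ranges and the seeded generator are illustrative assumptions.
    """
    rng = random.Random(seed)
    table = []
    for _ in range(n_rows):
        u = rng.randint(0, 10)   # initial speed, m/s
        a = rng.randint(1, 5)    # acceleration, m/s^2
        t = rng.randint(2, 8)    # time, s
        table.append({"u": u, "a": a, "t": t, "answer": answer(u, a, t)})
    return table


def is_correct(response, expected, rel_tol=0.01):
    """Mark a numeric response correct if within rel_tol (assumed 1%) of the answer."""
    return abs(response - expected) <= rel_tol * abs(expected)


table = build_table()
row = random.Random(42).choice(table)  # one row per student attempt (assumed)
print(f"u = {row['u']} m/s, a = {row['a']} m/s^2, t = {row['t']} s")
print(is_correct(row["answer"] * 1.005, row["answer"]))  # True: within 1%
```

A fixed seed makes the table reproducible; the slides do not say how WebCT assigns a variant to each student.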

Changes in 2004
• ASSESSMENT
  – Compulsory assessment: 20%
    • Replaced mid-semester test (now just for practice)
  – Highest mark rather than average of two attempts
    • From 1 of 8 quizzes in 2003 to 6 of 8 quizzes in 2004
    • Big incentive: high marks possible for struggling students
• OTHER
  – Orientation quiz in week 1 (not assessed)
  – Quiz time decreased (from 70 to 60 minutes)
  – New questions & topics rearranged
  – Research: additional survey items on
    • The quiz environment for students: how they went about it
    • The use of detailed feedback on individual questions

Quizzes in sem 1, 2004

Participation Rates
• Participation: how many attempted at least 6 of 8 quizzes?
• Consider only students who sat the end-of-semester exam
  – 2003: N=63
  – 2004: N=84
• 2003: just 17%
• 2004: 85%
  – HUGE increase in participation rates

Quiz and Unit Results
Consider only students who sat the exam (N=84, sem 1, 2004)
• Pass rates
  – Quizzes: pass rate 70% (distinction rate 43%)
    • Note: the 15% of students who attempted 5 or fewer quizzes all failed the quiz component
  – Unit: pass rate 81% (distinction rate 27%)
• 27% of students received a distinction for the unit
  – 87% of these also received a quiz distinction (ie 20 of 23 students)
• 19% of students failed the unit
  – 75% of these also failed the quizzes (ie 12 of 16 students)
  – Highest quiz mark for a failing student was 58%
    • Below the median quiz mark
• Quizzes as a good indicator of success and failure
  – Use for early intervention!?

Student evaluation of quizzes
• Students were extremely positive (% agreement; respondents N=34 with at least 6 or 7 quizzes).
  Survey conducted in the penultimate teaching week:
  – Were easy to access (97%)
  – Were relevant to the unit content (97%)
  – Improved my understanding of physics (94%)
  – Provided me with necessary practice in solving physics problems (91%)
  – Helped me develop problem solving skills (94%)
  – Detailed feedback was helpful (91%)
  – Overall, helped me learn physics (94%)
• Students normally accessed the quizzes from home (70%), and 91% of students thought that the number of quizzes (eight) was about right.

Assessment
• Assessment by online quizzes was fair and reasonable (71%)
• Future assessment
  – 71%: online quizzes only
  – 29%: combination of online quizzes and mid-sem test
• 62% prepared more thoroughly for the two average-mark quizzes

Detailed Feedback
• For my learning needs, the amount of feedback provided to assist me in solving the problem for myself was
  – About right: 67%
  – Not detailed enough: 33%
  – Consider a small increase for 2005?
• 81% found detailed feedback sometimes useful for correctly answered questions!
  – Not expected by the author, but interesting! Subsequent conversations revealed that students like to compare the lecturer's method (concepts used and their application) with their own, regardless of whether they answered the question correctly.

Open response questions
• Provided additional information on:
  – Preparation
  – Detailed feedback
  – Collaboration
  – Study habits

Preparation?
• Students listed a variety of ways in which they prepared for highest-mark quizzes, including
  – attending lectures
  – reading through lecture notes
  – reading chapters or chapter summaries in the textbook
  – doing set problems
  – and even reading the formula sheet.
• A significant minority were not well prepared, including one student whose preparation was: "Nothing, I would waste the first go & work backwards from the solution"
  – unlikely to result in any meaningful learning
  – unlikely to improve their exam score.

Detailed feedback?
• How did students use detailed feedback?
  Three examples:
  – "To change the way I look at the questions"
  – "To try and understand the concepts, but sometimes it wasn't enough"
  – "I used it as a guide to lookup certain information in the book and notes. I found it very good"
• Student usage went beyond just getting the correct answer.
  – Author was surprised and pleased!

Collaboration?
• 44% provided help to other students
• 36% received help from other students
• Some students seemed somewhat insulted by these questions!?
• Collaboration ranged from "nothing" or "verbal" to substantial. Consider two substantial examples:
  1. "We all worked out an answer, & compared the way we did it & the answers we got. It was through this that we were able to come to grips with theories"
  2. "Group work. Do the quizzes together and work together to a solution"

FLAG: Should the collaboration described above (considering example 1 & example 2 separately) be:
  1. Encouraged (peer learning)
  2. Neutral
  3. Discouraged (it's cheating!)

Further thoughts on collaboration
• Students already collaborate in class time
  – Labs: experiments done in small groups
  – Lectures: short small-group discussions on (mainly conceptual) questions
• Collaboration/group work/peer learning
  – Necessary part of the curriculum
  – Supported by a large body of literature & resources (eg Mazur, McDermott etc)
• Attitudes changed over time
  – Individual with group support now OK
• Original objective was to replace the mid-semester test (20% assessment)
  – As long as individual learning occurs and each actual quiz attempt is done by the student logged on to the computer
    • Delete second part of condition?? Students spending time interacting with and discussing physics is always a good thing!?

Study habits?
• Students fairly positive here:
  – Helped me work more consistently over the semester (85%)
  – "The quizzes made sure that we were studying without forcing us to study"
• The quizzes encouraged and influenced both study at and away from the computer.
  – Consider preparation for quizzes reported by students.

Conclusions
• Provide opportunities to learn through
  – Detailed feedback
  – Formative assessment
  – Collaboration
• Influence study habits
  – Help students work consistently over the semester
  – Flexible time and place
• Overall help students learn physics (94%)
• ECU evaluation (UTEI): BIG increase in overall satisfaction for the unit. Partly due to quizzes?
  – From 64% (N=28) in 2003 to 91% (N=45) in 2004
    • Agree or strongly agree on a 5-point scale (ie neutral counts as not satisfied)
• Online quizzes have made a difference
  – Adapted for second-semester unit: Waves and Electricity 2004
  – Continue for 2005…

FURTHER INFORMATION?
• Contact
  – Dr Geoff Swan
  – Physics Program, SOEM
  – Edith Cowan University
  – 100 Joondalup Drive
  – Joondalup WA 6027
  – Tel: +61 08 6304 5447
  – Email: g.swan@ecu.edu.au
• Quiz site at http://thomson4.webct.com/public/swanserwaycowan/index.html (login and password "ecuguest")

Discussion?
• How might these online quizzes be improved?
  – Group work vs individual
  – Context: questions in the quizzes are mostly decontextualised. Is there a pedagogical justification? (eg can reality be a distracter to learning physics?)
• How might resources like this be shared between institutions? (AUTC report recommendation)
• Students' study habits and online quizzes
  – Is it our business to design curriculum to encourage good study habits and/or teach study techniques?
  – Are we responsive enough to competing demands on students who often combine uni & work & family? (AUTC report recommendation)

Paper references
Gordon, J.R., McGrew, R., & Serway, R.A. (2004). Student Solutions Manual & Study Guide to accompany Volume 1, Physics for Scientists and Engineers (6th ed.). Belmont: Brooks/Cole.
Honey, M., & Marshall, D. (2003). The impact of on-line multi-choice questions on undergraduate student learning. In G. Crisp, D. Thiele, I. Scholten, S. Barker & J. Baron (Eds.), Interact, Integrate, Impact: Proceedings of the 20th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education. Adelaide, 7-10 December 2003.
McInnis, C., James, R., & McNaught, C. (1995). First year on campus: Diversity in the initial experiences of Australian undergraduates. Canberra: Australian Government Publishing Service.
Serway, R.A., & Jewett, J.W. (2004). Physics for Scientists and Engineers with Modern Physics (6th ed.). Belmont: Brooks/Cole.
Swan, G.I. (2003). Regular motion. In G. Crisp, D. Thiele, I. Scholten, S. Barker & J. Baron (Eds.), Interact, Integrate, Impact: Proceedings of the 20th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education. Adelaide, 7-10 December 2003.
Thelwall, M. (2000). Computer-based assessment: a versatile educational tool. Computers & Education, 34, 37-49.
Thomson Learning (2004). WebCT server login page. Thomson Learning. Available: http://thomson4.webct.com/public/swanserwaycowan/index.html [July 2004]
Additional: Peer Instruction by Mazur. Tutorials in Introductory Physics by McDermott & Shaffer.

SCP1112: Student usage and feedback
• SCP1112 Waves and Electricity: Semester 2, 2001
• Usage (5 full & 1 half quiz)
  – Of 73 students who attempted the SCP1112 exam
    • 32 (44%) attempted 3 or more full quizzes
    • 25 (34%) attempted 1 or 2 full quizzes
    • 16 (22%) did not attempt any quiz (for these students the unit failure rate was > 4 times that of other students)
• Feedback
  – Large majority extremely positive (mid-sem and end-sem)
    • Easy to use, relevant, improved understanding etc
    • Feedback detail about right (a few students wanted more detail!)
  – More divided on
    • Future compulsory assessment & no. of quizzes
    • Helping to work more consistently over the semester