gatech - FTP Directory Listing

advertisement

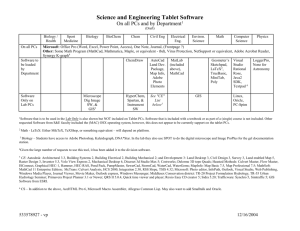

EAS Seminar, Georgia Tech
Stephen McIntyre, Toronto, Ontario
Atlanta, Feb 8, 2008

An interesting ride
Left: Front page, Wall Street Journal, Feb 2005. Middle: House Energy and Commerce Committee, Oversight and Investigations Subcommittee: Mann, Ralph Cicerone (NAS), me, Jay Gulledge, Ed Wegman. Right: Best Science Blog 2007.

Conclusions
- No “meaningful” conclusion about modern versus medieval climate can be derived from the existing corpus of 1000-year studies; which is warmer remains an open question.
- The main problem is not finding the “right” multivariate method, but getting better proxies and better local data.

Some Disclaimers
- I’ve never asserted or implied that AGW policy stands or falls on the HS.
- I’ve never suggested that perfect certainty is needed to make climate decisions. People make decisions under uncertainty all the time.
- I’ve avoided all policy discussions other than archiving. If I had a big policy job, I would be guided by official institutions.
- I’ve never suggested that any of the issues I’ve been involved in should affect climate policy decisions.
- Conversely, the “big picture” doesn’t excuse using poor methods or poor disclosure.
- Even if the HS is irrelevant to climate policy, I’ve become interested in the statistical and historical issues in 1000-year climate reconstructions.

The Hockey Stick
Left: John Houghton at the IPCC TAR WG1 press conference. Right: Al Gore, An Inconvenient Truth.

The iconic hockey stick

“The Warmest Decade and Year”
“The past decade was the world's warmest decade of the century. And that century was the warmest of the past millennium. Without action, the long-term consequences will be devastating.” – David Anderson, Oct. 27, 2001

“The 20th century was the warmest in the Northern Hemisphere in the past 1000 years. The 1990s was the warmest decade on record and 1998 was the warmest year - in Canada and internationally.”
– David Anderson, April 5, 2002

“The 20th century was the warmest in the Northern Hemisphere for the past 1000 years and the 1990s the warmest decade on record... The science of climate change has been subjected to international scrutiny, open to all qualified experts, peer review, atmospheric modeling and process studies” – Liberal Caucus, Aug. 22, 2002

“Forgotten the Location”

Dear Dr. Mann,
I have been studying MBH98 and 99. I located datasets for the 13 series used in 99 at ftp://eclogite.geo.umass.edu/pub/mann/ONLINEPREPRINTS/Millennium/DATA/PROXIES/ (the convenience of the ftp: location being excellent) and was interested in locating similar information on the 112 proxies referred to in MBH98, as well as listing (the listing at http://www.ngdc.noaa.gov/paleo/ei/data_supp.html is for 390 datasets, and I gather/presume that many of these listed datasets have been condensed into PCs, as mentioned in the paper itself. Thank you for your attention.
Yours truly,
Stephen McIntyre, Toronto, Canada

Dear Mr. McIntyre,
These data are available on an anonymous ftp site we have set up. I've forgotten the exact location, but I've asked my Colleague Dr. Scott Rutherford if he can provide you with that information.
best regards,
Mike Mann

Steve,
The proxies aren't actually all in one ftp site (at least not to my knowledge). I can get them together if you give me a few days. Do you want the raw 300+ proxies or the 112 that were used in the MBH98 reconstruction?
Scott

MBH98
“These $N_{eofs}$ eigenvectors were trained against the $N_{proxy}$ indicators, by finding the least-squares optimal combination of the $N_{eofs}$ PCs represented by each individual proxy indicator during the N=79 year training interval from 1902 to 1980 (the training interval is terminated at 1980 because many of the proxy series terminate at or shortly after 1980).… This proxy-by-proxy calibration is well posed (that is, a unique optimal solution exists) as long as $N > N_{eofs}$ (a limit never approached in this study) and can be expressed as the least-squares solution to the overdetermined matrix equation $Ug = Y[,i]$, where $U$ is the matrix of annual PCs, and $Y[,i]$ is the time series vector for proxy record $i$. The $N_{eofs}$-length solution vector $g$ is obtained by solving the above overdetermined optimization problem by singular value decomposition for each proxy record $i = 1,\dots,N_{proxy}$. This yields a matrix of coefficients relating the different proxies to their closest linear combination of the $N_{eofs}$ PCs. This set of coefficients will not provide a single consistent solution, but rather represents an overdetermined relationship between the optimal weights on each on the $N_{eofs}$ PCs and the multiproxy network.”

“Statistical Skill”
IPCC TAR: “reconstruction which had significant skill in independent cross-validation tests. Self-consistent estimates were also made of the uncertainties”
MBH98: “β [or RE] … correlation (r) and squared correlation (r²) statistics are also determined.”

“Robustness” to presence/absence of dendro proxies
Mann et al. 2000: “possible low-frequency bias due to non-climatic influences on dendroclimatic (tree-ring) indicators is not problematic in our temperature reconstructions… Whether we use all data, exclude tree rings, or base a reconstruction only on tree rings, has no significant effect on the form of the reconstruction for the period in question.”
MBH98: “the long-term trend in NH is relatively robust to the inclusion of dendroclimatic indicators in the network, suggesting that potential tree growth trend biases are not influential in the multiproxy climate reconstructions.” (p. 783, emphasis added.)

MM2003, 2005a,b,c,d
- The MBH principal components algorithm was severely biased such that it “mined” for HS-shaped data. This precluded attributing any statistical significance to such a reconstruction.
- Their reconstruction failed important statistical tests in early portions, e.g. the verification r² statistic shown in one of their figures. These adverse results were not reported.
- We reflected on what it means when one statistic is “99.9% significant” and another statistic is a bust.
- Their reconstruction was not “robust” to the presence/absence of all dendro proxies. It was not even robust to the presence/absence of bristlecones.
- Bristlecones are located only in the high arid U.S. Southwest and had previously been identified by specialists as problematic, e.g. Biondi (Hughes) et al. (1999) said: “[Bristlecones] are not a reliable temperature proxy for the last 150 years”. Without bristlecones, no HS.
- Simply using a standard covariance PC algorithm reduced the weights of the bristlecones so much that no HS was obtained.

Red Noise Simulations
Data decentered against the post-1902 mean. This preferentially adds weight to hockey-stick-shaped series in PC1.

The counterattack
Mann: This claim by MM is just another in a series of disingenuous (off the record: plainly dishonest) allegations by them about our work. I hope you are not fooled by any of the “myths” about the hockey stick that are perpetuated by contrarians, right-wing think tanks, and fossil fuel industry disinformation.
UCAR Press Release: “the highly publicized criticisms of the MBH graph are unfounded.”

The Defense
Our criticisms were “wrong” because:
- They could get an HS without using principal components.
- If they retained more PCs (down to PC4), they could still “get” an HS.
- Other people got an HS using different methods.

In MM (EE 2005) we discussed these permutations and combinations. Yes, you can “get” an HS by changing your methods, but each of these other methods has its own problems. Wegman was very critical of after-the-fact methodology changes.

After Wahl and Ammann grudgingly confirmed the verification r² failure, they argued that the verification r² statistic was no good, as it was supposedly prone to rejecting valid reconstructions. Mann to the NAS Panel: calculating a verification r² statistic would be a “foolish and incorrect thing” to do.

Wahl and Ammann agreed that you can’t “get” an HS without the bristlecones, but argued that a bristlecone-free reconstruction fails the verification RE statistic (and would never have been proposed), and thus claimed that bristlecones contain valid and necessary information.

Proposal to Ammann and Wahl
In my view, the climate science community has little interest at this point in another exchange of controversial articles (and associated weblog commentaries) and has far more interest in the respective parties working together to provide a joint paper, which would set out: (1) all points on which we agree; (2) all points on which we disagree and the reasons for disagreement; (3) suggested procedures by which such disagreements can be resolved. Because our emulations are essentially identical, I think that there is sufficient common ground that the exercise would be practical, as well as desirable.
Accordingly I propose the following:
(1) we and our coauthors (McKitrick and Wahl) attempt to produce a joint paper in which the above three listed topics are discussed;
(2) we allow ourselves until February 28, 2006 to achieve an agreed text for submission to an agreed journal (Climatic Change or BAMS, for example, would be fine with us), failing which we revert back to the present position;
(3) as a condition of this “ceasefire”, both parties will put any submissions or actions on hold. On your part, you would notify GRL and Climatic Change of a hold until Feb. 28, 2005. On our part, we would refrain from submitting response articles to GRL or Climatic Change or elsewhere and refrain from blog commentary on the topic.

Wegman Report 2006
The debate over Dr. Mann’s principal components methodology has been going on for nearly three years. When we got involved, there was no evidence that a single issue was resolved or even nearing resolution. Dr. Mann’s RealClimate.org website said that all of the Mr. McIntyre and Dr. McKitrick claims had been ‘discredited’. UCAR had issued a news release saying that all their claims were ‘unfounded’. Mr. McIntyre replied on the ClimateAudit.org website. The climate science community seemed unable to either refute McIntyre’s claims or accept them. The situation was ripe for a third-party review of the types that we and Dr. North’s NRC panel have done.

While the work of Michael Mann and colleagues presents what appears to be compelling evidence of global temperature change, the criticisms of McIntyre and McKitrick, as well as those of other authors mentioned are indeed valid.

I am baffled by the claim that the incorrect method doesn’t matter because the answer is correct anyway. Method Wrong + Answer Correct = Bad Science.
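The red-noise slide attributes the PC1 bias to centering the data on the post-1902 (calibration-period) mean rather than on the full-period mean. What follows is a minimal sketch of that mechanism, using two made-up toy series rather than MBH98 data. Subtracting a short-segment mean $m_s$ instead of the full mean $m$ adds exactly $n(m - m_s)^2$ to a series' sum of squares. A series whose calibration-period level departs from its long-term level therefore looks more "variable". The function names are hypothetical.

```python
def mean(xs):
    return sum(xs) / len(xs)


def sum_sq_about(xs, center):
    """Sum of squared deviations of xs about an arbitrary center."""
    return sum((x - center) ** 2 for x in xs)


def centered_vs_short_centered(xs, calib_len):
    """Sum of squares about the full-period mean vs. about the mean of the
    last calib_len values (the 'short' or decentered convention)."""
    return sum_sq_about(xs, mean(xs)), sum_sq_about(xs, mean(xs[-calib_len:]))


# Toy series: 10 "years", with the last 4 playing the calibration period.
flat = [1, -1, 1, -1, 1, -1, 1, -1, 1, -1]  # same mean in both windows
hockey = [0, 0, 0, 0, 0, 0, 2, 2, 2, 2]     # shifted level at the end

for name, series in [("flat", flat), ("hockey", hockey)]:
    full_ss, short_ss = centered_vs_short_centered(series, calib_len=4)
    print(f"{name}: centered SS = {full_ss:.2f}, short-centered SS = {short_ss:.2f}")
# flat: centered SS = 10.00, short-centered SS = 10.00
# hockey: centered SS = 9.60, short-centered SS = 24.00

# The extra term is exactly n * (full mean - short mean)^2.
n, m, m_s = len(hockey), mean(hockey), mean(hockey[-4:])
assert abs(sum_sq_about(hockey, m_s) - (sum_sq_about(hockey, m) + n * (m - m_s) ** 2)) < 1e-9
```

PCA on the short-centered matrix ranks directions by these sums of squares, together with the cross-products. As a result, the level-shifted series tends to receive more weight in PC1 than it would under conventional centering. In this toy case it moves from slightly below the flat series to well above it.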
NAS Panel – “Schizophrenic”
- Agreed with our point that the MBH PC method was wrong.
- Agreed that bristlecones were problematic and said that “strip bark” trees should be “avoided” in temperature reconstructions.
- Agreed in general terms with our statistical criticisms: “Reconstructions that have poor validation statistics (i.e., low CE) will have correspondingly wide uncertainty bounds, and so can be seen to be unreliable in an objective way.”
- On no occasion did they contradict any explicit MM statement.

NAS Panel
- Concluded that things were “murky” as you went further back.
- Overall result was “plausible”, showing other recons.

North at the House Committee
CHAIRMAN BARTON. Dr. North, do you dispute the conclusions or the methodology of Dr. Wegman’s report?
DR. NORTH. No, we don’t. We don’t disagree with their criticism. In fact, pretty much the same thing is said in our report.
DR. BLOOMFIELD. Our committee reviewed the methodology used by Dr. Mann and his coworkers and we felt that some of the choices they made were inappropriate. We had much the same misgivings about his work that was documented at much greater length by Dr. Wegman.

Zorita on the NAS Panel
In my opinion the Panel adopted the most critical position to MBH nowadays possible. I agree with you that it is in many parts ambivalent and some parts are inconsistent with others. It would have been unrealistic to expect a report with a summary stating that MBH98 and MBH99 were wrong (and therefore the IPCC TAR had serious problems) when the Fourth Report is in the making. I was indeed surprised by the extensive and deep criticism of the MBH methodology in Chapters 9 and 11.
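The NAS quote ties reliability to validation statistics such as CE, and the verification r² dispute runs through the preceding slides. Below is a minimal sketch of the three statistics, using the definitions as commonly stated in this literature (an assumption; all sums run over the verification period). RE benchmarks the errors against the calibration-period mean, and CE benchmarks them against the verification-period mean. The four-year "verification period" is made up. It is chosen to reproduce the pattern the slides report for the WA variations: high RE, ~0 verification r², negative CE.

```python
def mean(xs):
    return sum(xs) / len(xs)


def sse(obs, pred):
    """Sum of squared errors between observations and predictions."""
    return sum((o - p) ** 2 for o, p in zip(obs, pred))


def re_stat(obs, pred, calib_mean):
    """Reduction of error: skill relative to predicting the calibration mean."""
    return 1.0 - sse(obs, pred) / sum((o - calib_mean) ** 2 for o in obs)


def ce_stat(obs, pred):
    """Coefficient of efficiency: skill relative to the verification mean."""
    m = mean(obs)
    return 1.0 - sse(obs, pred) / sum((o - m) ** 2 for o in obs)


def r2_stat(obs, pred):
    """Squared Pearson correlation between observations and predictions."""
    mo, mp = mean(obs), mean(pred)
    cov = sum((o - mo) * (p - mp) for o, p in zip(obs, pred))
    var_o = sum((o - mo) ** 2 for o in obs)
    var_p = sum((p - mp) ** 2 for p in pred)
    return cov * cov / (var_o * var_p)


# Made-up numbers: calibration mean 0; the verification period sits near -1.
calib_mean = 0.0
obs = [-1.2, -0.8, -1.2, -0.8]
pred = [-1.1, -1.1, -0.9, -0.9]  # right level, wiggles unrelated to obs

print(f"RE = {re_stat(obs, pred, calib_mean):.3f}")  # RE = 0.952
print(f"r2 = {r2_stat(obs, pred):.3f}")              # r2 = 0.000
print(f"CE = {ce_stat(obs, pred):.3f}")              # CE = -0.250
```

RE looks excellent here only because its benchmark (the calibration mean of 0) is far from the verification level. CE and r² show that none of the year-to-year variation is captured.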
North’s Texas A&M Seminar
At a Texas A&M seminar, North said that they “didn’t do any research”, that they just “took a look at papers”, that they got 12 “people around the table” and “just kind of winged it” (http://www.met.tamu.edu/people/faculty/dessler/NorthH 264.mp4, minute 55 or so).
“We did not dissect each and every study in the report to see which trees were used.”

Why hasn’t this been settled?
- The issue isn’t important enough that it needs to be settled.
- Science may be “self-correcting” (so are markets). Market imperfections can continue uncorrected for some time, and so can science imperfections.
- There is still some intellectual work needed to isolate the issues more surgically than has been done to date.

Outline
- Reconstructions in a statistical context
- Econometric thoughts on spurious regression
- Some important data sets

A. Reconstruction Methods
Jones and Mann 2004:
Composite-plus-scale (CPS): proxies are normalized and averaged (perhaps with weights, “e.g., based on area represented or modern correlations with colocated instrumental records” [Jones and Mann 2004]); the average is then simply scaled against the available temporally overlapping instrumental record.
Climate Field Reconstruction (CFR): “Multivariate calibration of the large-scale information in the proxy data network against the available instrumental data”; “most involve the use of empirical eigenvectors of the instrumental data, the proxy data, or both”; “Because the large-scale field is simultaneously calibrated against the full information in the network, there is no a priori local relationship assumed between proxy indicator and climatic variable.”

Multivariate Calibration: Cook (Briffa, Jones) 1994
Considers “inverse” OLS regression of each gridcell in a network X (MBH98: 1082 gridcells) against a network of proxies Y (MBH98: 415 proxies). Applying this to the MBH98 network would generate 1082 × 415 = 449,030 coefficients from only 79 × 415 = 32,785 proxy measurements in the calibration period.
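The parameter count in the Cook (Briffa, Jones) 1994 example is simple arithmetic. The quick check below uses the MBH98 sizes quoted above. The last check adds one inference from the same numbers: each single-gridcell regression would have 415 unknown coefficients but only 79 calibration years, so taken alone it has no unique OLS solution.

```python
# Sizes quoted for the MBH98 network.
n_gridcells = 1082   # temperature gridcells (network X)
n_proxies = 415      # proxy series (network Y)
n_calib_years = 79   # 1902-1980 calibration period

coefficients = n_gridcells * n_proxies    # one coefficient per proxy per gridcell
proxy_values = n_calib_years * n_proxies  # proxy measurements in calibration

print(f"{coefficients:,} coefficients from {proxy_values:,} proxy measurements")
# 449,030 coefficients from 32,785 proxy measurements
print(f"{coefficients / proxy_values:.1f} coefficients per measurement")
# 13.7 coefficients per measurement

# Each gridcell regression alone: 415 unknowns but only 79 observations.
underdetermined = n_proxies > n_calib_years
print("single-gridcell regression underdetermined:", underdetermined)
# single-gridcell regression underdetermined: True
```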
Cook et al. observed: “experience in reconstructing climate from tree rings indicates that such models frequently produce reconstructions that cannot be verified successfully when compared with climate data not used in estimating the regression coefficients. This can happen regardless of the statistical significance of the overall regression equation.”

MBH Method
1. Standardize gridcell temperatures by area-weighting and standard deviations.
2. Calculate temperature PCs.
3. Estimate “transfer coefficients” for proxies to PCs in the calibration period by “over-determined optimization”.
4. Estimate reconstructed PCs in the reconstruction period by “overdetermined optimization”.
5. Re-scale reconstructed PCs to observed PCs.
6. Expand back to gridcell temperatures.
7. Calculate the NH average temperature.

“Advantage” of MBH Method
“MBH98’s method yields an estimation of the value of the temperature PCs that is optimal for the set of climate indicators as a whole, so that the estimations of individual PCs cannot be traced back to a particular subset of indicators or to an individual climate indicator. This reconstruction method offers the advantage that possible errors in particular indicators are not critical, since the signal is extracted from all the indicators simultaneously.”

NH Composite: Linear Algebra
All the operations are linear and on the right of the reconstructed PCs. There is a simple expression for expanding and reflating the reconstructed PCs to the area-weighted NH average [equation not preserved]. Its terms are:
- Reconstructed NH temperature index
- Reconstructed TPCs
- Gridcell eigenvalues, eigenvectors (EOFs)
- Gridcell standard deviations
- Gridcell area weights

These are the weights (λ) for each reconstructed temperature PC in the NH temperature reconstruction.

All operations on reconstructed temperature PCs are linear.
“Optimizations” are Regressions
Calibration, reconstruction and rescaling in the 1-D case (AD1000, AD1400 steps) [equations not preserved]. Terms:
- Matrix of temperature PCs (individual PC)
- Matrix of proxies (standardized)
- Rescaled estimated PCs; pre-rescaled PC estimates

Arbitrary MBH weights; correlation to temperature PC1
All MBH operations are linear operations on the right-hand side of the proxy matrix Y. Because matrix multiplication is associative, weights can be assigned to each individual proxy [equation not preserved]. For the one-PC case (AD1000, AD1400), weighted and unweighted forms are shown; S, V are from the temperature SVD, and σ, μ are the gridcell standard deviations and cosine-latitude area weights.

Weights can be assigned for proxies within PC networks as well
Regional tree ring PCs are linear combinations of the underlying tree ring network (here X). The mixed MBH proxy network (“regular” proxies and tree ring PCs) can be represented as follows [equation not preserved]; the dimension of $V_{,k}$ is (say) 79 × 2 (for 79 tree rings and 2 PCs).

Contributions by proxy type can be calculated
By allocating weights to individual sites and classifying sites by continent and type (ice core, bristlecone, other trees, coral, etc.), the relative contribution of each class to the MBH reconstruction can be measured (bristlecone plus Gaspé in red). (Figure units: deg C.)

Weights can be shown graphically
Prominent weights are for the NOAMER PC1 (bristlecones), Gaspé, Tornetrask and Cook’s Tasmania tree ring. There are 22 weights in this picture in total.

Keynes 1940 on Tinbergen (anticipating RegEM?)
But my mind goes back to the days when Mr Yule sprang a mine under the contraptions of optimistic statisticians by his discovery of spurious correlation. In plain terms, it is evidence that if what is really the same factor is appearing in several places under various disguises, a free choice of regression coefficients can lead to strange results.
It becomes like those puzzles for children where you write down your age, multiply, add this and that, subtract something else and eventually end up the number of the Beast in Revelation.

Different weights yield different reconstructions
Plausible ex ante methods yield different weights and results (Burger and Cubasch 2006).

MBH and Scaled-Composite Reconstructions
Standardize, average, and inflate so that the variance matches the “target variance”. Panels: with correlation weights; MBH (AD1000, AD1400 steps).

CPS with Correlation Weights = One-Stage Partial Least Squares
Bair et al. 2004.

OLS and PLS
OLS regression coefficients are a rotation (and dilation) of the PLS coefficients in coefficient space.

From the Frying Pan into the Fire
Where there is little correlation between proxies, the rotation matrix is “near-orthogonal” and PLS increasingly approximates OLS.

Phillips 1998
Figure 4: $x^2$ on the interval $-\pi$ to $\pi$, repeated periodically; the regression uses 1000 observations and 125 white-noise regressors.

Wahl and Ammann’s no-PC case: a “near”-OLS regression 79 years long against 65–90 “predictors”. Calibration residuals are meaningless. (Figure units: deg C.)

If you insert synthetic HS series plus low-order red noise, you get recons that look like the “no-PC” recons
Magenta – WA; Black – two synthetic HS series plus 68 red noise series. The statistical pattern is identical to MBH under the WA variation: high RE, high calibration r²; ~0 verification r²; negative CE. Similar examples were used in the Reply to Huybers. (Figure units: deg C.)

You can get the same verification results using tech stocks instead of the Bristlecone PC1+Gaspé.
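The slides argue that in the one-PC steps (AD1000, AD1400), the two MBH "optimizations" are ordinary least-squares regressions. On that reading, their net effect is a composite weighted by each proxy's correlation with the temperature PC, that is, CPS with correlation weights (one-stage PLS). Below is a minimal sketch of that algebra on made-up random data. It assumes the PC and the proxies are standardized over the calibration window (population sd), and that rescaling only matches the calibration-period sd.

Under those assumptions, the calibration coefficient for proxy $j$ is $g_j = (u \cdot y_j)/(u \cdot u)$, which equals its calibration correlation $r_j$. The reconstruction for year $t$ is then $\sum_j g_j y_{tj} / \sum_j g_j^2$. All function names are hypothetical; this is not the MBH98 code.

```python
import math
import random


def mean(xs):
    return sum(xs) / len(xs)


def sd(xs):
    """Population standard deviation."""
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def standardize(xs, window):
    """Center and scale a whole series using the mean and sd of xs[window]."""
    m, s = mean(xs[window]), sd(xs[window])
    return [(x - m) / s for x in xs]


def correlation(a, b):
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def mbh_one_pc(u_cal, proxies, cal):
    """Two least-squares steps for a single temperature PC (toy version)."""
    # Calibration: fit y_j ~ g_j * u over the calibration window, per proxy.
    g = [dot(u_cal, y[cal]) / dot(u_cal, u_cal) for y in proxies]
    # Reconstruction: per year t, u_hat_t minimizes sum_j (y_tj - g_j * u_hat_t)^2.
    gg = dot(g, g)
    return [dot(g, [y[t] for y in proxies]) / gg for t in range(len(proxies[0]))]


def cps_correlation_weighted(u_cal, proxies, cal):
    """Composite of proxies weighted by calibration correlation with the PC."""
    r = [correlation(u_cal, y[cal]) for y in proxies]
    return [dot(r, [y[t] for y in proxies]) for t in range(len(proxies[0]))]


def rescale(series, cal, target_sd):
    """Scale a series so that its calibration-window sd equals target_sd."""
    k = target_sd / sd(series[cal])
    return [k * x for x in series]


# Hypothetical toy network: 200 "years", of which the last 79 are calibration.
rng = random.Random(0)
n_years, n_cal, n_proxies = 200, 79, 6
cal = slice(n_years - n_cal, n_years)
u_cal = standardize([rng.gauss(0, 1) for _ in range(n_cal)], slice(0, n_cal))

proxies = []
for j in range(n_proxies):
    strength = 0.5 * (j + 1) / n_proxies  # made-up signal strengths
    early = [rng.gauss(0, 1) for _ in range(n_years - n_cal)]
    late = [strength * u + rng.gauss(0, 1) for u in u_cal]
    proxies.append(standardize(early + late, cal))

mbh = rescale(mbh_one_pc(u_cal, proxies, cal), cal, sd(u_cal))
cps = rescale(cps_correlation_weighted(u_cal, proxies, cal), cal, sd(u_cal))
max_diff = max(abs(a - b) for a, b in zip(mbh, cps))
print(f"largest difference between the two reconstructions: {max_diff:.1e}")
```

In this toy setting, the two composites differ only by the positive factor $1/\sum_j g_j^2$, and rescaling to a target variance removes it. The printed difference is therefore at floating-point rounding level. What determines the result is the choice of weights, not the "optimization" machinery.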
(Figure units for the tech-stocks comparison: deg C.)

Ridge Regression is not a magic bullet

Stone and Brooks 1990 “Continuum Regression”: ridge regression coefficients can be arranged as a one-parameter “continuum” between OLS and PLS.

Borga et al. 2000 Taxonomy
Solutions to [equation not preserved] for the cases below. Two-parameter mixing: you can do one-parameter mixing of [matrix not preserved] and the identity matrix to get to CCA (Canonical Correlation Analysis).

OLS gets “cute” with coefficients, with values all over the place. In this network, simple is better.

How do you test reconstructions? The problem of “spurious regression”
Is there a valid statistical relationship between the “climate field” (the temperature PC1) and bristlecones?

Yule 1926: this is RE-resistant
Mortality per 1000 (points) and proportion of Church of England marriages per 1000 marriages (line).

Hendry’s “Theory of Inflation” 1980
Hendry’s theory of inflation is that a certain variable (of great interest in this country) is the “real cause” of rising prices. … there is a “good fit”, the coefficients are “significant”, but autocorrelation remains and the equation predicts badly. Assuming a first order autoregressive error process, the fit is spectacular, the parameters are “highly significant”, there is no obvious residual autocorrelation (on an “eyeball” test and the predictive test does not reject the model. …C is simply cumulative rainfall in the UK. It is meaningless to talk about “confirming theories” when spurious results are so easily obtained. Doubtless some equations extant in econometric folklore are little less spurious than those I have presented.

Granger and Newbold 1974
“It is very common to see reported in applied econometric literature time series regression equations with an apparently high degree of fit, as measured by the coefficient of multiple correlation R² but with an extremely low value for the Durbin-Watson statistic.
We find it very curious that whereas every textbook on econometric methodology contains explicit warnings of the dangers of autocorrelated errors, this phenomenon crops up so frequently in well-respected applied work.... … It has been well known for some time now that if one performs a regression and finds the residual series is strongly autocorrelated, then there are serious problems in interpreting the coefficients of the equation. Despite this, many papers still appear with equations having such symptoms and these equations are presented as though they have some worth. It is possible that earlier warnings have been stated insufficiently strongly. From our own studies we would conclude that if a regression equation relating economic variables is found to have strongly autocorrelated residuals, equivalent to a low Durbin-Watson value, the only conclusion that can be reached is that the equation is mis-specified, whatever the value of R² observed.”

Canonical reconstructions fail
All multiproxy reconstructions, except MBH99, fail the Durbin-Watson statistic (minimum 1.5). Passing a DW test is a necessary but not sufficient test of model validity.
[Figure: Durbin-Watson statistic (axis 0.0–2.0) and cross-validation R² (axis 0.0–0.8) for J98, MBH99, MJ03, CL00, BJ00, BJ01, Esp02, Mob05.]

Ferson et al. 2003
Data mining for predictor variables [proxies] interacts with spurious regression bias. The two effects reinforce each other because more highly persistent series are more likely to be found significant in the search for predictor variables. Our simulations suggest that many of the regressions in the literature, based on individual predictor variables, may be spurious… The pattern of evidence in the instruments in the literature is similar to what is expected under a spurious mining process with an underlying persistent expected return. In this case, we would expect instruments to arise, then fail to work out of sample.
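The Granger-Newbold warning and the Durbin-Watson slide can be illustrated with a short simulation in the spirit of Granger and Newbold 1974. The idea is to regress one random walk on another, independently generated random walk, then look at R² and at the DW statistic of the residuals. This is a minimal sketch: the seed, series length and number of trials are arbitrary choices. The 1.5 cutoff is the slide's rule of thumb rather than a formal test, since formal DW tests use tabulated lower and upper bounds. DW values near 2 indicate no first-order autocorrelation; values near 0 indicate strong positive autocorrelation.

```python
import random


def ols_fit(x, y):
    """Simple OLS of y on x with an intercept; returns (intercept, slope, residuals)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    return intercept, slope, resid


def r_squared(y, resid):
    my = sum(y) / len(y)
    return 1.0 - sum(e * e for e in resid) / sum((yi - my) ** 2 for yi in y)


def durbin_watson(resid):
    """Sum of squared successive residual differences over the residual sum of squares."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    return num / sum(e * e for e in resid)


def random_walk(rng, n):
    level, path = 0.0, []
    for _ in range(n):
        level += rng.gauss(0, 1)
        path.append(level)
    return path


rng = random.Random(1974)
results = []
for trial in range(5):
    x, y = random_walk(rng, 100), random_walk(rng, 100)  # independent by construction
    _, slope, resid = ols_fit(x, y)
    r2, dw = r_squared(y, resid), durbin_watson(resid)
    results.append((r2, dw))
    print(f"trial {trial}: slope = {slope:+.2f}, R2 = {r2:.2f}, DW = {dw:.2f}, "
          f"{'passes' if dw >= 1.5 else 'fails'} DW >= 1.5")
```

Independent random walks typically leave residuals with DW far below 2, often alongside R² values that would look meaningful in isolation. The exact numbers depend on the seed.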
Greene et al 2000
From this perspective data-mining refers to invalid statistical testing as a result of naive over-use of a sample. In particular, the use of a sample both for learning-inspiration and for testing of that which was learned or mined from the sample. Any test of a theory or model is corrupted if the test is conducted using data which overlaps that of any previous empirical study used to suggest that theory or model. The moral is clear.

The same proxies are used over and over again. Two problems:
- severe data mining renders statistical testing meaningless;
- lack of independence between data sets makes multiple reconstructions vulnerable to data problems.

IPCC Box 6.4 Figure 1
The most stylized and repetitively data-mined series are shown in IPCC AR4 Box 6.4 Figure 1. Mann’s incorrectly calculated PC1 is even shown.

Greene et al 2000 #3
But testing in un-mined data sets is a difficult standard to meet only to the extent one is impatient. There is a simple and honest way to avoid invalid testing. To be specific, suppose in 1980 one surveys the literature on money demand and decides the models could be improved. File the proposed improvement away until 2010 and test the new model over data with a starting date of 1981. Only new data represents a new experiment. I do not consider this a pessimistic outlook. This is because I thinks much can be learned from exploring a sample. Patience and slow methodical progress are virtuous.

“Bring the Proxies Up to Date”
Michael Mann: “paleoclimatologists are attempting to update many important proxy records to the present, this is a costly, and labor-intensive activity, often requiring expensive field campaigns that involve traveling with heavy equipment to difficult-to-reach locations (such as high-elevation or remote polar sites).
For historical reasons, many of the important records were obtained in the 1970s and 1980s and have yet to be updated.”

The “Divergence” Problem
The graphs show results from the only large-population (387 sites) survey: Schweingruber sites chosen ex ante as temperature-sensitive. Left: Briffa et al 2001 reconstruction from 387 temperature-sensitive sites. Right: from Briffa et al 1998: heavy solid – MXD (used in Briffa et al 2001); dashed – RW; thin solid – temperature.

Briffa’s Cargo Cult
Briffa et al: In the absence of a substantiated explanation for the decline, we make the assumption that it is likely to be a response to some kind of recent anthropogenic forcing. On the basis of this assumption, the pre-twentieth century part of the reconstructions can be considered to be free from similar events and thus accurately represent past temperature variability.

Briffa et al 2001 was an IPCC TAR reconstruction, but the spaghetti graph does not show it reverting to early 19th century levels. IPCC truncated the Briffa et al 2001 reconstruction (green) in 1960, so there is no visible “divergence”. It is also truncated in AR4 (pale blue).

“Divergence” Problem – NAS and IPCC
NAS Panel: Cook et al. (2004), who subdivided long tree ring records for the Northern Hemisphere into latitudinal bands, and found …that “divergence” is unique to areas north of 55°N.
IPCC AR4: “‘divergence’ is apparently restricted to some northern, high-latitude regions, but it is certainly not ubiquitous even there…the possibility of investigating these issues further [a limit on the potential to reconstruct possible warm periods in earlier times] is restricted by the lack of recent tree ring data at most of the sites from which tree ring data discussed in this chapter were acquired.” (p. 473)

Updating Almagre: the Starbucks Hypothesis
Almagre CO (about 35 miles west of Colorado Springs CO) is a bristlecone pine site with a Graybill chronology going back to AD1000.
We took 64 cores (36 trees), of which 38 cores (20 trees) were at or near the Graybill site. Highest tree ring millennium chronology in the world!
http://picasaweb.google.com/Almagre.Bristlecones.2007/

Exact Graybill Trees
We located 16 tagged trees, of which 8 have been sampled. We reconciled the tags to the ITRDB archive (before co-operation ceased). Only 3 of the 8 sampled trees had been archived. It appears that Graybill sampled 42 trees, of which only 21 are archived. Reasons for the selective archiving are unknown at present.

Very Low Recent Growth in Many Trees
(Figure units: mm/100.)

Strip Bark Trees
The NAS panel said that strip bark trees should be “avoided”. However, this information is not recorded in bristlecone and foxtail (or other) archives. Graybill said that he sought out strip bark. (Figure units: mm/100.)

Bizarre Strip Bark Forms
Photo: Brunstein, C. USGS.

Updated Almagre Chronology
Decline in recent ring widths, which are obviously not at levels “teleconnecting” with high NH temperatures. The 1840s-50s are a very “loud” phenomenon in the chronology. (Figure in dimensionless chronology units, basis 1.)

Strip Bark at Graumlich Sites
Based on the plot, I asked Andrea Lloyd whether this tree, with the characteristic discrepancy between cores, was strip bark. She consulted her field notes; the answer was yes. (Figure units: mm/100.)

White Mts very arid

Ababneh Sheep Mt Update
Left: Graybill 1987 and Ababneh 2006 (PhD thesis) for Sheep Mountain (chronology units minus 1). Right: both re-scaled on 1902-1980, showing that Graybill dilates relative to Ababneh after the 1840s (s.d. units). Inset: weights of the Mann and Jones 2003 PC1, showing Sheep Mt dominance.

More Low Latitude “Divergence”
A statistician, considering this as an “out of sample” test of the hypothesis that there is a linear relationship between ring width and temperature, would conclude that this refutes the hypothesis. In the literature, this is referred to as the “Divergence Problem”.
(Figure units: sd.)

Miller et al 2006
Deadwood tree stems scattered above treeline on tephra-covered slopes of Whitewing Mtn (3051 m) and San Joaquin Ridge (3122 m) show evidence of being killed in an eruption from adjacent Glass Creek Vent, Inyo Craters. Using tree-ring methods, we dated deadwood to 815-1350 CE, and infer from death dates that the eruption occurred in late summer 1350 CE. Using contemporary distributions of the species, we modeled paleoclimate during the time of sympatry [the MWP] to be significantly warmer (+3.2 deg C annual minimum temperature) and slightly drier (-24 mm annual precipitation) than present.

Tornetrask: Grudd 2008 is a drastic revision of earlier chronologies. It questioned the standardization methods of prior studies.
Grudd Fig. 12: The thick blue curve is the new Tornetrask MXD low-frequency reconstruction of April–August temperatures, with a 95% confidence interval (grey shading) adopted from Fig. 5. The thin red curve is from Briffa et al. (1992); the hatched curve is from Grudd et al. (2002) and based on TRW.

Polar Urals
Left (red): Briffa et al (Nature 1995) reported that 1032 was the “coldest year of the millennium”. The 11th century was based on only a couple of poorly dated cores. A new tranche of data (green; 1998, in Esper et al 2002) showed a very warm 11th century, but Briffa never published this. Instead he did his own analysis on the Yamal site (right, red), about 100 miles away. As at Sheep Mt, there is dilation of post-mid-19th-century results in the Briffa version. Briffa’s Yamal version was used in all but one subsequent study without any attempt to reconcile it to the Polar Urals update. (Figure units: sd.)

Shiyatov and Naurzbaev
Shiyatov 1995: From the middle of the 8th to the end of the 13th, there was intense regeneration of larch and the timberline rose up to 340 a.s.l. The 12th and 13th centuries were most favorable for larch growth.
At this time the altitudinal position of the timberline was the highest, stand density the biggest, longevity of trees the longest, size of trees the largest, increment in diameter and height the most intensive as compared with other periods under review…
Naurzbaev et al 2004: Trees that lived at the upper (elevational) tree limit during the so-called Medieval Warm Epoch (from A.D. 900 to 1200) show annual and summer temperature warmer by 1.5 and 2.3 deg C, respectively, approximately one standard deviation of modern temperature. Note that these trees grew 150-200 m higher (1-1.2 deg C cooler) than those at low elevation but the same latitude, implying that this may be an underestimate of the actual temperature difference.

δ¹⁸O in Ice Cores: no global pattern
Guliya (3rd) is supposedly evidence of global warming, while Mount Logan is attributed to “regional circulation changes”. NAS Panel: “no Antarctic sites show medieval warming” – ahem, what about Law Dome? (All series centered on their mean.)

Dunde Ice Core: versions are inconsistent and sample data unarchived
How can interannual climate be calculated when one ice core yields a spaghetti graph? The purple series strongly influences the Yang composite, one of the extreme IPCC Box 6.4 series.

Question: is there a rational way of choosing (a) or (b)?
Left: NRC panel. Right: variations of standard reconstructions using the Polar Urals update instead of Yamal, Sargasso Sea SST instead of the G. bulloides wind speed proxy, and Yakutia instead of the problematic bristlecones/foxtails.

Econometric References: http://www.climateaudit.org/?page_id=2709

END