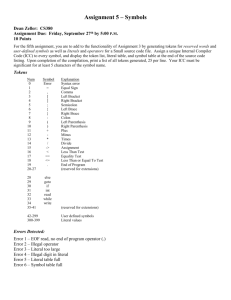

Vocabulary size and term distribution: tokenization, text normalization and stemming
Lecture 2

Overview
Getting started:
– tokenization, stemming, compounds
– end of sentence
Collection vocabulary
– Terms, tokens, types
– Vocabulary size
– Term distribution
– Stop words
Vector representation of text and term weighting

Tokenization
Friends, Romans, Countrymen, lend me your ears;
Friends | Romans | Countrymen | lend | me | your | ears

Token: an instance of a sequence of characters that are grouped together as a useful semantic unit for processing
Type: the class of all tokens containing the same character sequence
Term: a (normalized) type that is included in the system dictionary

The cat slept peacefully in the living room. It’s a very old cat.

Mr. O’Neill thinks that the boys’ stories about Chile’s capital aren’t amusing.
How to handle special cases involving apostrophes, hyphens, etc.?
– C++, C#, URLs, emails, phone numbers, dates
– San Francisco, Los Angeles

Issues of tokenization are language specific
– Requires the language to be known
Language identification based on classifiers that use short character subsequences as features is highly effective
– Most languages have distinctive signature patterns

Very important for information retrieval
Splitting tokens on spaces can cause bad retrieval results
– A search for York University returns pages containing New York University
German: compound nouns
– Retrieval systems for German greatly benefit from the use of a compound-splitter module
– Checks if a word can be subdivided into words that appear in the vocabulary
East Asian languages (Chinese, Japanese, Korean, Thai)
– Text is written without any spaces between words

Stop words
Very common words that have no discriminatory power
Building a stop word list
– Sort terms by collection frequency and take the most frequent
– In a collection about insurance practices, “insurance” would be a stop word
Why do we need stop lists
– Smaller indices for information retrieval
– Better approximation of importance for summarization etc.
Use problematic in phrasal searches

Trend in IR systems over time
– Large stop lists (200-300 terms)
– Very small stop lists (7-12 terms)
– No stop list whatsoever
The 30 most common words account for 30% of the tokens in written text
Good compression techniques for indices
Term weighting leads to very common words having little impact on document representation

Normalization
Token normalization
– Canonicalizing tokens so that matches occur despite superficial differences in the character sequences of the tokens
– U.S.A vs USA
– Anti-discriminatory vs antidiscriminatory
– Car vs automobile?

Normalization sensitive to query
Query term    Terms that should match
Windows       Windows
windows       Windows, windows, window
window        window, windows

Capitalization/case folding
Good for
– Allowing instances of Automobile at the beginning of a sentence to match a query of automobile
– Helping a search engine when most users type ferrari when they are interested in a Ferrari car
Bad for
– Proper names vs common nouns
– General Motors, Associated Press, Black
Heuristic solution: lowercase only words at the beginning of the sentence; true casing via machine learning
In IR, lowercasing is most practical because of the way users issue their queries

Other languages
60% of webpages are in English
– Less than one third of Internet users speak English
– Less than 10% of the world’s population primarily speak English
Only about one third of blog posts are in English

Stemming and lemmatization
organize, organizes, organizing
democracy, democratic, democratization
am, are, is → be
car, cars, car’s, cars’ → car
Stemming
– Crude heuristic process that chops off the ends of the words
– democratic → democa
Lemmatization
– Use of vocabulary and morphological analysis, returns the base form of a word (lemma)
– democratic → democracy
– sang → sing

Porter stemmer
Most common algorithm for stemming English
– 5 phases of word reduction
– SSES → SS: caresses → caress
– IES → I: ponies → poni
– SS → SS
– S → (removed): cats → cat
– EMENT → (removed), applied only when the remaining stem is long enough: replacement → replac, but cement → cement

Vocabulary size
Dictionaries
– 600,000+ words
But they do not include names of people, locations, products, etc.

Heaps’ law: estimating the number of terms
$M = kT^b$
– $M$: vocabulary size (number of terms)
– $T$: number of tokens
– $30 < k < 100$, $b \approx 0.5$
Linear relation between vocabulary size and number of tokens in log-log space: $\log M = \log k + b \log T$

Zipf’s law: modeling the distribution of terms
The collection frequency of the $i$th most common term is proportional to $1/i$
$cf_i \propto \frac{1}{i}$
If the most frequent term occurs $cf_1$ times, then the second most frequent term has half as many occurrences, the third most frequent term has a third as many, etc.
$cf_i = c\,i^k$
$\log cf_i = \log c + k \log i$, with $k = -1$

Problems with the normalization
A change in the stop word list can dramatically alter term weightings
A document may contain an outlier term
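
Worked example: tokens, types and terms
The distinction between tokens, types and terms can be checked on the cat example from the tokenization slides. This is a minimal sketch, not the lecture's own code: the regular expression and the helper names `tokenize` and `normalize` are assumptions made for illustration, and case folding stands in for fuller normalization.

```python
import re


def tokenize(text):
    """Split text into tokens: runs of letters/digits, keeping internal apostrophes."""
    # Assumed pattern: handles both straight (') and curly (’) apostrophes.
    return re.findall(r"[A-Za-z0-9]+(?:['’][A-Za-z0-9]+)*", text)


def normalize(token):
    """Case-fold a token (a stand-in for fuller normalization)."""
    return token.lower()


text = "The cat slept peacefully in the living room. It’s a very old cat."

tokens = tokenize(text)                  # every occurrence counts
types = set(tokens)                      # distinct character sequences
terms = {normalize(t) for t in tokens}   # normalized types

print(len(tokens), len(types), len(terms))  # 13 12 11
```

The two occurrences of "cat" collapse into one type, and case folding additionally merges "The" and "the" into one term.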
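
Worked example: first Porter rule group
The four suffix rules listed on the Porter stemmer slide (SSES → SS, IES → I, SS → SS, S → removed) can be sketched as below. This covers only that first rule group, not the full five-phase algorithm, and it leaves out the EMENT rule, which needs a stem-length condition. The function name `porter_step_1a` is a label chosen for illustration.

```python
def porter_step_1a(word):
    """Apply the first matching suffix rule, trying longer suffixes first."""
    rules = [
        ("sses", "ss"),  # caresses -> caress
        ("ies", "i"),    # ponies   -> poni
        ("ss", "ss"),    # caress   -> caress (blocks the plain "s" rule)
        ("s", ""),       # cats     -> cat
    ]
    for suffix, replacement in rules:
        if word.endswith(suffix):
            return word[: len(word) - len(suffix)] + replacement
    return word  # no rule applies


for w in ["caresses", "ponies", "caress", "cats"]:
    print(w, "->", porter_step_1a(w))
```

Ordering matters here: the SS → SS rule exists so that words ending in "ss" are not shortened by the plain S rule.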
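
Worked example: Heaps’ law and Zipf’s law
Both laws from the vocabulary size slides are straight lines in log-log space, which the following sketch makes concrete. The function names, the value k = 50 (picked from the stated range 30 < k < 100) and the synthetic frequencies are illustrative assumptions, not measurements from a real collection.

```python
import math


def heaps_vocabulary_size(num_tokens, k=50.0, b=0.5):
    """Estimate vocabulary size M = k * T^b (k and b are assumed defaults)."""
    return k * num_tokens ** b


def zipf_collection_frequency(cf1, rank):
    """Collection frequency of the rank-th most common term under cf_i = cf_1 / i."""
    return cf1 / rank


def loglog_slope(xs, ys):
    """Least-squares slope of log(y) against log(x)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    den = sum((a - mean_x) ** 2 for a in lx)
    return num / den


# Heaps' law: a collection of one million tokens, with the assumed k and b.
print(heaps_vocabulary_size(1_000_000))

# Zipf's law: synthetic frequencies for ranks 1..5, starting from cf_1 = 1000.
ranks = [1, 2, 3, 4, 5]
freqs = [zipf_collection_frequency(1000, r) for r in ranks]
print(loglog_slope(ranks, freqs))  # close to -1, the exponent k in cf_i = c * i^k
```

On real data the same slope fit would give an empirical estimate of the exponent, which can then be compared with the idealized values b ≈ 0.5 and k = −1.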