(44.171) 830 1007 UK 95-51

advertisement

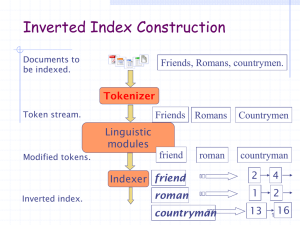

Statistical NLP: Lecture 6 Corpus-Based Work (Ch 4) Corpus-Based Work • Text Corpora are usually big. – Corpora 사용의 중요한 한계점으로 작용 – 대용량 Computer의 발전으로 극복 • Corpus-Based word involves collection a large number of counts from corpora that need to be access quickly • There exists some software for processing corpora Corpora • Linguistically mark-up or not • Representative sample of the population of interest – American English vs. British English – Written vs. Spoken – Areas • The performance of a system depends heavily on – the entropy – Text categorization • Balanced corpus vs. all text available Software • Software – Text editor : 글자 그대로 보여준다. – Regular expression : 정확한 patter을 찾게 한다. – Programming language • C/C++, Perl, awk, Python, Prolog, Java – Programming techniques Looking at Text • Text come a row format or marked up. • Markup – A term is used for putting code of some sort into a computer file – Commercial word processing : WYSIWYG • Features of text in human languages – 자연어 처리의 어려운 점 Low-Level Formatting Issues • Junk formatting/Content. – document headers and separators, typesetter codes, table and diagrams, garbled data in the computer file. – OCR : If your program is meant to deal with only connected English text • Uppercase and Lowercase: – should we keep the case or not? The, the and THE should all be treated the same but “brown” in “George Brown” and “brown dog” should be treated separately. Tokenization: What is a Word?(1) • Tokenization – To divide the input text into unit called token – what is a word? • graphic word (Kucera and Francis. 1967) “a string of contiguous alphanumeric characters with space on either side;may include hyphens and apostrophes, but no other punctuation marks” Tokenization: What is a Word?(2) • Period – 문자의 끝을 나타내는 의미가 있다. – 약어를 나타낸다. : as in etc. or Wash • Single apostrophes – isn’t, I’ll 2 words ? 1 words – 영어의 축약 : I’ll or isn’t • Hyphenation – 일반적으로 인쇄상 다음 줄로 넘어가는 한 단어를 표시 – text-based, co-operation, e-mail, A-1-plus paper, “take-itor-leave-it”, the 90-cent-an-hour raise, mark up mark-up mark(ed) up Tokenization: What is a Word?(3) • Word Segmentation in other languages: no whitespace ==> words segmentation is hard • whitespace not indicating a word break. – New York, data base – the New York-New Haven railroad • 명확한 의미의 정보가 다양한 형태로 존재한다. – +45 43 48 60 60, (202) 522-2230, 33 1 34 43 32 26, (44.171) 830 1007 Tokenization: What is a Word?(4) Phone number Country Phone number Country 0171 378 0647 UK +45 43 60 60 Denmark (44.171) 830 1007 UK 95-51-279648 Pakistan +44 (0) 1225 753678 UK +411/284 3797 Switzerland 01256 468551 UK (94-1) 866854 Sri Lanka (202) 522-2330 USA +49 69 136-2 98 05 Germany 1-925-225-3000 USA 33 1 34 43 32 26 France 212.995.5402 Nerherlands USA ++31-20-5200161 The Table 4.2 Different formats for telephone numbers appearing in an issue of the Economist Morphology • Stemming: Strips off affixes. – sit, sits, sat • Lemmatization: transforms into base form (lemma, lexeme) – Disambiguation • Not always helpful in English (from an IR point of view) which has very little morphology. • IR community has shown that doing stemming does not help the performance • Mutiple words a morpheme ??? • Morphological analysis를 구현하기 위한 추가비용에 비해 효능이 안 좋다 Stemming • 동일 의 단어의 다양한 변형을 하나의 색인어로 변환 – “computer”, “computing” 등을 “compute”로 변환 • 장점 – 저장 공간의 사용을 감소, 검색 속도 개선 – 검색 결과의 질 향상(질의가 “compute”일 경우 “computer”, “computing”등 포함 하는 모든 단어 검색) • 단점 – Over Stemming: 문자를 과도하게 제거하여 연관성 없는 단어들의 매칭을 발생 – Under Stemming : 단어에 포함된 문자를 적게 제거하여 연관성 있는 단어 매칭이 안 되는 현상 Porter Stemming Algorithm • 가장 널리 사용되며, 다양한 규칙을 이용 • 접두사는 제거하지 않고 접미사만을 제거하거나, 새로운 String으로 대치 – Porter Stemming 실행 전 – Porter Stemming 실행 후 Porter Stemming Algorithm Porter Stemming Algorithm • Error #1: Words ending with “yed” and “ying” and having different meanings may end up with – Dying -> dy (impregnate with dye) – Dyed -> dy (passes away) • Error #2: The removal of “ic” or “ical” from words having m=2 and ending with a series of consonant, vowel, consonant, vowel, such as generic, politic…: – Political -> polit – Politic -> polit – Polite -> polit Sentences • What is a sentence? – Something ending with a ‘.’, ‘?’ or ‘!’. True in 90% of the cases. – Colon, semicolon, dash도 문장으로 여겨질 수 있다. • Sometimes, however, sentences are split up by other punctuation marks or quotes. • Often, solutions involve heuristic methods. However, these solutions are hand-coded. Some effort to automate the sentenceboundary process have also been done. • 우리말은 더욱 어려움!!! – 마침표가 없기도 하고 종결형 어미 뒤? – 연결형 어미이면서 종결형 어미 – 따옴표 End-of-Sentence Detection (I) • Place EOS after all . ? ! (maybe ;:-) • Move EOS after quotation marks, if any • Disqualify a period boundary if: – Preceeded by known abbreviation followed by upper case letter, not normally sentence-final: e.g., Prof. vs. Mr. End-of-Sentence Detection (II) – Precedeed by a known abbreviation not followed by upper case: e.g., Jr. etc. (abbreviation that is sentence-final or medial) • Disqualify a sentence boundary with ? or ! If followed by a lower case (or a known name) • Keep all the rest as EOS Marked-Up Data I: Mark-up Schemes • 초기의 markup schemes – 단순히 내용정보만을 위해 header에 삽입 (giving author, date, title, etc.) • SGML – 문서의 구조와 문법을 표준화하는 grammer language • XML – SGML을 web에 응용하기 위해 만든 SGML의 축소판 Marked-Up Data II: Grammatical tagging • first step of analysis – 일반적인 문법적 category로 구별하는 것 – 최상급, 비교급, 명사의 단수, 복수 등의 구별 • Tag sets (Table 4.5) – morphological distinction 을 통합한다. • The design of a tag set – 분류의 관점 • Word의 문법정보가 얼마나 유용한 요소인가 하는 관점 – 예상의 관점 • 문맥에서 다른 word에 어떠한 영향을 미치는지 예상하는 관점 Examples of Tagset(Korean) Examples of Tagset(English) PennTreebank tagset Brown corpus tagset