ch1-2

advertisement

Chapter 1 Introduction

Grammars .... Chomsky

TYPE NAME

0 Regular Grammars -1 Context Free

-2 Context Sensitive-3 Unrestricted

--

hierarchy

EXAMPLE

RECOGNIZER

A -> aB or A -> a

-- RE, FA

A -> ABaB

-- PDA

AaB -> AbB |LF| >= |RT| -- LBA

Anything

-- TM

Programs related to compilers

Interpreters, Assemblers, Linkers, Loaders, Preprocessors,

Editors, Debuggers, Profilers, Project Managers (sccs, rcs)

Major Data Structures

Major Data Structures

Tokens

Syntax tree

Symbol table

Literal table

Intermediate code

Temporary files

Passes

1, 2, 3, or more

Error handling

When to give up.

Porting

Compiler for language A -> Existing Compiler -> Running

compiler

Written in language B

for B

for

language A

Cross compiler - generates a target code for a different

machine from

the one on which it runs.

Bootstrapping - write a small simple translator

language that can

be used to construct the complete translator.

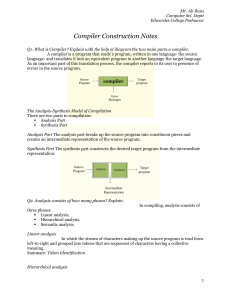

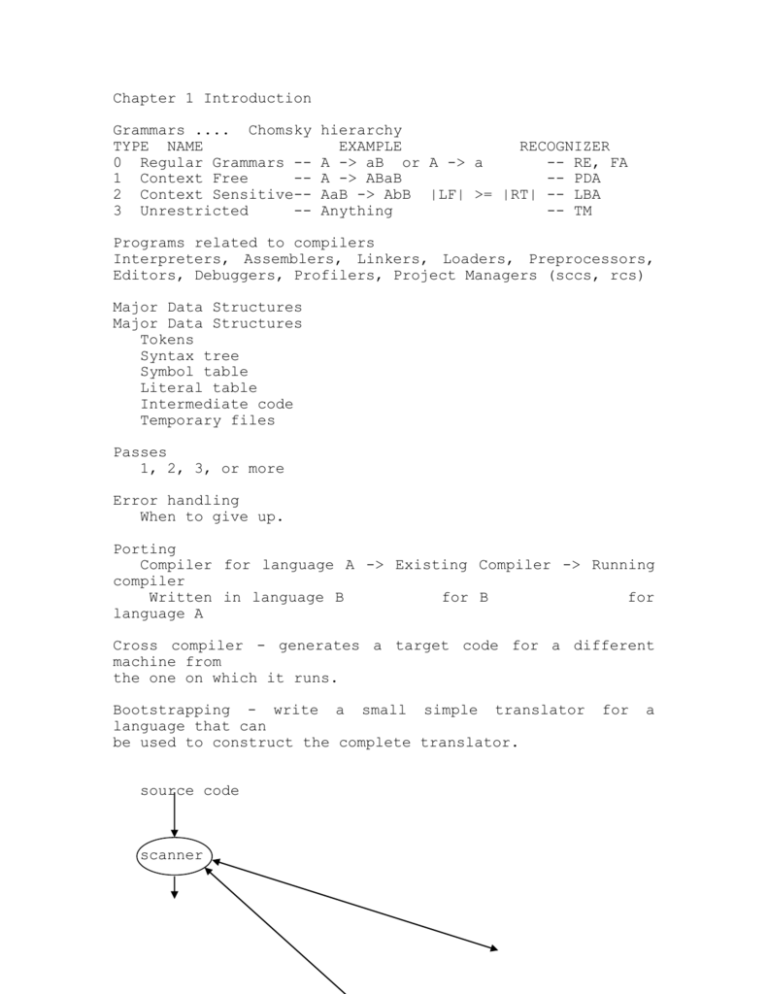

source code

scanner

for

a

tokens

parser

literal table

syntax tree

semantic analyzer

symbol table

annotated tree

source code optimizer

error handler

intermediate code

code generator

target code

target code optimizer

target code

Lexical Analysis

Pattern matching

Applied to other areas such

information retrieval systems.

Programs

strings.

execute

actions

as

triggered

query

by

languages

patterns

Tasks of the lexical analyzer:

strip out comments

remove white space, tab and end of line

correlating error messages associating

with parser error messages

create the listing

preprocessing may be done

line

of

and

in

numbers

Why separate lex and parsing

simpler - a grammar can do both, but this cleaner

faster - lex does lots of i/o, can be separated

portability - different alphabets can be used

tokens, lexemes,

const

const

if

if

relation<,<=

id

pi, d2

inconst 23

patterns

const

if

< or <=

letter followed by letters or digits

digits

typical tokens

keywords, operators, identifiers,

strings and punctuation symbols.

constants,

literal

Some lexical conventions make life tougher

DO 5 I = 1.25 is DO5I = 1.25

FORTRAN

DO 5 I = 1,25 is keyword DO

IF THEN THEN THEN = ELSE; ELSE ELSE = THEN;

PL/1

Attributes for tokens

rel_op matches <, >= etc. but code gen must know which

one

int_const matches 0, 1

Lexical Errors

if 'fi' is encountered, lex returns id, even in wrong

context

bad character in input

cannot form a token

Error recovery

delete characters until the next lexem

inserting a missing character

replace a character

transpose two adjacent characters

Can compute the min number of transformations required to

convert the program into a correct one. Normally not done.

Sometimes done locally.

3 ways of doing lexical analyzer

use LEX

write in a high level language and use language I/O

write in assembler and do your own I/O

Double buffering works quite well

fill one while reading from the other.

ok if token is smaller than the buffer.

Lexical is to scan input and create tokens.

interaction with parser

tokens generated each time required.

tokens written to a file or generated as needed.

Roll of lex with symbol table? Create the symbol table now

or during parsing? Personally, I like during parsing,

however the text discusses the creation during lexical.

specification of tokens

strings and languages

string

length of string

prefix

suffix

substring

empty string

empty set

Operations on Languages

union

concatenation

closure

Lo

=

{}

Regular Expressions

is a re denoting {}, the set containing the empty string

a is re

a | b is re

ab is re

a* is re

(a) - you can have an extra set of parens

digit = [0-9]

nat = digit+

signedNat = (+|-)? nat

number = signedNat (“.” nat)?(E signedNat)?

To implement, one needs a FA.

Some languages cannot be recognized by regular expressions.

id -> letter (letter | digit) *

Draw the FSM for this.

NFA, DFA, Minimize