X. Secure Systems Engineering

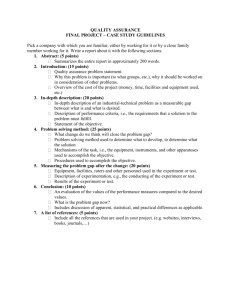

This SOAR focuses primarily on current activities, techniques, technologies, standards, and organizations that have as their main objective the production and/or sustainment of secure software.

COMMENT: Should also mention research / research needs. What “organizations” is doing in this first sentence is unclear. Are the activities etc. FOR these organizations? Or is this about organizations that promote good practices for secure software? Finally, will there be any discussion of certification of products, processes, or people?

However, software is typically an element of a larger system, whether a software-intensive system or a system composed of both hardware and software elements. The perspective of this section, then, is that of systems engineers who seek to understand the security issues associated with the software components of a larger secure system. Within this section, we summarize typical issues, topics, and techniques that systems engineers use to build secure systems, with a view towards clarifying how they relate to the issues, topics, and techniques that pertain specifically to secure software development.

According to [Anderson]:

“Security engineering is about building systems to remain dependable in the face of malice, error, or mischance. As a discipline, it focuses on the tools, processes, and methods needed to design, implement, and test complete systems, and to adapt existing systems as their environment evolves. Security engineering requires cross-disciplinary expertise, ranging from cryptography and computer security, to hardware tamper-resistance and formal methods, to applied psychology, organizational and audit methods and the law… Security requirements differ greatly from one system to another. One typically needs some combination of user authentication, transaction integrity and accountability, fault-tolerance, message secrecy, and covertness.”
“But many systems fail because their designers protect the wrong things, or protect the right things but in the wrong way.”

To create a context for discussing the development of secure software, we describe here the technical processes and technology associated with the development of secure systems, and the relationship between the technical aspects of secure systems engineering and those of secure software engineering as it occurs within the systems engineering lifecycle.

COMMENT: On “technology” above: should this be technologies and tools? Or techniques and technologies?

According to [MIL-HDBK-1785], Systems Security Engineering (SSE) is defined as an element of system engineering that applies scientific and engineering principles to identify security vulnerabilities and minimize or contain risks associated with these vulnerabilities.

X.1 Background

COMMENT: “System Assurance” is a better title. This section needs an introduction that positions Assurance at the center of the SSE activities. The quote is excellent, but it’s too dense to serve as an introduction.

“… there is growing awareness that systems must be designed, built, and operated with the expectation that system elements will have known and unknown vulnerabilities. Systems must continue to meet (possibly degraded) functionality, performance, and security goals despite these vulnerabilities.
In addition, vulnerabilities should be addressed through a more comprehensive life cycle approach, delivering system assurance, reducing these vulnerabilities through a set of technologies and processes.” “System assurance is the justified confidence that the system functions as intended and is free of exploitable vulnerabilities, either intentionally or unintentionally designed or inserted as part of the system at any time during the life cycle.” [NDIA]

Because system assurance can be discussed relative to any desirable attribute of a system, this document specifically addresses the assurance of the security properties of the entire system across the system lifecycle. For purposes of this document, secure systems engineering equates to system security assurance.

X.1.1 Assurance Cases

“The assurance case is the enabling mechanism to show that the system will meet its prioritized requirements, and that it will operate as intended in the operational environment, minimizing the risk of being exploited through weaknesses and vulnerabilities.”

COMMENT: Source??

An assurance case (in this document, a system security assurance case) is the primary mechanism used in secure systems engineering to support claims that a system will operate as intended in its operational environment and that the risk of it having security weaknesses and vulnerabilities is minimized. Developing and communicating the assurance case is part of the overall risk management strategy during the system lifecycle. Assurance claims are included in the system requirements list and tagged as assurance claims. The assurance case is built and maintained throughout the systems engineering lifecycle, so system assurance activities become part of the lifecycle. Assurance cases are typically reviewed at major milestones during the lifecycle.

COMMENT: The paragraphs flow much better if this one is moved here.
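Because assurance claims are tagged in the requirements list and the assurance case is maintained and reviewed at milestones throughout the lifecycle, it can help to keep the claim/argument/evidence structure in a form that can be checked mechanically. The sketch below is a minimal illustration under our own assumptions: the `Claim` class and the `unsupported_claims` and `missing_arguments` helpers are hypothetical names, not part of any standard or tool, and a real assurance case would also record context and explicit assumptions. It flags leaf claims that have no evidence (such claims would need to be redefined) and claims that rely on sub-claims without stating an argument.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Claim:
    """A claim: a statement that can be shown true or false (not an action)."""
    statement: str
    argument: str = ""  # why the sub-claims/evidence justify this claim
    evidence: list[str] = field(default_factory=list)  # e.g., test results, proofs
    subclaims: list[Claim] = field(default_factory=list)


def unsupported_claims(claim: Claim) -> list[str]:
    """Return leaf claims (no sub-claims) that have no evidence attached."""
    if not claim.subclaims:
        return [] if claim.evidence else [claim.statement]
    gaps: list[str] = []
    for sub in claim.subclaims:
        gaps.extend(unsupported_claims(sub))
    return gaps


def missing_arguments(claim: Claim) -> list[str]:
    """Return claims that rely on sub-claims but state no argument."""
    found = [claim.statement] if claim.subclaims and not claim.argument else []
    for sub in claim.subclaims:
        found.extend(missing_arguments(sub))
    return found


# Hypothetical case built around the example claim given in this section.
top = Claim(
    "Unauthorized users are not able to gain entry into the system",
    argument="Every entry point requires authentication, and authentication resists brute force",
    subclaims=[
        Claim("Every entry point requires authentication",
              evidence=["interface inventory review", "penetration test report"]),
        Claim("Authentication cannot be circumvented by brute force"),  # no evidence yet
    ],
)

print(unsupported_claims(top))  # ['Authentication cannot be circumvented by brute force']
print(missing_arguments(top))   # []
```

Keeping the check recursive mirrors the multi-level structure of subordinate claims; a review at a milestone could simply require both lists to be empty.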
[ISO/IEC 15026-2:2011] defines the parts of an assurance case as follows (with emphasis added): “An assurance case includes a top-level claim for a property of a system or product (or set of claims), systematic argumentation regarding this claim, and the evidence and explicit assumptions that underlie this argumentation. Arguing through multiple levels of subordinate claims, this structured argumentation connects the top-level claim to the evidence and assumptions.”

As discussed in [NDIA]:

- “Claims identify the system’s critical requirements for assurance, including the maximum level of uncertainty permitted for them. A claim must be a clear statement that can be shown to be true or false–not an action.”¹
- “An argument is a justification that a given claim (or sub-claim) is true or false. The argument includes context, criteria, assumptions, and evidence.”²
- “Evidence is information that demonstrably justifies the argument.”³

COMMENT: Footnoting is an obstacle for readers; it draws their eyes away from the main text. Also, the information presented in the footnotes here is extremely valuable and should not be relegated to the footnotes. Recommend putting the footnote information as an explanation after each quote. In addition, it would help if the explanation for each were to indicate the stage where it is used (claims: requirements; argument: architecture and design; evidence: integration, V&V).

COMMENT: this section needs a wrap-up, possibly with a transition to the next topic.

X.2 Systems Engineering and Systems Security Engineering

Many process models are available to the systems engineer for developing the system, such as the waterfall and spiral models. Given that security engineering calls for a risk-centric approach, a spiral development model, which is a risk-driven approach to developing products or systems, is well suited.
However, as a means of understanding the relationships of systems engineering to software engineering and to testing and validation, one of the most illustrative systems process models is the “Vee” process model conceived by Forsberg and Mooz [Forsberg], who assert that it depicts the “technical aspect of the project cycle.” Figure X is derived from this Vee model to show the relationship between secure systems engineering and secure software engineering.

COMMENT: It would be good to point out the position of assurance within the Vee.

¹ An example of a claim might be “unauthorized users are not able to gain entry into the system.”
² A fault tree is an example of a technique and graphical notation used to represent arguments.
³ Mathematical proofs, verification of test results, and analysis tool results are examples of evidence.

Figure X. Vee Process Model Applied to Secure Systems Engineering

The remainder of this section is organized around this “Vee” model and discussed in the order shown.

X.2.1 Systems Security Requirements Definition and Analysis

This section addresses defining and analyzing security requirements during the system requirements analysis phase of the lifecycle. In general, a higher level of assurance is required for the most critical systems and subsystems. However, users may not be fully aware of the security risks, risks to the mission, and vulnerabilities associated with their system.

COMMENT: True, but the sentence seems out of place; it needs to be linked to something. Recommend moving to the next paragraph and rewording of that paragraph.

To define requirements, systems engineers may, in conjunction with users, perform a top-down and bottom-up analysis of possible security failures that could cause risk to the organization, as well as define requirements to address vulnerabilities. However, users may not be fully aware of the security risks, risks to the mission, and vulnerabilities associated with their system.
Therefore, when identifying and analyzing security requirements (based on [NDIA]), systems engineers, in conjunction with users, should consider the operational environment and:

- Identify users’ tolerance for failures, degradation in operation, or loss.
- Based on previous experience, identify threats, vulnerabilities, or weaknesses, and define requirements to address these issues.
- Identify mitigation and compliance requirements.
- Identify the system boundary and environment, which security services are to be provided by the system, and which security services are provided external to the system boundary.
- Identify those external capabilities or dependencies for which the system must have defensive mechanisms.
- Define the system functional breakdown structure with a focus on system assurance, including:
  - Define critical intrinsic system functions (i.e., functions that, if compromised, would jeopardize the mission)
  - Define defensive functions that secure the system and protect critical functions
  - Identify and document assurance requirements and functions
  - Identify commercial off-the-shelf (COTS) items and issues associated with them that might affect system assurance (e.g., default settings)
  - Derive assurance criteria and system claims, and include these in the functional breakdown structure
- Derive and document all claims, assumptions, and the types of evidence to be collected. If evidence cannot be identified, the claims will need to be redefined.

COMMENT: On external capabilities or dependencies: it would really improve this to provide a couple of examples of those external capabilities or dependencies; some readers may lack the imagination to come up with examples.

Techniques available to the systems engineer for requirements identification and analysis include:

- Fault tree analysis for security (sometimes referred to as threat tree or attack tree analysis) is a top-down approach to identifying vulnerabilities. In a fault tree, the attacker’s goal is placed at the top of the tree.
Then, the analyst documents possible alternatives for achieving that attacker goal. For each alternative, the analyst may recursively add precursor alternatives for achieving the subgoals that compose the main attacker goal. This process is repeated for each attacker goal. By examining the lowest-level nodes of the resulting attack tree, the analyst can then identify the techniques an attacker could use to violate the system’s security; preventions for these techniques can then be specified as security requirements for the system.
- Failure Modes and Effects Analysis (FMEA) is a bottom-up approach for analyzing possible security failures. The consequences of a simultaneous failure of all existing or planned security protection mechanisms are documented, and the impact of each failure on the system’s mission and stakeholders is traced.
- Failure Modes, Effects, and Criticality Analysis (FMECA)
- Failure Modes and Impacts Criticality Analysis (FMICA)
- Threat modeling
- Misuse and abuse cases
- Information derived from system security policy models and system security targets that describe the system’s required protection mechanisms

Attack tree analyses and FMEAs augment and complement the security requirements derived from the system’s threat models, security policy models, and/or security targets.

COMMENT: Would it help to define or give examples of security policy models and security targets?

The results of the system security requirements analysis can be used as the basis for security test case scenarios to be used during integration or acceptance testing, discussed in subsequent sections.

Examples of security threats to a product include the following (from [Secure CMMI]):

- An outsider is able to perform a denial-of-service attack on the system.
- A malicious user injects JavaScript code to hijack the session of a legitimate user.
- An internal user is able to escalate privileges in the system due to architectural security flaws.
- A virus outbreak affects the availability of the system.
- A supplier may provide software configuration items that are malicious or of poor quality and insecure.
  COMMENT: Rewording to keep the threat in the first position in the sentence.

Examples of sources of security needs include the following (from [Secure CMMI]):

- Country-specific regulations and laws (e.g., personal data protection, export controls)
- Domain-specific regulations and laws (e.g., Cyber Security Standards of the North American Electric Reliability Corporation [NERC 2011 a] and [NERC 2011 b], Health Insurance Portability and Accountability Act (HIPAA) [US 1996], Health Information Technology for Economic and Clinical Health Act (HITECH) [US 2009])
- Standards and requirements catalogs (e.g., White Paper Requirements for Secure Control and Telecommunication Systems [BDEW 2008], Embedded Device Security Assurance Certification Specification [ISCI 2010], Security Requirements for Vendors of the International Instrument Users’ Association WIB [WIB 2010], System security requirements and security assurance levels of the ISA99 Committee [ISA 2011], Common Criteria for Information Technology Security Evaluation [CCMB 2009], PCI Data Security Standard [SSC 2010])
- Requests for proposal (RFPs) or feature requests
- Product security risk assessment
- Development of misuse and abuse cases
- Organization-internal protection goals (e.g., intellectual property protection, licensing regulations)
- Security activities of competitors or members of an industry association
  COMMENT: Not always competitors; for example, local water utilities.
- Analysis of existing or future threats by using vulnerability databases (e.g., Common Weakness Enumeration (CWE) list, SANS Top 25 Most Dangerous Software Errors)
- Security topics with high customer attention
- Analysis of security incidents in own or comparable systems
- Organizational security baselines and policies

X.2.2 Secure Systems Architecture and Design

Secure architecture and design involve developing models of the system to be constructed that meet the requirements and
assurance claims for the system. This includes allocating system assurance requirements to elements of the design.

From an assurance perspective, many sources have identified design best practices for meeting assurance objectives. Some representative issues and approaches include the following (from [NDIA]):

- Identify and prioritize the criticality of elements of the architecture, and make the most critical elements less complex and more isolated from other elements.
- Identify and prioritize the critical information flows in the system; ensure that such flows can be delivered predictably and securely.
- Use defense-in-depth approaches where appropriate.
- Identify the trust placed in each element, especially COTS and government off-the-shelf (GOTS) elements.
- Include defensive elements in the design.
- Identify the functional requirements assigned to all operators in the system, and identify the privileges they need.
- Identify and measure a trusted system baseline so that corruption can be identified.
- Design approaches to consider include least privilege access, isolation and containment mechanisms, the ability to monitor and respond to illegitimate actions, resilience to security violations, identification and authentication mechanisms, use of cryptography, deception, and use of previously assured interfaces and elements.
- Incorporate anti-tamper mechanisms (e.g., seals, protective packaging) where needed.

Assurance case arguments are defined to justify why the design will meet assurance claims:

- When considering alternative architectures, identify the effects on assurance and the mitigation techniques that may be necessary within each candidate architecture.
- Maintain mutual traceability between the assurance case/requirements and design and construction elements.
- For interfaces to untrusted elements, identify and document all elements that implement the interfaces, justify why hardening measures are adequate, and justify why the filtering methods are adequate.
  COMMENT: Reworded to keep verb and object closer together; makes for easier reading.
- For defense-in-depth approaches, document the multiple methods and why the combination of methods is much more difficult to defeat.
- Demonstrate that elements will adequately respond to malicious input.

Examples of security design principles for architecture and design include the following (from [Secure CMMI]):

- Least privilege principle
- Defense-in-depth principle
- Definition of trust boundaries
- Server-side authorization
- Need-to-know principle
- Updatability

COMMENT: Other key principles from later in the document are limiting the size and complexity of components and maximizing modularity of components / loose coupling. In addition, consider adding to the list design basis threat (DBT) to allocate security resources.

The following sections discuss two significant implementation issues with security implications, which are not directly related to security functionality.

X.2.3 Construction

Construction (e.g., fabrication for hardware and coding for software) transforms architectural and design elements into system elements. Multiple assurance techniques will be needed to detect and prevent vulnerabilities during construction. From an assurance perspective, for many systems the construction and unit test phase is where evidence collection begins.
Specifically:

- Evidence of construction of defensive functions and secure interfaces to trusted and untrusted systems and subsystems
- Evidence of defense-in-depth results supporting arguments related to traceability to defensive functions
- Evidence that critical infrastructure components (e.g., network, encryption, identity management) support assurance claims
- Evidence from the use of automated tools (e.g., static analysis) and processes (e.g., inspections) that weaknesses are not present
- Verification that the enterprise infrastructure supports the assurance case

Examples of secure implementation guideline topics for software include the following (from [Secure CMMI]):

- Programming-language-specific content, such as forbidden APIs or secure memory management
- Technology-specific content, such as how to avoid security issues specific to web technologies
- Compiler configuration and acceptable compiler warnings
- Application of additional tools to avoid exploitation of buffer overflows

X.2.4 Integration

To be effectively addressed during the integration phase, system security issues must first be identified during the requirements and design phases. In today’s large distributed systems, system components typically interface with outside systems whose security characteristics are uncertain or questionable.

COMMENT: This section is a bit confusing, because the preceding sentence addresses integration with external systems, which is covered more in the System of Systems section below, while the next sentence focuses on integrating components and subsystems. Recommend keeping this focused on components and subsystems, while noting that external integration will be discussed later.

The security of an integrated system is built on the behavior and interactions of its components and subsystems. On the basis of a risk analysis of system components, systems engineers must build in the necessary protection mechanisms.
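The design guidance earlier in this section asks engineers to identify the trust placed in each element and, for interfaces to untrusted elements, to justify the hardening and filtering applied. During integration, one simple way to turn that guidance into a checkable list is sketched below. The element names, the boolean `trusted` flag, and the `mitigations` field are hypothetical simplifications: real trust assessments are rarely binary, and recording a mitigation is not, by itself, evidence that the mitigation is adequate.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    name: str
    trusted: bool  # simplified outcome of the trust assessment for this element


@dataclass(frozen=True)
class Interface:
    source: Element  # element that sends data across the interface
    target: Element  # element that receives it
    mitigations: tuple[str, ...] = ()  # e.g., ("input filtering", "hardening")


def unprotected_boundary_crossings(interfaces: list[Interface]) -> list[str]:
    """Flag untrusted -> trusted data flows that have no recorded mitigation."""
    return [
        f"{i.source.name} -> {i.target.name}"
        for i in interfaces
        if not i.source.trusted and i.target.trusted and not i.mitigations
    ]


# Hypothetical subsystem used only for illustration.
cots_parser = Element("COTS message parser", trusted=False)
external_feed = Element("external data feed", trusted=False)
mission_db = Element("mission database", trusted=True)
planner = Element("mission planning module", trusted=True)

interfaces = [
    Interface(external_feed, mission_db, mitigations=("input filtering",)),
    Interface(cots_parser, planner),  # crosses a trust boundary, nothing recorded
    Interface(mission_db, planner),   # trusted -> trusted, not a boundary crossing
]

print(unprotected_boundary_crossings(interfaces))
# ['COTS message parser -> mission planning module']
```

Each flagged interface is a place where the assurance case still lacks the hardening and filtering justification that the design guidance calls for.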
During integration, hardware, firmware, software, and other external (sub)systems (including COTS and GOTS) are combined to form a system or subsystem. Systems engineers are concerned with assuring all the elements of the system and the interfaces between them. The more modular and loosely coupled the design of the system, the easier it is to trace assurance claims during integration. Each element of the system needs to be assessed for risk, and the appropriate mitigation strategies defined in the requirements need to be applied during integration.

The architectural design needs to be assured because of the nature of the threats that can occur during integration:

- Substitution (whether malicious or unintentional) of incorrect system elements
- An incorrectly composed system (whether maliciously or unintentionally)
- Incorrect interfaces (whether malicious or unintentional)

From an assurance perspective, in addition to the activities performed during construction, systems engineers should do the following during integration:

COMMENT: What is the subject of this?? The wording is very awkward.

- Re-verify the system element security properties, behaviors, and interfaces
- Record and resolve non-conformances observed during integration
- Evaluate the assurance implications of any changes

X.2.5 Security Verification, Validation, and Testing

COMMENT: This section would benefit from an introduction that stresses the relationship with evidence for assurance.

[ISO/IEC 15288:2008] states that “The purpose of the Verification Process is to confirm that the specified design requirements are fulfilled by the system.” Further, “The purpose of the Validation Process is to provide objective evidence that the services provided by a system when in use comply with stakeholders’ requirements, achieving its intended use in its intended operational environment.” Testing and the use of test tools are common methods of implementing both processes. Testing includes peer reviews and other desk-checking techniques.
Verification is a planned activity that occurs throughout the whole product lifecycle and is used to generate and provide evidence for the assurance cases. To be effective, security verification must be executed by individuals with security engineering and assurance skills.

COMMENT: This is a great statement! This kind of statement should be included in the other sections as well.

When designing systems for security, critical elements should be limited in size and complexity so that they can be easily verified.

Sources for security verification criteria include the following (from [Secure CMMI]):

- Product and product component security requirements
- Organizational security policies
- Secure architecture and design standards
- Secure configuration standards
- Secure implementation standards
- Parameters for the tradeoff between security and cost of testing
- Proposals and agreements

Examples of types of security testing tools include the following (from [Secure CMMI] and [NDIA]):

- Data or network fuzzers
- Black-box scanners
- Port scanners
- Vulnerability scanners
- Security-oriented dynamic analysis tools and static code analyzers
- Dynamic application security testing tools
- Network scanners
- Protocol analyzers
- Modeling and simulation tools

From a system assurance perspective, the following need to be collected to support the assurance case evidence:

- The results of all analysis and peer reviews
- The results of all testing
  COMMENT: Clarify, to distinguish from the next bullet.
- The results of all penetration testing and other network testing

Validation is typically performed through operational testing throughout the lifecycle, and in particular after verification is complete. It too is used to generate and provide evidence for the assurance cases. Understanding the root causes of non-conformances and process deficiencies, and their overall implications for the system assurance case, is critical.
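To show what the data fuzzers named in the tool list above do, here is a deliberately small random fuzzer. It is a sketch under stated assumptions: `parse_record` is a hypothetical component whose record format (a one-byte length followed by that many payload bytes) is invented for illustration, and `ValueError` is assumed to be its documented way of rejecting bad input, so any other exception is treated as a finding. Production fuzzers add coverage guidance, input mutation, and crash triage; the fixed seed here simply makes every finding reproducible, which matters when fuzzing results are retained as assurance case evidence.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical component: a 1-byte length followed by that many payload bytes."""
    if not data:
        raise ValueError("empty record")  # documented rejection
    length = data[0]
    payload = data[1:1 + length]
    # Weakness: reads the trailing byte at the declared position without
    # checking len(payload), so a truncated record raises IndexError.
    if length:
        trailer = payload[length - 1]
    return payload


def parse_record_fixed(data: bytes) -> bytes:
    """Same format, but truncated records are rejected explicitly."""
    if not data:
        raise ValueError("empty record")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated record")
    return payload


def fuzz(target, iterations=1000, max_len=16, seed=0, expected=(ValueError,)):
    """Feed random byte strings to `target`; return inputs that raise unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed so every finding can be reproduced
    findings = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except expected:
            pass  # graceful, documented rejection of malformed input
        except Exception as exc:  # anything else is a finding to triage
            findings.append((data, type(exc).__name__))
    return findings


findings = fuzz(parse_record)
print(len(findings) > 0, {name for _, name in findings})  # True {'IndexError'}
print(fuzz(parse_record_fixed))                           # []
```

Running the same fuzzer against the fixed version and getting no findings is the kind of before/after result that can support a claim that malformed input is handled.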
COMMENT: The following was moved from a point later in the section; it fits much better here:

From an assurance case perspective, evidence is to be collected during validation that supports system assurance claims, including:

- Data to support the argument that the system meets security requirements
- For any non-conformances or changes in requirements, evidence that revalidation was performed successfully

Examples of types of test cases for security validation include the following (from [Secure CMMI]):

- Check if security documentation can be implemented.
- Check if authentication mechanisms can be circumvented by brute-force attacks.
- Check if users (authorized and unauthorized) can perform functions not intended for them.
- Check if denial-of-service attacks are successful.
- Check if code injection can be performed successfully (e.g., JavaScript in web applications).
- Check if SQL statements can be manipulated (SQL injection).
- Check the current patch level of third-party components.
- Check if the product withstands a malicious attack by a sophisticated attacker.
- Check that the system is secure by default and secure in operation.

Examples of types of security validation techniques include the following (from [Secure CMMI] and [NDIA]):

- Architectural risk assessment
- Threat and risk assessment
- Threat modeling
- Security code analysis, both static and dynamic
- Automated vulnerability scanning
- Penetration testing
- Friendly hacking
- Fuzz testing
- Replay testing
- Distributed testing, particularly for systems of systems

X.2.6 Transition, Operation and Maintenance

As stated in [ISO/IEC 15288:2008]: “The purpose of the Transition Process is to establish a capability to provide services specified by stakeholder requirements in the operational environment.
This process installs a verified system, together with relevant enabling systems, e.g., operating system, support system, operator training system, user training system, as defined in agreements.” “The purpose of the Operation Process is to use the system in order to deliver its services.” “The purpose of the Maintenance Process is to sustain the capability of the system to provide a service.”

We combine these three processes to address taking a system from development to operation, with ongoing maintenance and upgrades.

Transition for security involves planning for and preparing the operational site for the new system so that it does not negatively affect the assurance of the existing systems at the site. It includes configuring the system at the operational site, assessing the impact on system assurance of adapting the new system to the site, testing the system at the site, and training all classes of users there.

From an assurance perspective, transition evidence is to be collected showing that:

- Changes made do not have a negative effect on the assurance of the transitioned system or of other systems
- The installed system has not been tampered with
- Adequate staff training has occurred
- Any anomalies found are of low risk

Key activities during operation involve staff training to maintain system assurance, monitoring the system to ensure that it is running securely, and making modifications to the system while assessing the security risk of those modifications so as to maintain system assurance.
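One common way to produce evidence that the installed system has not been tampered with is to compare cryptographic digests of the installed files against a baseline recorded before shipment; the same mechanism serves the design guidance to identify and measure a trusted system baseline. The sketch below uses SHA-256 from Python's standard `hashlib`; the file names and the simulated tampering are hypothetical. It assumes the baseline is stored and protected separately (for example, signed), because an attacker who can rewrite the baseline can hide changes from this check.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under `root` (POSIX-style relative path) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_to_baseline(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files modified, added, or removed since the baseline was recorded."""
    return {
        "modified": sorted(k for k in baseline.keys() & current.keys() if baseline[k] != current[k]),
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
    }


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "bin").mkdir()
    (root / "app.cfg").write_text("debug=false\n")
    (root / "bin" / "service").write_bytes(b"\x7fELF placeholder")
    baseline = build_manifest(root)  # recorded at the end of verification

    # Simulated changes at the operational site (hypothetical).
    (root / "app.cfg").write_text("debug=true\n")
    (root / "bin" / "helper").write_bytes(b"unexpected binary")
    report = compare_to_baseline(baseline, build_manifest(root))

print(report)
# {'modified': ['app.cfg'], 'added': ['bin/helper'], 'removed': []}
```

Rerunning the comparison periodically during operation turns the same baseline into audit evidence that the system's assurance has not been compromised.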
From an assurance perspective, operational evidence to be collected includes:

- Staff training records showing that the right training is performed periodically
- Audit records from regular audits showing that the system’s assurance has not been compromised
- Verification records demonstrating that environmental assumptions are still valid

As stated in [NDIA], maintenance “… monitors system operations and records problems, acts on them to take corrective/adaptive/preventive actions, and confirms restored capability.” It involves developing a maintenance strategy (e.g., schedules and resources required), defining the constraints on system requirements, obtaining the infrastructure needed to perform maintenance, implementing problem reporting and incident recording, performing root cause analysis on failures, implementing corrective procedures and preventive maintenance, and capturing historical data.

From a security point of view, maintenance involves maintaining and improving system assurance, because changes to a system may have a significant impact on its assurance. During maintenance, more evidence is collected, including:

- Analyzing and documenting defects and enhancements to determine whether any change impacts the assurance cases
- Determining which phase of the system lifecycle is impacted by any change and performing the system assurance activities of that phase

X.2.7 Disposal

Even at the end of a system’s life, when it is deactivated, disassembled, or destroyed, there are system security considerations. The system may contain hardware components (e.g., memory), sensitive data, or cryptographic equipment that must be destroyed safely and securely. Further, the system may interface to other systems whose system assurance properties are important.

COMMENT: This is vague; give an example or a bit of an explanation.

The disposal process involves sanitization or destruction of hardware, software, data, media (e.g., backups), manuals, and training materials.
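Collecting disposal evidence is largely a reconciliation exercise: every inventoried element should have a disposal record, and the recorded method should be one the program has approved for that kind of element. A minimal sketch follows. The categories, the method names in `APPROVED_METHODS`, and the item identifiers are hypothetical placeholders; an actual program would take them from its own sanitization policy and configuration records.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy: which disposal methods are acceptable for each category.
APPROVED_METHODS = {
    "hardware": {"physical destruction", "purge"},
    "media": {"physical destruction", "purge", "clear"},
    "data": {"cryptographic erase", "purge"},
    "documentation": {"shredding"},
}


@dataclass
class DisposalRecord:
    item_id: str
    method: str


def disposal_gaps(inventory: dict[str, str], records: list[DisposalRecord]) -> list[str]:
    """`inventory` maps item_id -> category; return items lacking an approved disposal."""
    by_id = {r.item_id: r for r in records}
    findings = []
    for item_id, category in sorted(inventory.items()):
        record = by_id.get(item_id)
        if record is None:
            findings.append(f"{item_id}: no disposal record")
        elif record.method not in APPROVED_METHODS.get(category, set()):
            findings.append(f"{item_id}: method '{record.method}' not approved for {category}")
    return findings


inventory = {
    "HDD-0042": "media",
    "HSM-01": "hardware",  # hypothetical cryptographic equipment
    "backup-2023-Q4": "data",
    "ops-manual-v3": "documentation",
}
records = [
    DisposalRecord("HDD-0042", "purge"),
    DisposalRecord("HSM-01", "clear"),
    DisposalRecord("ops-manual-v3", "shredding"),
]

for finding in disposal_gaps(inventory, records):
    print(finding)
# HSM-01: method 'clear' not approved for hardware
# backup-2023-Q4: no disposal record
```

An empty findings list, together with the underlying records, is one way to demonstrate that elements to be disposed of have been identified and disposed of appropriately.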
From an assurance case perspective, evidence needs to be collected during disposal to:

- Demonstrate that elements to be disposed of have been identified and disposed of in an appropriate manner.

X.3 Relationship to and Challenges of Systems-of-Systems Security Engineering

As stated by [Baldwin], “To implement the war-fighting strategies of today and tomorrow, systems are networked and designed to share information and services in order to provide a flexible and coordinated set of war-fighting capabilities. Under these circumstances, systems operate as part of an ensemble of systems supporting broader capability objectives. This move to a system-of-systems (SoS) environment poses new challenges to systems engineering in general and to specific DoD efforts to engineer secure military capability.”

A system of systems is a set or arrangement of systems that results when independent and useful systems are integrated into a larger system that delivers unique capabilities [SoS Sys Eng]. While a system of systems is still a system, several challenges affect its security aspects:

COMMENT: This is a great list and its importance deserves to be highlighted more strongly. Also, the order of elements in the list needs to reflect related ideas. Here is a recommended reorganization:

1. With limited attention paid to the broader context of the SoS, and with that context being very dynamic, an SoS may be viewed as secure at one point, yet new vulnerabilities may emerge as the SoS context changes.
   COMMENT: This one seems to be the most important one to emphasize.
2. Principles of systems engineering are challenged because a system of systems does not have clear boundaries and requirements.
3. Because competing authorities and responsibilities exist across the component systems, systems engineering is typically not applied to the SoS. Individual systems are only able to address their individual security issues.
4. Since component systems have independent ownership, there is limited control over the development environment.
5. Systems engineers may have to allocate functions sub-optimally because they must use existing systems to achieve the required functionality.
6. Systems engineers are limited to controlling security engineering within individual systems. As a result, vulnerabilities in other component systems could jeopardize the security status of the overall SoS. This is particularly true when the SoS combines security-critical and safety-critical systems whose individual requirements may actually conflict, as demonstrated in [Axelrod].
7. Interfaces between component systems increase vulnerabilities and risks in the SoS.

Some possible approaches to addressing these issues include:

- Data-continuity checking across systems
  COMMENT: What about integrity? To be honest, there isn’t nearly enough about integrity discussed in this section.
- Sharing of real-time risk assessments
- SoS configuration hopping to mislead adversaries about the SoS configuration

X.4 Hardware Assurance

While this report is focused primarily on software assurance, from a secure systems engineering perspective, electronic components consisting of semiconductors and integrated circuits represent another source of vulnerability to the security and trustworthiness of systems. A recent report [SRC] from the Semiconductor Research Corporation summarizes the findings of a workshop of experts and researchers from the semiconductor and software engineering communities “to identify research based on the state of the art in each community that can lead to a ‘foundation of trust’ in our world of intelligent, interconnected systems built on secure, trustworthy, and reliable semiconductors.” The vulnerabilities of primary interest within the semiconductor world are counterfeit parts, tainted components, and malicious attacks (e.g., deliberate tampering or insertion of Trojan circuits).
COMMENT: How does “tainted” differ from “malicious attacks”? Need to clarify or merge the two together.

At a summary level, current design and manufacturing processes verify that a semiconductor does what it is supposed to do, but they do not answer the question “Does it do anything else?” The report identified the following areas of needed research:

“1. Functional specification of circuits and systems at the architecture level, including techniques that support formal reasoning and efficient verification.
2. Properties that, if specified and enforced, can provide assurance that a chip is secure when used in a particular application.
3. Strategies and techniques for ‘functional + security’ verification (i.e. provide a level of confidence that a design does what is desired, and nothing else), for example, including through use of properties identified above, within levels, in particular at the more abstract levels, prior to the Register Transfer Level (RTL).
4. Strategies and techniques for functional + security verification, including through use of properties identified above, at the transition between steps or levels.
5. Strategies and techniques for functional + security verification, including through use of properties identified above, between on-chip modules and at the interface with third-party intellectual property (IP).
6. Metrics/benchmarks for measuring ‘trustworthiness’. Such metrics are critical for assessing various strategies and analyzing cost/benefit.
7. Strategies that allow customers/users to nondestructively authenticate the provenance of a semiconductor at low cost. Ideally, such strategies should be based on features or functions that are ‘unclonable’ and not proprietary or sensitive.”

COMMENT (on item 6): This is good, but expand a bit more on cost / benefit.

More detail to follow on current practices in semiconductor design, manufacturing, and verification…

X.5 Secure Systems Engineering and the CMMI and Other Models

COMMENT: No comments in this section; it’s really detailed and somewhat boring in comparison to the preceding sections. Analysis of the information, rather than simply presenting the tables, might be more useful for readers.

The Capability Maturity Model® Integration (CMMI) is a process improvement model widely used by DoD, its suppliers, and other development organizations to develop and improve processes and capabilities. CMMI is also a framework for appraising an organization’s process maturity. CMMI has been released in three models, each addressing a different area:
- CMMI for Development [CMMI-DEV], for product and service development organizations
- CMMI for Services [CMMI-SVC], for service provider organizations
- CMMI for Acquisition [CMMI-ACQ], for organizations that acquire products and services

As this SOAR focuses on the state of the art in systems and software engineering organizations, we concentrate on CMMI-DEV. Prior to 2010, CMMI did not explicitly address security in the product development lifecycle. Version 1.3 of CMMI® for Development, released in 2010, addresses aspects of secure systems engineering. As described by [Konrad], “…the practices of CMMI were written for general applicability and for a broad range of acquiring, developing/testing, service delivery missions and environments. Each organization must interpret CMMI for its particular needs.” Thus attributes such as security should be treated as “…drivers to how CMMI practices are approached.” Although a detailed description of CMMI-DEV is beyond the scope of this SOAR, for readers familiar with the model we describe here how it addresses software and systems security.
Security is addressed explicitly in the informative material of CMMI-DEV in the process areas shown in Table X.

CMMI-DEV Process Area
  Process Area Reference: Security Recommendation within Process Area

CM – Configuration Management
  SG 2 Track and Control Changes | SP 2.2 Control Configuration Items: When changes are made to baselines, CM reviews should be performed to ensure that the changes have not compromised the security of the system.
IPM – Integrated Project Management
  SG 1 Use the Project’s Defined Process | SP 1.3 Establish the Project’s Work Environment: When planning, designing, and installing a work environment for the project, tradeoffs need to be made among quality attributes such as security, costs, and risks.
MA – Measurement and Analysis
  SG 1 Align Measurement and Analysis Activities | SP 1.2 Specify Measures: Examples of commonly used measures include information security measures, such as the number of system vulnerabilities identified and the percentage of system vulnerabilities mitigated.
OPD – Organizational Process Definition
  SG 1 Establish Organizational Process Assets | SP 1.6 Establish Work Environment Standards: Work environment standards and procedures should address the needs of all stakeholders and consider security among other factors in the work environment.
OPF – Organizational Process Focus
  SG 1 Determine Process Improvement Opportunities | SP 1.1 Establish Organizational Process Needs: Examples of candidate standards include ISO/IEC 27001:2005 Information technology – Security techniques – Information Security Management Systems – Requirements [ISO/IEC 2005] and the NDIA Engineering for System Assurance Guidebook [NDIA].
OT – Organizational Training
  SG 1 Establish an Organizational Training Capability | SP 1.2 Determine Which Training Needs Are the Responsibility of the Organization: Training needs include security training.
PI – Product Integration
  SG 3 Assemble Product Components and Deliver the Product | SP 3.4 Package and Deliver the Product: Satisfy requirements and standards for packaging and delivering the product, including security.
PMC – Project Monitoring and Control
  SG 1 Monitor the Project Against the Plan | SP 1.1 Monitor Project Planning Parameters: Monitor the security environment.
  SG 2 Manage Corrective Action to Closure | SP 2.1 Analyze Issues: Examples of possible issues to be collected and analyzed include data access, collection, privacy, and security issues.
PP – Project Planning
  SG 1 Establish Estimates | SP 1.2 Establish Estimates of Work Product and Task Attributes: Project estimates will be based on the technical approach of the project, which is affected by the quality attributes expected in the final products, such as security.
  SG 1 Establish Estimates | SP 1.4 Estimate Effort and Cost: Security items affecting the cost estimates could include the level of security required for tasks, work products, hardware, software, staff, and the work environment.
  SG 2 Develop a Project Plan | SP 2.3 Plan Data Management: Data in this context refers to deliverables and other forms of documentation. Example deliverables include security requirements and security procedures. Also included is establishing requirements and procedures to ensure privacy and the security of data.
RD – Requirements Development
  SG 2 Develop Product Requirements | SP 2.2 Allocate Product Component Requirements: Requirements include architecturally significant quality attributes such as security.
RSKM – Risk Management
  SG 1 Prepare for Risk Management | SP 1.1 Determine Risk Sources and Categories: A risk source may include security regulatory constraints.
SAM – Supplier Agreement Management
  SG 1 Establish Supplier Agreements | SP 1.2 Select Suppliers: If modified COTS products are being considered, consider security requirements.
TS – Technical Solution
  SG 2 Develop the Design | SP 2.1 Design the Product or Product Component: Quality attributes for which design criteria can be established include security.
  SG 2 Develop the Design | SP 2.2 Establish a Technical Data Package: Product architectures include abstract views related to issues or features within a product, including security.
  SG 2 Develop the Design | SP 2.3 Design Interfaces Using Criteria: Criteria for interfaces reflect critical product parameters that are often associated with security and other mission-critical characteristics.
  SG 3 Implement the Product Design | SP 3.2 Develop Product Support Documentation: Product support documentation includes installation, operation, and maintenance documentation. Documentation standards would include security classification markings.

Table X – CMMI-DEV Product Security Direct References

CMMI-DEV also addresses security indirectly through its references to quality attributes. [CMMI-DEV] states:
“Quality attributes address such things as product availability; maintainability; modifiability; timeliness, throughput, and responsiveness; reliability; security; and scalability.”
“Quality attributes are non-functional, such as timeliness, throughput, responsiveness, security, modifiability, reliability, and usability. They have a significant influence on the architecture.”
References to quality attributes within CMMI-DEV can therefore be read as proxies for any of these desired product attributes, including security. From that perspective, CMMI-DEV further addresses security in the areas described in Table Y. In Table Y, we have replaced CMMI-DEV references to quality attributes with an italicized “security” to highlight the indirect reference to security.

CMMI-DEV Process Area
  Process Area Reference: Security Recommendation within Process Area

CM – Configuration Management
  SG 3 Establish Integrity | SP 3.2 Perform Configuration Audits: Functional configuration audits (FCAs) are performed to verify that the development has achieved the desired product functional and security characteristics.
PI – Product Integration
  SG 1 Prepare for Product Integration | SP 1.3 Establish Product Integration Procedures and Criteria: Product integration criteria can be defined for the security behaviors the product is expected to have.
QPM – Quantitative Project Management
  SG 1 Prepare for Quantitative Management | SP 1.1 Establish the Project’s Objectives: Define and document measurable security performance objectives for the project.
  SG 1 Prepare for Quantitative Management | SP 1.3 Select Subprocesses and Attributes: In selecting attributes critical to evaluating performance, consider security measures.
RD – Requirements Development
  SG 1 Develop Customer Requirements | SP 1.1 Elicit Needs: An example of a technique to elicit needs is a security and other quality attribute elicitation workshop.
  SG 1 Develop Customer Requirements | SP 1.2 Transform Stakeholder Needs into Customer Requirements: Establish and maintain a prioritization of customer functional and security requirements.
  SG 2 Develop Product Requirements | SP 2.1 Establish Product and Product Component Requirements: Develop architectural requirements capturing the critical security attributes and security measures necessary for the product architecture and design.
  SG 3 Analyze and Validate Requirements | SP 3.1 Establish Operational Concepts and Scenarios: Include the security needs of the stakeholders in operational concepts and scenarios, including operations, installation, development, maintenance, support, and disposal of the product.
  SG 3 Analyze and Validate Requirements | SP 3.2 Establish a Definition of Required Functionality and Quality Attributes: Security requirements are an essential input to the design process. Determine architecturally significant security requirements based on key mission and business drivers. Consider partitioning requirements into groups based on established criteria, such as similar security requirements.
  SG 3 Analyze and Validate Requirements | SP 3.4 Analyze Requirements to Achieve Balance: Perform a risk assessment on the security requirements relative to other requirements. Assess the impact of the architecturally significant security requirements on the product and on product development costs and risks.
RSKM – Risk Management
  SG 1 Prepare for Risk Management | SP 1.1 Determine Risk Sources and Categories: An example risk source is competing security and other quality attribute requirements that affect the technical solution.
  SG 2 Identify and Analyze Risks | SP 2.1 Identify Risks: Security-related risks are examples of technical performance risks. Performance risks can include product behavior and operation with respect to functionality or security.
TS – Technical Solution
  SG 1 Select Product Component Solutions | SP 1.1 Develop Alternative Solutions and Selection Criteria: Alternative solutions are based on proposed product architectures that include critical product security requirements. The supplier of COTS products will need to meet product functionality and security requirements.
  SG 1 Select Product Component Solutions | SP 1.2 Select Product Component Solutions: Establish the functional and security requirements associated with the selected set of alternatives.
  SG 2 Develop the Design | SP 2.1 Design the Product or Product Component: Architecture definition tasks would include “selecting architectural patterns that support the functional and [security] requirements, and instantiating or composing those patterns to create the product architecture.”

Table Y – Security/Quality Attribute References within CMMI-DEV

Some controversy exists over whether CMMI has gone far enough in addressing security. As the [LinkedIn] discussion shows, some believe that, because CMMI is such an influential and widely used model, it could be more effective for cyber security if it were extended to address cyber security, software assurance, and Build Security In practices specifically (resulting in a Cyber CMMI). The framers of CMMI, by contrast, view CMMI as a generic model in which cyber security is a product requirement like other important requirements. In his presentation, Konrad indicated that “…more organizations ask for extensions to CMMI to address software assurance-related topics.” This may indicate that cyber security will be addressed more specifically in the future.

X.5.1 Security by Design with CMMI for Development, Version 1.3

Security by Design with CMMI for Development, Version 1.3 [Secure CMMI] is an application guide sponsored and developed by Siemens AG for CMMI-DEV V1.3. As an application guide, it supplements CMMI-DEV with additional process areas that address security across the entire product lifecycle. The authors created this application guide because they felt that “major guidance was missing” for security engineering within CMMI-DEV. Table 5-X highlights the new process areas and practices of this supplement.
Security Process Area
  Purpose; Related CMMI-DEV Process Areas; Specific Goals/Practices

Organizational Preparedness for Secure Development
  Purpose: To establish and maintain capabilities to develop secure products and react to reported vulnerabilities.
  Related CMMI-DEV process areas: Organizational Process Definition; Organizational Process Focus; Organizational Training
  Specific goals/practices:
    SG 1 Establish an Organizational Capability to Develop Secure Products
      SP 1.1 Obtain Management Commitment and Sponsorship for Security and Security Business Objectives
      SP 1.2 Establish Standard Processes and Other Process Assets for Secure Development
      SP 1.3 Establish Awareness, Knowledge, and Skills for Product Security
      SP 1.4 Establish Secure Work Environment Standards
      SP 1.5 Establish Vulnerability Handling
Security Management in Projects
  Purpose: To establish, identify, plan, and manage security-related activities across the project lifecycle and to manage product security risks.
  Related CMMI-DEV process areas: Integrated Project Management; Project Planning; Supplier Agreement Management
  Specific goals/practices:
    SG 1 Prepare and Manage Project Activities for Security
      SP 1.1 Establish the Integrated Project Plan for Security Projects
      SP 1.2 Plan and Deliver Security Training
      SP 1.3 Select Secure Supplier and Third Party Components
      SP 1.4 Identify Underlying Causes of Vulnerabilities
    SG 2 Manage Product Security Risks
      SP 2.1 Establish Product Security Risk Management Plan
      SP 2.2 Perform Product Security Risk Assessment
      SP 2.3 Plan Risk Mitigation for Product Security
Security Requirements and Technical Solution
  Purpose: To establish security requirements and a secure design and to ensure the implementation of a secure product.
  Related CMMI-DEV process areas: Requirements Development; Technical Solution
  Specific goals/practices:
    SG 1 Develop Customer Security Requirements and Secure Architecture and Design
      SP 1.1 Develop Customer Security Requirements
      SP 1.2 Design the Product According to Secure Architecture and Security Design Principles
      SP 1.3 Select Appropriate Technologies Using Security Criteria
      SP 1.4 Establish Standards for Secure Product Configuration
    SG 2 Implement the Secure Design
      SP 2.1 Use Security Standards for Implementation
      SP 2.2 Add Security to the Product Support Documentation
Security Verification and Validation
  Purpose: To ensure that selected work products meet their specified security requirements and to demonstrate that the product or product component fulfills the security expectations when placed in its intended operational environment.
  Related CMMI-DEV process areas: Validation; Verification
  Specific goals/practices:
    SG 1 Perform Security Verification
      SP 1.1 Prepare for Security Verification
      SP 1.2 Perform Security Verification
    SG 2 Perform Security Validation
      SP 2.1 Prepare for Security Validation
      SP 2.2 Perform Security Validation

Table 5-X – Summary of Practices for Security by Design for CMMI

X.6 Related Systems and Software Security Engineering Standards, Policies, Best Practices, and Guides

COMMENT: This section should be organized in some manner, for example by assurance, engineering, and other, or by organization. The list also needs to be cleaned up; ISO/IEC 27001 / 27000 shows up 3 times.
CMMI
- Department of Homeland Security. Assurance Focus for CMMI (Summary of Assurance for CMMI Efforts), 2009. https://buildsecurityin.us-cert.gov/swa/proself_assm.html
- Department of Defense and Department of Homeland Security. Software Assurance in Acquisition: Mitigating Risks to the Enterprise, 2008. https://buildsecurityin.us-cert.gov/swa/downloads/SwA_in_Acquisition_102208.pdf
- International Organization for Standardization and International Electrotechnical Commission. ISO/IEC 27001 Information Technology – Security Techniques – Information Security Management Systems – Requirements, 2005. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=42103
- ISO/IEC 12207:2008 Systems and Software Engineering – Software Life Cycle Processes [ISO 2008a]
- ISO/IEC 15288:2008 Systems and Software Engineering – System Life Cycle Processes [ISO 2008b]
- ISO/IEC 27001:2005 Information technology – Security techniques – Information Security Management Systems – Requirements [ISO/IEC 2005], a standard used for implementing an information security management system (ISMS) within an organization
- ISO/IEC 14764:2006 Software Engineering – Software Life Cycle Processes – Maintenance [ISO 2006b]
- ISO/IEC 20000 Information Technology – Service Management [ISO 2005b]
- Assurance Focus for CMMI [DHS 2009], https://buildsecurityin.us-cert.gov/swa/downloads/Assurance_for_CMMI_Pilot_version_March_2009.pdf
- Resilience Management Model [SEI 2010c], http://www.cert.org/resilience/rmm.html
- IEC 61508, Functional Safety of Electrical/Electronic/Programmable Electronic Safety-related Systems
- ISO/IEC 27001 Information Security Management System (ISMS)
- ISO 9001 – Quality Management
- IA Controls (NIST SP 800-53, DoD 8500.02) and C&A Methodologies (NIST SP 800-37, DIACAP)
- ISO/IEC 15408, Common Criteria for IT Security Evaluation
- ISO/IEC 15443, Information technology – Security techniques – A framework for IT security assurance (FRITSA)
- ISO/IEC 21827, System Security Engineering Capability Maturity Model (SSE-CMM) revision
- ISO/IEC 27000 series – Information Security Management System (ISMS)

X.7 Supply Chain Risk Management

TBD… An untrustworthy supply chain can lead to loss of assurance.

References for Section

[Anderson] Anderson, R., “Security Engineering: A Guide to Building Dependable Distributed Systems,” Wiley, 2008, ISBN 978-0-470-06852-6.
[Axelrod] Axelrod, C.W., “Trading Security and Safety Risks within Systems of Systems,” INCOSE Insight, Vol. 14, Issue 2, July 2011, pp. 26–30.
[Baldwin] Baldwin, K., Dahmann, J., and Goodnight, J., “Systems of Systems and Security: A Defense Perspective,” INCOSE Insight, Vol. 14, Issue 2, July 2011.
[CMMI-ACQ] Software Engineering Institute, “CMMI for Acquisition, Version 1.3,” CMU/SEI-2010-TR-032, 2010, http://www.sei.cmu.edu/library/abstracts/reports/10tr032.cfm
[CMMI-DEV] Software Engineering Institute, “CMMI for Development, Version 1.3,” CMU/SEI-2010-TR-033, 2010, http://www.sei.cmu.edu/reports/10tr033.pdf
[CMMI-SVC] Software Engineering Institute, “CMMI for Services, Version 1.3,” CMU/SEI-2010-TR-034, 2010, http://www.sei.cmu.edu/reports/10tr034.pdf
[Forsberg] Forsberg, K. and Mooz, H., “The Relationship of System Engineering to the Project Cycle,” Proceedings of the First Annual Symposium of the National Council on System Engineering, October 1991, pp. 57–65.
[INCOSE 2012] International Council on Systems Engineering (INCOSE), “Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities,” version 3.2.2, INCOSE-TP-2003-002-03.2.2, San Diego, CA, USA, 2012.
[ISO/IEC 15026-2] IEEE Standard – Adoption of ISO/IEC 15026-2:2011, “Systems and Software Engineering – Systems and Software Assurance – Part 2: Assurance Case.”
[Konrad] https://buildsecurityin.us-cert.gov/sites/default/files/Konrad-SwAInCMMI.pdf
[LinkedIn] LinkedIn discussion, http://www.linkedin.com/groups/Cyber-Security-CMMI54046.S.200859335?trk=group_search_item_list-0-b-ttl&goback=.gna_54046 (accessed 10 May 2013).
[MIL-HDBK-1785] MIL-HDBK-1785, “Department of Defense Handbook: System Security Engineering Program Management Requirements,” 1 August 1995.
[NDIA] NDIA System Assurance Committee, “Engineering for System Assurance,” NDIA, Arlington, VA, 2008, http://www.ndia.org/Divisions/Divisions/SystemsEngineering/Documents/Studies/SA-Guidebook-v1-Oct2008REV.pdf
[Secure CMMI] Siemens AG Corporate Technology, “Security by Design with CMMI for Development, Version 1.3: An Application Guide for Improving Processes for Secure Products,” Technical Note Clearmodel 2013TN-01, May 2013.
[SoS Sys Eng] US Department of Defense, “Systems Engineering Guide for Systems of Systems,” Washington, DC, 2008.
[SRC] Semiconductor Research Corporation, “Research Needs for Secure, Trustworthy, and Reliable Semiconductors,” January 2013, http://www.src.org/calendar/e004965/sa-ts-workshop-report-final.pdf