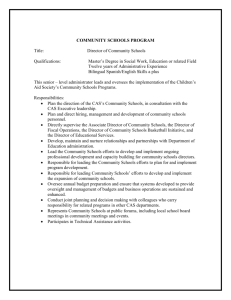

IT-502 Information Storage & Management Exam Paper


Department of Information Technology
IT-Vth SEM
Information Storage and Mgt. (IT-502)
PUT-TEST
Time: 2:30 Hrs.    M. Marks: 20
Note: Attempt any one question from each unit.

UNIT-I
Q.1 What do you mean by Storage Technology?
Q.2 Discuss the characteristics of developing an ILM strategy. Also list its advantages.

UNIT-II
Q.3 Explain the architecture of intelligent disk subsystems.
Q.4 What are the various factors that affect the performance of disk drives?

UNIT-III
Q.5 Discuss the various forms of network storage used in ISM.
Q.6 Explain the CAS protocol.

UNIT-IV
Q.7 What are the disaster recovery principles required to protect the essential data of an organization?
Q.8 Discuss the key components required to manage a network in SNMP.

UNIT-V
Q.9 What is cloud computing?
Q.10 Discuss the types of techniques used by the RAID model.

Information Storage and Mgt. (IT-502)
II-MIDTERM TEST: SOLUTIONS

SOLUTION 1
Evolution of Storage Technology and Architecture
The evolution of open systems, and the affordability and ease of deployment they offer, made it possible for business units and departments to have their own servers and storage. In early implementations of open systems, storage was typically internal to the server. Originally, there were very limited policies and processes for managing these servers and the data they created. To overcome these challenges, storage technology evolved from non-intelligent internal storage to intelligent networked storage. Highlights of this evolution include:

Redundant Array of Independent Disks (RAID): This technology was developed to address the cost, performance, and availability requirements of data. It continues to evolve today and is used in all storage architectures, such as DAS and SAN.

Direct-attached storage (DAS): This type of storage connects directly to a server (host) or a group of servers in a cluster. Storage can be either internal or external to the server.
External DAS alleviated the challenges of limited internal storage capacity.

Storage area network (SAN): This is a dedicated, high-performance Fibre Channel (FC) network that facilitates block-level communication between servers and storage. Storage is partitioned and assigned to a server for accessing its data. SAN offers scalability, availability, performance, and cost benefits compared to DAS.

Network-attached storage (NAS): This is dedicated storage for file-serving applications. Unlike a SAN, it connects to an existing communication network (LAN) and provides file access to heterogeneous clients. Because it is purpose-built for providing storage to file server applications, it offers higher scalability, availability, performance, and cost benefits than general-purpose file servers.

Internet Protocol SAN (IP-SAN): One of the latest evolutions in storage architecture, IP-SAN is a convergence of technologies used in SAN and NAS. IP-SAN provides block-level communication across a local or wide area network (LAN or WAN), resulting in greater consolidation and availability of data.

Storage technology and architecture continue to evolve, enabling organizations to consolidate, protect, optimize, and leverage their data to achieve the highest return on information assets.

Information Lifecycle
The information lifecycle is the “change in the value of information” over time. When data is first created, it often has the highest value and is used frequently. As data ages, it is accessed less frequently and is of less value to the organization. Understanding the information lifecycle helps in deploying appropriate storage infrastructure according to the changing value of information. For example, in a sales order application, the value of the information changes from the time the order is placed until the time the warranty becomes void (see Figure 1-7).
The value of the information is highest when a company receives a new sales order and processes it to deliver the product. After order fulfilment, the customer or order data need not be available for real-time access. The company can transfer this data to less expensive secondary storage with lower accessibility and availability requirements until a warranty claim or another event triggers a need for it. After the warranty becomes void, the company can archive or dispose of the data to create space for other high-value information.

Figure: Changing value of sales order information

Information Lifecycle Management
Today’s businesses require data to be protected and available 24 × 7. Data centres can accomplish this with optimal and appropriate use of storage infrastructure. An effective information management policy is required to support this infrastructure and leverage its benefits. Information lifecycle management (ILM) is a proactive strategy that enables an IT organization to manage data effectively throughout its lifecycle, based on predefined business policies. This allows an IT organization to optimize the storage infrastructure for maximum return on investment.

SOLUTION 2
An ILM strategy should have the following characteristics:
Business-centric: It should be integrated with the key processes, applications, and initiatives of the business to meet both current and future growth in information.
Centrally managed: All the information assets of a business should be under the purview of the ILM strategy.
Policy-based: The implementation of ILM should not be restricted to a few departments. ILM should be implemented as a policy and encompass all business applications, processes, and resources.
Heterogeneous: An ILM strategy should take into account all types of storage platforms and operating systems.
Optimized: Because the value of information varies, an ILM strategy should consider the different storage requirements and allocate storage resources based on the information’s value to the business.

Implementing an ILM strategy has the following key benefits, which directly address the challenges of information management:
Improved utilization, by using tiered storage platforms and increasing the visibility of all enterprise information.
Simplified management, by integrating process steps and interfaces with individual tools and by increasing automation.
A wider range of options for backup and recovery, to balance the need for business continuity.
Maintaining compliance, by knowing what data needs to be protected and for how long.
Lower Total Cost of Ownership (TCO), by aligning infrastructure and management costs with information value. As a result, resources are not wasted, and complexity is not introduced by managing low-value data at the expense of high-value data.

SOLUTION 3
Disk Drive Components
A disk drive uses a rapidly moving arm to read and write data across a flat platter coated with magnetic particles. Data is transferred from the magnetic platter through the R/W head to the computer. Several platters assembled together with the R/W head and controller make up what is most commonly referred to as a hard disk drive (HDD). Data can be recorded and erased on a magnetic disk any number of times. This section details the different components of the disk, the mechanism for organizing and storing data on disks, and the factors that affect disk performance. The key components of a disk drive are the platter, spindle, read/write head, actuator arm assembly, and controller.

Figure: Disk Drive Components

Platter
A typical HDD consists of one or more flat circular disks called platters (Figure 2-3). The data is recorded on these platters in binary code (0s and 1s). The set of rotating platters is sealed in a case called a Head Disk Assembly (HDA).
A platter is a rigid, round disk coated with magnetic material on both surfaces (top and bottom). The data is encoded by polarizing the magnetic areas, or domains, of the disk surface. Data can be written to or read from both surfaces of the platter. The number of platters and the storage capacity of each platter determine the total capacity of the drive.

Figure: Spindle and platter

Spindle
A spindle connects all the platters, as shown in the figure, and is connected to a motor. The spindle motor rotates at a constant speed, spinning the platters at several thousand revolutions per minute (rpm); common spindle speeds are 7,200 rpm, 10,000 rpm, and 15,000 rpm. Disks used on current storage systems have a platter diameter of 3.5” (90 mm). When such a platter spins at 15,000 rpm, its outer edge moves at roughly 70 m/s, about one-fifth of the speed of sound. Platter speeds have increased with improvements in technology, although the extent to which they can be increased is limited.

Read/Write Head
Read/write (R/W) heads read data from and write data to a platter. Drives have two R/W heads per platter, one for each surface. When writing data, the R/W head changes the magnetic polarization on the surface of the platter; when reading, it detects that polarization. During reads and writes, the head never touches the surface of the platter. When the spindle is rotating, there is a microscopic air gap between the R/W heads and the platters, known as the head flying height. This air gap disappears when the spindle stops rotating and the R/W head rests on a special area of the platter near the spindle, called the landing zone. The landing zone is coated with a lubricant to reduce friction between the head and the platter. The logic on the disk drive ensures that the heads are moved to the landing zone before they touch the surface.
If the drive malfunctions and the R/W head accidentally touches the surface of the platter outside the landing zone, a head crash occurs. In a head crash, the magnetic coating on the platter is scratched, and the R/W head may also be damaged. A head crash generally results in data loss.

Figure: Actuator arm assembly

Actuator Arm Assembly
The R/W heads are mounted on the actuator arm assembly, which positions the R/W head at the location on the platter where data needs to be written or read. The R/W heads for all platters on a drive are attached to one actuator arm assembly and move across the platters simultaneously.

Controller
The controller is a printed circuit board mounted at the bottom of a disk drive. It consists of a microprocessor, internal memory, circuitry, and firmware. The firmware controls power to the spindle motor and the speed of the motor. It also manages communication between the drive and the host. In addition, it controls R/W operations by moving the actuator arm and switching between different R/W heads, and it optimizes data access.

Physical Disk Structure
Data on the disk is recorded on tracks, which are concentric rings on the platter around the spindle. The tracks are numbered, starting from zero, from the outer edge of the platter. The number of tracks per inch (TPI) on the platter, or the track density, measures how tightly the tracks are packed. Each track is divided into smaller units called sectors. A sector is the smallest individually addressable unit of storage. The track and sector structure is written on the platter by the drive manufacturer using a formatting operation. The number of sectors per track varies according to the specific drive. The first personal computer disks had 17 sectors per track; recent disks have a much larger number of sectors on a single track.

SOLUTION 4
A disk drive is an electromechanical device that governs the overall performance of the storage system environment.
The various factors that affect the performance of disk drives are discussed below.

Disk Service Time
Disk service time is the time taken by a disk to complete an I/O request. The components that contribute to service time on a disk drive are seek time, rotational latency, and data transfer rate.

Seek Time
The seek time (also called access time) is the time taken to position the R/W heads across the platter with a radial movement (moving along the radius of the platter). In other words, it is the time taken to reposition and settle the arm and the head over the correct track. The lower the seek time, the faster the I/O operation. Disk vendors publish the following seek time specifications:
Full Stroke: The time taken by the R/W head to move across the entire width of the disk, from the innermost track to the outermost track.
Average: The average time taken by the R/W head to move from one random track to another, normally listed as the time for one-third of a full stroke.
Track-to-Track: The time taken by the R/W head to move between adjacent tracks.
Each of these specifications is measured in milliseconds. The average seek time on a modern disk is typically in the range of 3 to 15 milliseconds. Seek time has more impact on reads of random tracks than of adjacent tracks. To minimize seek time, data can be written to only a subset of the available cylinders. This results in lower usable capacity than the actual capacity of the drive. For example, a 500 GB disk drive set up to use only the first 40 percent of its cylinders is effectively treated as a 200 GB drive. This is known as short-stroking the drive.

Rotational Latency
To access data, the actuator arm moves the R/W head over the platter to a particular track while the platter spins to position the requested sector under the R/W head. The time taken by the platter to rotate and position the data under the R/W head is called rotational latency.
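The service-time components described above can be combined into a rough estimate. The sketch below is a simplified model, not a vendor formula: it assumes service time is the sum of average seek time, average rotational latency (taken as half of one revolution, that is 60/rpm/2 seconds), and transfer time (I/O size divided by transfer rate). The function names and the sample seek time, I/O size, and transfer rate are hypothetical.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one full revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2


def transfer_time_ms(io_size_kb: float, transfer_rate_mb_s: float) -> float:
    """Time to move one I/O of io_size_kb at transfer_rate_mb_s (1 MB taken as 1,024 KB)."""
    return io_size_kb / (transfer_rate_mb_s * 1024) * 1000


def disk_service_time_ms(avg_seek_ms: float, rpm: float,
                         io_size_kb: float, transfer_rate_mb_s: float) -> float:
    """Simplified model: service time = seek time + rotational latency + transfer time."""
    return (avg_seek_ms
            + avg_rotational_latency_ms(rpm)
            + transfer_time_ms(io_size_kb, transfer_rate_mb_s))


if __name__ == "__main__":
    for rpm in (5_400, 7_200, 15_000):
        print(f"{rpm:>6} rpm: average rotational latency = {avg_rotational_latency_ms(rpm):.2f} ms")
    # Hypothetical drive: 5 ms average seek, 15,000 rpm, 4 KB I/O, 40 MB/s transfer rate.
    t = disk_service_time_ms(avg_seek_ms=5, rpm=15_000, io_size_kb=4, transfer_rate_mb_s=40)
    print(f"estimated service time = {t:.2f} ms")
```

For 5,400 rpm and 15,000 rpm the computed latencies are about 5.56 ms and 2.00 ms, in line with the rotational latency figures given for those speeds. With the sample inputs, seek time and rotational latency account for almost all of the roughly 7.1 ms service time, while moving 4 KB of data takes under 0.1 ms, which is why small random I/Os are dominated by mechanical delays.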
Rotational latency depends on the rotation speed of the spindle and is measured in milliseconds. The average rotational latency is one-half of the time taken for a full rotation. Like seek time, rotational latency has more impact on reading and writing random sectors than on the same operations on adjacent sectors. Average rotational latency is around 5.5 ms for a 5,400-rpm drive and around 2.0 ms for a 15,000-rpm drive.

Data Transfer Rate
The data transfer rate (also called transfer rate) is the average amount of data per unit time that the drive can deliver to the HBA. To calculate data transfer rates, it is important to first understand the process of read and write operations. In a read operation, the data first moves from the disk platters to the R/W heads, and then to the drive’s internal buffer. Finally, the data moves from the buffer through the interface to the host HBA. In a write operation, the data moves from the HBA to the internal buffer of the disk drive through the drive’s interface. The data then moves from the buffer to the R/W heads and, finally, from the R/W heads to the platters.

Data transfer rates during R/W operations are measured in terms of internal and external transfer rates. The internal transfer rate is the speed at which data moves from a single track of a platter’s surface to the internal buffer (cache) of the disk; it takes into account factors such as seek time. The external transfer rate is the rate at which data can be moved through the interface to the HBA. It is generally the advertised speed of the interface, such as 133 MB/s for ATA. The sustained external transfer rate is lower than the interface speed.

SOLUTION 5
DAS: The most common form of server storage today is still direct-attached storage. The disks may be internal to the server, or they may be in an array that is connected directly to the server.
Either way, the storage can be accessed only through that server. An application server has its own storage, the next application server has its own storage, and the file and print servers each have their own storage. Backups must be performed either on each individual server with a dedicated tape drive or across the LAN to a shared tape device, consuming a significant amount of bandwidth. Storage can only be added by taking down the application server, adding physical disks, and rebuilding the storage array. When a server is upgraded, its data must be migrated to the new server. In small installations this setup can work well, but it becomes much more difficult to manage as the number of servers increases. Backups become more challenging, and because storage is not shared, utilization is typically very low on some servers while others overflow. Disk storage is physically added to a server based on the predicted needs of the application. If that application is underutilized, capital has been unnecessarily tied up. If the application runs out of storage, it must be taken down and rebuilt after more disk storage has been added. In the case of file services, the typical response to a full server is to add another file server. This adds more storage, but all the clients must now be reconfigured to point to the new network location, adding complexity on the client side. Additional cost in the form of Client Access Licenses (CALs) must also be taken into account.

NAS: NAS challenges the traditional file server approach by creating systems designed specifically for data storage. Instead of starting with a general-purpose computer and configuring or removing features from that base, NAS designs begin with the bare-bones components necessary to support file transfers and add features "from the bottom up." Like traditional file servers, NAS follows a client/server design.
A single hardware device, often called the NAS box or NAS head, acts as the interface between the NAS and network clients. These NAS devices require no monitor, keyboard, or mouse. They generally run an embedded operating system rather than a full-featured network operating system (NOS). One or more disk (and possibly tape) drives can be attached to many NAS systems to increase total capacity. Clients always connect to the NAS head, however, rather than to the individual storage devices. Clients generally access a NAS over an Ethernet connection, and the NAS appears on the network as a single "node" identified by the IP address of the head device.

A NAS can store any data that appears in the form of files, such as email boxes, Web content, remote system backups, and so on. Overall, the uses of a NAS parallel those of traditional file servers. NAS systems strive for reliable operation and easy administration. They often include built-in features such as disk space quotas, secure authentication, or the automatic sending of email alerts should an error be detected.

A NAS appliance is a simplified form of file server; it is optimized for file sharing in an organization. Authorized clients can see folders and files on the NAS device just as they can on their local hard drive. NAS appliances are so called because they have all of the required software preloaded and are easy to install and simple to use. Installation consists of rack mounting, connecting power and Ethernet, and configuring via a simple browser-based tool, and is typically completed in less than half an hour. NAS devices are frequently used to consolidate file services: to prevent the proliferation of file servers, a single NAS appliance can replace many regular file servers, simplifying management and reducing cost and workload for the systems administrator. NAS appliances are also multiprotocol, which means that they can share files among clients using Windows® and UNIX®-based operating systems.
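The practical difference between the file-level access a NAS offers and the block-level access of a SAN can be sketched in a few lines of Python. This is an illustration only: an ordinary temporary file stands in for both the NAS share and the SAN volume, the 512-byte block size is an assumption, and the helper names are hypothetical.

```python
import os
import tempfile

BLOCK_SIZE = 512  # assumed block size in bytes


def read_file_level(path: str) -> bytes:
    """NAS-style access: the client names a file; the NAS head's file system locates its blocks."""
    with open(path, "rb") as f:
        return f.read()


def read_block_level(volume_path: str, lba: int, count: int = 1) -> bytes:
    """SAN-style access: the host requests raw blocks by logical block address (LBA).
    Any file system on the volume is maintained by the host, not by the storage."""
    with open(volume_path, "rb") as volume:
        volume.seek(lba * BLOCK_SIZE)
        return volume.read(count * BLOCK_SIZE)


if __name__ == "__main__":
    # Simulate a small "volume" of 4 blocks; block 2 holds a recognizable marker.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(bytes(BLOCK_SIZE * 2) + b"HELLO".ljust(BLOCK_SIZE, b"\0") + bytes(BLOCK_SIZE))
        path = tmp.name
    try:
        whole = read_file_level(path)           # file-level: one request by name
        block = read_block_level(path, lba=2)   # block-level: one request by address
        print(len(whole), block[:5])
    finally:
        os.remove(path)
```

The design point is who owns the file system: with file-level access it lives on the NAS head, while with block-level access the server interprets the blocks itself.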
SAN: A SAN allows more than one application server to share storage. Data is stored at the block level and is therefore accessed through an application server rather than directly by clients. The physical elements of the SAN (servers, switches, storage arrays, etc.) are typically connected with Fibre Channel, an interconnect technology that permits high-performance resource sharing. Backups can be performed centrally and can be more easily managed to avoid interrupting the applications.

The primary advantages of a SAN are its scalability and flexibility. Storage can be added without disrupting the applications, and different types of storage can be added to the pool. With the advent of storage area networks, adding storage capacity has become simpler for systems administrators, because it is no longer necessary to bring down the application server. Additional storage is simply added to the SAN, and the new storage can then be configured and made immediately available to the applications that need it. Upgrading the application server is also simplified: the data can remain on the disk arrays, and the new server just needs to point to the appropriate data set. Backups can be centralized, reducing workload and providing greater assurance that the backups are complete. The time taken for backups is dramatically reduced because the backup is performed over the high-speed SAN, and no backup traffic impacts users on the LAN.

However, implementing a SAN can be quite daunting, given the cost and complexity of Fibre Channel infrastructure components. For this reason, SAN installations have primarily been confined to large organizations with dedicated storage management resources. The last few years have seen the emergence of iSCSI (SCSI over IP, the Internet Protocol) as a new interconnect for a SAN. iSCSI is a lower-cost alternative to Fibre Channel SAN infrastructure and is an ideal solution for many small and medium-sized businesses.
Essentially all of the capabilities of an FC-SAN are provided, but the interconnect is Ethernet cable and the switches are Gigabit Ethernet, the same low-cost technology used today for the LAN. The tradeoff is slightly lower performance, which most businesses will not notice. iSCSI also provides a simple migration path for businesses to adopt more comprehensive data storage management technologies without the need for a “fork-lift” upgrade.

SOLUTION 6
The Central Authentication Service (CAS) is a single sign-on protocol for the web. Its purpose is to permit a user to access multiple applications while providing their credentials (such as user ID and password) only once. It also allows web applications to authenticate users without gaining access to a user's security credentials, such as a password. The name CAS also refers to a software package that implements this protocol.

The CAS protocol involves at least three parties: a client web browser, the web application requesting authentication, and the CAS server. It may also involve a back-end service, such as a database server, that does not have its own HTTP interface but communicates with a web application.

When the client visits an application that requires authentication, the application redirects it to CAS. CAS validates the client's authenticity, usually by checking a username and password against a credential store (such as Kerberos or Active Directory). If the authentication succeeds, CAS returns the client to the application, passing along a security ticket. The application then validates the ticket by contacting CAS over a secure connection and providing its own service identifier and the ticket. CAS then gives the application trusted information about whether a particular user has successfully authenticated.

CAS allows multi-tier authentication via proxy address. A cooperating back-end service, such as a database or mail server, can participate in CAS, validating the authenticity of users via information it receives from web applications. Thus, a webmail client and a webmail server can both implement CAS.
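The redirect-and-validate flow above can be sketched from the web application's side. The sketch follows the CAS 2.0 protocol as published by the Apereo CAS project: the application redirects the browser to the server's /login endpoint with a service parameter, receives a service ticket in a ticket query parameter, and validates it at /serviceValidate, which answers with an XML document. The server URL, service URL, and ticket value are placeholders, no network call is made, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional
from urllib.parse import urlencode

CAS_NS = {"cas": "http://www.yale.edu/tp/cas"}  # XML namespace used in CAS 2.0 responses


def login_redirect_url(cas_base: str, service_url: str) -> str:
    """Step 1: where the application sends an unauthenticated browser."""
    return f"{cas_base}/login?" + urlencode({"service": service_url})


def validate_url(cas_base: str, service_url: str, ticket: str) -> str:
    """Step 3: back-channel request the application makes to check the ticket it received."""
    return f"{cas_base}/serviceValidate?" + urlencode({"service": service_url, "ticket": ticket})


def parse_validation_response(xml_text: str) -> Optional[str]:
    """Step 4: return the authenticated username, or None if CAS rejected the ticket."""
    root = ET.fromstring(xml_text)
    user = root.find("cas:authenticationSuccess/cas:user", CAS_NS)
    return user.text.strip() if user is not None and user.text else None


if __name__ == "__main__":
    cas = "https://cas.example.edu/cas"   # placeholder CAS server
    app = "https://app.example.edu/home"  # placeholder service identifier
    print(login_redirect_url(cas, app))
    # Step 2 happens in the browser: CAS authenticates the user and redirects back to
    # the service URL with ?ticket=ST-... appended (the ticket below is made up).
    print(validate_url(cas, app, "ST-12345-abcdef"))
    sample = """<cas:serviceResponse xmlns:cas="http://www.yale.edu/tp/cas">
      <cas:authenticationSuccess><cas:user>alice</cas:user></cas:authenticationSuccess>
    </cas:serviceResponse>"""
    print(parse_validation_response(sample))  # -> alice
```

The application never sees the user's password: it only forwards the opaque ticket to CAS and trusts the username CAS returns over the back channel.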
SOLUTION 7
Disaster Recovery Principles for Any Organization
Disaster recovery is becoming increasingly important for businesses aware of the threat of both man-made and natural disasters. Having a disaster recovery plan will not only protect your organization’s essential data from destruction; it will also help you refine your business processes and enable your business to recover its operations in the event of a disaster. Though each organization has unique knowledge and assets to maintain, general principles can be applied to disaster recovery. The following planning guidelines can help your organization move forward with an IT disaster recovery project.

Restoration and recovery procedures
Imagine that a disaster has occurred. You have the data; now what should you do with it? Without restoration and recovery procedures, your data will not be nearly as useful to you. With the data in hand, you need to be able to re-create your entire business on brand-new systems, so you will need procedures for rebuilding systems and networks. System recovery and restoration procedures are typically best written by the people who currently administer and maintain the systems. Each system should have recovery procedures that indicate which versions of software and patches should be installed on which types of hardware platforms. It is also important to indicate which configuration files should be restored into which directories. A good procedure will include low-level execution instructions, such as which commands to type and in what order.

Backups are key
As an IT or network administrator, you need to bring all your key data, processes, and procedures together through a backup system that is reliable and easy to replicate. Your IT director's most important job is to ensure that all systems are being backed up on a reliable schedule. This process, though it seems obvious, is often neglected.
Assigning backup responsibilities to an administrator is not enough. The IT department needs a written schedule that describes which systems get backed up when, and whether the backups are full or incremental. You also need to have the backup process fully documented. Next, test your backup process to make sure it works: Can you restore lost databases? Can you restore lost source code? Can you restore key system files? Finally, store your backup media off-site, preferably in a location at least 50 miles from your present office. Numerous off-site storage vendors offer safe media storage; Iron Mountain is one example. Even if you use an off-site storage vendor, it does not hurt to send your weekly backup media to another of your field offices, if you have one.

SOLUTION 8
SNMP: Simple Network Management Protocol (SNMP) is an "Internet-standard protocol for managing devices on IP networks". Devices that typically support SNMP include routers, switches, servers, workstations, printers, modem racks, and more. It is used mostly in network management systems to monitor network-attached devices for conditions that warrant administrative attention. SNMP is a component of the Internet Protocol Suite as defined by the Internet Engineering Task Force (IETF). It consists of a set of standards for network management, including an application layer protocol, a database schema, and a set of data objects. SNMP exposes management data in the form of variables on the managed systems, which describe the system configuration. These variables can then be queried (and sometimes set) by managing applications.

An SNMP-managed network consists of three key components:
Managed device
Agent: software that runs on managed devices
Network management system (NMS): software that runs on the manager
A managed device is a network node that implements an SNMP interface that allows unidirectional (read-only) or bidirectional access to node-specific information.
Managed devices exchange node-specific information with the NMSs. Sometimes called network elements, managed devices can be any type of device, including, but not limited to, routers, access servers, switches, bridges, hubs, IP telephones, IP video cameras, computer hosts, and printers.
An agent is a network-management software module that resides on a managed device. An agent has local knowledge of management information and translates that information to or from an SNMP-specific form.
A network management system (NMS) executes applications that monitor and control managed devices. NMSs provide the bulk of the processing and memory resources required for network management. One or more NMSs may exist on any managed network.

SMI-S: SMI-S, or the Storage Management Initiative Specification, is a storage standard developed and maintained by the Storage Networking Industry Association (SNIA). It has also been ratified as an ISO standard. SMI-S is based on the Common Information Model (CIM) and the Web-Based Enterprise Management (WBEM) standards defined by the Distributed Management Task Force (DMTF), which define management functionality via HTTP. The most recent approved version of SMI-S is available from SNIA. The main objective of SMI-S is to enable broad, interoperable management of heterogeneous storage vendor systems. At the time of writing, the current version was SMI-S V1.6.0, and over 75 software products and over 800 hardware products were certified as conformant to SMI-S.
At a very basic level, SMI-S entities are divided into two categories:
Clients are management software applications that can reside virtually anywhere within a network, provided they have a communications link (either within the data path or outside the data path) to providers.
Servers are the devices under management. Servers can be disk arrays, virtualization engines, host bus adapters, switches, tape drives, etc.
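The SNMP manager/agent split described in this solution can be made concrete with a toy in-process model. It is not an SNMP implementation: there is no UDP transport, BER encoding, or community/USM security, and the class and method names are hypothetical. It only shows the roles: the agent holds management variables keyed by OID, and the NMS issues GET and SET requests against many agents. The two OIDs used (sysDescr.0 and sysName.0 from the standard MIB-II system group) are real; their values are made up.

```python
from dataclasses import dataclass, field


class SnmpError(Exception):
    """Raised for an unknown OID or a write to a read-only variable."""


@dataclass
class Agent:
    """Runs on the managed device: exposes local management variables keyed by OID."""
    variables: dict = field(default_factory=dict)  # OID -> value
    writable: set = field(default_factory=set)     # OIDs that accept SET (read-write access)

    def handle_get(self, oid: str):
        if oid not in self.variables:
            raise SnmpError(f"noSuchObject: {oid}")
        return self.variables[oid]

    def handle_set(self, oid: str, value) -> None:
        if oid not in self.writable:
            raise SnmpError(f"notWritable: {oid}")
        self.variables[oid] = value


@dataclass
class NetworkManagementSystem:
    """Runs on the manager: polls (and occasionally configures) many managed devices."""
    devices: dict = field(default_factory=dict)    # device name -> Agent

    def poll(self, oid: str) -> dict:
        return {name: agent.handle_get(oid) for name, agent in self.devices.items()}

    def configure(self, device: str, oid: str, value) -> None:
        self.devices[device].handle_set(oid, value)


SYS_DESCR = "1.3.6.1.2.1.1.1.0"  # sysDescr.0 (read-only)
SYS_NAME = "1.3.6.1.2.1.1.5.0"   # sysName.0 (read-write)

if __name__ == "__main__":
    switch = Agent({SYS_DESCR: "Example switch", SYS_NAME: "sw-01"}, writable={SYS_NAME})
    printer = Agent({SYS_DESCR: "Example printer", SYS_NAME: "prn-01"}, writable={SYS_NAME})
    nms = NetworkManagementSystem({"switch": switch, "printer": printer})
    print(nms.poll(SYS_DESCR))
    nms.configure("switch", SYS_NAME, "core-sw-01")  # bidirectional (read-write) access
    print(nms.poll(SYS_NAME))
    try:
        nms.configure("printer", SYS_DESCR, "x")     # unidirectional (read-only) variable
    except SnmpError as err:
        print(err)
```

Keeping the variables on the agent and the polling logic on the NMS mirrors the division of labour in the text: agents stay lightweight, while the NMS carries most of the processing and memory load.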
CIM: The Common Information Model (CIM) is an open standard that defines how managed elements in an IT environment are represented as a common set of objects and relationships between them. This is intended to allow consistent management of these managed elements, independent of their manufacturer or provider. One way to describe CIM is to say that it allows multiple parties to exchange management information about these managed elements. However, this falls short of expressing that CIM not only represents these managed elements and the management information, but also provides the means to actively control and manage them. By using a common model of information, management software can be written once and work with many implementations of the common model without complex and costly conversion operations or loss of information.

The CIM standard is defined and published by the Distributed Management Task Force (DMTF). A related standard is Web-Based Enterprise Management (WBEM, also defined by DMTF), which defines a particular implementation of CIM, including protocols for discovering and accessing such CIM implementations. The CIM standard includes the CIM Infrastructure Specification and the CIM Schema.

CIM Infrastructure Specification
The CIM Infrastructure Specification defines the architecture and concepts of CIM, including a language by which the CIM Schema (including any extension schema) is defined, and a method for mapping CIM to other information models, such as SNMP. The CIM architecture is based on UML, so it is object-oriented: managed elements are represented as CIM classes, and any relationships between them are represented as CIM associations. Inheritance allows specialization of common base elements into more specific derived elements.

CIM Schema
The CIM Schema is a conceptual schema that defines the specific set of objects, and the relationships between them, that represent a common base for the managed elements in an IT environment.
The CIM Schema covers most of today's elements in an IT environment, for example computer systems, operating systems, networks, middleware, services, and storage. It defines a common basis for representing these managed elements. Because most managed elements have product- and vendor-specific behavior, the CIM Schema is extensible, allowing the producers of these elements to represent their specific features seamlessly alongside the common base functionality defined in the CIM Schema.
Sol. 9 Cloud Computing
In cloud computing, large pools of accessible computing resources are provided "as a service" to users over the Internet; it is an Internet-based model of system development and delivery. It includes SaaS, web infrastructure, and other web technologies, and both industry and the research community are increasingly attracted to it. Building a cloud computing platform raises its own construction problems. In one reported platform, a GFS-compatible file system is designed with chunks of different sizes to support large-scale data processing, and enhancements to the MapReduce implementation improve system performance. That platform is applied to domain-specific cloud services, with large-scale web text mining as its final application, and some open issues remain.
Cloud computing is a new model for delivering and hosting services on the Internet. It eliminates the need for users to plan ahead for provisioning, which makes it attractive to the business community: a deployment can start at a very small scale and grow as resource demand increases. Cloud computing offers many opportunities to the IT industry, but many issues related to it remain. Describing it involves its key concepts, state-of-the-art implementations, and architectural principles. Cloud computing is not an entirely new technology, which is why there are different perceptions of it.
Cloud computing builds on several existing operating models and technologies for running businesses. Virtualization and utility-based pricing, for example, are not new technologies; cloud computing uses them to meet economic requirements and demands. Grid computing, a related model, is a distributed computing model that organizes network resources to achieve common computational objectives. Cloud applications reside at the application layer, the highest level of the cloud hierarchy. To achieve better availability, better performance, and lower operating costs, cloud computing relies on automatic scaling.
When resources are provided as services to the general public by service providers, the cloud is known as a public cloud. Private clouds, also called internal clouds, are built for the exclusive use of a single organization. A hybrid cloud combines the private and public cloud models and tries to address the limitations of each approach; it is more flexible than either a purely private or a purely public cloud.
Sol. 10
RAID is built on three techniques: striping, mirroring, and parity.
Striping
A RAID set is a group of disks. Within each disk, a predefined number of contiguously addressable disk blocks is defined as a strip. The set of aligned strips that spans all the disks within the RAID set is called a stripe. Figure 3-2 shows physical and logical representations of a striped RAID set.
Figure 3-2: Striped RAID set
Strip size (also called stripe depth) is the number of blocks in a strip. It is the maximum amount of data that can be written to or read from a single HDD in the set before the next HDD is accessed, assuming that the accessed data starts at the beginning of the strip. All strips in a stripe have the same number of blocks, and decreasing the strip size means that data is broken into smaller pieces when spread across the disks. Stripe size is the strip size multiplied by the number of HDDs in the RAID set. Stripe width refers to the number of data strips in a stripe. Striped RAID does not protect data unless parity or mirroring is used. However, striping may significantly improve I/O performance.
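The strip and stripe arithmetic above can be made concrete with a short Python sketch that maps a logical block address (LBA) to a disk and a block on that disk. It assumes a plain striped set without parity or mirroring, block-granular addressing, and a simple round-robin layout in which the first strip of each stripe sits on disk 0. `locate` and `Location` are hypothetical names, and real controllers may lay data out differently.

```python
from typing import NamedTuple


class Location(NamedTuple):
    disk: int           # which HDD in the RAID set (0-based)
    stripe: int         # which stripe (row of aligned strips)
    block_on_disk: int  # block address within that HDD


def locate(lba: int, strip_size: int, num_disks: int) -> Location:
    """strip_size = blocks per strip; num_disks = HDDs in the RAID set."""
    if lba < 0 or strip_size <= 0 or num_disks <= 0:
        raise ValueError("invalid arguments")
    stripe_size = strip_size * num_disks       # blocks per stripe
    stripe = lba // stripe_size                # stripe holding the block
    offset_in_stripe = lba % stripe_size
    disk = offset_in_stripe // strip_size      # strip index = disk index
    offset_in_strip = offset_in_stripe % strip_size
    return Location(disk, stripe, stripe * strip_size + offset_in_strip)


if __name__ == "__main__":
    # Strip size of 4 blocks across 3 disks, so the stripe size is 12 blocks.
    print(locate(5, 4, 3))   # Location(disk=1, stripe=0, block_on_disk=1)
    print(locate(13, 4, 3))  # Location(disk=0, stripe=1, block_on_disk=5)
    # One full stripe of consecutive blocks touches every disk.
    print(sorted({locate(b, 4, 3).disk for b in range(12)}))  # [0, 1, 2]
```

Because any 12 consecutive blocks starting at a stripe boundary are spread over all three disks, the controller can service them in parallel, which is where the I/O gain of striping comes from.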
Depending on the type of RAID implementation, the RAID controller can be configured to access data across multiple HDDs simultaneously.
Mirroring
Mirroring is a technique whereby data is stored on two different HDDs, yielding two copies of the data. If one HDD fails, the data remains intact on the surviving HDD (see Figure 3-3), and the controller continues to service the host's data requests from the surviving disk of the mirrored pair.
Figure 3-3: Mirrored disks in an array
When the failed disk is replaced with a new disk, the controller copies the data from the surviving disk of the mirrored pair. This activity is transparent to the host. In addition to providing complete data redundancy, mirroring enables fast recovery from disk failure. However, disk mirroring provides only data protection and is not a substitute for data backup: mirroring constantly captures changes in the data, whereas a backup captures point-in-time images of the data. Mirroring involves duplication of data, so the storage capacity needed is twice the amount of data being stored. Therefore, mirroring is considered expensive and is preferred for mission-critical applications that cannot afford data loss. Mirroring improves read performance because read requests can be serviced by both disks. However, write performance deteriorates, because each write request manifests as two writes on the HDDs. In other words, mirroring does not deliver the same level of write performance as striped RAID.
Parity
Parity is a method of protecting striped data from HDD failure without the cost of mirroring. An additional HDD is added to the stripe width to hold parity, a mathematical construct that allows re-creation of the missing data. Parity is a redundancy check that protects data against a disk failure without maintaining a full set of duplicate data. Parity information can be stored on separate, dedicated HDDs or distributed across all the drives in a RAID set. Figure 3-4 shows a parity RAID.
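In the figure, the first four disks, labeled D, contain the data. The fifth disk, labeled P, stores the parity information, which in this simplified example is the sum of the elements in each row. If one of the D disks fails, the missing value can be calculated by subtracting the sum of the remaining elements in that row from the parity value. In practice, parity is calculated with a bitwise XOR operation rather than an arithmetic sum, but the recovery principle is the same.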
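The recovery arithmetic above can be checked with a small Python sketch for a single row (stripe) across the four data disks. It shows both the simplified sum used in the figure and the bitwise XOR used by real RAID implementations; the function names and the sample values are illustrative assumptions, not taken from Figure 3-4.

```python
from functools import reduce
from operator import xor


def sum_parity(data):
    # Simplified textbook parity: arithmetic sum of the row.
    return sum(data)


def rebuild_from_sum(surviving, parity):
    # Missing value = parity minus the sum of the surviving values.
    return parity - sum(surviving)


def xor_parity(data):
    # Real-world parity: bitwise XOR of the row.
    return reduce(xor, data, 0)


def rebuild_from_xor(surviving, parity):
    # XOR is its own inverse: missing = parity XOR (all surviving values).
    return reduce(xor, surviving, parity)


if __name__ == "__main__":
    row = [3, 1, 4, 2]              # one row across the four data disks D
    failed = 2                      # suppose the third data disk fails
    surviving = row[:failed] + row[failed + 1:]

    p = sum_parity(row)             # 10
    print(rebuild_from_sum(surviving, p))   # 4

    p = xor_parity(row)             # 3 ^ 1 ^ 4 ^ 2 = 4
    print(rebuild_from_xor(surviving, p))   # 4
```

XOR suits hardware well because the parity stays the same width as the data (no carries or overflow), and the same operation both computes parity and rebuilds the missing value.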