Academy of Management 2010 pape

advertisement

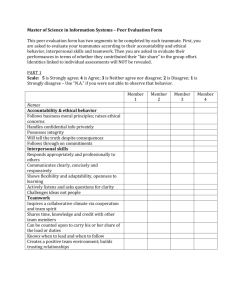

AOM PAPER 13912

Comprehensive Assessment of Team Member Effectiveness: A Behaviorally Anchored Rating Scale

Abstract

Instructors who use team learning methods often use self and peer evaluations to assess individual contributions to team projects in order to provide developmental feedback or adjust team grades based on individual contributions. This paper describes the development of a behaviorally anchored rating scale (BARS) version of the Comprehensive Assessment of Team Member Effectiveness (CATME). CATME is a published, Likert-scale instrument for self and peer evaluation that measures team-member behaviors that the teamwork literature has shown to be effective. It measures students’ performance in five areas of team-member contributions: contributing to the team’s work, interacting with teammates, keeping the team on track, expecting quality, and having relevant knowledge, skills, and abilities. A BARS version has several advantages for instructors and students, especially that it can help students to better understand effective and ineffective team-member behaviors and it requires fewer rating decisions. Two studies were conducted to evaluate the instrument. The first shows that the CATME BARS instrument measures the same dimensions as the CATME Likert instrument (linking the BARS version to the theoretical underpinnings of the Likert version). The second provides evidence of convergent and predictive validity for the CATME BARS. Ways to use the instrument and areas for future research are discussed.

KEYWORDS: Peer Evaluation, Team-member effectiveness, Teamwork

College faculty in many disciplines are integrating team-based and peer-based learning methods into their courses in an effort to help students develop the interpersonal and teamwork skills that employers strongly desire but students often lack (Boni, Weingart, & Evenson, 2009; Cassidy, 2006; Verzat, Byrne, & Fayolle, 2009).
Further, students are often asked to work in teams as part of experiential, service learning, research-based learning, and simulation-based learning projects, which aim to give students more hands-on practice and make them more active participants in their education than traditional lecture-based coursework (Kolb & Kolb, 2005; Loyd, Kern, & Thompson, 2005; Raelin, 2006; Zantow, Knowlton, & Sharp, 2005). As a result, there is a recognized need for students to learn teamwork skills as part of their college education and teamwork is increasingly integrated into the curriculum, yet “teamwork competencies and skills are rarely developed” (Chen, Donahue, & Klimoski, 2004: 28).

Teamwork presents challenges for both students and faculty. Student teams often have difficulty working effectively together and may have problems such as poor communication, conflict, and social loafing (Burdett, 2003; Verzat et al., 2009). For instructors, challenges in managing teamwork include preventing or remediating these student issues and assigning fair grades to students based on their individual contributions (Chen & Lou, 2004). Evaluating students’ individual contributions to teamwork in order to develop team skills and deter students from free riding on the efforts of teammates is an important part of team-based instructional methods (Michaelsen, Knight, & Fink, 2004). This paper describes the development and testing of an instrument for self and peer evaluation of individual members’ contributions to the team for use in educational settings where team-based instructional methods are used. The results of two studies provide support for the use of the instrument.

LITERATURE REVIEW

There are several reasons why teamwork is prevalent in higher education. First, educational institutions need to teach students to work effectively in teams to prepare them for careers because many graduates will work in organizations where team work methods are used. About half of U.S.
companies and 81% of Fortune 500 companies use team-based work structures because they are often more effective or efficient than individual work (Hoegl & Gemuenden, 2001; Lawler, Mohrman, & Benson, 2001). Second, team-based or collaborative learning is being used with greater frequency in many disciplines to achieve the educational benefits of students working together on learning tasks (Michaelsen et al., 2004). Team learning makes students active in the learning process, allows them to learn from one another, makes students responsible for doing their part to facilitate learning, and increases students’ satisfaction with the experience compared to lecture classes (Michaelsen et al., 2004). Finally, accreditation standards in some disciplines require that students demonstrate an ability to work in teams (ABET, 2000; Commission on Accreditation for Dietetics Education, 2008).

Self- and Peer Evaluations

Many team-based learning advocates agree that the approach works best if team grades are adjusted for individual performance. Although instructors could use various methods to determine grade adjustments, peer evaluations of teammates’ contributions are the most commonly used method. Millis and Cottell (1998) give strong reasons for using peer evaluation to adjust student grades. Among these are that students, as members of a team, are better able to observe and evaluate members’ contributions than are instructors, who are outsiders to the team. In addition, peer evaluations increase students’ accountability to their teammates and reduce free riding. Furthermore, students learn from the process of completing peer evaluations. Self and peer evaluations may also be used to provide feedback in order to improve team skills (Chen, Donahue, & Klimoski, 2004).
There is a fairly large body of management research that examines peer ratings, which are used in work contexts, such as 360-degree performance appraisal systems and assessment center selection techniques, in addition to executive education and traditional educational settings (Fellenz, 2006; Hooijberg & Lane, 2009; London, Smither, & Adsit, 1997; Saavedra & Kwun, 1993; Shore, Shore, & Thornton, 1992). Several meta-analytic studies have examined the inter-rater reliability of peer evaluation scores and their correlations with other rater sources and have found that peer ratings are positively correlated with other rating sources and have good predictive validity for various performance criteria (Conway & Huffcutt, 1997; Harris & Schaubroeck, 1988; Schmitt, Gooding, Noe, & Kirsch, 1984; Viswesvaran, Ones, & Schmidt, 1996; Viswesvaran et al., 2005). In work and educational settings, peer evaluations are often used as part of a comprehensive assessment of individuals’ job performance to take advantage of information that only peers are in a position to observe. Thus, peer ratings are often one part of a broader performance assessment that also includes ratings by supervisors or instructors or other sources (Viswesvaran, Schmidt, & Ones, 2005). In addition to assessing performance, peer evaluations of teamwork reduce the tendency of some team members to free-ride on the efforts of others. Completing peer evaluations also helps individuals to understand the performance criteria and how everyone will be evaluated (Sheppard, Chen, Schaeffer, Steinbeck, Neumann, & Ko, 2004). 
Self-appraisals are valuable because they involve ratees in the rating process and provide information to facilitate a discussion about an individual’s performance. Research in the performance appraisal literature has also shown that ratees feel that self-appraisals should be included in the evaluation process and that ratees want to provide information about their performance (Inderrieden, Allen, & Keaveny, 2004). Self-appraisals are, however, vulnerable to leniency errors (Inderrieden et al., 2004). An additional problem that plagues self-appraisals is that people who are unskilled in an area are often unable to recognize deficiencies in their own skills or performance because their lack of competence prevents them from having the metacognitive skills to recognize their deficiencies (Kruger & Dunning, 1999). Therefore, people with poor teamwork skills would be likely to overestimate their own abilities and contributions to the team.

A number of problems are associated with both self and peer ratings (Saavedra & Kwun, 1993). One is that many raters, particularly average and below-average performers, do not differentiate in their ratings of team members when it is warranted. Furthermore, when rating the performance of team members, raters tend to use a social comparison framework and rate members relative to one another rather than using objective, independent criteria. Also, some raters worry that providing accurate ratings would damage social relations in the team.

In spite of the limitations of self and peer evaluations, instructors commonly use them because instructors need a measure of students’ teamwork contributions that can be used to adjust students’ grades in order to effectively implement team-based learning. However, although the use of peer evaluations is commonplace, there is not widespread agreement about what a system for self and peer evaluation should look like.
This paper describes the development of a behaviorally anchored rating scale (BARS) instrument for the self and peer evaluation of students’ contributions to teamwork in higher education classes that is based on research about what types of contributions are necessary for team-members to be effective.

CATME Likert-Scale Instrument

Although a large body of literature examines individual attributes and behaviors associated with effective team performance (Stewart, 2006), there have been no generally accepted instruments for assessing individuals’ contributions to the team. Some peer evaluation instruments, or peer evaluation systems, such as students dividing points among team members, have been published, but they have not become standard in college classes (e.g., Fellenz, 2006; Gatfield, 1999; Gueldenzoph & May, 2002; McGourty & De Meuse, 2001; Moran, Musselwhite, & Zenger, 1996; Rosenstein & Dickinson, 1996; Sheppard, Chen, Schaeffer, Steinbeck, Neumann, & Ko, 2004; Taggar & Brown, 2001; Van Duzer & McMartin, 2000; Wheelan, 1999). Further, not all published peer evaluation instruments were designed to align closely with the literature on team-member effectiveness.

To meet the need for a research-based, peer evaluation instrument for use in college classes, Loughry, Ohland, and Moore (2007) developed the Comprehensive Assessment of Team Member Effectiveness (CATME). The researchers used the teamwork literature to identify the ways by which team members can help their team to be effective. They drew heavily on the work of Anderson and West (1998), Campion, Medsker, and Higgs (1993), Cannon-Bowers, Tannenbaum, Salas, and Volpe (1995), Erez, LePine, and Elms (2002), Guzzo, Yost, Campbell, and Shea (1993), Hedge, Bruskiewicz, Logan, Hanson, and Buck (1999), Hyatt and Ruddy (1997), McAllister (1995), McIntyre and Salas (1995), Niehoff and Moorman (1993), Spich and Keleman (1985), Stevens and Campion (1994, 1996), and Weldon, Jehn, and Pradhan (1991).
Based on the literature, they created a large pool of potential items, which they then tested using two large surveys of college students. They used exploratory factor analysis and confirmatory factor analysis to select the items for the final instrument. The researchers found 29 specific types of team-member contributions that clustered into five broad categories (contributing to the team’s work, interacting with teammates, keeping the team on track, expecting quality, and having relevant knowledge, skills, and abilities). The full (87-item) version of the instrument uses three items to measure each of the 29 types of team member contributions with high internal consistency. The short (33-item) version uses a subset of the items to measure the five broad categories (these items are presented in Appendix A). Raters rate each teammate on each item using Likert scales (strongly disagree to strongly agree).

Although the CATME instrument is solidly rooted in the literature on team effectiveness, instructors who wish to use peer evaluation to adjust their students’ grades in team-based learning projects may need a simpler instrument. Even the short version of the CATME requires that students read 33 items and make judgments about each of the items for each of their teammates. If there are four-person teams and the instructor requires a self-evaluation, each student must make 132 independent decisions to complete the evaluation. To carefully consider all of these decisions may require more effort than students are willing to put forth. Then, in order for instructors to use the instrument to adjust grades within the team, the instructor must review 528 data points (132 for each of the four students on the team) before making the computations necessary to determine an adjustment factor for the four students’ grades. For instructors who have large numbers of students and heavy work loads, this may not be feasible.
Other published peer evaluation systems, such as those cited earlier, also require substantial effort, which may be one reason why they have not been widely adopted.

Another concern about the CATME instrument is that students using the Likert-scale format to make their evaluations may have very different perceptions about what rating a teammate who behaved in a particular way deserves. This is because the response choices (strongly disagree to strongly agree) are not behavioral descriptions. Therefore, although the CATME Likert-format instrument has a number of strengths, some instructors who need to use peer evaluation may prefer a shorter, behaviorally anchored version of the instrument.

In the remainder of this paper, we describe our efforts to develop a behaviorally anchored rating scale (BARS) version of the CATME instrument. We believe that a BARS version will have two main advantages that, in some circumstances, may make it more appropriate than the original CATME for instructors who want to adjust individual grades in team learning environments. First, because the BARS instrument will assess only the five broad areas of team-member contribution, raters will only need to make five decisions about each person they rate. In a four-person team, this would reduce the burden for students who fill out the survey from 132 decisions to 20. For the instructor, it reduces the number of data points to review for the team from 528 to 80. In addition, by providing descriptions of the behaviors that a team member would display to warrant any given rating, a BARS version of the instrument could help students to better understand the standards by which both they and their peers are being evaluated. If students learn from reading the behavioral descriptions what constitutes good performance and poor performance, they may try to display more effective team behaviors and refrain from behaviors that harm the team’s effectiveness.
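The rating-burden figures quoted above (132 versus 20 decisions per student, 528 versus 80 data points per team) follow from simple multiplication. The short sketch below reproduces that arithmetic; the function name and parameters are illustrative and are not part of the CATME system.

```python
def rating_burden(items_per_ratee, team_size, include_self=True):
    """Return (decisions per rater, data points per team).

    Hypothetical helper: assumes every rater rates every ratee on every item.
    """
    ratees = team_size if include_self else team_size - 1
    per_rater = items_per_ratee * ratees   # judgments one student must make
    per_team = per_rater * team_size       # data points the instructor reviews
    return per_rater, per_team


# Short CATME Likert form: 33 items; CATME BARS: 5 categories.
likert = rating_burden(items_per_ratee=33, team_size=4)
bars = rating_burden(items_per_ratee=5, team_size=4)
print("Likert (short):", likert)
print("BARS:", bars)
```

Changing `team_size` or setting `include_self=False` shows how the burden scales for other team configurations.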
Behaviorally Anchored Rating Scales

Behaviorally anchored rating scales provide a way to measure how much an individual’s behavior in various performance categories contributes to achieving the goals of the team or organization of which the individual is a member (Campbell, Dunnette, Arvey, & Hellervik, 1973). The procedure for creating BARS instruments was developed in the early 1960s and became more popular in the 1970s (Smith & Kendall, 1963). Subject-matter experts (SMEs), who fully understand the job for which the instrument is being developed, provide input for its creation. More specifically, SMEs provide examples of actual performance behaviors, called “critical incidents,” and help to classify whether the examples represent high, medium, or low performance in the category in question. The scales provide descriptions of specific behaviors that people at various levels of performance would typically display.

Research on the psychometric advantages of BARS scales has been mixed (MacDonald & Sulsky, 2009). However, there is research to suggest that BARS scales have a number of advantages over Likert rating scales, such as greater inter-rater reliability and less leniency error (Campbell et al., 1973; Ohland, Layton, Loughry, & Yuhasz, 2005). In addition, instruments with descriptive anchors may generate more positive rater and ratee reactions, have more face validity, be more defensible in court, and have strong advantages for raters from collectivist cultures (MacDonald & Sulsky, 2009). Furthermore, training both raters and ratees using a BARS scale prior to using it to evaluate performance may make the rating process easier and more comfortable for raters and ratees (MacDonald & Sulsky, 2009). Using the BARS process for developing anchors also greatly facilitates frame-of-reference (FOR) training, which has been shown to be the best approach to rater training (Woehr & Huffcutt, 1994).
DEVELOPMENT OF THE CATME BARS INSTRUMENT

A cross-disciplinary team of nine university faculty members with expertise in management, education, education assessment, various engineering disciplines, and engineering education research collaborated to develop a BARS version of the CATME instrument. We used the “critical incident methodology” described in Hedge et al. (1999) to develop behavioral anchors for the five broad categories of team-member contribution measured by the original CATME instrument. This method is useful for developing behavioral descriptions to anchor a rating scale, and requires the identification of examples of a wide range of behaviors from poor to excellent (rather than focusing only on extreme cases). Critical incidents include observable behaviors related to what is being assessed, context, and the result of the behavior, and must be drawn from the experience of subject-matter experts who also categorize and translate the list of critical incidents. All members of the research team can be considered as subject-matter experts on team learning and team-member contributions. All have published research relating to student learning teams and all have used student teams in their classrooms. Collectively, they have approximately 90 years of teaching experience.

We began by working individually to generate examples of behaviors that we have observed or heard described by members of teams. These examples came from our students’ teams, other teams that we have encountered in our research and experience, and teams (such as faculty committees) of which we have been members. We noted which of the five categories of team contribution we thought that the observation represented. We exchanged our lists of examples and then tried to generate more examples after seeing each other’s ideas.
We then held several rounds of discussions to review our examples and arrive at a consensus about which behaviors described in the critical incidents best represented each of the five categories in the CATME Likert instrument. We came to an agreement that we wanted five-point response scales with anchors for high, medium, and low team-member performance in each category. We felt that having more than five possible ratings per category would force raters to make fine-grained distinctions in their ratings that would increase the cognitive effort required to perform the ratings conscientiously. This would have been in conflict with our goal of creating an instrument that was easier and less time-consuming to use than the CATME-Likert version.

We also agreed that we wanted the instrument’s medium level of performance (3 on the 5-point scale) to describe satisfactory team-member performance. Therefore, the behaviors that anchored the “3” rating would describe typical or average team-member contributions. The behavioral descriptions that would anchor the “5” rating would describe excellent team contributions, or things that might be considered as going well above the requirements of being a fully satisfactory team member. The “1” anchors would describe behaviors that are commonly displayed by poor or unsatisfactory team members and therefore are frequently heard complaints about team members. The “2” and “4” ratings do not have behavioral anchors, but provide an option for students who want to give a rating in-between the levels described by the anchors.

Having agreed on the types of behaviors that belonged in each of the five categories and our goal to describe outstanding, satisfactory, and poor performance in each, we then worked to reach consensus about the descriptions that we would use to anchor each category. We first made long lists of descriptive statements that everyone agreed fairly represented the level of performance for the category.
Then we developed more precise wording for our descriptions, and prioritized the descriptions to determine which behaviors were most typical of excellent, satisfactory, and poor performance in each category.

There was considerable discussion about how detailed the descriptions for each anchor should be. Longer, more-detailed descriptions that listed more aspects of behavior would make it easier for raters using the instrument to recognize that the ratee’s performance was described by the particular anchor. Thus, raters could be more confident that they were rating accurately. However, longer descriptions require more time to read and take more space on a page, and thus are at odds with our goal of designing an instrument that is simple and quick to complete. Our students have told us often, and in no uncertain terms, that the longer an instrument is, the less likely they are to read it and put full effort into answering each question. We therefore decided to create an instrument that could fit on one page. We agreed that three bulleted lines of description would be appropriate for each of the five categories of behavior. The instrument we developed after many rounds of exchanging drafts among the team members and gathering feedback from students and other colleagues is presented in Appendix B.

We pilot-tested the instrument in several settings, including a junior-level course with 30 sections taught by five different graduate assistants, in order to improve both the instrument and our understanding of the factors that are important for a smooth administration. For example, to lessen peer pressure, students must not be seated next to their teammates when they complete the instrument. Because the BARS format is less familiar to students than is the Likert format, we found that it is helpful to students to explain how to use the BARS scale, which takes about 10 minutes.
It then takes about 10 minutes for students in 3- to 4-member teams to conscientiously complete the self- and peer evaluation.

After our testing, we decided that it would be beneficial to develop a web-based administration of the CATME BARS instrument. A web administration provides confidentiality for the students and makes it easier for instructors to gather the self and peer evaluation data, eliminates the need for instructors to type the data into a spreadsheet, and makes it easier for instructors to interpret the results. After extensive testing to make sure that the web interface worked properly, we conducted two studies of the CATME BARS instrument.

STUDY 1: EXAMINING THE PSYCHOMETRIC PROPERTIES OF THE CATME BARS MEASURE

The primary goal of Study 1 was to evaluate the psychometric characteristics of the web-based CATME BARS instrument relative to the paper-based CATME-Likert instrument. We therefore administered both measures to a sample of undergraduate students engaged in team-based course-related activity. We then used an application of generalizability (G) theory (Brennan, 1994; Shavelson & Webb, 1991) to evaluate consistency and agreement for each of the two rating forms. G theory is a statistical theory developed by Cronbach, Gleser, Nanda, & Rajaratnam (1972) about the consistency of behavioral measures. It uses the logic of analysis of variance (ANOVA) to differentiate multiple systematic sources of variance (as well as random measurement error) in a set of scores. In addition, it allows for the calculation of a summary coefficient (i.e., generalizability coefficient) that reflects the dependability of measurement. This generalizability coefficient is analogous to the reliability coefficient in classical test theory. A second summary coefficient (i.e., dependability coefficient) provides a measure of absolute agreement across various facets of measurement.
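To make the two summary coefficients concrete, the sketch below computes a generalizability coefficient and a dependability coefficient from estimated variance components. It assumes a fully crossed, random-effects design with persons as the object of measurement and two facets (labeled raters and scales here); this is a textbook simplification, not necessarily the exact unbalanced design analyzed in this paper. The dictionary keys and the numeric values are hypothetical.

```python
def g_coefficients(var, n_raters, n_scales):
    """Return (generalizability, dependability) coefficients.

    var: dict of variance components with hypothetical keys
         'p' (person), 'r' (rater), 's' (scale), 'pr', 'ps', 'rs',
         and 'prs_e' (three-way interaction confounded with error).
    Assumes a fully crossed random p x r x s design.
    """
    # Relative error: only components that interact with persons.
    rel_error = (var["pr"] / n_raters
                 + var["ps"] / n_scales
                 + var["prs_e"] / (n_raters * n_scales))
    # Absolute error: relative error plus facet main effects and their interaction.
    abs_error = (rel_error
                 + var["r"] / n_raters
                 + var["s"] / n_scales
                 + var["rs"] / (n_raters * n_scales))
    generalizability = var["p"] / (var["p"] + rel_error)
    dependability = var["p"] / (var["p"] + abs_error)
    return generalizability, dependability


# Made-up variance components, for illustration only.
components = {"p": 0.40, "r": 0.10, "s": 0.00,
              "pr": 0.20, "ps": 0.00, "rs": 0.00, "prs_e": 0.40}
g, d = g_coefficients(components, n_raters=4, n_scales=1)
print(f"generalizability = {g:.2f}, dependability = {d:.2f}")
```

Because absolute error adds the facet main effects to relative error, the dependability coefficient can never exceed the generalizability coefficient under these assumptions.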
Thus, G theory provides the most appropriate approach for examining the psychometric characteristics of the CATME instruments.

In this study, each participant rated each of their teammates using each of the two CATME instruments (at two different times). Three potential sources of variance in ratings were examined: person effects, rater effects, and scale effects. In the G theory framework, person effects represent ‘true scores,’ while rater and scale effects represent potential sources of systematic measurement error. In addition to these main effects, variance estimates were also obtained for person by rater, person by scale, and scale by rater two-way interactions, and the three-way person by scale by rater interaction (it is important to note that in G theory, the highest-level interaction is confounded with random measurement error). All of the interaction terms are potential sources of measurement error. This analysis allowed for a direct examination of the impact of rating scale as well as a comparison of the measurement characteristics of each scale.

Method

Participants and Procedure

Participants were 86 students in two sections of a sophomore-level chemical engineering course (each section was taught by a different instructor). Participants were assigned to one of 17 teams, consisting of 4 to 5 team members each. The teams were responsible for completing a series of nine group homework assignments worth 20% of their final grade. Participants were randomly assigned to one of two measurement protocols. Participants in Protocol A (n = 48) completed the BARS instrument at Time 1 and the Likert instrument at Time 2 (approximately 6 weeks later). Participants were told that they would be using two different survey formats for the mid-semester and end-of-semester surveys, to help determine which format was more effective. Participants in Protocol B (n = 38) completed the Likert instrument at Time 1 and the BARS at Time 2.
All participants rated each of their teammates at both measurement occasions. However, 13 participants did not turn in ratings for their teammates; thus, ratings were obtained from 73 raters (56 participants received ratings from 4 team members, 16 participants received ratings from 3 team members, and 1 participant received ratings from only 1 team member). Thus, the total data set consisted of a set of 273 ratings ([56 x 4] + [16 x 3] + 1). This set of ratings served as input into the G theory analyses.

Measures

All participants completed both the Likert-type and web-based BARS versions of the CATME (as presented in the Appendices). Ratings on the Likert-type scale were made on a 5-point scale of agreement (i.e., 1 = Strongly Disagree, 5 = Strongly Agree).

Results

Descriptive statistics for each dimension on each scale are provided in Table 1. Reliabilities (Cronbach’s alpha) for each Likert dimension are also provided in Table 1. This index of reliability could not be calculated for the BARS instrument because each team member was rated on only one item per dimension, per rater; however, G theory analysis does provide a form of reliability (discussed in more detail later) that can be calculated for both the Likert-type and BARS scales.

Correlations among the BARS dimensions and Likert-type dimensions are provided in Table 2. Cross-scale, within-dimension correlations (i.e., convergent validity estimates) were modest, with the exception of those for the dimensions of Interacting with teammates (.74) and Keeping the team on track (.74), which were adequate. Also of interest from the correlation matrix is the fact that correlations among dimensions for the BARS scale are lower than correlations among dimensions for the Likert-type scale. This may indicate that the BARS scale offers better discrimination among dimensions than the Likert-type scale.
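The reliability index reported for the Likert dimensions, Cronbach’s alpha, depends on having several items per dimension, which is why it cannot be computed for single-item BARS ratings. The following is a minimal sketch of the standard formula using only the Python standard library; the function name and the example ratings are made up for illustration and are not data from this study.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of rows (one row per ratee, one column per item)."""
    k = len(item_scores[0])
    if k < 2:
        # With one item per dimension (as in the BARS form) alpha is undefined.
        raise ValueError("alpha needs at least two items per dimension")
    # Sample variance of each item across ratees.
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of the summed scale score.
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings of four teammates on a three-item Likert dimension.
ratings = [[4, 5, 4], [3, 3, 2], [5, 5, 5], [2, 3, 2]]
print(round(cronbach_alpha(ratings), 2))
```

Passing single-item rows raises an error, mirroring the limitation noted above for the BARS instrument.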
G theory analyses were conducted using the MIVQUE0 method via the SAS VARCOMP procedure. This method makes no assumptions regarding the normality of the data, can be used to analyze unbalanced designs, and is efficient (Brennan, 2000). Results of the G theory analysis are provided in Table 3. These results support the convergence of the BARS and Likert-type scales. Specifically, the type of scale used explained relatively little variance in responses (e.g., 0% for Interacting with teammates and Keeping the team on track, and 5%, 10%, and 18% for Having relevant KSAs, Contributing to the team’s work, and Expecting quality, respectively). Also, ratee effects explained a reasonable proportion of the variance in responses (e.g., 31% for Contributing to the team’s work). Generalizability coefficients calculated for each dimension were adequate (Table 4). Note that Table 4 presents two coefficients: ρ (rho), which is also called the generalizability coefficient (the ratio of universe score variance to itself plus relative error variance) and is analogous to a reliability coefficient in Classical Test Theory (Brennan, 2000); and φ (phi), also called the dependability coefficient (the ratio of universe score variance to itself plus absolute error variance), which provides an index of absolute agreement.

It is important to note that in this study, rating scale format is confounded with time of measurement. Thus, the variance associated with scale (i.e., the two-way interactions as well as the main effect) may reflect true performance differences (i.e., differences between the two measurement occasions). As a result, the previous analyses may underestimate the consistency of measurement associated with the CATME. Consequently, we conducted a second set of G theory analyses in which we analyzed the data separately for each scale. The results from the second G theory analysis are presented in Table 5.
These results were fairly consistent with the variance estimates provided by the first set of analyses. Generalizability coefficients (similar to estimates of reliability) calculated for each dimension on each scale are provided in Table 6. These estimates indicate similar characteristics for both rating scale formats.

Discussion

The primary goal of Study 1 was to examine the psychometric characteristics of the BARS version of the CATME relative to that of the previously developed Likert version. Results indicate that both scales provide relatively consistent measures of the teamwork dimensions assessed by the CATME. The results of Study 1 do not, however, address the validity of the rating scale relative to external variables of interest. Therefore, we conducted a second study.

STUDY 2: EXAMINING THE VALIDITY OF THE CATME BARS MEASURE

The objective of Study 2 was to examine the validity of the CATME BARS rating instrument with respect to course grades as well as another published peer evaluation measure (the instrument created by Van Duzer and McMartin, 2000). Here we expect a high degree of convergence between the two peer evaluation measures, both in terms of overall ratings and individual items. In addition, we expect that, to the extent that the peer evaluation measures capture effective team performance, they should predict course grades in courses requiring team activity. Thus both measures should be significantly related to final course grades.

Method

Participants and Procedure

Participants in this study were 104 students enrolled in four discussion sections of a freshman-level Introduction to Engineering course. We chose this course because 40% of each student’s grade is based on team-based projects. All participants were assigned to 5-member teams based on a number of criteria including grouping students who lived near one another and matching team-member schedules.
Six students were excluded from the final data analysis due to missing data; thus, the effective sample size was 98. As part of their coursework, students worked on a series of team projects over the course of the semester. All participants were required to provide peer evaluation ratings of each of their teammates at four points during the semester. Participants used the CATME BARS measure to provide ratings at Times 1 and 3 and the Van Duzer and McMartin measure at Times 2 and 4.

Measures

CATME BARS. Participants used the web-based CATME BARS measure presented in Appendix B to provide two sets of peer evaluations. As in Study 1, there was a high degree of intercorrelation among the 5 CATME performance dimensions (mean r = .76). Consequently, we formed a composite index representing an overall rating as the mean rating across the 5 dimensions (coefficient alpha = .94). In addition, the correlation between the CATME rating composite across the two administrations was .44. We averaged these two measures to form an overall CATME rating composite.

Van Duzer and McMartin (VM) Scale. The peer evaluation measure presented by Van Duzer and McMartin (2000) comprises 11 rating items. Respondents provided ratings on 4-point scales of agreement (1 = Disagree, 2 = Tend to Disagree, 3 = Tend to Agree, 4 = Agree). We formed a composite score as the mean rating across the 11 items. The coefficient alpha for the composite was .91. The composite scores from the first and second VM administrations had a correlation of .55. Thus, we averaged these two measures to form an overall VM rating composite.

Course Grades. Final course grades were based on a total of 16 requirements. Each requirement was differentially weighted such that the total number of points obtainable was 1000. Of the total points, up to 50 points were assigned on the basis of class participation, which was directly influenced by the peer evaluations.
Thus, we excluded the participation points from the final course score, resulting in a maximum possible score of 950.

Results

Descriptive statistics and intercorrelations for all study variables are presented in Table 7. We next examined the correlation of each of the two overall rating composites with final course grade. As expected, both the VM measure and the CATME measure were significantly related to final course grades (r = .51 for the CATME; r = .60 for the VM). It is also interesting to note that the correlation between the composite peer ratings and the final course score increases as the semester progresses. The peer rating correlates with final course score .27 at Time 1 (CATME BARS), .38 at Time 2 (VM scale), .54 at Time 3 (CATME BARS), and .61 at Time 4 (VM scale). In addition, the overall mean ratings decrease over time while rating variability increases.

Our results indicate a high level of convergence between the CATME BARS and VM composites (r = .64, p < .01). In addition, the 5 components that make up the CATME ratings demonstrated differential relationships with the 11 individual VM items (correlations ranged from -.02 to .58; M = .34, Mdn = .39, SD = .15). Thus, we also examined this set of correlations for coherence. As can be seen in Table 8, the pattern of correlations was highly supportive of convergence across the two measures.

Discussion

The results of Study 2 provide evidence for both the convergent and predictive validity of the CATME BARS peer evaluation scale. As expected, the CATME scale demonstrates a high degree of convergence with another commonly used peer evaluation measure and a significant relationship with final course grades in a course requiring a high level of team interaction. It should also be noted that the CATME BARS measure offers a far more efficient measure of peer performance than does the longer Likert version of the CATME or the measure provided by Van Duzer and McMartin (2000).
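The composite-building steps used in Study 2 (averaging the five dimension ratings, checking internal consistency with coefficient alpha, averaging the two administrations, and correlating the result with course points) can be sketched as follows. All numbers below are hypothetical and exist only to make the sketch runnable; the function names `cronbach_alpha` and `pearson_r` are likewise illustrative and do not describe the software used in the study.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(rows):
    """Coefficient alpha; rows holds one list of item scores per ratee."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical mean peer ratings on the five CATME dimensions for five ratees.
time1 = [[4, 4, 5, 4, 4], [3, 3, 3, 4, 3], [5, 5, 5, 5, 5],
         [4, 3, 4, 4, 4], [2, 3, 2, 3, 2]]
time3 = [[4, 5, 4, 4, 4], [3, 2, 3, 3, 3], [5, 5, 4, 5, 5],
         [4, 4, 4, 3, 4], [2, 2, 2, 3, 1]]
course_points = [870, 790, 905, 840, 720]  # hypothetical scores out of 950

# Composite per administration = mean across the five dimensions.
composite_t1 = [mean(row) for row in time1]
composite_t3 = [mean(row) for row in time3]
# Overall composite = average of the two administrations.
overall = [mean(pair) for pair in zip(composite_t1, composite_t3)]

alpha_t1 = cronbach_alpha(time1)
r_grade = pearson_r(overall, course_points)
print(f"alpha (Time 1) = {alpha_t1:.2f}, r with course points = {r_grade:.2f}")
```

Excluding the participation points from the criterion, as the study does, matters for this kind of analysis: leaving them in would build the peer ratings into the course score and inflate the correlation.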
GENERAL DISCUSSION

In this paper, we presented the development and initial testing of a behaviorally anchored rating scale version of the Comprehensive Assessment of Team Member Effectiveness. This instrument is for peer evaluation and self evaluation by team members and may be useful to instructors who use team learning methods and need a simple but valid way to adjust team grades for individual students. Because this instrument measures the same categories as the Likert-scale version, it is based on the literature on team effectiveness and assesses five major ways by which members can contribute to their teams. It uses simple behavioral descriptions to anchor three levels of performance in each category.

In general, our results provide a high level of support for the CATME BARS instrument as a peer evaluation measure. Specifically, the CATME BARS instrument demonstrates equivalent psychometric characteristics to the much longer Likert version. In addition, the CATME scale demonstrates a high degree of convergence with another commonly used peer evaluation measure and a significant relationship with final course grades in a course requiring a high level of team interaction.

Because the initial testing of the CATME BARS instrument showed that the instrument was viable and that on-line administration was useful, we created additional features for the on-line system to help students and instructors. Security features were added so that team members can complete the survey from any computer with internet access using a password-protected log-in, increasing both convenience and privacy for students. A comments field was added so that students can type in comments that are only visible to their instructor.
Instructors who wish to do so can insist that their students use the comments field to provide reasons to justify their ratings in order to increase students’ accountability for providing accurate ratings. These comments can also facilitate discussions between instructors and students. Because the ratings are all done electronically, instructors do not need to re-key the data in order to see all of the ratings. To further lessen the time that instructors need to review and interpret the data, we added features to the system that calculate average ratings for each student in each of the five CATME categories, compare those to the team average, and compute a suggested adjustment factor for instructors who want to adjust team grades based on individual contributions. These adjustment factors are displayed in two ways: one that includes the self-rating and the peer ratings, and one that uses only the peer ratings. In addition, we developed a program that analyzes the ratings patterns and flags exceptional conditions with color-coded messages that may indicate the need for the instructor’s attention. These can indicate team-level situations, such as where the rating patterns indicate that the team has split into cliques, or individual-level situations, such as low-performing team members. We also created a secure system that instructors can use to electronically provide feedback to students indicating their self-rating, the average of how their teammates rated them, and the team average in each of the five categories measured by the CATME BARS instrument.

Because the BARS rating format in general and the CATME BARS instrument in particular are unfamiliar to students, we developed a demonstration instrument that instructors can use to help students understand the five areas of teamwork included in the instrument and how they are measured.
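To make the idea of an adjustment factor concrete, the sketch below assumes that the factor is a student's average received rating divided by the team's average received rating. This ratio is an assumption chosen for illustration: the text says only that student averages are compared with the team average, and it does not give the formula that the on-line system uses. The function name `adjustment_factors` and the ratings are hypothetical.

```python
from statistics import mean


def adjustment_factors(ratings, include_self=True):
    """ratings[rater][ratee] is a list of five category scores (1 to 5)."""
    members = sorted(ratings)
    received = {}
    for ratee in members:
        # Average each rater's five category scores, then average across raters.
        per_rater = [
            mean(ratings[rater][ratee])
            for rater in members
            if include_self or rater != ratee
        ]
        received[ratee] = mean(per_rater)
    team_average = mean(list(received.values()))
    # Assumed formula: individual average relative to the team average.
    return {member: received[member] / team_average for member in members}


# Hypothetical three-person team; C rates own performance higher than peers do.
ratings = {
    "A": {"A": [5] * 5, "B": [4] * 5, "C": [2] * 5},
    "B": {"A": [4] * 5, "B": [4] * 5, "C": [2] * 5},
    "C": {"A": [4] * 5, "B": [4] * 5, "C": [4] * 5},
}

with_self = adjustment_factors(ratings, include_self=True)
peer_only = adjustment_factors(ratings, include_self=False)
print("with self:", {m: round(f, 2) for m, f in with_self.items()})
print("peer only:", {m: round(f, 2) for m, f in peer_only.items()})
```

Showing both versions side by side, as the system does, lets an instructor see how much a generous self-rating shifts a student's factor.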
The demonstration is a practice rating exercise that asks users to rate four fictitious team members whose performance is described in written scenarios. After raters complete the rating practice, they receive feedback that shows how they rated each team member in each CATME category and how that rating compares to the expert ratings assigned by the researchers who created the rating scale.

Challenges, Limitations, and Future Research Needs

Our results indicate several problems that are familiar in peer evaluation research. Although we designed the instrument so that a “3” score would be satisfactory performance and a “5” would be excellent performance, the average score for all five dimensions was approximately 4.2. Students in these studies did not appear to use the full range of the scale, resulting in a restriction-of-range problem with the data. Although this is a common problem in peer evaluation research for a variety of reasons including social pressures to give high ratings (Taggar & Brown, 2006), it reduces the accuracy of the peer ratings and makes it difficult to obtain strong correlations with predicted criteria.

There were also high correlations among the five factors. Research finds that team members who are highly skilled in areas related to the team’s work also display better social skills and contribute more to team discussions (Sonnentag & Volmer, 2009). Thus, there is probably a substantial amount of real correlation among CATME categories due to some team members being stronger or weaker contributors in many or all of the five areas measured by the instrument. For example, team members with strong relevant knowledge, skills, and abilities are more likely to contribute highly to the team’s work than students who lack the necessary skills to contribute. However, the high correlations among the CATME dimensions may also indicate the presence of halo error.
A halo effect occurs when peers’ perceptions of a teammate as a good or bad team member affect their ratings in specific areas (Harris & Barnes-Farrell, 1997). A recent meta-analysis found that correlations among different dimensions of job performance that are rated by peers are inflated by 63% due to halo error (Viswesvaran et al., 2005).

Instructors may be able to help students learn to rate more accurately by training students about the dimensions of teamwork represented in the rating scale that they use and by giving students practice using the ratings instrument before they use the instrument to rate their teammates. Frame-of-reference training is an established technique for training raters in performance appraisal settings that might be adapted to help make self and peer appraisals more accurate in educational settings (Woehr & Huffcutt, 1994; Woehr, 1994).

Although training may help students develop the ability to accurately rate teammates’ performance, training will not motivate students to rate accurately. To improve the accuracy of peer ratings, and thus improve the reliability and validity of peer evaluation scores, future research must identify ways to change the conditions that motivate team members to rate their teammates based on considerations other than the peers’ contributions to the team. Past research has found that both students and employees in organizations are reluctant to evaluate their peers, particularly for administrative purposes such as adjusting grades or determining merit-based pay (Bettenhausen & Fedor, 1997; Sheppard et al., 2004). Individuals who are required to participate in peer evaluation systems often resist the systems because they are concerned that peer evaluations will be biased by friendships, popularity, jealousy, or revenge. Recent research suggests that these concerns may be well-founded (Taggar & Brown, 2006).
In particular, students who received positive peer ratings then liked their teammates better and rated them higher on subsequent peer evaluations, whereas students who received negative peer ratings liked their teammates less and gave them lower ratings on subsequent peer evaluations. This was the case even though only aggregated feedback from multiple raters was provided. Future research needs to identify the social pressures and moral codes that influence students’ rating patterns and what, if anything, instructors can do to make students want to provide accurate peer ratings.

In our pilot tests and numerous discussions with students and other faculty, we found that many students do not attempt to complete peer evaluations carefully and accurately. This adds a large amount of random rater error or halo error to peer evaluation data. In our conversations with students, they have told us candidly that they often do not take peer evaluations seriously. They advised us to keep our surveys short, but also told us that regardless of the survey length, their answers to peer evaluations are usually governed by social concerns, a desire to avoid conflict with teammates, and unspoken norms about how one should rate a teammate. For many students, there is an unspoken rule that teammates should be given the highest rating unless the teammate’s performance falls seriously below the group’s expectations. Future research needs to examine the informal peer pressures that students use to reward or punish teammates, and how these interact with the peer evaluation mechanisms that instructors put in place.

References

ABET. 2000. Criteria for Accrediting Engineering Programs. Baltimore, MD: The Accreditation Board for Engineering and Technology (ABET).

Anderson, N. R., & West, M. A. 1998. Measuring climate for work group innovation: Development and validation of the team climate inventory. Journal of Organizational Behavior, 19: 235-258.
Bettenhausen, K. L., & Fedor, D. B. 1997. Peer and upward appraisals: A comparison of their benefits and problems. Group & Organization Management, 22: 236-263.

Boni, A. A., Weingart, L. R., & Evenson, S. 2009. Innovation in an academic setting: Designing and leading a business through market-focused, interdisciplinary teams. Academy of Management Learning & Education, 8: 407-417.

Brennan, R. L. 1994. Variance components in generalizability theory. In C. R. Reynolds (Ed.), Cognitive assessment: A multidisciplinary perspective: 175-207. NY: Plenum Press.

Brennan, R. L. 2000. Performance assessments from the perspective of generalizability theory. Applied Psychological Measurement, 24: 339-353.

Burdett, J. 2003. Making groups work: University students’ perceptions. International Education Journal, 4: 177-190.

Campbell, J. P., Dunnette, M. D., Arvey, R. D., & Hellervik, L. V. 1973. The development and evaluation of behaviorally based rating scales. Journal of Applied Psychology, 57: 15-22.

Campion, M. A., Medsker, G. J., & Higgs, A. C. 1993. Relations between work group characteristics and effectiveness: Implications for designing effective work groups. Personnel Psychology, 46: 823-850.

Cassidy, S. 2006. Developing employability skills: Peer assessment in higher education. Education + Training, 48: 508-517.

Chen, G., Donahue, L. M., & Klimoski, R. J. 2004. Training undergraduates to work in organizational teams. Academy of Management Learning & Education, 3: 27-40.

Chen, Y., & Lou, H. 2004. Students’ perceptions of peer evaluation: An expectancy perspective. Journal of Education for Business, 79: 275-282.

Commission on Accreditation for Dietetics Education. 2008. 2008 Eligibility requirements and accreditation standards for coordinated programs in Dietetics (CP). Retrieved April 9, 2009 from http://www.eatright.org/ada/files/CP_Final_ERAS_Document_7-08.pdf.

Conway, J. M., & Huffcutt, A. I. 1997.
Psychometric properties of multisource performance ratings: A meta-analysis of subordinate, supervisory, peer, and self-ratings. Human Performance, 10: 331-360.

Cronbach, L. J., Gleser, G. C., Nanda, H., & Rajaratnam, N. 1972. The dependability of behavioral measurements: Theory of generalizability for scores and profiles. New York: John Wiley & Sons, Inc.

Erez, A., LePine, J. A., & Elms, H. 2002. Effects of rotated leadership and peer evaluation on the functioning and effectiveness of self-managed teams: A quasi-experiment. Personnel Psychology, 55: 929-948.

Fellenz, M. R. 2006. Toward fairness in assessing student groupwork: A protocol for peer evaluation of individual contributions. Journal of Management Education, 30: 570-591.

Gatfield, T. 1999. Examining student satisfaction with group projects and peer assessment. Assessment & Evaluation in Higher Education, 24: 365-377.

Guzzo, R. A., Yost, P. R., Campbell, R. J., & Shea, G. P. 1993. Potency in groups: Articulating a construct. British Journal of Social Psychology, 32: 87-106.

Gueldenzoph, L. E., & May, G. L. 2002. Collaborative peer evaluation: Best practices for group member assessments. Business Communication Quarterly, 65: 9-20.

Harris, T. C., & Barnes-Farrell, J. L. 1997. Components of teamwork: Impact on evaluations of contributions to work team effectiveness. Journal of Applied Social Psychology, 27: 1694-1715.

Harris, M. M., & Schaubroeck, J. 1988. A meta-analysis of self-supervisor, self-peer, and peer-supervisor ratings. Personnel Psychology, 41: 43-62.

Hedge, J. W., Bruskiewicz, K. T., Logan, K. K., Hanson, M. A., & Buck, D. 1999. Crew resource management team and individual job analysis and rating scale development for air force tanker crews (Technical Report No. 336). Minneapolis, MN: Personnel Decisions Research Institutes, Inc.

Hoegl, M., & Gemuenden, H. G. 2001. Teamwork quality and the success of innovative projects: A theoretical concept and empirical evidence.
Organization Science, 12: 435-449.

Hooijberg, R., & Lane, N. 2009. Using multisource feedback coaching effectively in executive education. Academy of Management Learning & Education, 8: 483-493.

Hyatt, D. E., & Ruddy, T. M. 1997. An examination of the relationship between work group characteristics and performance: Once more into the breech. Personnel Psychology, 50: 553-585.

Inderrieden, E. J., Allen, R. E., & Keaveny, T. J. 2004. Managerial discretion in the use of self-ratings in an appraisal system: The antecedents and consequences. Journal of Managerial Issues, 16: 460-482.

Kolb, A. Y., & Kolb, D. A. 2005. Learning styles and learning spaces: Enhancing experiential learning in higher education. Academy of Management Learning & Education, 4: 193-212.

Kruger, J., & Dunning, D. 1999. Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, 77: 1121-1134.

Lawler, E. E., Mohrman, S. A., & Benson, G. 2001. Organizing for high performance: Employee Involvement, TQM, Reengineering, and Knowledge Management in the Fortune 1000. San Francisco, CA: Jossey-Bass.

Loyd, D. L., Kern, M. C., & Thompson, L. 2005. Classroom research: Bridging the ivory divide. Academy of Management Learning & Education, 4: 8-21.

London, M., Smither, J. W., & Adsit, D. J. 1997. Accountability: The Achilles’ heel of multisource feedback. Group & Organization Management, 22: 162-184.

Loughry, M. L., Ohland, M. W., & Moore, D. D. 2007. Development of a theory-based assessment of team member effectiveness. Educational and Psychological Measurement, 67: 505-524.

MacDonald, H. A., & Sulsky, L. M. 2009. Rating formats and rater training redux: A context-specific approach for enhancing the effectiveness of performance management. Canadian Journal of Behavioural Science, 41: 227-240.

McAllister, D. J. 1995.
Affect- and cognition-based trust as foundations for interpersonal cooperation in organizations. Academy of Management Journal, 38: 24-59.

McGourty, J., & De Meuse, K. P. 2001. The Team Developer: An assessment and skill building program. Student guidebook. New York: John Wiley & Sons, Inc.

McIntyre, R. M., & Salas, E. 1995. Measuring and managing for team performance: Emerging principles from complex environments. In R. A. Guzzo & E. Salas (Eds.), Team effectiveness and decision making in organizations: 9-45. San Francisco: Jossey-Bass.

Michaelsen, L. K., Knight, A. B., & Fink, L. D. (Eds.). 2004. Team-based learning: A transformative use of small groups in college teaching. Sterling, VA: Stylus Publishing.

Millis, B. J., & Cottell, P. G., Jr. 1998. Cooperative Learning for Higher Education Faculty. Phoenix, AZ: American Council on Education/Oryx Press.

Moran, L., Musselwhite, E., & Zenger, J. H. 1996. Keeping teams on track: What to do when the going gets rough. Chicago, IL: Irwin Professional Publishing.

Niehoff, B. P., & Moorman, R. H. 1993. Justice as a mediator of the relationship between methods of monitoring and organizational citizenship behavior. Academy of Management Journal, 36: 527-556.

Ohland, M. W., Layton, R. A., Loughry, M. L., & Yuhasz, A. G. 2005. Effects of behavioral anchors on peer evaluation reliability. Journal of Engineering Education, 94: 319-326.

Raelin, J. 2006. Does action learning promote collaborative leadership? Academy of Management Learning & Education, 5: 152-168.

Rosenstein, R., & Dickinson, T. L. (1996, August). The teamwork components model: An analysis using structural equation modeling. In R. M. McIntyre (Chair), Advances in definitional team research. Symposium conducted at the annual meeting of the American Psychological Association, Toronto.

Saavedra, R., & Kwun, S. K. 1993. Peer evaluation in self-managing work groups. Journal of Applied Psychology, 78: 450-462.

Schmitt, N., Gooding, R. Z., Noe, R.
A., & Kirsch, M. 1984. Metaanalyses of validity studies published between 1964 and 1982 and the investigation of study characteristics. Personnel Psychology, 37: 407-422.

Shavelson, R. J., & Webb, N. M. 1991. Generalizability Theory. Newbury Park: Sage Publications, Inc.

Sheppard, S., Chen, H. L., Schaeffer, E., Steinbeck, R., Neumann, H., & Ko, P. 2004. Peer assessment of student collaborative processes in undergraduate engineering education. Final Report to the National Science Foundation, Award Number 0206820, NSF Program 7431 CCLI-ASA.

Shore, T. H., Shore, L. M., & Thornton III, G. C. 1992. Construct validity of self- and peer evaluations of performance dimensions in an assessment center. Journal of Applied Psychology, 77: 42-54.

Smith, P. C., & Kendall, L. M. 1963. Retranslation of expectations: An approach to the construction of unambiguous anchors for rating scales. Journal of Applied Psychology, 47: 149-155.

Sonnentag, S., & Volmer, J. 2009. Individual-level predictors of task-related teamwork processes: The role of expertise and self-efficacy in team meetings. Group & Organization Management, 34: 37-66.

Spich, R. S., & Keleman, K. 1985. Explicit norm structuring process: A strategy for increasing task-group effectiveness. Group & Organization Studies, 10: 37-59.

Stevens, M. J., & Campion, M. A. 1994. The knowledge, skill, and ability requirements for teamwork: Implications for human resource management. Journal of Management, 20: 503-530.

Stewart, G. L. 2006. A meta-analytic review of relationships between team design features and team performance. Journal of Management, 32: 29-55.

Stevens, M. J., & Campion, M. A. 1999. Staffing work teams: Development and validation of a selection test for teamwork settings. Journal of Management, 25: 207-228.

Taggar, S., & Brown, T. C. 2006. Interpersonal affect and peer rating bias in teams. Small Group Research, 37: 87-111.

Taggar, S., & Brown, T. C. 2001.
Problem-solving team behaviors: Development and validation of BOS and a hierarchical factor structure. Small Group Research, 32: 698-726.

Van Duzer, E., & McMartin, F. 2000. Methods to improve the validity and sensitivity of a self/peer assessment instrument. IEEE Transactions on Education, 43: 153-158.

Verzat, Byrne, & Fayolle. 2009. Tangling with spaghetti: Pedagogical lessons from games. Academy of Management Learning & Education, 8: 356-369.

Viswesvaran, C., Ones, D. S., & Schmidt, F. L. 1996. Comparative analysis of the reliability of job performance ratings. Journal of Applied Psychology, 81: 557-574.

Viswesvaran, C., Schmidt, F. L., & Ones, D. S. 2005. Is there a general factor in ratings of job performance? A meta-analytic framework for disentangling substantive and error influences. Journal of Applied Psychology, 90: 108-131.

Weldon, E., Jehn, K. A., & Pradhan, P. 1991. Processes that mediate the relationship between a group goal and improved group performance. Journal of Personality and Social Psychology, 61: 555-569.

Wheelan, S. A. 1999. Creating effective teams: A guide for members and leaders. Thousand Oaks, CA: Sage Publications.

Woehr, D. J. 1994. Understanding frame-of-reference training: The impact of training on the recall of performance information. Journal of Applied Psychology, 79: 525-534.

Woehr, D. J., & Huffcutt, A. I. 1994. Rater training for performance appraisal: A quantitative review. Journal of Occupational and Organizational Psychology, 67: 189-206.

Zantow, K., Knowlton, D. S., & Sharp, D. C. 2005. More than fun and games: Reconsidering the virtues of strategic management simulations. Academy of Management Learning & Education, 4: 451-458.
TABLE 1
Descriptive Statistics for CATME BARS and Likert-type Scale Dimensions in Study 1

| Scale | Dimension | N | Min | Max | Mean | SD | α |
|---|---|---|---|---|---|---|---|
| BARS | Contributing to the Team’s Work | 260 | 2.00 | 5.00 | 4.59 | .62 | -- |
| BARS | Interacting With Teammates | 264 | 1.00 | 5.00 | 4.33 | .75 | -- |
| BARS | Keeping the Team on Track | 264 | 1.00 | 5.00 | 4.20 | .78 | -- |
| BARS | Expecting Quality | 264 | 1.00 | 5.00 | 4.18 | .75 | -- |
| BARS | Having Relevant KSAs | 264 | 2.00 | 5.00 | 4.24 | .71 | -- |
| Likert-type | Contributing to the Team’s Work | 273 | 1.88 | 5.00 | 4.31 | .56 | .90 |
| Likert-type | Interacting With Teammates | 273 | 2.20 | 5.00 | 4.32 | .54 | .91 |
| Likert-type | Keeping the Team on Track | 273 | 2.14 | 5.00 | 4.19 | .59 | .87 |
| Likert-type | Expecting Quality | 273 | 2.50 | 5.00 | 4.60 | .49 | .81 |
| Likert-type | Having Relevant KSAs | 273 | 2.25 | 5.00 | 4.44 | .54 | .78 |

TABLE 2
Correlations among CATME BARS and Likert-type Scale Dimensions in Study 1

1 1 2 3 4 5 6 7 8 9 10 BARS Scale Contributing to the Team’s Work Interacting With Teammates Keeping the Team on Track Expecting Quality Having Relevant KSAs -.37** .26** .35** .35** BARS Scale 2 3 4 -.44** .51** .47** -.38** .50** -.52** Likert-type Scale Contributing to the Team’s Work .59** .63** .55** .51** Interacting With Teammates .40** .74** .66** .54** Keeping the Team on Track .47** .66** .74** .71** Expecting Quality .43** .50** .52** .41** Having Relevant KSAs .73** .48** .38** .44** Notes. N = 260-273; Convergent validity estimates are in bold. ** p < .01. 5 6 Likert-type Scale 7 8 9 10 -.81** .63** .57** -- -.65** .58** .78** .50** .45** -.71** .80** .68** .73** -.65** .64** -.59**

TABLE 3
Variance Estimates Provided by Generalizability Theory Models Tested on Each CATME Dimension in Study 1

| Component | Contributing to the Team’s Work: Estimate | % | Interacting With Teammates: Estimate | % | Keeping the Team on Track: Estimate | % | Expecting Quality: Estimate | % | Having Relevant KSAs: Estimate | % |
|---|---|---|---|---|---|---|---|---|---|---|
| Ratee | .12 | 31.45% | .09 | 20.51% | .07 | 15.19% | .03 | 6.94% | .06 | 15.65% |
| Rater | .04 | 11.02% | .14 | 33.10% | .19 | 39.45% | .06 | 12.24% | .05 | 11.98% |
| Scale | .04 | 9.95% | .00 | 0.00% | .00 | 0.00% | .09 | 18.37% | .02 | 5.13% |
| Ratee x Rater | .04 | 10.48% | .06 | 14.92% | .07 | 15.61% | .05 | 10.20% | .06 | 14.67% |
| Ratee x Scale | .02 | 6.18% | .03 | 7.69% | .02 | 4.43% | .01 | 1.22% | .02 | 4.40% |
| Rater x Scale | .05 | 13.44% | .04 | 9.32% | .05 | 10.55% | .17 | 34.69% | .12 | 30.07% |
| Error | .07 | 17.47% | .06 | 14.45% | .07 | 14.77% | .08 | 16.33% | .07 | 18.09% |
| Total Variance | .37 | | .43 | | .47 | | .49 | | .41 | |

Note. Scale = BARS vs. Likert-type.

TABLE 4
Coefficients for Generalizability Theory Models Tested on Each CATME Dimension in Study 1

| Dimension | ρ (rho) | φ (phi) |
|---|---|---|
| Contributing to the Team’s Work | .85 | .71 |
| Interacting With Teammates | .76 | .64 |
| Keeping the Team on Track | .75 | .59 |
| Expecting Quality | .70 | .31 |
| Having Relevant KSAs | .75 | .58 |

TABLE 5
Variance Estimates Provided by Generalizability Theory Models Tested on Each CATME Dimension for Each Scale in Study 1

| Scale | Component | Contributing to the Team’s Work: Estimate | % | Interacting With Teammates: Estimate | % | Keeping the Team on Track: Estimate | % | Expecting Quality: Estimate | % | Having Relevant KSAs: Estimate | % |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BARS | Ratee | .14 | 36.86% | .17 | 29.31% | .08 | 12.77% | .05 | 8.93% | .05 | 10.52% |
| BARS | Rater | .11 | 30.89% | .20 | 35.00% | .31 | 50.90% | .35 | 60.42% | .27 | 52.58% |
| BARS | Ratee x Rater | .12 | 32.25% | .21 | 35.69% | .22 | 36.33% | .18 | 30.65% | .19 | 36.90% |
| BARS | Total Variance | .37 | | .58 | | .61 | | .57 | | .50 | |
| Likert-type | Ratee | .15 | 47.60% | .06 | 20.43% | .09 | 26.63% | .03 | 10.64% | .10 | 37.82% |
| Likert-type | Rater | .08 | 25.88% | .17 | 60.57% | .17 | 51.18% | .13 | 53.19% | .09 | 31.64% |
| Likert-type | Ratee x Rater | .08 | 26.52% | .05 | 19.00% | .08 | 22.19% | .09 | 36.17% | .08 | 30.55% |
| Likert-type | Total Variance | .31 | | .28 | | .34 | | .24 | | .28 | |

TABLE 6
Coefficients for Generalizability Theory Models Tested on Each CATME Dimension for Each Scale in Study 1

| Dimension | BARS ρ (rho) | BARS φ (phi) | Likert-type ρ (rho) | Likert-type φ (phi) |
|---|---|---|---|---|
| Contributing to the Team’s Work | .90 | .82 | .93 | .88 |
| Interacting With Teammates | .87 | .77 | .90 | .67 |
| Keeping the Team on Track | .74 | .54 | .91 | .74 |
| Expecting Quality | .70 | .44 | .70 | .49 |
| Having Relevant KSAs | .70 | .48 | .91 | .83 |

TABLE 7
Descriptive Statistics for and Correlations among All Study Variables

| Variable | Mean | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|---|
| 1. CATME Time 1 | 4.20 | .38 | -- | | | | | | |
| 2. CATME Time 3 | 4.07 | .59 | .44** | -- | | | | | |
| 3. CATME composite | 4.14 | .41 | .77** | .91** | -- | | | | |
| 4. VM Time 2 | 3.72 | .19 | .53** | .53** | .62** | -- | | | |
| 5. VM Time 4 | 3.65 | .37 | .30** | .58** | .55** | .55** | -- | | |
| 6. VM composite | 3.69 | .25 | .42** | .63** | .64** | .79** | .95** | -- | |
| 7. Course Points | 822.73 | 53.55 | .27** | .54** | .51** | .38** | .61** | .60** | -- |

** p < .01.

TABLE 8
Correlations between VM Items and CATME BARS Dimensions in Study 2

| VM Item | Contributing to the team’s work | Interacting with teammates | Keeping the team on track | Expecting quality | Having relevant KSAs |
|---|---|---|---|---|---|
| 1. Failed to do an equal share of the work. (R) | .45** | .32** | .41** | .37** | .40** |
| 2. Kept an open mind/was willing to consider other’s ideas. | .15 | .20* | .14 | .14 | .19 |
| 3. Was fully engaged in discussions during meetings. | .44** | .33** | .42** | .35** | .41** |
| 4. Took a leadership role in some aspects of the project. | .58** | .38** | .52** | .44** | .52** |
| 5. Helped group overcome differences to reach effective solutions. | .49** | .40** | .49** | .45** | .52** |
| 6. Often tried to excessively dominate group discussions. (R) | -.02 | .04 | .02 | .03 | .06 |
| 7. Contributed useful ideas that helped the group succeed. | .48** | .34** | .47** | .43** | .45** |
| 8. Encouraged group to complete the project on a timely basis. | .41** | .24* | .40** | .32** | .34** |
| 9. Delivered work when promised/needed. | .42** | .20* | .27** | .18 | .37** |
| 10. Had difficulty negotiating issues with members of the group. (R) | .27** | .39** | .16 | .30** | .20* |
| 11. Communicated ideas clearly/effectively. | .54** | .42** | .53** | .43** | .52** |

Notes. (R) = Reverse Scored. * p < .05. ** p < .01.

APPENDIX A
Comprehensive Assessment of Team Member Effectiveness - Likert Short Version

Contributing to the Team’s Work

- Did a fair share of the team’s work.
- Fulfilled responsibilities to the team.
- Completed work in a timely manner.
- Came to team meetings prepared.
- Did work that was complete and accurate.
- Made important contributions to the team’s final product.
- Kept trying when faced with difficult situations.
- Offered to help teammates when it was appropriate.

Interacting With Teammates

- Communicated effectively.
- Facilitated effective communication in the team.
- Exchanged information with teammates in a timely manner.
- Provided encouragement to other team members.
- Expressed enthusiasm about working as a team.
- Heard what teammates had to say about issues that affected the team.
- Got team input on important matters before going ahead.
- Accepted feedback about strengths and weaknesses from teammates.
- Used teammates’ feedback to improve performance.
- Let other team members help when it was necessary.

Keeping the Team on Track

- Stayed aware of fellow team members’ progress.
- Assessed whether the team was making progress as expected.
- Stayed aware of external factors that influenced team performance.
- Provided constructive feedback to others on the team.
- Motivated others on the team to do their best.
- Made sure that everyone on the team understood important information.
- Helped the team to plan and organize its work.

Expecting Quality

- Expected the team to succeed.
- Believed that the team could produce high-quality work.
- Believed that the team should achieve high standards.
- Cared that the team produced high-quality work.

Having Relevant Knowledge, Skills, and Abilities

- Had the skills and expertise to do excellent work.
- Had the skills and abilities that were necessary to do a good job.
- Had enough knowledge of teammates’ jobs to be able to fill in if necessary.
- Knew how to do the jobs of other team members.

APPENDIX B
Comprehensive Assessment of Team Member Effectiveness - BARS Version

Your name

Write the names of the people on your team including your own name.
This self and peer evaluation asks about how you and each of your teammates contributed to the team during the time period you are evaluating. For each way of contributing, please read the behaviors that describe a “1,” “3,” and “5” rating. Then confidentially rate yourself and your teammates.

Contributing to the Team’s Work

| Rating | Behaviors |
| --- | --- |
| 5 | Does more or higher-quality work than expected. Makes important contributions that improve the team’s work. Helps to complete the work of teammates who are having difficulty. |
| 4 | Demonstrates behaviors described in both 3 and 5. |
| 3 | Completes a fair share of the team’s work with acceptable quality. Keeps commitments and completes assignments on time. Fills in for teammates when it is easy or important. |
| 2 | Demonstrates behaviors described in both 1 and 3. |
| 1 | Does not do a fair share of the team’s work. Delivers sloppy or incomplete work. Misses deadlines. Is late, unprepared, or absent for team meetings. Does not assist teammates. Quits if the work becomes difficult. |

Interacting with Teammates

| Rating | Behaviors |
| --- | --- |
| 5 | Asks for and shows an interest in teammates’ ideas and contributions. Improves communication among teammates. Provides encouragement or enthusiasm to the team. Asks teammates for feedback and uses their suggestions to improve. |
| 4 | Demonstrates behaviors described in both 3 and 5. |
| 3 | Listens to teammates and respects their contributions. Communicates clearly. Shares information with teammates. Participates fully in team activities. Respects and responds to feedback from teammates. |
| 2 | Demonstrates behaviors described in both 1 and 3. |
| 1 | Interrupts, ignores, bosses, or makes fun of teammates. Takes actions that affect teammates without their input. Does not share information. Complains, makes excuses, or does not interact with teammates. Accepts no help or advice. |

Keeping the Team on Track

| Rating | Behaviors |
| --- | --- |
| 5 | Watches conditions affecting the team and monitors the team’s progress. Makes sure that teammates are making appropriate progress. Gives teammates specific, timely, and constructive feedback. |
| 4 | Demonstrates behaviors described in both 3 and 5. |
| 3 | Notices changes that influence the team’s success. Knows what everyone on the team should be doing and notices problems. Alerts teammates or suggests solutions when the team’s success is threatened. |
| 2 | Demonstrates behaviors described in both 1 and 3. |
| 1 | Is unaware of whether the team is meeting its goals. Does not pay attention to teammates’ progress. Avoids discussing team problems, even when they are obvious. |

Expecting Quality

| Rating | Behaviors |
| --- | --- |
| 5 | Motivates the team to do excellent work. Cares that the team does outstanding work, even if there is no additional reward. Believes that the team can do excellent work. |
| 4 | Demonstrates behaviors described in both 3 and 5. |
| 3 | Encourages the team to do good work that meets all requirements. Wants the team to perform well enough to earn all available rewards. Believes that the team can fully meet its responsibilities. |
| 2 | Demonstrates behaviors described in both 1 and 3. |
| 1 | Satisfied even if the team does not meet assigned standards. Wants the team to avoid work, even if it hurts the team. Doubts that the team can meet its requirements. |

Having Relevant Knowledge, Skills, and Abilities

| Rating | Behaviors |
| --- | --- |
| 5 | Demonstrates the knowledge, skills, and abilities to do excellent work. Acquires new knowledge or skills to improve the team’s performance. Able to perform the role of any team member if necessary. |
| 4 | Demonstrates behaviors described in both 3 and 5. |
| 3 | Has sufficient knowledge, skills, and abilities to contribute to the team’s work. Acquires knowledge or skills needed to meet requirements. Able to perform some of the tasks normally done by other team members. |
| 2 | Demonstrates behaviors described in both 1 and 3. |
| 1 | Missing basic qualifications needed to be a member of the team. Unable or unwilling to develop knowledge or skills to contribute to the team. Unable to perform any of the duties of other team members. |
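
For instructors who collect these ratings electronically, the following is a minimal sketch of one way to summarize them. It assumes each rater gives every team member, including themselves, a rating from 1 to 5 on each of the five dimensions, and it averages the ratings each person receives per dimension, leaving out self-ratings by default. The function name `peer_averages`, the nested-dictionary layout, and the choice of a simple mean are illustrative assumptions, not a scoring procedure specified by the instrument.

```python
from statistics import mean

# The five CATME BARS dimensions, as named on the form.
DIMENSIONS = (
    "Contributing to the Team's Work",
    "Interacting with Teammates",
    "Keeping the Team on Track",
    "Expecting Quality",
    "Having Relevant Knowledge, Skills, and Abilities",
)


def peer_averages(ratings, include_self=False):
    """Average the 1-5 ratings each team member received on each dimension.

    `ratings` is a hypothetical layout: ratings[rater][ratee][dimension] -> int.
    Self-ratings (rater == ratee) are skipped unless include_self is True.
    Returns a dict of the form result[ratee][dimension] -> mean rating.
    """
    collected = {}
    for rater, given in ratings.items():
        for ratee, scores in given.items():
            if rater == ratee and not include_self:
                continue
            for dimension, value in scores.items():
                if dimension not in DIMENSIONS:
                    raise ValueError(f"Unknown dimension: {dimension!r}")
                if value not in (1, 2, 3, 4, 5):
                    raise ValueError(f"Rating must be 1-5, got {value!r}")
                collected.setdefault(ratee, {}).setdefault(dimension, []).append(value)
    return {
        ratee: {dimension: mean(values) for dimension, values in dims.items()}
        for ratee, dims in collected.items()
    }


if __name__ == "__main__":
    # Made-up example data for a three-person team, one dimension only.
    example = {
        "Ana": {"Ana": {"Expecting Quality": 5}, "Ben": {"Expecting Quality": 3}},
        "Ben": {"Ana": {"Expecting Quality": 4}, "Ben": {"Expecting Quality": 5}},
        "Cy": {"Ana": {"Expecting Quality": 2}, "Ben": {"Expecting Quality": 4}},
    }
    print(peer_averages(example))
```

Keeping self-ratings separate lets an instructor compare how students see themselves with how their teammates see them, which may be useful when the ratings are used for developmental feedback.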