Users’ Guide to the Urological Literature

How to Use an Article About a Diagnostic Test

Charles D. Scales, Jr.,* Philipp Dahm, Shahnaz Sultan, Denise Campbell-Scherer

and P. J. Devereaux

From the Division of Urology, Department of Surgery, Duke University (CDS), Durham, North Carolina, Departments of Urology (PD)

and Medicine (SS), College of Medicine, University of Florida, Gainesville, Florida, Department of Family Medicine, University of

Michigan (DCS), Ann Arbor, Michigan, and Departments of Medicine and Clinical Epidemiology and Biostatistics, McMaster University

(PJD), Hamilton, Ontario, Canada

Purpose: Urologists frequently confront diagnostic dilemmas, prompting them to select, perform and interpret additional

diagnostic tests. Before applying a given diagnostic test the user should ascertain that the chosen test would indeed help

decide whether the patient has a particular target condition. In this article in the Users’ Guide to the Urological Literature

series we illustrate the guiding principles of how to critically appraise a diagnostic test, interpret its results and apply its

findings to the care of an individual patient.

Materials and Methods: The guiding principles of how to evaluate a diagnostic test are introduced in the setting of a clinical

scenario. We propose a stepwise approach that addresses the question of whether the study results are likely to be valid, what

the results are and whether these results would help urologists with the treatment of their individual patients.

Results: Some of the issues urologists should consider when assessing the validity of a diagnostic test study are how the

authors assembled the study population, whether they used blinding to minimize bias and whether they used an appropriate

reference standard in all patients to determine the presence or absence of the target disorder. Urologists should next evaluate

the properties of the diagnostic test that indicate the direction and magnitude of change in the probability of disease for a

particular test result. Finally, urologists should ask a series of questions to understand how the diagnostic test may impact

the care of their patients.

Conclusions: Application of the guides presented in this article will allow urologists to critically appraise studies of

diagnostic tests. Determining the study validity, understanding the study results and assessing the applicability to patient

care are 3 fundamental steps toward an evidence-based approach to choosing and interpreting diagnostic tests.

Key Words: urology, evidence-based medicine, likelihood functions, diagnostic techniques and procedures,

sensitivity and specificity

bladder cancer. Your current diagnostic algorithm includes

cystoscopy and cytology, in addition to appropriate imaging,

but cytology requires several days for results. A point of care

system could be a convenient adjunct to your current practice. You decide to perform a literature search to find a study

of the diagnostic accuracy of the point of care urine testing

system that you heard about at the meeting, ie NMP22®.

rologists frequently face diagnostic dilemmas, prompting them to select, perform and interpret additional

diagnostic tests. Ideally the selection and interpretation of diagnostic tests should reflect an evidence-based

practice with the intent of helping the urologist decide

whether a patient has a particular disease. We did not aim

to provide a comprehensive evaluation of a specific diagnostic test, which would require a critical appraisal of the entire

relevant body of literature. Instead, in this third article in

the Users’ Guide to the Urological Literature series we used

a clinical scenario to illustrate the guiding principles of how

to critically appraise an individual study of a diagnostic test.

U

SEARCH OF THE MEDICAL LITERATURE

Applying skills gained from the article How to Perform a

Literature Search1 in the Users’ Guide to the Urological

Literature series, you use the PICOT (population, intervention, comparison, outcome and type) framework to formulate

a focused clinical question to guide your search: “In patients

at risk for bladder cancer (population), how does NMP22

(intervention) compare to cystoscopy with urinary cytology

(comparison) in making the diagnosis of bladder cancer (outcome)?” This study question of diagnosis would best be answered in a prospective trial (study type) or cohort study.

Ideally you would hope to find a systematic review of

several high quality studies addressing this specific topic or

an evidence-based synopsis on this topic. Therefore, you

direct your first search to the Evidence-Based Medicine Reviews function in Ovid®, which is available to you through

CLINICAL SCENARIO

You are a busy urologist and frequently see patients referred

for hematuria. While recently attending the annual meeting

of the American Urological Association, you hear about a

point of care urine testing system to enhance the detection of

Submitted for publication January 25, 2008.

* Correspondence: Department of Surgery, Division of Urology,

Box 2922, Duke University Medical Center, Durham, North Carolina 27710 (telephone: 919-668-4605; FAX: 919-681-7423; e-mail:

chuck.scales@duke.edu).

0022-5347/08/1802-0469/0

THE JOURNAL OF UROLOGY®

Copyright © 2008 by AMERICAN UROLOGICAL ASSOCIATION

469

Vol. 180, 469-476, August 2008

Printed in U.S.A.

DOI:10.1016/j.juro.2008.04.026

470

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

your local institution, and use NMP22 as a search term. This

query returns 3 abstracts, of which none is timely and specifically addresses your clinical question.

You next use the Clinical Queries feature in PubMed®.

You separately search the terms bladder cancer, NMP22

and cytology, which you then combine with the AND function. This yields 114 studies (as accessed November 23,

2007). You then paste the combined search into the Clinical

Queries function for diagnosis, which limits the search to 78

studies. To further minimize the number of studies you limit

your search to the English literature of the last 5 years,

which yields 25 articles. When scanning these titles, you

notice a study in JAMA®, the Journal of the American Medical Association, which you recall as a highly publicized

multicenter study of NMP22.2

The abstract confirms that this study examines the diagnostic accuracy of the NMP22 assay in a prospective, multicenter cohort study that enrolled 1,331 consecutive patients “at elevated risk for bladder cancer.”2 Based on

information that you find in the abstract all patients provided a voided urine sample for NMP22 testing and urinary

cytology before cystoscopy. The investigators concluded that

the NMP22 assay can increase the diagnostic accuracy of

cystoscopy. Intrigued by these results, you print a copy of the

full text article for further review.

HOW TO EVALUATE A STUDY

OF DIAGNOSTIC ACCURACY

The Users’ Guide to the Medical Literature recommends

that the reader of a given study consecutively ask 3 questions.3 1) Are the results of a study valid? 2) What are the

results? 3) How can I apply the results to the care of my

patient? These aspects are interdependent and each is

equally important. For example, we would not care if a new

diagnostic test for bladder cancer was accurate if we were

not convinced that the study was valid. Alternatively a well

designed study of a new test that demonstrates clinically

unimportant results would be of little use in clinical practice, although negative studies are helpful to the extent that

they support unbiased evaluation of diagnostic tests. Finally, study results must be applicable to our patients, and

the likely treatment benefits must be worth the potential

costs, inconveniences and harms. If this third criterion is not

met, study validity and the magnitude of the effect matter

little. Appendix 1 shows the application of these guidelines

to appraise a study of diagnostic accuracy.4,5

Are the Results Valid?

STARD (Standards for Reporting of Diagnostic Accuracy)

criteria serve as a comprehensive guide for the transparent

reporting of diagnostic accuracy studies.6,7 However, for the

purpose of the clinical reader, a more rapid assessment of

study validity relies on a few key questions (Appendix 1).4,5

Primary Guides

Was there an independent, blind comparison to a reference standard? To assess the performance of a diagnostic test a reference standard, sometimes referred to as a gold

standard, must exist to establish the presence or absence of

the target condition. As a reader, you must decide whether

the reference standard used is acceptable, understanding

that no test is perfect. Grossman et al used cystoscopy as the

reference standard.2 Patients were considered to have bladder cancer if a mass was visualized on initial cystoscopy (70)

or on repeat cystoscopy (9), which was only performed for

continued suspicion, within 3 months of initial cystoscopy

and the biopsy, when taken, confirmed malignancy except in

select patients who were deemed poor surgical candidates.

When considering the appropriateness of the reference standard, you recall that cystoscopy is most valuable for diagnosing papillary bladder tumors. However, cystoscopy is not

accurate for making the diagnosis of CIS, which is why you

complement cystoscopy with urinary cytology in your practice. This draws into question the role of cystoscopy as a

single reference standard for all types of bladder cancer. In

an ideal study you might have performed random bladder

biopsies in every patient, although at the cost of significant

feasibility challenges.

The second criterion to consider is blinding. Was the

person performing and interpreting the test under investigation (the index test) unaware of the results of the reference standard and vice versa? Lack of blinding can have a

substantial impact on test results and it may make the test

appear more accurate or useful than it actually is.8,9 Blinding is particularly important when interpreting an index

test or reference standard involves some degree of subjectivity. When a diagnostic test relies on subjective interpretation, ie a cytology specimen or radiographic image, 2 observers may interpret the test results differently (interobserver

variability). Diagnostic tests with low agreement among observers may not be reliable in practice.

Grossman et al explicitly stated that staff members performing the NMP22 test were blinded to the results of cystoscopy.2 In addition, physicians performing cystoscopy were

blinded to the results of the NMP22 essay. Whereas the readout of the NMP22 assay leaves little room for subjectivity, one

could easily envision that a positive index test, eg NMP22,

might lead the urologist to perform a more thorough cystoscopic examination or have a lower threshold for biopsying an

abnormal appearing bladder lesion. However, potential

sources of bias appear to have been appropriately addressed, ie

there was blinding of the index test and reference standard. In

addition, the investigators reported that “cytological examinations. . .[were conducted]. . .physically distant from cystoscopy

and NMP22 evaluations”2 and reportedly at a later time,

thereby implying that urinary cytology results did not affect

and were not affected by the other tests.

Did the patient sample include an appropriate spectrum of patients to whom the diagnostic test would be

applied in clinical practice? A diagnostic test for bladder cancer is useful to the extent that it is able to distinguish

patients with or without bladder cancer. To demonstrate

this ability you would like to see a diagnostic test studied in

a group of patients similar to yours, in which diagnostic

uncertainty exists. The study should include a broad spectrum of patients with disease of different severity, ie from

small low grade tumors to large high grade tumors, and

patients without the disease with symptoms commonly associated with bladder cancer, ie hematuria.10 –17 This point

is particularly important because diagnostic tests perform

differently, depending on the disease severity in a given

patient population, which is a phenomenon known as spectrum bias.18

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

Therefore, you review the study population in which the

study was performed. Grossman et al reported that patients

eligible for the NMP22 study had no history of bladder

malignancy but all had “bladder cancer risk factors or symptoms, such as smoking, hematuria or dysuria.”2 Patients

were drawn from various practice settings, including private

practice, academic centers and veteran facilities. A detailed

summary of the patient indications for cystoscopy would be

helpful but this was not provided. Nevertheless, these patients appear similar to those whom you evaluate and they

appear to represent a reasonable spectrum in terms of bladder cancer risk.

Secondary Guides

Did the results of the test being evaluated influence the

decision to perform the reference standard? An assessment of diagnostic accuracy is susceptible to bias if the index

test results influence the decision to perform the reference

test (verification bias).19 –25 Verification bias is more common when the reference standard is an invasive procedure.24 Flow diagrams are encouraged in the reporting of

diagnostic accuracy studies because they provide a transparent method to communicate the presence or absence of verification bias to the reader.6,7 The evaluation of the NMP22

point of care test includes a flow diagram of the study, which

demonstrates that all 1,331 patients in whom the index test

(NMP22) was done underwent the reference test (cystoscopy) regardless of the index test result.2 A total of 49

patients did not undergo cystoscopy and they were excluded

for protocol violation. While the lack of cystoscopy results in

these patients could be a potential source of verification bias

if their NMP22 test was negative, we have no reason to

believe that this was the case. We conclude that the decision

to perform cystoscopy as the reference standard was indeed

independent of the NMP22 test result.

Were the methods for performing the test described in

sufficient detail to permit replication? As clinicians, we

like to use new diagnostic technologies if we deem them

worthwhile. Therefore, it is important for studies of diagnostic accuracy to describe in sufficient detail the methodology

for performing a diagnostic test. If a test requires equipment

or especially trained personnel not available in your practice, the validity and results of the study do not really matter

since it is not a technique that you can use. Thus, we must

understand the diagnostic test to assess the feasibility of

performing it in our care setting. In addition, differences in

test protocols have been shown to produce differences in

diagnostic accuracy for some tests.9,26 Therefore, including

these details is recommended by STARD criteria.6,7 Grossman

et al provided a detailed explanation with references to the

supporting literature in their methods section.2 It appears

that NMP22 is a diagnostic test that clinic personnel with

basic training could perform and, therefore, it is likely applicable to the practice of most urologists.

Having completed our study validity assessment for the

NMP22 urine assay diagnostic accuracy report, we conclude

that by and large the study by Grossman et al2 meets the

proposed primary and secondary validity criteria. The main

issue that we found was whether cystoscopy should be considered an adequate gold standard. Since cystoscopy is not

an effective means of making the diagnosis of CIS, which is

why we perform cytology and random bladder biopsy in

471

addition, when appropriate, some cases of bladder cancer

may have been missed. In addition, we would have liked to

have seen a more detailed description of the study population. Apart from these issues the investigators used important methodological safeguards to avoid bias.27 In addition,

information that relates to the feasibility of reproducing

these results in your clinical practice is provided. Therefore,

you decide that the study merits further review and proceed

to the results of the study.

What are the Results?

For any patient needing a diagnostic test clinicians have a

sense of the probability of disease in that patient, which is

known as the pretest probability. For example, consider a

70-year-old male with a 100 pack-year history of smoking

and a 42-year-old nonsmoking female who present with microscopic hematuria. The pretest probability, ie the probability of disease before any specific testing, of cancer in the

70-year-old male smoker is much higher than the probability of bladder cancer in the younger woman without risk

factors. Information about pretest probabilities can be derived from various sources, including the published literature on patients with similar symptom presentation, the

institutional registry or personal clinical experience and

intuition. Another source of information to estimate pretest

probabilities can come from the same studies that provide

data on diagnostic tests. For example, in the study by Grossman et al approximately 6% of all patients (79 of 1,331) were

ultimately found to have bladder cancer.2

The next step is to decide how the results of the NMP22

test change this pretest probability estimate. In other words,

urologists should be interested in the characteristic of the

test that indicates the direction and magnitude of change in

the probability of disease. This test characteristic is best

captured by a measure called the likelihood ratio (Appendix

2). LR is the characteristic of the test that links the pretest

probability to the probability of the target condition after

obtaining the test result, also called the posttest probability.

What are the LRs Associated With the Test Results?

LR⫹ is defined as the likelihood of a positive test in individuals with the disease compared to the likelihood of a positive

test in those without the disease. As an example, consider

the results of the study by Grossman et al (table 1).2 There

were 79 patients who were found to have bladder cancer and

1,252 in whom bladder cancer was ruled out. How likely is a

positive NMP22 test in patients with bladder cancer? Table

1 shows that 44 of 79 patients (55.7%) with bladder cancer

had a positive NMP22 test. Conversely how likely is a positive NMP22 in patients without bladder cancer? The inves-

TABLE 1. Evaluation of diagnostic test for bladder cancer with

point of care NMP22 proteomic assay2

No. Cystoscopy (reference test)

NMP22 Result

Pos

Neg

Totals

Bladder Ca

No Bladder Ca

44

35

179

1,073

79

1,252

Sensitivity ⫽ 44/79 ⫽ 55.7%, specificity ⫽ 1,073/1,252 ⫽ 85.7%, LR⫹ ⫽

(44/79)/(179/1,252) ⫽ 3.90 and LR⫺ ⫽ (35/79)/(1,073/1,252) ⫽ 0.52.

472

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

tigators found a positive NMP22 test in 179 of 1,252 patients

(14.3%) without bladder cancer. The ratio of these 2 proportions equals 3.9, which is the LR for a positive NMP22 test.

In other words, a positive NMP22 test is 3.9 times more

likely to occur in patients with than without bladder cancer.

Note that the first part of the ratio (the probability of a

positive test in patients with the condition) is sensitivity.

Similarly LR⫺ is defined as the likelihood of a negative test

in patients with the condition compared to the likelihood of

a negative test in patients without the condition. In the case

of the NMP22 test LR⫺ ⫽ (35/79)/(1,073/1,252) or 0.5.

In this study the LRs for the NMP22 test are not presented but Grossman et al provided the data necessary to

calculate them.2 This emphasizes the importance of understanding the definitions of LR⫹ and LR⫺, so that these

measures can be derived from the information provided in a

2 ⫻ 2 table. Alternatively if not enough information is provided to construct a 2 ⫻ 2 table for the test results, as is the

case for urine cytology in this article, LRs can be calculated

from sensitivity and specificity values (Appendix 2). LR⫹ is

defined as (sensitivity)/(1 ⫺ specificity) and for urine cytology it is calculated as 0.158/(1 ⫺ 0.992) or 19.8. LR⫺ is (1 ⫺

sensitivity)/(specificity) and for cytology it is calculated as

(1 ⫺ 0.158)/0.992 or 0.85.

Now that we have learned how to calculate LR, how do we

apply LRs in clinical practice? LR indicates by what factor

the result of a given diagnostic test would increase or decrease the pretest probability of a condition. If a test has an

LR of 1, the posttest probability of the target condition is

exactly the same as the pretest probability, ie the test is

useless. If LR is greater than 1, this results in a posttest

probability that is higher than the pretest probability. Conversely if LR is less than 1, this results in a posttest probability that is lower than the pretest probability. The size of

this effect is related to the size of the LR. 1) LRs greater than

10 or less than 0.1 create large and often conclusive changes

from pretest to posttest probability. 2) LRs of 5 to 10 and 0.1

to 0.2 create moderate changes in pretest to posttest probability. 3) LRs of 2 to 5 and 0.2 to 0.5 generate small changes

in probability. 4) LRs of 1 to 2 and 0.5 to 1 are unlikely to

alter probability to a clinically significant degree.4

The importance of the effect of the LR, ie whether the test

results in a clinically meaningful change, is not simply determined by how large or small an LR is. It is also determined by the pretest probability.

Having determined the LRs, how do we use them to link

the pretest probability to the posttest probability? One approach, although tedious, is to convert the pretest probability

to the pretest odds (odds ⫽ probability/[1 ⫺ probability]), which

is then multiplied by the LR to obtain the posttest odds.4 We

then need to convert the posttest odds back to a posttest probability (probability ⫽ odds/[1 ⫹ odds]). An easier approach is to

use a Fagan nomogram, which allows you to read off the

posttest probability by aligning pretest probability and LR for

a given clinical scenario (see figure).28 An interactive version of

the Fagan nomogram28 is available at the CEBM (Centre for

Evidence-Based Medicine) website (http://www.cebm.net).

Let us return to the clinical example of the 70-year-old

male with a smoking history and gross painless hematuria.

While we may not know the exact pretest probability of

bladder cancer in this patient, we can make a plausible

estimate of 30% based on clinical experience. Alternatively if

data or clinical experience does not permit a specific esti-

Nomogram for converting pretest to posttest probability using LR.28

Instructions for use: identify pretest probability of disease on left

axis, then identify LR of diagnostic test on middle axis. Connect

these 2 points with straight line and continue this line to right

(posttest) axis. Point where line crosses posttest axis is estimated

posttest probability. Reprinted with permission from Massachusetts

Medical Society. Copyright © 1975 Massachusetts Medical Society.

All rights reserved.

mate, we can identify an upper and lower limit to our plausible pretest probability, for example 10% to 50%, and then

use these values to generate upper and lower limits of posttest probability. Using our pretest probability of 30% and

given a positive NMP22 test with an LR⫹ of 3.9, the Fagan

nomogram yields a posttest probability of approximately

65%. In comparison, if cytology was positive (LR⫹ ⫽ 19.8) in

the same patient, we would arrive at a posttest probability of

approximately 90%. It is noteworthy in this context that the

high LR⫹ for cytology derives from the high specificity of the

test, rather than from its sensitivity. This example demonstrates that cytology is a better diagnostic test than NMP22

for increasing the probability of bladder cancer, as reflected

by its higher LR⫹.

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

Alternatively we could ask whether a negative NMP22 or

cytology result would help us lower the probability of bladder

cancer. Again, assuming a pretest probability of 30%, and an

LR⫺ of 0.52 and 0.85 for NMP22 and cytology we arrive at

posttest probabilities of approximately 18% and 27%, respectively. Comparing pretest and posttest probabilities, we note

that a negative NMP22 test resulted in a greater change than

cytology, thereby indicating that it is a better test for decreasing the suspicion of bladder cancer. However, neither of the 2

tests has altered the probability of bladder cancer dramatically

enough that we are likely to be comfortable assuring the patient that he does not have bladder cancer.

There are other measures in addition to LR to characterize the properties of a diagnostic test. Many urologists are

familiar with the terms sensitivity and specificity. Sensitivity is defined as the proportion of individuals with disease in

whom the test is positive and specificity is defined as the

proportion of individuals without the disease in whom the test

is negative. These values are often presented in the context of

a 2 ⫻ 2 table (Appendix 2). Grossman et al did not present a 2

⫻ 2 table of the results of the NMP22 test compared to the

reference standard, but rather sufficient data to construct a

table (table 1).2 For the NMP22 test sensitivity is calculated

as 44/79 or 55.7%. Specificity for the NMP22 point of care

assay is 1,073/1,252 or 85.7%. The investigators also provided these measures for cytology (15.8% sensitivity and

99.2% specificity) but they reported insufficient data to reconstruct a 2 ⫻ 2 table for cytology.

While the sensitivity/specificity framework provides a

method for comparing diagnostic tests, it is not helpful to

clinicians who are most interested in to what extent a positive or negative test alters the patient probability of disease. The reason is that the calculation for most physicians

to go from pretest probability to posttest probability, ie the

probability of disease in our patient after the test, using

sensitivity and specificity is so complicated and long that

physicians must guess at the posttest probability, and unfortunately the guess may frequently be wrong. LR provides

a more clinically relevant framework for understanding the

implications of a diagnostic test result in our patient. With

the Fagan nomogram physicians can accurately know the

posttest probability within seconds without having to guess.

Of note, neither LR, sensitivity nor specificity varies with

the prevalence of disease in the population (table 2). PPV

TABLE 2. Effect of disease prevalence on diagnostic

test properties

No. Disease

Present

Disease prevalence ⫽ 5%:*

Pos test

Neg test

Totals

Disease prevalence ⫽ 20%:†

Pos test

Neg test

Totals

Absent

Total No.

40

10

95

855

135

865

50

950

1,000

160

40

80

720

240

760

200

800

1,000

* LR⫹ ⫽ (40/50)/(95/950) ⫽ 8, sensitivity ⫽ 40/50 ⫽ 80% and PPV ⫽ 40/135 ⫽

29.6%, and LR⫺ ⫽ (10/50)/(855/950) ⫽ 0.22, specificity ⫽ 855/950 ⫽ 90%

and NPV ⫽ 855/865 ⫽ 98.8%.

† LR⫹ ⫽ (160/200)/(80/800) ⫽ 8, sensitivity ⫽ 160/200 ⫽ 80% and PPV ⫽

160/240 ⫽ 67%, and LR⫺ ⫽ (40/200)/(720/800) ⫽ 0.22, specificity ⫽ 720/800 ⫽

90% and NPV ⫽ 720/760 ⫽ 94.7%.

473

and NPV change with the prevalence of disease and, therefore, they are considered unreliable measures of test performance. For example, if a test is derived in the setting of a

urology clinic in a population with a higher pretest probability of bladder cancer and one wishes to apply it in another

clinical setting such as primary care, NPV and PPV cannot

be used. LRs are independent of prevalence and, therefore,

they are conserved across clinical settings. Table 2 shows

the hypothetical example of diagnostic test for bladder cancer with 80% sensitivity and 90% specificity that is applied

in patient populations with different bladder cancer prevalence. Whereas LRs, sensitivity and specificity are constant

regardless of whether the disease prevalence is 5% or 20%,

PPV and NPV change with disease prevalence. The implication of this observation is that reports of PPV and NPV in

the evaluation of a diagnostic test have little value since

there is no assurance that the disease prevalence in your

setting would be similar to that in the study population.

Again, LRs are clinically more useful because they do not

vary with disease prevalence and, therefore, they may be

applied by clinicians with different patient populations, ie in

the evaluation of hematuria by primary care physicians vs

urologists. Another strength of LRs is that, unlike sensitivity and specificity, they may be applied to measures with

nondichotomous outcomes, as discussed in greater detail by

Jaeschke et al.4

These examples demonstrate the usefulness of LR for

comparing 2 diagnostic tests and for assisting our clinical

evaluation of patients by modifying pretest probabilities.

When evaluating our article about a diagnostic test by

Grossman et al,2 we first assessed the validity of the study

and now we have ascertained how to generate and use the

LR using the study results. The last step in evaluating our

article is to understand how the test is applicable to patient

care.

Will the Results Help

Me in Caring for My Patient?

Will the reproducibility of the test result and its interpretation be satisfactory in my setting? The value of a

diagnostic test often depends on its reproducibility when

applied to a patient, which refers to whether the test yields the

same result when a patient disease state has not changed. Test

reproducibility can be affected by various factors, including

features of the test itself. For example, if degradation of the

monoclonal antibody used for the NMP22 test occurs in

storage, the test may not perform reproducibly with time.

Other tests may lack reproducibility due to varying interpretations by subjective observers. For example, different

pathologists viewing cytology samples may vary in their

interpretations of abnormal-appearing cells. If a study describes a test as being highly reproducible, this may be

because the test is simple and easy to use, or because the

investigators are highly skilled at applying the test. In the

latter case the test may not perform as well when you apply

it in your practice setting. In the case of the NMP22 test the

detailed test description suggests that it may be used with

minimal training and it does not rely on subjective interpretation. Thus, it is likely to be reproducible in your setting.

Are the results applicable to my patient? Another important issue to consider is how similar the patients in your

practice are to the patients in the study population used to

474

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

evaluate the diagnostic test. As reported previously, spectrum bias may cause a diagnostic test to perform differently

in patient populations with different degrees of disease severity.18 Therefore, reviewing the practice setting and the

types of patients studied is important. In our example patients were recruited from academic, veteran and private

practice facilities but detailed eligibility criteria were not

reported.2 Indications for testing were urinary symptoms,

such as frequency or hematuria, or risk factors for bladder

cancer, such as smoking. While Grossman et al reported

patient demographics, we have little information on the

clinical characteristics of these patients, such as the proportion with a smoking history or with urinary frequency. No

distinction was made between patients presenting with

gross and microscopic hematuria. Therefore, our ability to

generalize these study findings is hindered by the lack of a

detailed description of the study population.

Will the results change my management? After we have

decided that the results are applicable to our patient, we

must decide whether they would change our management.

Before ordering any type of diagnostic test it is helpful to

know what probabilities we are willing to accept to confirm

or refute the target diagnosis. For example, consider our

evaluation of a patient with hematuria. In the presence of

negative office cystoscopy and negative cytology the probability of bladder cancer is low and no further testing is

indicated unless prompted by new symptoms. In other words

the probability of disease is below the testing threshold. On

the other hand, if a patient has negative office cystoscopy

but positive cytology, the probability of bladder cancer is

much higher and these patients typically undergo further

testing, eg bladder biopsy. In this case the probability of

disease is between the testing and treatment thresholds.

Finally, assume that our patient has a history of bladder CIS

treated with BCG. With negative office cystoscopy but positive cytology a treatment alternative (although several exist) would be to proceed with a second course of BCG therapy

without further testing. The probability of disease is above

the treatment threshold and we may be willing to administer BCG therapy without further testing. These treatment

and test thresholds may vary among patients and disease

states because they are a matter of clinical judgment and

patient values. Considerations that play into the decision

making process include the danger of an undiagnosed or

untreated condition, the potential risks of therapy, eg BCG

vs radical cystectomy, and the overall medical condition of

an individual patient.

Ideally what we hope to achieve when performing a diagnostic test is to increase the probability of disease in that

patient beyond the treatment threshold or lower it to a level

at which we are comfortable assuming that the patient does

not have the target condition, eg bladder cancer. In our

example recall that LR⫹ for the NMP22 test was 3.9, which

will have a relatively small effect on the conversion from

pretest to posttest probability (table 3). On the other hand,

LR⫹ for cytology was 19.8, which causes large changes from

pretest to posttest probability. Table 3 provides an overview

of the posttest probabilities that result from applying the calculated LR⫹ and LR⫺ for NMP22 and cytology to different

pretest probabilities. This comparison shows that the NMP22

test results in relatively small changes from pretest to posttest

TABLE 3. LR effect on pretest probability

% Pretest Probability

LR ⫽ 19.8:

80 (high)

50

30

10 (low)

LR ⫽ 3.9:

80 (high)

50

30

10 (low)

LR ⫽ 0.85:

80 (high)

50

30

10 (low)

LR ⫽ 0.52:

80 (high)

50

30

10 (low)

% Posttest Probability

99

95

89

69

94

80

63

30

77

46

27

9

68

34

18

5

probability, therefore, indicating that it may have little impact

on how we would treat our patient. Finally, as with assessments of the magnitude of benefit of clinical treatments we like

to base them on more than 1 well done study. In the same way

diagnostic test characteristics would ideally be determined

based on more than a single investigation.

Will patients be better off as a result of the test? A

diagnostic test ultimately should be judged by whether it

adds information beyond what is otherwise available and

whether this information leads to a change in management

that is beneficial to the patient.5 When a diagnostic test

carries a relatively low risk, the target condition is serious

and treatment is effective, the value of the test is clear.

Other tests may be accurate but may not have a significant

impact on patient outcome.

RESOLUTION OF CLINICAL SCENARIO

Having critically appraised an original research study that

you found on the role of the NMP22 test in the diagnostic

evaluation of patients at increased risk for bladder cancer, you

must now decide whether to apply it in the care of future

patients. You conclude that the study was well done, yielding

results that are likely valid. However, uncertainty remains as

to whether NMP22 testing would change the management of

your cases. Therefore, based on the understanding of the results that you gained through the critical appraisal process you

decide not to change your established practice at this time. You

further recognize that the decision to adopt a new diagnostic

test should ideally be based on several well designed studies in

different patient populations that also consider the usefulness

of the test and the practicalities of its implementation, including its costs and ease of use.

CONCLUSIONS

We have outlined a simple approach to an evidence-based

practice for using diagnostic tests. To evaluate a study of

diagnostic test accuracy we apply 3 simple questions. 1) Are

the results of the study valid? 2) What are the results?

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

3) Will the results help me in caring for my patients? Applying this framework will further our understanding of the

literature and enhance patient care through an evidencebased approach to diagnosis.

ACKNOWLEDGMENTS

The concepts presented were taken in part from Users’

Guide to the Medical Literature.3

Abbreviations and Acronyms

BCG

CIS

LR

LR⫺

LR⫹

NPV

PPV

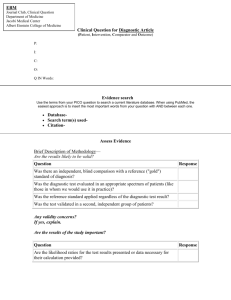

APPENDIX 1

Guidelines for Evaluating an Article About

a Diagnostic Test.4,5

2.

Are the Results of the Study Valid?

Primary Guides

Was there an independent, blind comparison with a reference standard?

Did the patient sample include an appropriate spectrum of patients to

whom the diagnostic test will be applied in clinical practice?

Secondary Guides

Did the results of the test being evaluated influence the decision to

perform the reference standard?

Were the methods for performing the test described in sufficient detail to

permit replication?

3.

Will the Results Help Me in Caring for My Patients?

Will the reproducibility of the test results and its interpretation be

satisfactory in my setting?

Are the results applicable to my patient?

Will the results change my management?

Will patients be better off as a result of the test?

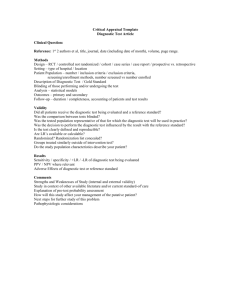

APPENDIX 2

4.

5.

6.

7.

8.

Diagnostic Test Properties

9.

Reference Standard

Reference Positive Reference Negative

Test is positive

Test is negative

Total

True positive (a)

False negative (c)

a⫹c

False positive (b)

True negative (d)

b⫹d

Total

a⫹b

c⫹d

a⫹b⫹c⫹d

10.

Terms and Calculations

Sensitivity ⫽ a/(a ⫹ c)

Question answered: What proportion of patients with disease has a

positive test?

Specificity ⫽ d/(b ⫹ d)

Question answered: What proportion of patients without disease has a

negative test?

11.

PPV ⫽ a/(a ⫹ b)

Question answered: What proportion of positive tests is correct?

12.

NPV ⫽ d/(c ⫹ d)

Question answered: What proportion of negative tests is correct?

13.

LR⫹ ⫽ [a/(a ⫹ c)]/[b/(b ⫹ d)]

Or LR⫹ ⫽ sensitivity/(1⫺specificity) ⫽

probability of a positive test in diseased people/probability of a positive

test in nondiseased people

Question answered: How much will a positive test change my pretest

probability of disease?

LR⫺ ⫽ [c/(a ⫹ c)]/[d/(b ⫹ d)]

Or LR⫺ ⫽ (1⫺sensitivity)/specificity) ⫽

probability of negative test in diseased people/probability of a negative

test in nondiseased people

Question answered: How much will a negative test change my pretest

probability of disease?

14.

15.

16.

PPV and NPV vary with disease prevalence in the study population. Therefore, these properties should not be applied to compare test performance

between studies or for individual patients (table 2).

⫽

⫽

⫽

⫽

⫽

⫽

⫽

bacillus Calmette-Guerin

carcinoma in situ

likelihood ratio

LR of a negative test

LR of a positive test

negative predictive value

positive predictive value

REFERENCES

1.

What are the Results?

Are LRs for the test results presented or data necessary for their

calculation provided?

475

Krupski TL, Dahm P, Fesperman SF and Schardt CM: User’s

guide to the urological literature: how to perform a literature search. J Urol 2008; 179: 1264.

Grossman HB, Messing E, Soloway M, Tomera K, Katz G,

Berger Y et al: Detection of bladder cancer using a pointof-care proteomic assay. JAMA 2005; 293: 810.

Guyatt G and Rennie D: Users’ Guide to the Medical Literature, 4th ed. Chicago: AMA Press 2002.

Jaeschke R, Guyatt GH and Sackett DL: Users’ guides to the

medical literature. III. How to use an article about a diagnostic test. B. What are the results and will they help me in

caring for my patients? The Evidence-Based Medicine

Working Group. JAMA 1994; 271: 703.

Guyatt GH, Tugwell PX, Feeny DH, Haynes RB and Drummond M: A framework for clinical evaluation of diagnostic

technologies. CMAJ 1986; 134: 587.

Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou

PP, Irwig LM et al: Towards complete and accurate reporting of studies of diagnostic accuracy: the STARD Initiative.

Ann Intern Med 2003; 138: 40.

Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou

PP, Irwig LM et al: The STARD statement for reporting

studies of diagnostic accuracy: explanation and elaboration.

Ann Intern Med 2003; 138: W1.

Lijmer JG, Mol BW, Heisterkamp S, Bonsel GJ, Prins MH, van

der Meulen JH et al: Empirical evidence of design-related

bias in studies of diagnostic tests. JAMA 1999; 282: 1061.

Detrano R, Gianrossi R and Froelicher V: The diagnostic accuracy of the exercise electrocardiogram: a meta-analysis of

22 years of research. Prog Cardiovasc Dis 1989; 32: 173.

Stein PD, Gottschalk A, Henry JW and Shivkumar K: Stratification of patients according to prior cardiopulmonary disease and probability assessment based on the number of mismatched segmental equivalent perfusion defects. Approaches

to strengthen the diagnostic value of ventilation/perfusion

lung scans in acute pulmonary embolism. Chest 1993; 104:

1461.

Fletcher RH: Carcinoembryonic antigen. Ann Intern Med

1986; 104: 66.

Harris JM Jr: The hazards of bedside Bayes. JAMA 1981; 246:

2602.

Hlatky MA, Pryor DB, Harrell FE Jr, Califf RM, Mark DB and

Rosati RA: Factors affecting sensitivity and specificity of

exercise electrocardiography. Multivariable analysis. Am J

Med 1984; 77: 64.

Lachs MS, Nachamkin I, Edelstein PH, Goldman J, Feinstein

AR and Schwartz JS: Spectrum bias in the evaluation of

diagnostic tests: lessons from the rapid dipstick test for

urinary tract infection. Ann Intern Med 1992; 117: 135.

Moons KG, van Es GA, Deckers JW, Habbema JD and Grobbee

DE: Limitations of sensitivity, specificity, likelihood ratio,

and Bayes’ theorem in assessing diagnostic probabilities: a

clinical example. Epidemiology 1997; 8: 12.

O’Connor PW, Tansay CM, Detsky AS, Mushlin AI and Kucharczyk

W: The effect of spectrum bias on the utility of magnetic resonance imaging and evoked potentials in the diagnosis of suspected multiple sclerosis. Neurology 1996; 47: 140.

476

17.

18.

19.

20.

21.

22.

HOW TO USE ARTICLES ABOUT DIAGNOSTIC TESTS

Philbrick JT, Horwitz RI, Feinstein AR, Langou RA and Chandler JP: The limited spectrum of patients studied in exercise test research. Analyzing the tip of the iceberg. JAMA

1982; 248: 2467.

Ransohoff DF and Feinstein AR: Problems of spectrum and

bias in evaluating the efficacy of diagnostic tests. N Engl

J Med 1978; 299: 926.

Begg CB and Greenes RA: Assessment of diagnostic tests when

disease verification is subject to selection bias. Biometrics

1983; 39: 207.

Cecil MP, Kosinski AS, Jones MT, Taylor A, Alazraki NP,

Pettigrew RI et al: The importance of work-up (verification)

bias correction in assessing the accuracy of SPECT thallium201 testing for the diagnosis of coronary artery disease.

J Clin Epidemiol 1996; 49: 735.

Choi BC: Sensitivity and specificity of a single diagnostic test in the

presence of work-up bias. J Clin Epidemiol 1992; 45: 581.

Diamond GA: Off Bayes: effect of verification bias on posterior

probabilities calculated using Bayes’ theorem. Med Decis

Making 1992; 12: 22.

23.

Diamond GA, Rozanski A, Forrester JS, Morris D, Pollock BH,

Staniloff HM et al: A model for assessing the sensitivity and

specificity of tests subject to selection bias. Application to

exercise radionuclide ventriculography for diagnosis of coronary artery disease. J Chronic Dis 1986; 39: 343.

24. Greenes RA and Begg CB: Assessment of diagnostic technologies. Methodology for unbiased estimation from samples of

selectively verified patients. Invest Radiol 1985; 20: 751.

25. Zhou XH: Effect of verification bias on positive and negative

predictive values. Stat Med 1994; 13: 1737.

26. Philbrick JT, Horwitz RI and Feinstein AR: Methodologic

problems of exercise testing for coronary artery disease:

groups, analysis and bias. Am J Cardiol 1980; 46: 807.

27. Jaeschke R, Guyatt G and Sackett DL: Users’ guides to the

medical literature. III. How to use an article about a

diagnostic test A. Are the results of the study valid?

Evidence-Based Medicine Working Group. JAMA 1994;

271: 389.

28. Fagan TJ: Letter to the editor: a nomogram for applying likelihood ratios. N Engl J Med 1975; 293: 257.