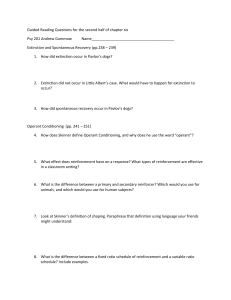

5 reward and punishment - Duke University | Psychology
