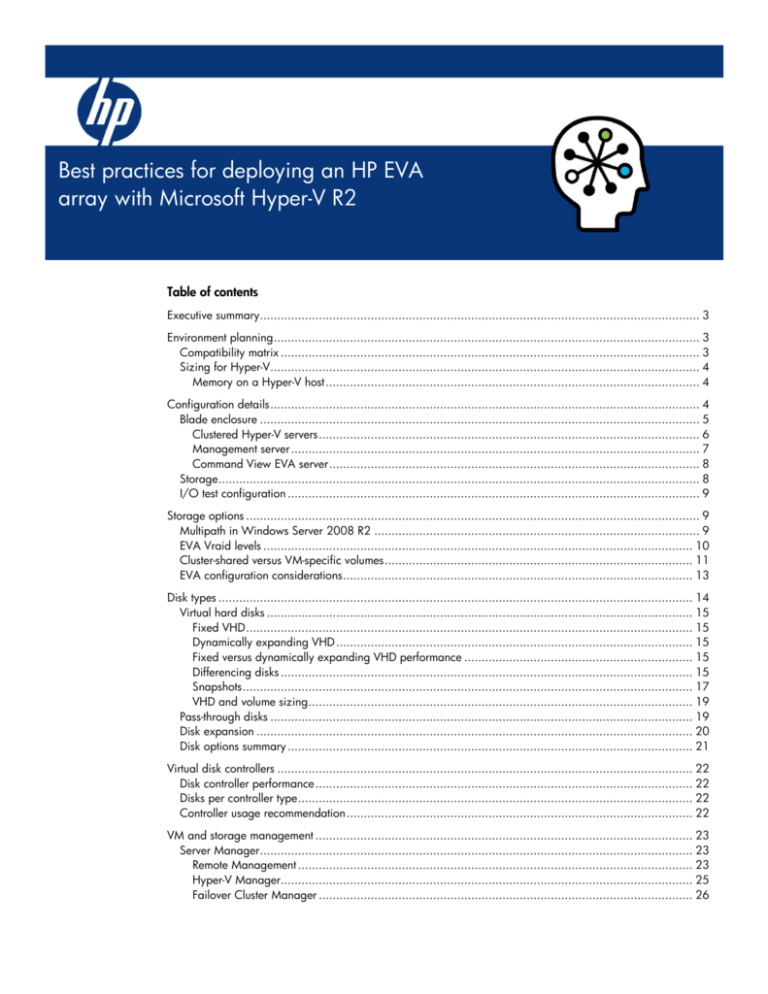

Best practices for deploying an HP EVA array with Microsoft Hyper
