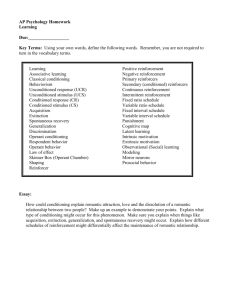

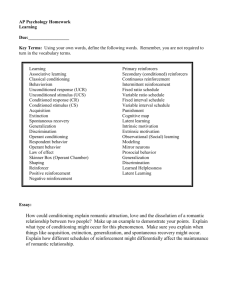

What Teachers Need to Know About Learning
