Multicores, Multiprocessors, and Clusters

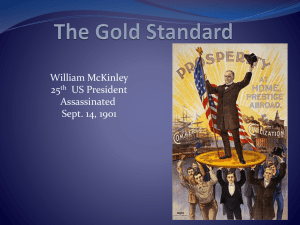

P. A. Wilsey, Univ. of Cincinnati

Classification of Parallelism

Classification from the textbook:

| Hardware | Sequential software | Concurrent software |
| --- | --- | --- |
| Serial | Some problem written as a sequential program (the MATLAB example from the textbook). Execution on a serial platform. | Some problem written as a concurrent program (the O/S example from the textbook). Execution on a serial platform. |
| Parallel | Some problem written as a sequential program (the MATLAB example from the textbook). Execution on a parallel platform. | Some problem written as a concurrent program (the O/S example from the textbook). Execution on a parallel platform. |

Flynn's Classification of Parallelism

Legend: CU: control unit; PU: processor unit; MM: memory unit; SM: shared memory; IS: instruction stream; DS: data stream.

[Figure: block diagrams of (a) SISD computer, (b) SIMD computer, (c) MISD computer, (d) MIMD computer.]

Single program, multiple data (SPMD): a single program run on every node of a MIMD-style platform.

Parallelism is Hard / Amdahl's Law

[Figure: Amdahl's Law, idealized parallelism. Speedup versus number of processors (1 to 65536) for programs that are 50%, 75%, 90%, and 95% parallel.]

$$\text{Speedup} = \frac{1}{(1 - \text{Fraction}_{\text{enhanced}}) + \dfrac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}}}$$

Restating:

$$\text{Speedup} = \frac{1}{(1 - \%\,\text{affected}) + \%\,\text{left after optimization}}$$

Hence the drive to find scalable problem sizes (see the textbook example on pg 506) or embarrassingly parallel applications.

Speed-up Challenges

Strong scaling: increasing the hardware parallelism achieves increased speedup at a fixed problem size.

Weak scaling: increased speedup is achieved only by increasing the problem size as the hardware parallelism increases.

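
To make Amdahl's Law concrete, the following minimal sketch evaluates the speedup formula for the parallel fractions shown in the plot, treating the number of processors as the speedup of the enhanced (parallel) portion. The function names `amdahl_speedup` and `amdahl_limit` are illustrative assumptions and do not come from the slides or the textbook.

```python
import math


def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup when `fraction_enhanced` of the run time is sped up
    by a factor of `speedup_enhanced` (Amdahl's Law)."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must be between 0 and 1")
    if speedup_enhanced <= 0.0:
        raise ValueError("speedup_enhanced must be positive")
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


def amdahl_limit(fraction_enhanced: float) -> float:
    """Upper bound on the speedup as the number of processors grows without limit."""
    if fraction_enhanced >= 1.0:
        return math.inf
    return 1.0 / (1.0 - fraction_enhanced)


if __name__ == "__main__":
    # Hypothetical processor counts, chosen to span the x-axis of the plot.
    processor_counts = (4, 64, 1024, 65536)
    for parallel in (0.50, 0.75, 0.90, 0.95):
        row = ", ".join(
            f"{n} procs: {amdahl_speedup(parallel, n):.2f}x" for n in processor_counts
        )
        print(f"{parallel:.0%} parallel -> {row} (limit {amdahl_limit(parallel):.0f}x)")
```

Even with 65536 processors, a program that is 95% parallel stays just below a 20x speedup, because the serial 5% bounds the total. This is the strong-scaling ceiling that motivates growing the problem size instead.
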
Shared Memory Multiprocessors (SMP)

Parallel hardware that presents the programmer with a single physical address space across all processors.

Uniform Memory Access (UMA, nobody uses this acronym): all memory is accessed at nearly uniform speed.

NonUniform Memory Access (NUMA, widely used): memory access speed depends on where the memory is located relative to the processor.

The shared memory programming model requires mechanisms (in the hardware) that let programs protect shared objects from simultaneous updates:

- locks and other atomic primitives
- non-blocking algorithms and atomic operations
- transactional memory (forthcoming in the Intel Haswell processor)

Locks and Other Atomic Primitives

Mutexes: a locking mechanism to synchronize access to a shared resource.

Semaphores: a signaling mechanism to control access to a shared resource.

Deadlock: a programming error in which a collection of tasks all wait for a lock or semaphore that is not available, and no task is in a position to release it.

Mutex Operation

Think of a mutex as a lock/unlock mechanism. A task locks an object, accesses it, and then unlocks the object. While different tasks can lock the same object, only the task that currently holds the lock can unlock it.

    task 1:
        loop while (!done1)
            lock (x);
            shared_variable++;
            unlock (x);
        end loop;

    task 2:
        loop while (!done2)
            lock (x);
            shared_variable--;
            unlock (x);
        end loop;

In this example, the lock variable x is used to protect access to the shared variable shared_variable.

Semaphores

Most often structured as a counting semaphore that is initialized with the number of simultaneous accesses allowed. Counting semaphores are variables that are accessed using wait (P) and signal (V).

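
As a minimal sketch of a counting semaphore, the Python code below lets at most `max_simultaneous` tasks use a resource at once. Python's `threading.Semaphore` is used here as a stand-in: `acquire()` plays the role of wait (P) and `release()` the role of signal (V). The function name `run_tasks`, the task counts, and the simulated work are assumptions made for illustration.

```python
import threading
import time


def run_tasks(num_tasks: int, max_simultaneous: int) -> int:
    """Run `num_tasks` threads that share a resource guarded by a counting
    semaphore, and return the peak number of simultaneous accesses seen."""
    slots = threading.Semaphore(max_simultaneous)  # initialized to the allowed count
    counter_lock = threading.Lock()                # mutex protecting the two counters
    active = 0
    peak = 0

    def task() -> None:
        nonlocal active, peak
        slots.acquire()                            # wait (P): blocks when no slot is free
        try:
            with counter_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)                       # simulated use of the shared resource
            with counter_lock:
                active -= 1
        finally:
            slots.release()                        # signal (V): frees a slot

    threads = [threading.Thread(target=task) for _ in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print("peak simultaneous accesses:", run_tasks(num_tasks=10, max_simultaneous=3))
```

The observed peak never exceeds the semaphore's initial value. With an initial value of 1 the semaphore serializes access, much like the `useQueue` semaphore in the producer/consumer example.
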
    producer:
        wait(emptyCount);
        wait(useQueue);
        addItemInToQueue(item);
        signal(useQueue);
        signal(fullCount);

    consumer:
        wait(fullCount);
        wait(useQueue);
        removeItemFromQueue();
        signal(useQueue);
        signal(emptyCount);

This example illustrates the classic producer/consumer problem. The tasks communicate through a queue of maximum size N. Queue overruns and underruns are not permitted. The solution uses three semaphores: emptyCount, initially set to N; fullCount, initially set to 0; and useQueue, initially set to 1.

Non-blocking Algorithms

Lock-free: guarantees system-wide progress. The system guarantees that some parallel task will make progress, but one (or more) of the tasks could conceptually starve.

Wait-free: guarantees that every operation completes in a bounded number of steps. This ensures that no task starves (a much stronger constraint than lock-free).

Transactional memory

Transactional memory allows a collection of memory operations to be grouped so that they occur as a single atomic operation. The transaction is committed only if no other operation on that memory subspace is serviced during the transaction. Thus, the operations in the collection are either all committed or none are, and when the transaction fails, the programmer must take some action to attempt the operation again.

1. Execute an instruction to denote the beginning of a transaction.
2. Execute one or more memory operations (e.g., x++; y--).
3. Execute an instruction to denote the end of the transaction.
4. Test if the transaction was committed.

Message Passing Multiprocessors

Parallel hardware where each processor has its own private address space.

- Information is shared by transmitting messages between processing nodes.
- The most common form is a Beowulf cluster or a collection (tightly coupled or loosely coupled) of computers.
- Often uses a standardized message-passing library such as MPI or PVM, frequently over an interconnect such as InfiniBand. Of course, TCP/IP and UDP can also be used.

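
The message-passing style can be sketched in a few lines of Python. This is only an emulation under stated assumptions: Python threads actually share one address space, so the sketch mimics the programming model (nodes that exchange data only through explicit messages) and not the hardware. A real cluster would more likely use a library such as MPI. The names `node` and `distributed_sum` are hypothetical.

```python
import queue
import threading


def node(rank: int, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """A 'node' that works only on data it receives in a message."""
    chunk = inbox.get()                 # receive: block until the work message arrives
    outbox.put((rank, sum(chunk)))      # send: reply with this node's partial sum


def distributed_sum(data: list, num_nodes: int) -> int:
    """Split `data` across `num_nodes` nodes and combine their partial sums."""
    results: queue.Queue = queue.Queue()
    inboxes = [queue.Queue() for _ in range(num_nodes)]
    workers = [
        threading.Thread(target=node, args=(rank, inboxes[rank], results))
        for rank in range(num_nodes)
    ]
    for w in workers:
        w.start()
    for rank, inbox in enumerate(inboxes):
        # Each node gets its own copy of a slice, standing in for private memory.
        inbox.put(list(data[rank::num_nodes]))
    # Gather exactly one reply message per node.
    total = sum(results.get()[1] for _ in range(num_nodes))
    for w in workers:
        w.join()
    return total


if __name__ == "__main__":
    print(distributed_sum(list(range(100)), num_nodes=4))
```

Every node runs the same `node` function on different data, which is also the SPMD pattern described with Flynn's classification.
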