Learning and Motivation 33 (2002) 485–509

www.academicpress.com

Between-subject PREE and within-subject reversed PREE in spaced-trial extinction with pigeons

Mauricio R. Papini,* Brian L. Thomas,

and Dawn G. McVicar

Department of Psychology, Texas Christian University, Box 298920, Fort Worth,

TX 76129, USA

Received 13 December 2001; revised 19 March 2002

Abstract

Three experiments explored the partial reinforcement extinction effect (PREE;

greater resistance to extinction after partial, rather than continuous, reinforcement

training), in a spaced-trial situation with pigeons. Experiments 1 and 2 report conventional PREEs with 24-h intertrial intervals and between-subject designs. The corresponding outcome (food reinforcement or nonreinforcement) was delivered after

satisfaction of a fixed-ratio 10 (Experiment 1) or a fixed-ratio 1 (Experiment 2). Experiment 3 reports a reversed PREE in a within-subject design with a fixed-ratio 10

requirement. Extinction occurred faster for the response paired with 50% partial

reinforcement than for the response paired with continuous reinforcement. A third

response paired with a small reinforcer (1 pellet/trial) in 100% of the trials extinguished faster than a response paired with a large reinforcer (15 pellets/trial). These

results are discussed in the context of spaced-trial instrumental performance (key

pecking and running), in pigeons.

© 2002 Elsevier Science (USA). All rights reserved.

* Corresponding author. Fax: 817-257-7681.

E-mail address: m.papini@tcu.edu (M.R. Papini).

0023-9690/02/$ - see front matter © 2002 Elsevier Science (USA). All rights reserved.

PII: S0023-9690(02)00006-1


M.R. Papini et al. / Learning and Motivation 33 (2002) 485–509

Responses reinforced only in a random subset of acquisition trials

typically display greater resistance in extinction, compared to continuously

reinforced responses. Similarly, Pavlovian procedures in which a conditioned stimulus is partially reinforced may result in a greater behavioral level during extinction, compared to a stimulus reinforced in every trial. This

phenomenon, called the partial reinforcement extinction effect (PREE), has

been generally studied in between-subject designs and under relatively

massed training conditions (e.g., intertrial intervals, ITI, ranging from a

few seconds to a few minutes). Under such conditions, the PREE is a ubiquitous learning phenomenon both across species and training procedures

(for a review, see Amsel, 1992). A different picture emerges when these procedural features are altered to within-subject comparisons between partially

and continuously reinforced responses or stimuli, or to spaced training conditions with ITIs in the order of hours.

Within-subject experiments have demonstrated a range of effects. For example, whereas Rescorla (1999) found a within-subject PREE (wPREE) in

autoshaping with pigeons, others reported nonsignificant effects in autoshaping with quail (Crawford, Steirn, & Pavlik, 1985), salivary conditioning with

dogs (Sadler, 1968), runway performance in rats (Amsel, Rashotte, & MacKinnon, 1966), and goal tracking with rats (Pearce, Redhead, & Aydin, 1997).

Still others reported evidence of a reversed PREE, that is, greater persistence

after training with continuous reinforcement, rather than partial reinforcement. Reversed PREEs were found in experiments with rats involving running

and lever pressing responses (Pavlik & Carlton, 1965; Pavlik, Carlton, &

Hughes, 1965), and in experiments with human subjects (Svartdal, 2000). A

within-subject experiment reported by Mellgren and Seybert (1973) suggests

one factor that might account for this diversity of results, namely, the sequence of reinforced (R) and nonreinforced (N) trials. Each rat received training in two different runways, one paired with 50% partial reinforcement and

the other with continuous reinforcement. Mellgren and Seybert found the following pattern of results: A PREE occurred when N trials were followed by R

trials in the partial runway; a reversed PREE emerged when N trials were followed by R trials in the continuous runway; and nondifferential extinction followed acquisition training in which the two types of NR transitions occurred equally often.

The between-subject PREE (bPREE) is also affected by the spacing of the

trials. Experiments with nonmammalian species that demonstrate a conventional bPREE under massed conditions of training have consistently found

a reversed bPREE under spaced training conditions. For example, toads

(Bufo arenarum) trained in a runway situation to collect water in the goal

box show greater persistence in extinction after partial reinforcement when

trials are separated by 15-s ITIs, but not when trials are separated by 5-min

ITIs (Muzio, Segura, & Papini, 1992). Moreover, lengthening the ITI to 24 h

produces clear evidence of a reversed bPREE (Muzio et al., 1992, 1994).

Similar reversed bPREEs have been reported in fish (Boitano & Foskett,


1968; Gonzalez & Bitterman, 1967; Schutz & Bitterman, 1969), turtles

(Gonzalez & Bitterman, 1962; Pert & Bitterman, 1970), and iguanas (Graf,

1972), all trained under spaced-trial conditions.

In the experiments reported in the present article, pigeons received training under conditions of spaced practice, with trials separated by ITIs in the

order of hours, during which the animal remained in its home cage. In addition, both between-subject (Experiments 1 and 2) and within-subject designs (Experiment 3) were used. Our interest in the PREE is primarily

comparative; the spaced-trial bPREE has been reported in several experiments with mammals (e.g., Weinstock, 1954), but not in experiments involving fish, amphibians, or reptiles (see references above). Our presumption is

that these species differences in spaced-trial learning phenomena are markers

of a broad phylogenetic divergence in brain mechanisms of learning among

the vertebrates (Papini, 2002). The goal of the present experiments is to extend the study of the spaced-trial PREE to an avian species. Pigeons are ideally suited for our purpose because there are well-developed training

techniques that can be readily applied to the present requirements. We have

developed a key-pecking, one-trial-per-session situation that has been used

to study the effects of reward magnitude on learned performance (Papini,

1997; Papini & Thomas, 1997); here we extend that procedure to a partial

reinforcement situation, for which there is limited information. Only one

demonstration of the spaced-trial bPREE in pigeons has been published

and it involves training in a runway situation, 24 h ITI, and food reinforcement as the main training parameters (Roberts, Bullock, & Bitterman,

1963). Thus the present experiments extend the study reported by Roberts

et al. to a key-pecking training situation and to a within-subject design.

We report the results of each experiment, leaving the discussion of their theoretical relevance for the General discussion section.

Experiment 1

The main goal of this experiment was to determine the effect of 50% partial

and 100% continuous reinforcement training on the extinction of key-pecking instrumental performance under spaced conditions of training. The basic

training procedure involves a single trial per day in which a pigeon is exposed

to a key light and required to peck 10 times to obtain an outcome. This outcome was either fifteen 45-mg pigeon pellets in reinforced trials, or nothing in

nonreinforced trials. An analogous experiment with rats as subjects demonstrated a significant bPREE in lever-pressing instrumental performance

(McNaughton, 1984). Furthermore, that experiment showed that treatment

with the anxiolytic chlordiazepoxide eliminated the lever-pressing bPREE,

just as it also eliminated a bPREE based on runway performance, suggesting

common underlying mechanisms across these two training situations.


The present experiment sought to determine the presence of a second effect that has been reported in experiments involving partial reinforcement

under more massed conditions of training. Responses acquired under partial

reinforcement training are usually performed at a higher level than responses acquired under continuous reinforcement during the acquisition

phase (Goodrich, 1959). This phenomenon, labeled the partial reinforcement acquisition effect (PRAE), has been described in rats and under relatively massed conditions of training, but its occurrence in other species

and under spaced training conditions has not yet been reported.

Method

Subjects

Twelve pigeons purchased from Ruthardt Pet and Feed, Fort Worth, all

sexually mature, served as subjects. Pigeons were deprived of food to 80–85%

of their ad libitum weights. Post-session meals (at least 20 min after the

session) were adjusted to maintain constant target weights. Water was continuously available in the cage. The vivarium was continuously illuminated.

These pigeons had extensive prior experience in analogous experiments

manipulating reinforcer magnitude (Papini, 1997, Experiments 1 and 3; Papini & Thomas, 1997). Pigeons that had received continuous reinforcement

training with large rewards were assigned to the same condition in the present experiment (Group C). Pigeons that had received training with continuous reinforcement and small rewards were assigned to partial reinforcement

in the present experiment (Group P). The decision to assign pigeons in

agreement with their prior training history, rather than using random assignment or matching for training history, was based on two considerations.

First, random or matched assignment would result in a mixture of reinforcement histories in each group that could potentially increase within-subject

variance and consequently reduce power. Second, since the pigeons assigned

to partial reinforcement had exhibited faster extinction in the previous experiments than those assigned to continuous reinforcement, this procedure

is conservative for a demonstration of the bPREE. A demonstration of

the bPREE would require that pigeons that exhibited fast extinction in previous experiments exhibit slow extinction in the present experiment, in both

cases relative to the same comparison group (Group C).

Apparatus

Three standard Skinner boxes, each enclosed in a sound-attenuating

chamber, were used for training. Each chamber was equipped with a fan

to provide for air circulation and masking background noise. Each Skinner

box measured 32.2 × 29.9 × 32.2 cm (width × length × height). The front

wall, back wall, and ceiling were made of Plexiglas, whereas the two

lateral walls were made of aluminum. One of the lateral walls contained


the following elements: A lamp (General Electric 1820), providing diffuse illumination from the upper left corner, and the response key (1.8 cm in diameter, 18.5 cm above the floor, and in the center of the wall). There was also a

feeder cup located 3 cm above the floor and in the center of the wall; this cup

was made of opaque Plexiglas and measured 4.5 × 5.5 × 4 cm. Noyes precision pellets (pigeon formula, 45 mg) were automatically delivered into this

cup. Visual stimuli could be presented on the response key by illuminating

it from behind. A computer located in an adjacent room controlled all the

stimuli and recorded the key-pecking responses.

Procedure

Experimental sessions were conducted between 08:00 and 12:00 h. Pigeons were extensively pretrained. First, all pigeons received 2 daily sessions

of exposure to the conditioning box, each lasting 20 min; no stimuli were

presented during these sessions. Second, birds were exposed to a mixed Pavlovian-instrumental schedule (20 trials/session). In the first session of this series, a small amount of mixed grain (about 2 g) was freely available in the

food cup; no grain was provided thereafter. A single key-peck at a white

key light or 6 s, whichever occurred first, resulted in the termination of

the light and the immediate delivery of a single pellet. Each pigeon was required to respond on at least 80% of the trials on two of three consecutive

days before it was shifted to the next phase. Third, after achieving this criterion, purely instrumental pretraining was initiated in which reinforcement

occurred only if the pigeon responded during the 15-s long white key light.

During these sessions, the fixed-ratio requirement was gradually increased

from 1 to 10 responses, in steps of one key-peck. Before each transition to

the next value, pigeons had to satisfy the same criterion described above.

A transition from fixed-ratio 10 to a single trial per day (acquisition phase,

see below) required 80% or better response level in three of five consecutive

sessions. This gradual procedure was adopted to minimize behavioral disruptions and to ensure the occurrence of key pecking after the shift from

20 trials/session to 1 trial/session. This pretraining procedure was used in

previous experiments (Papini, 1997; Papini & Thomas, 1997).

One trial/day was administered to each animal during acquisition. Pigeons assigned to Group C (n = 6) received 15 pellets at the end of each

trial, delivered at a rate of 1 pellet every 0.2 s, provided they had emitted

10 responses. For the pigeons assigned to Group P (n = 6), half of the daily

trials ended in reinforcement (15 pellets delivered as described above),

whereas the rest ended in nonreinforcement. The sequence of R and N trials

was based on Gellermann (1933): RRNN RNRN NRRR NNRN NRNR

RNRN RRNN RRNR NNRR NNRR NRNN NRRN RRNN. Thus the

labels “continuous” and “partial” apply to the outcome of emitting 10 responses, and not to the outcome of each individual response (because

10 key pecks were required on each trial), or to the stimulus-reinforcer


contingency (because failure to complete the fixed-ratio 10 ended the trial in

nonreinforcement). In this acquisition phase, the stimulus was changed from

white (pretraining) to a black plus sign on a white background. A trial

started with onset of the house light, followed by an initial interval averaging 60 s (range: 30–90 s). At the end of this interval, the plus stimulus was

projected on the key; animals were allowed a maximum of 60 s to initiate responding and an additional 60 s to complete the fixed-ratio 10. A computer

located in an adjacent room recorded the latency (0.01 s units), defined as

the time from stimulus onset to the tenth response. Failure to complete

the fixed-ratio 10 within 60 s ended the trial in nonreinforcement. A maximum latency of 120 s was assigned on incomplete trials. The session ended

after a variable interval similar to the initial one. At the end of this interval,

the house light was turned off and the pigeon was returned to its home cage.

There were 52 acquisition trials followed by 88 extinction trials; pigeons received one trial per day. Extinction trials were identical to the nonreinforced

trials of Group P.
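The Group P schedule listed above can be checked for its nominal properties with a few lines of code. The following is a minimal sketch, not the authors' software; the function name `summarize_schedule` is hypothetical, and the only input is the R/N sequence as printed in the Procedure.

```python
# Group P acquisition schedule as printed in the Procedure
# (R = reinforced trial, N = nonreinforced trial).
SCHEDULE = "RRNN RNRN NRRR NNRN NRNR RNRN RRNN RRNR NNRR NNRR NRNN NRRN RRNN"


def summarize_schedule(schedule: str) -> dict:
    """Count trials, reinforced trials, and the longest run of identical outcomes."""
    trials = schedule.replace(" ", "")
    longest = 0
    current = 0
    previous = None
    for outcome in trials:
        # Extend the current run if the outcome repeats, otherwise start a new run.
        current = current + 1 if outcome == previous else 1
        longest = max(longest, current)
        previous = outcome
    return {
        "n_trials": len(trials),
        "n_reinforced": trials.count("R"),
        "proportion_reinforced": trials.count("R") / len(trials),
        "longest_run": longest,
    }


summary = summarize_schedule(SCHEDULE)
print(summary)
```

The trial count and the proportion of R trials returned by this check can be compared directly with the 52 acquisition trials and the 50% schedule stated in the text.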

Latency scores obtained in each trial and measured in 0.01 s units were

transformed to the natural logarithm (ln), to improve normality and allow

for the use of parametric statistics. A standard, mixed-model analysis of variance was used to test the effects of reinforcement schedule on the latencies.

The alpha value was set to 0.05 in all the analyses reported in this paper.

Nonparametric tests were also used when extreme numbers skewed data

distributions.
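The scoring rule described above (latency to the tenth response, a 120-s ceiling on incomplete trials, and a natural-log transformation) can be sketched as follows. This is an illustrative sketch under one assumption that the text does not settle: the logarithm is taken of the latency expressed as a count of 0.01-s units. The function name `score_latency` is hypothetical.

```python
import math

MAX_LATENCY_S = 120.0  # latency assigned on incomplete trials, per the Procedure


def score_latency(latency_s: float, completed: bool) -> float:
    """Return the natural log of a trial latency expressed in 0.01-s units.

    Assumption: the log is applied to the count of 0.01-s units,
    not to the latency in seconds.
    """
    seconds = latency_s if completed else MAX_LATENCY_S
    units = round(seconds * 100)  # convert seconds to 0.01-s units
    if units <= 0:
        raise ValueError("latency must be positive to take a logarithm")
    return math.log(units)


# A completed trial with a 12.34-s latency, and an incomplete trial.
print(score_latency(12.34, completed=True))
print(score_latency(0.0, completed=False))
```

Taking the log in seconds instead would shift every score by the constant ln(100), which leaves differences between groups and trials unchanged.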

Results

Given the criteria used to increase the fixed-ratio value, pretraining required a minimum of 24 sessions. Eleven pigeons finished pretraining in

24 sessions, while the remaining pigeon, from Group P, required a total

of 28 sessions. Although pretraining was extensive, it was not differentially

so across groups. Moreover, none of the pigeons exhibited behavioral disruption during pretraining sessions.

There was no evidence of the PRAE in the present experiment. Key-pecking performance during acquisition was similar across groups. The change

in stimulus from pretraining (white key-light) to acquisition (plus sign) resulted in a small degree of disruption in key pecking during the early acquisition trials. None of the effects of a Group × Trial analysis of variance was

significant, Fs < 2.97. An analysis of the last 8 acquisition trials also indicated a nonsignificant group effect, F(1, 11) = 1.11; thus, asymptotic performance was very similar across groups.

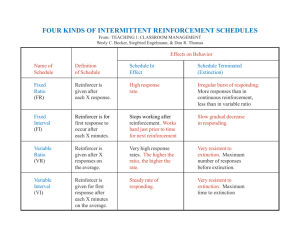

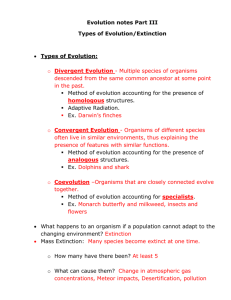

As shown in Fig. 1, initial extinction performance was extremely stable

and similar in both groups. An analysis of the initial 8 extinction trials indicated that groups were statistically indistinguishable, F < 1. Eventually,

the pigeons in Group C extinguished faster than those in Group P. A

Group × Trial analysis (computed on a trial-by-trial basis) detected this


Fig. 1. Extinction of key-pecking performance in groups of pigeons trained with either continuous (C) or 50% partial (P) reinforcement, at a rate of a single trial per day. A fixed-ratio 10

requirement was imposed in each trial. Data from Experiment 1.

bPREE in terms of a significant interaction, F(87, 870) = 1.33. There was

also a significant extinction effect, F(87, 870) = 38.65, but the difference

between groups was not significant, F(1, 10) = 3.51. The interpretation of

this bPREE is not affected by initial group differences in performance level,

as shown by nonsignificant group effects for the final acquisition trials and

initial extinction trials.

The instrumental procedure used in this experiment implied that an animal nominally subjected to 100% continuous or 50% partial reinforcement

could receive a lower frequency of reinforcement (in the sense of the stimulus-reinforcer contingency), if it failed to complete the fixed-ratio 10 on a

substantial number of trials. Disruption was assessed in terms of the number of trials on which pigeons from each group failed to complete the

fixed-ratio 10 during the time allowed, during both acquisition and extinction sessions. The degree of disruption in acquisition was relatively low,

averaging 1.8 trials in Group C and 0.2 trials in Group P; with a total

of 52 acquisition trials, pigeons completed more than 96% of all acquisition trials. In extinction, pigeons showed disruption in an average of 49.7

and 26.3 trials in Groups C and P, respectively (out of a total of 88 trials).

Because 8 of the 12 animals showed no disruption in acquisition, all disruption data were analyzed using nonparametric tests. Mann–Whitney

tests indicated that disruption during acquisition was not significantly different across groups, U(6, 6) = 11, and there was a tendency toward a

greater disruption in Group C than in Group P during extinction, significant only at a one-tail test level, U(6, 6) = 5.5.
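The disruption figures reported above can be restated as completion rates. The sketch below uses only the group means and trial totals given in the text; because individual data are not reported here, the rates are mean-based approximations, and the names `MEAN_INCOMPLETE` and `completion_rate` are hypothetical.

```python
# Mean number of incomplete trials per pigeon, as reported in the text.
MEAN_INCOMPLETE = {
    "acquisition": {"C": 1.8, "P": 0.2},
    "extinction": {"C": 49.7, "P": 26.3},
}
TOTAL_TRIALS = {"acquisition": 52, "extinction": 88}


def completion_rate(phase: str, group: str) -> float:
    """Mean proportion of trials on which the fixed-ratio 10 was completed."""
    return 1.0 - MEAN_INCOMPLETE[phase][group] / TOTAL_TRIALS[phase]


for phase in TOTAL_TRIALS:
    for group in ("C", "P"):
        print(f"{phase:12s} Group {group}: {completion_rate(phase, group):.1%}")
```

For acquisition, both group rates exceed the 96% figure cited in the text; for extinction, the rate is lower in Group C than in Group P, in line with the reported tendency.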


Experiment 2

There are two potential problems with the results of Experiment 1. First,

since 10 key pecks were required to deliver the reinforcer, it may be argued

that the pigeons from both groups were under a partial reinforcement regime. The main difference may have been that whereas the pigeons in Group

C received a reinforcer for every 10 responses, those in Group P had to emit

20 responses, on average, to be reinforced. To clarify this issue, two new

groups of animals were run under the same conditions as those in the previous experiment, except that a single peck at the key was required for reinforcement (i.e., a fixed-ratio 1 requirement). Second, in Experiment 1 and

for reasons that were explained previously (see Experiment 1, Subjects),

group assignment was consistent with the previous experience of the pigeons

(e.g., prior large reward was assigned to large reward; prior small reward

was assigned to partial reward). The pigeons used in the present experiment

also had previous experience (see Stout, Muzio, Boughner, & Papini, 2002,

Experiments 4 and 5), in a complex training situation. In those experiments,

responses to two stimuli (green light and plus sign) were paired with reinforcement and nonreinforcement in either a discrimination (i.e., A+/B−)

or a pseudodiscrimination (i.e., A±/B±) condition, across groups. These trials were followed, after a brief interval, by a white key light (to be used in

the present experiment), always reinforced on a variable-interval 20-s schedule (i.e., C+). Two precautions were taken with these subjects. First,

pigeons were matched for prior experience (discrimination vs. pseudodiscrimination training) within each of the conditions used in the present

experiment (Groups C and P). Second, the white stimulus, which had been

subject to the same treatment in both groups of the previous experiments,

served as the discriminative stimulus in the present experiment.

Method

Subjects and apparatus

Twelve pigeons were utilized in this experiment, all sexually mature, obtained from the same source, and maintained under the same conditions as

in Experiment 1. The same Skinner boxes described in Experiment 1 were

also used in the present experiment.

Procedure

Pairs of pigeons matched for prior experience were randomly assigned to

the two conditions of the present experiment: Groups C and P (n = 6).

Thus, three animals in each of the present groups had previously received

discrimination training and the rest had received pseudodiscrimination

training. Each pigeon received one trial per day, between 08:00 and

12:00 h. In each trial, pigeons were placed in the conditioning box, the house


light was turned on, and after an average period of 60 s (range: 30–90 s), the

white light was presented on the response key. A single peck at the white key

turned the white light off and administered 15 pellets (in reinforced trials).

Pellets were administered at a rate of 1 every 0.2 s. This reinforcement

was followed by an interval averaging 60 s (range: 30–90 s), at the end of

which the house light was turned off and the pigeon was returned to its cage.

During the entire experiment, pigeons received a single trial per day. In trials 1–4, all pigeons were immediately reinforced for responding within 60 s

of key-light onset. Failure to respond resulted in the automatic delivery of

the reinforcer after 60 s. Thus, these sessions involved a mixture of Pavlovian and instrumental contingencies designed to recover key-pecking behavior. Starting in session 5, pigeons received the appropriate training under

purely instrumental conditions. Pigeons in Group C received a total of 56

trials in which a single key-peck response resulted in the delivery of the reinforcer. Pigeons in Group P received 56 trials in which R and N trials were

intermixed according to the following schedule based on Gellermann (1933):

RNRN RRNN NRRN RNNR RRNN RNRN NRRN NRRN RNNR

NNRR RNNR RRNR NNRN NRRR. Thus, there were a total of 60 acquisition trials. Extinction started on trial 61 and continued for 48 trials. In

nonreinforced trials (e.g., in N trials for Group P and extinction trials for

both groups), a peck at the white key or 60 s, whichever occurred first,

turned off the white light and ended the trial. The primary dependent measure was the latency to respond, the time from key-light onset to the detection of a peck (measured in 0.01 s units). These scores were treated as

described in Experiment 1.

Results

One pigeon died in the course of acquisition training and two other pigeons failed to reacquire the response. Although it would have been possible

to retrain the two pigeons that failed to respond, it was decided to discard

them from the experiment to keep previous experience as constant as

possible across groups. As a result, the 9 pigeons that remained in this

experiment, 4 in Group C and 5 in Group P, all showed recovery of the

key-pecking response when transferred to a one-trial-per-day regime without any explicit pretraining.

The key-pecking performance of both groups during acquisition was similar, thus yielding no evidence of the PRAE. A Group × Trial analysis indicated a significant acquisition effect, F(59, 413) = 1.96; however, the group

effect and the interaction effect were both nonsignificant, Fs < 1.33. Furthermore, an analysis of the final 8 trials of acquisition indicated no difference

among groups and no Group × Trial interaction, Fs < 1.16, although the

latencies were still decreasing significantly, F(7, 49) = 3.34.

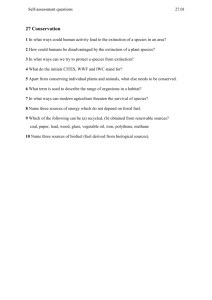

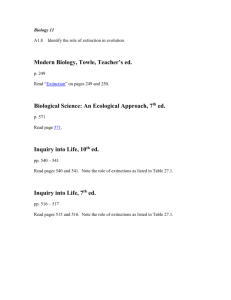

The results of the extinction phase are presented in Fig. 2 for each group

and as a function of 4-trial blocks. The latency scores were lower than in the


Fig. 2. Extinction of key-pecking performance in groups of pigeons trained with either continuous (C) or 50% partial (P) reinforcement, at a rate of a single trial per day. A fixed-ratio 1

requirement was imposed in each trial. Data from Experiment 2.

previous experiment simply because it takes less time to emit a single response than the time it takes to complete 10 responses. Two features of these

results are worth emphasizing. First and as in the previous experiment, the

initial extinction performance was relatively stable, except for some initial

fluctuation in Group C. For approximately 20 trials, the latencies in both

groups remained relatively low. An analysis of the initial 8 trials indicated

nonsignificant group differences, F < 1. Second and also as in the previous

experiment, there was clear evidence of a bPREE. Indeed, there was

little change in performance during the 48 extinction trials in Group P.

A trial-by-trial analysis of extinction data detected the bPREE in terms of

a Group × Trial interaction, F(47, 329) = 2.61. Also significant was the extinction effect, F(47, 329) = 4.46, but not the difference between the groups,

F(1, 7) = 2.82. Because terminal acquisition performance and early extinction performance were not different across groups, this bPREE is not

confounded with initial differences in latency.
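The degrees of freedom in the extinction analysis follow from the design: 9 pigeons, 2 groups, and 48 trials. The sketch below assumes a standard mixed-model analysis with one between-subject factor and one repeated factor, without any sphericity correction; `mixed_anova_df` is a hypothetical helper, not a library function.

```python
def mixed_anova_df(n_subjects: int, n_groups: int, n_trials: int) -> dict:
    """Degrees of freedom (effect, error) for a Group x Trial mixed design."""
    between_error = n_subjects - n_groups   # subjects within groups
    trial = n_trials - 1
    within_error = trial * between_error    # Trial x subjects within groups
    return {
        "group": (n_groups - 1, between_error),
        "trial": (trial, within_error),
        "group_x_trial": ((n_groups - 1) * trial, within_error),
    }


# Experiment 2: 9 pigeons in 2 groups.
print(mixed_anova_df(9, 2, 48))  # extinction, 48 trials
print(mixed_anova_df(9, 2, 60))  # acquisition, 60 trials
print(mixed_anova_df(9, 2, 8))   # final 8 acquisition trials
```

With these inputs the function reproduces the degrees of freedom reported for Experiment 2.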

Only three of the nine pigeons failed to respond in at least one of the

acquisition trials, one in Group C and two in Group P. Although the average

number of disrupted trials across individual pigeons for Groups C and P

(0.75 and 4.40 trials, respectively) seems different, the difference was not

statistically reliable, U(4, 5) = 7.5. This group tendency reversed during

extinction, with Group C exhibiting greater average disruption (7.50 trials),


than Group P (0.25 trials); however, this difference also failed to achieve a

significant level, U(4, 5) = 3. Thus the bPREE found in the present experiment was not related to differential disruption in performance across groups.

Experiment 3

An experiment by Mellgren and Seybert (1973) provides information on

the effects of partial and continuous reinforcement on extinction in a within-subject design and spaced conditions of training (i.e., one trial per day). Rats

were trained in two different runways, one associated with partial reinforcement and the other with continuous reinforcement. Extinction was similar in

the partial and continuous runways when the number of NR trial transitions

was matched across runways. However, in most within-subject experiments,

ITI values ranged from zero, as in free-operant situations (Pavlik & Carlton,

1965), up to a few minutes (Pearce et al., 1997). Additionally, in all of these

experiments the animals remained in the conditioning chamber during the

ITI. Under relatively massed training conditions, the outcome of one trial

may leave a sensory or memory trace capable of remaining active until

the next trial (Sheffield, 1949). As a result of such carry-over effect, responding in any given trial may fall under the control of both current external

stimuli as well as carry-over stimuli (whether sensory or mnemonic). Additionally, the massing of trials may promote a comparison among stimuli

paired with different frequencies of reinforcement. Such comparisons may

induce contrast effects, affecting response rates in ways that may be difficult

to anticipate (Svartdal, 2000). A unique advantage of spaced-trial procedures is that they reduce or eliminate these problems, promoting a relatively clean control of behavior by the associative value acquired by the

discriminative stimuli.

Furthermore, Papini (1997) reported evidence of a reverse magnitude of

reinforcement extinction effect (MREE) in both between- and within-subject

experiments with pigeons and under spaced-trial conditions. The MREE is

defined as greater persistence in extinction after acquisition with a small,

rather than large, reinforcer magnitude (Hulse, 1958; Wagner, 1961). Mellgren and Dyck (1974) reported a within-subject experiment with rats in

which two discriminable runways were paired with large and small rewards

under massed-training conditions (i.e., 15-s ITIs). Rats that had received an

equal number of transitions from large to small rewards and from small to

large rewards exhibited the MREE. However, there seem to be no reports of

MREE studies based on spaced-training conditions and within-subject designs in rats or any species. A tentative summary of available evidence indicates that partial and small reinforcers generate relatively greater persistence

in extinction under spaced-trial conditions in rats, but their effects are

dissociated in spaced-trial extinction in pigeons. In fact, the spaced-trial


PREE and MREE co-vary in any given species in which they have been

studied (see Papini, 1997; Papini, Muzio, & Segura, 1995), except for pigeons. In pigeons, the spaced-trial bPREE has now been demonstrated in

runway (Roberts et al., 1963; Thomas, 2001), and Skinner box situations

(Experiments 1 and 2), whereas the spaced-trial reversed MREE has been

found in both runway (Thomas, 2001), and Skinner box situations (Papini,

1997; Papini & Thomas, 1997).

The present experiment was designed to provide further information on

the dissociation of spaced-trial PREE and MREE in pigeons by testing both

effects in a within-subject design. Each pigeon received exposure to three discriminative stimuli, one continuously reinforced with a large reward, a second paired with 50% reinforcement of the same large reward, and a third

continuously reinforced with a small reward. Eventually, all three stimuli

were shifted to extinction.

Method

Subjects and apparatus

Nine adult pigeons, all experimentally naive, served as subjects. They

were obtained and maintained as described in Experiment 1. The same Skinner boxes described above were also used in the present experiment.

Procedure

Experimental sessions were conducted between 08:00 and 12:00 h. Pretraining was exactly as described in Experiment 1. Pigeons were gradually

shaped to peck at the white key light for a single pellet of food, and daily

sessions contained 20 trials each. The response requirement was gradually

increased up to a fixed-ratio 10 according to the same behavioral criteria described in Experiment 1. Subsequently, pigeons were shifted to the acquisition phase.

During acquisition, animals received 1 trial per session and 3 sessions per

day. Each trial was exactly as described in Experiment 1. At the end of each

trial, the animal was returned to its cage where it remained during the ITI.

Within any given day, the ITI was 1–1.5 h long; between days, the ITI was

between 18- and 21-h long. Three different stimuli were presented to each

pigeon, although only one in any particular trial. The stimuli were a white

plus sign on a black background, a red key, and a green key. For any given

animal, responding 10 times to each stimulus produced one of the following

outcomes: delivery of 15 pellets in every trial (the continuous, large-reward

stimulus, or CL), delivery of 15 pellets or nothing on a random 50% basis

(the partial, large-reward stimulus, or PL), or delivery of 1 pellet in every

trial (the continuous, small-reward stimulus, or CS). The stimulus-outcome

relationship was counterbalanced across animals, such that each stimulus

was paired to each of the three outcomes in an equal number of animals.

Acquisition trials were scheduled in 3-day blocks. In each 3-day block, a given animal received 3 trials of each type (CL, PL, and CS), distributed randomly except that no more than 2 trials of the same type were administered

in any given day. Three sequences were administered, each to 3 pigeons:

PLP SLL SSP, LSL PSS PPL, and SPS LPP LLS (where P is the partial

stimulus, which was R and N on a 50% schedule; L is the CL stimulus;

and S is the CS stimulus). This procedure ensured that each stimulus was

presented the same number of times in each possible position within a

day for the group as a whole.
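
The counterbalancing property described above can be checked mechanically. The following sketch is not part of the original procedure; it simply encodes the three published sequences and tallies how often each trial type occupies each within-day position. The function and variable names are illustrative assumptions.

```python
from collections import Counter

# The three 9-trial sequences reported in the text (3 trials/day over a 3-day block).
# P = partial-large (PL), L = continuous-large (CL), S = continuous-small (CS).
SEQUENCES = ["PLP SLL SSP", "LSL PSS PPL", "SPS LPP LLS"]


def position_counts(sequences):
    """Count how often each trial type occurs in each within-day position."""
    counts = {pos: Counter() for pos in range(3)}
    for seq in sequences:
        for day in seq.split():
            for pos, trial_type in enumerate(day):
                counts[pos][trial_type] += 1
    return counts


def max_same_type_per_day(sequences):
    """Largest number of same-type trials scheduled within any single day."""
    return max(max(Counter(day).values()) for seq in sequences for day in seq.split())


counts = position_counts(SEQUENCES)
for pos in range(3):
    print(f"position {pos + 1}: {dict(counts[pos])}")
print("max same-type trials per day:", max_same_type_per_day(SEQUENCES))
```

Across the three sequences, each trial type falls three times in each of the three daily positions, and no day contains more than two trials of the same type.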

There were 48 acquisition trials of each type, followed by 48 extinction

trials of each type. A total of 288 trials were administered in the entire experiment. Extinction trials were exactly the same as acquisition trials, except

that no food was delivered. No less than 1 h after the end of the third trial, in

any given day, pigeons received sufficient supplementary food to maintain

their body weights at 80–85% of their free-food weight. The latency to complete the fixed-ratio 10 requirement (time from the onset of the stimulus until the emission of the 10th key-peck response, in 0.01 s units) was the

primary dependent measure. Scores were treated as described in Experiment

1. For consistency with the previous experiments, disruption data were

analyzed with nonparametric techniques.

Results

An average of 33.2 (range 29–35) sessions of pretraining were needed to

shape the fixed-ratio 10 requirement. None of the pigeons exhibited any behavioral disruption during pretraining.

Acquisition performance was very similar across trial types. A Trial Type

(CL, PL, CS), by Trial analysis of variance (both as repeated-measure factors), indicated only a significant acquisition effect, F(47, 376) = 3.27. The

difference across trial types and the interaction effect were nonsignificant,

Fs < 1.04. The same results were obtained in a pairwise comparison between

CL and PL trials (partial reinforcement), and between CL and CS trials

(magnitude of reinforcement). The acquisition effect was significant in both

of these analyses, Fs(1, 8) > 2.96, but none of them detected a significant trial-type effect, Fs < 1, or interaction effect, Fs(47, 376) < 1.15. Performance

during the asymptotic period was also very similar across trial types. An

analysis of the last 8 acquisition trials indicated nonsignificant differences

between CL and PL trials, F < 1, and between CL and CS trials, F < 1.

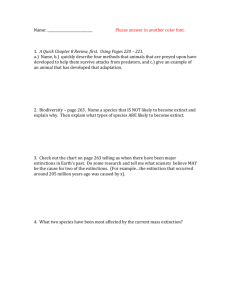

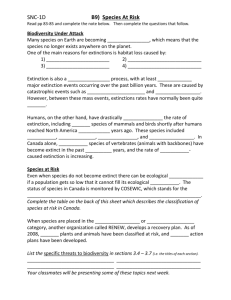

The extinction performance of these pigeons in the three types of trials is

shown in Fig. 3. Behavioral changes start comparatively earlier than in the

previous experiments, suggesting a substantial generalization of extinction's

decremental effects across stimuli. Nonetheless, an analysis of the initial 8

trials of extinction shows nonsignificant differences in behavior between

CL and PL trials, F(1, 8) = 3.47, and between CL and CS trials, F < 1.

Fig. 3. Extinction of key-pecking performance in three different discriminative stimuli associated with continuous-large reward (CL), 50% partial-large reward (PL), and continuous-small reward (CS). Training was administered at a rate of 3 trials per day with a minimum ITI of one hour. Animals remained in their cages during the ITI. A fixed-ratio 10 requirement was imposed in each trial. Data from Experiment 3.

Thus the performance across trial types in both late acquisition and early

extinction was statistically indistinguishable, a fact that simplifies the interpretation of extinction data. Overall, Fig. 3 suggests that the extinction performance in CL trials was consistently below that of either PL (reversed

wPREE), or CS trials (reversed wMREE). An overall analysis indicated

significant differences across trial types, F(2, 16) = 5.76, and trials,

F(47, 376) = 27.54, but a nonsignificant interaction, F(47, 376) = 1.11.

Two pairwise comparisons are relevant in this experiment. First, a comparison of CL and PL trials indicated significantly lower extinction latencies in

the continuous, rather than partial, reinforcement stimulus, F(1, 8) = 6.88,

thus demonstrating a reversed wPREE. Second, a similar comparison

between the CL and CS stimuli indicated significantly lower extinction

latencies after training with large, rather than small, reinforcement,

F(1, 8) = 10.79, revealing a reversed wMREE. In both of these analyses

there were significant extinction effects, Fs(47, 376) > 20.45, but nonsignificant interaction effects, Fs(37, 376) < 1.26.

None of the nine pigeons exhibited disruption of key-pecking

performance in any of the 144 acquisition trials. During extinction, pigeons

completed the fixed-ratio 10 requirement in an average of 30.7 CL, 22.9 PL,

and 22.8 CS trials. Wilcoxon tests (two-tailed) demonstrated that pigeons

completed a significantly larger number of CL trials than both PL trials,

T(9) = 0, and CS trials, T(9) = 1. Thus these results demonstrate the presence of reversed wPREE and wMREE with disruption as the dependent

measure.
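
With nine pigeons, the reported T values can be related to exact two-tailed probabilities by enumeration. The sketch below is only an illustration under stated assumptions: that T is the smaller sum of signed ranks, that all nine difference scores were nonzero, and that there were no tied ranks. None of these details is given in the text, and the function name is hypothetical.

```python
from itertools import product


def wilcoxon_exact_p(t_stat, n):
    """Exact two-tailed p for a Wilcoxon signed-rank T (smaller rank sum).

    Assumes n nonzero, untied differences, so ranks are 1..n and each
    rank is equally likely to carry either sign under the null hypothesis.
    """
    ranks = range(1, n + 1)
    # Count sign assignments whose positive-rank sum is at most t_stat.
    hits = sum(
        1
        for signs in product((0, 1), repeat=n)
        if sum(r for r, s in zip(ranks, signs) if s) <= t_stat
    )
    # Double the one-tailed probability, capped at 1.
    return min(1.0, 2 * hits / 2 ** n)


p_pl = wilcoxon_exact_p(0, 9)  # CL versus PL, T(9) = 0
p_cs = wilcoxon_exact_p(1, 9)  # CL versus CS, T(9) = 1
print(p_pl, p_cs)
```

Under these assumptions the two comparisons correspond to probabilities of 2/512 and 4/512, respectively, both below .01.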

General discussion

Two main findings stem from the present experiments. First, Experiments

1 and 2 provide an original demonstration of the spaced-trial bPREE in a

key-pecking procedure with pigeons. Together with an analogous runway

experiment reported by Roberts et al. (1963) and with additional experiments from our lab (Thomas, 2001), they constitute the only evidence available of the spaced-trial bPREE in any nonmammalian species; this result is

thus particularly important from the comparative point of view. Experiment

3 provides the second main contribution of this paper. As far as we know,

this is the first report to study the effects of both reinforcer schedule and reinforcer magnitude in a within-subject, spaced-trial design. As previously

mentioned, massed-training procedures allow for stimulus and memory carry-over effects from prior trials to control the instrumental response (Sheffield, 1949). In addition, within-subject designs involving relatively massed

conditions of training encourage comparison across training conditions that

may influence behavior (Svartdal, 2000). These potential carry-over and

comparison effects are minimized or eliminated by the use of widely spaced

training conditions and by the removal of the animals from the conditioning

context during the ITI. As a result, spaced-trial procedures permit a cleaner

assessment of the manner in which the associative strength of the discriminative stimulus controls the instrumental response. The pattern of results reported in these experiments (i.e., bPREE and reversed wPREE) is therefore

not attributable to these factors.

Different extinction performance across groups or conditions in an experiment may sometimes be attributable to the effects of the target manipulations on acquisition. Different transformations have been suggested to

deal with the problem generated by group differences in early extinction trials (e.g., Anderson, 1963). However, the bPREE reported in Experiments 1

and 2, and the reverse wPREE reported in Experiment 3 occurred in the absence of an effect of partial reinforcement training on acquisition in general,

and on late acquisition or early extinction in particular. The bPREEs obtained in Experiments 1 and 2 could be attributed to the prior experience

of the pigeons or, perhaps, the reverse wPREE reported in Experiment 3

could be the result of using experimentally naive animals. A consideration

of the assignment procedures, which were different in each of these

experiments, suggests that prior experience is unlikely to be the source

of these results. Whereas the assignment of pigeons in Experiment 1 was

congruent with prior training, the assignment in Experiment 2 was orthogonal to previous experience. Furthermore, in Experiment 1, for which assignment was not orthogonal, the results of the previous and present

experiments were opposite. Thus, the pigeons showing the slowest extinction

in Experiment 1 (Group P) were those showing the fastest extinction in their

previous experiments (small reward group in Papini, 1997, Experiments 1

and 3, and in Papini & Thomas, 1997). Also relevant is the fact that the previous experience of the pigeons used in Experiments 1 (mostly one-trial-perday experiments; Papini, 1997; Papini & Thomas, 1997), and 2 (massedtraining procedures; Stout et al., 2002) was considerably different. Additional information suggesting that the bPREE is not an artifact of prior

training comes from studies involving experimentally naive pigeons. Using

a runway situation, Thomas (2001) found that reward inconsistency increased persistence in extinction of the running response both after partial

reinforcement training (thus replicating the results reported by Roberts et

al., 1963), and after a random mixture of large and small rewards. It is thus

safe to conclude that reward uncertainty in acquisition increases persistence

in extinction when pigeons are trained under spaced-trial conditions and

comparisons are made between groups.

Although currently popular theoretical accounts may explain one of the

reported effects, most theories would find it difficult to account for the complete pattern. Moreover, the picture is more complex if the effects of reward

magnitude are added. The available evidence on spaced-trial effects in

pigeons is summarized in Table 1. There are two basic problems to be

reconciled, both involving a dissociation of results. The first dissociation

involves the regular bPREE and the reversed MREE, found in both

between- and within-subject designs. The second dissociation involves the

Table 1

Summary of results from spaced-trial experiments with pigeons

| Design and response | PREE (Reference) | MREE (Reference) |
|---|---|---|
| **Between-subject designs** | | |
| Key-pecking | Conventional (Present Experiments 1–2) | Reversed (Papini, 1997, Experiments 1–2; Papini & Thomas, 1997) |
| Running | Conventional (Roberts et al., 1963; Thomas, 2001, Experiment 1) | Reversed (Thomas, 2001, Experiment 1) |
| **Within-subject designs** | | |
| Key-pecking | Reversed (Present Experiment 3) | Reversed (Papini, 1997, Experiment 3; Present Experiment 3) |
| Running | No effect (see text for details) (Mellgren & Seybert, 1973) | No information available |

PREE, which appears in several between-subject experiments, but not in the

within-subject experiment reported here, which yielded evidence of a

reversed wPREE.

Consider the first dissociation. A basic problem with the dissociation of

spaced-trial bPREE and reversed MREE is that schedule and magnitude

of reinforcement have traditionally been thought of as affecting associative

strength in the same manner. One example is the simple view, first suggested

by Thorndike (1911), that reinforcement strengthens a stimulus-response association, whereas nonreinforcement weakens it. This theoretical notion has

been applied extensively with some success (e.g., Couvillon & Bitterman,

1985; Rescorla & Wagner, 1972; Schmajuk, 1997). According to this

strengthening–weakening (S–W) view, the strength of a stimulus increases

directly with the frequency and magnitude of reinforcement. Most of these

models therefore predict reversed bPREEs and MREEs in any situation

in which the associative strength of a stimulus can be assessed relatively

directly, as in spaced-trial situations.
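
The S–W prediction can be illustrated with a simple linear-operator update of the kind formalized by Rescorla and Wagner (1972). The sketch below is illustrative only: the learning rate, the scaling of the asymptote to pellet number, and the strictly alternating reinforced/nonreinforced sequence standing in for a random 50% schedule are all assumptions, not values taken from the present experiments.

```python
def final_strength(outcomes, rate=0.2):
    """Apply V += rate * (lam - V) over trials; lam is 0 on nonreinforced trials."""
    v = 0.0
    for lam in outcomes:
        v += rate * (lam - v)
    return v


N_TRIALS = 48               # acquisition trials per stimulus, as in Experiment 3
LARGE, SMALL = 1.0, 1 / 15  # assumed asymptotes, scaled to 15 vs. 1 pellet

v_cl = final_strength([LARGE] * N_TRIALS)               # continuous, large reward
v_pl = final_strength([LARGE, 0.0] * (N_TRIALS // 2))   # 50% partial, large reward
v_cs = final_strength([SMALL] * N_TRIALS)               # continuous, small reward

print(f"CL={v_cl:.3f}  PL={v_pl:.3f}  CS={v_cs:.3f}")
```

With these assumed parameters the continuous-large cue ends acquisition with the greatest strength, so a response that tracks strength directly would persist longest in extinction to that cue, which corresponds to reversed PREE and MREE.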

The failure of S–W views led to the development of other theories, which,

although based on different principles, still predict that training conditions

producing one effect should also produce the other. For example, Amsel's

frustration theory (1992) and Capaldi's sequential theory (1994), both considered classic accounts of the PREE, can explain the results of Experiment

1 in general but fail to predict more specific aspects of such results. Amsel's

(1992) account is inconsistent with the absence of a PRAE, and both accounts would demand a faster extinction rate than that observed in Group

C. As shown in Fig. 1, the behavior of continuously rewarded pigeons remained undisturbed during the initial 24 trials of extinction. Moreover, both

of these classic theories fail to account for the reversed wPREE obtained in

Experiment 3 and for the reversed bMREE and wMREE (e.g., Papini, 1997;

present Experiment 3). In terms of frustration theory, a within-subject design should lead both the partial and continuous cues (and the large- and

small-reinforcer cues) to induce a common internal response of anticipatory

frustration, which should result in nondifferential extinction to both cues

(e.g., Amsel et al., 1966).

The second dissociation (i.e., bPREE versus reversed wPREE) is also difficult to explain for most learning theories. A viable account of this dissociation was offered by Mellgren and Seybert (1973), based on Capaldi's

sequential theory. For a within-subject experiment, sequential theory predicts nondifferential extinction rates for stimuli paired with partial-large

reinforcement and continuous-large reinforcement, provided a specific

assumption is met, namely, that there is an equal number of NR transitions

in the partial-large stimulus and in the continuous-large stimulus. When this

provision is not met, the results may lead to either a wPREE or a reversed

wPREE depending on the sequential bias of the schedule used in acquisition, as mentioned previously (Mellgren & Seybert, 1973). Because the

sequence of the three stimuli used in Experiment 3 was counterbalanced

across subjects, different pigeons received a different number of transitions.

The counterbalance criterion was chosen to equate the number of times each

stimulus appeared in each position during a series of 9 successive trials (3

trials/day). According to this procedure, PL, CL, and CS trials each occurred three times in each of the three positions (first, second, or third trial

of the day) for the group as a whole. Sporadic errors that modified the

scheduled sequence for a particular animal also introduced variability across

pigeons. Table 2 shows the results of an analysis of the actual sequences per

stimulus computed separately for the sequences occurring within days and

for those occurring across days. Data on transitions from small reward to

large reward were added because sequential theory clearly applies to such

cases (Capaldi, 1994). In agreement with Mellgren and Seybert's (1973) results, the continuous-large stimulus exhibited both the highest number of

critical transitions (within and between days) and the highest persistence

in extinction of the three stimuli used in Experiment 3. Moreover, strong

correlations were also evident across pigeons in terms of these two measures,

but their sign was opposite for within- and between-day sequences. For the

trials occurring within a single day and separated by an ITI of about 1–1.5 h

the correlation was positive, as predicted by sequential theory, namely, the

larger the number of transitions, the greater the level of persistence in extinction. However, for sequences occurring across days and separated by an ITI

of 18–21 h, the correlation was significant but negative. The results for

the partial-large and continuous-small stimuli also contradicted sequential

theory's predictions. Despite individual variation in both measures, none

of the correlations approached significance and, in two cases, their sign

was negative. Of course, the sequential hypothesis also predicts the

MREE based on the assumption that, in extinction, there would be greater

Table 2

Sequential analysis of Experiment 3

| Measure | Partial large (NL + SL) | Continuous large (NL + SL) | Continuous small (NS) |
|---|---|---|---|
| Sequences within days | 7.3 (2–12) | 16.6 (13–19) | 4.8 (3–7) |
| Probability of response | 0.48 (0.21–0.79) | 0.64 (0.29–0.92) | 0.47 (0.13–0.85) |
| Pearson's coefficient | −0.41 | 0.80* | −0.42 |
| Sequences between days | 4.3 (0–7) | 7.9 (4–13) | 2.9 (0–6) |
| Probability of response | 0.48 (0.21–0.79) | 0.64 (0.29–0.92) | 0.47 (0.13–0.85) |
| Pearson's coefficient | 0.60 | −0.88* | 0.64 |

Note. N: nonreinforcement in partial-large trials. L: large reinforcer. S: small reinforcer. The sequences of reinforcement and nonreinforcement correspond to the acquisition phase. The probability of response was computed in terms of the number of extinction trials in which the pigeon completed the fixed-ratio 10 requirement for each stimulus divided by 48 (the total number of extinction trials with each stimulus). Ranges are given in parentheses.

* p < 0.01, two-tailed test.

generalization of memory control from a large reward to nonreward, than

from a small reward to nonreward (Capaldi, 1994). This prediction is not

supported by spaced-trial experiments that have consistently shown reversed

bMREE and wMREE (Papini, 1997; Papini & Thomas, 1997). Furthermore, and based on the same memory generalization-decrement mechanism,

sequential theory also predicts that a transition from a large to a small reinforcer magnitude should produce a successive negative contrast effect,

which failed to develop also under spaced-trial conditions (Papini, 1997).
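
The kind of tally summarized in Table 2 can be sketched as follows. This is a hypothetical reconstruction, not the authors' analysis script: it assumes that the column labels denote (previous outcome, current outcome) pairs, that a transition is credited to the stimulus presented on the current trial, and that a transition counts as between days when the current trial is the first of its day. The example block uses invented outcomes for the partial stimulus.

```python
from collections import Counter

# Assumed reading of Table 2: (previous outcome, current outcome) per stimulus.
CRITICAL = {
    "PL": {("N", "L"), ("S", "L")},
    "CL": {("N", "L"), ("S", "L")},
    "CS": {("N", "S")},
}


def count_transitions(days):
    """Tally critical transitions per stimulus, within and between days.

    `days` is a list of days; each day is a list of (stimulus, outcome)
    pairs in the order in which the trials were run.
    """
    within, between = Counter(), Counter()
    previous = None  # outcome of the preceding trial, if any
    for day in days:
        for index, (stimulus, outcome) in enumerate(day):
            if previous is not None and (previous, outcome) in CRITICAL[stimulus]:
                # The first trial of a day follows the last trial of the prior day.
                target = between if index == 0 else within
                target[stimulus] += 1
            previous = outcome
    return within, between


# Hypothetical 3-day block in the scheduled order PLP SLL SSP.
block = [
    [("PL", "N"), ("CL", "L"), ("PL", "N")],
    [("CS", "S"), ("CL", "L"), ("CL", "L")],
    [("CS", "S"), ("CS", "S"), ("PL", "L")],
]
within, between = count_transitions(block)
print(dict(within), dict(between))
```

Per-pigeon totals obtained this way over the whole acquisition phase could then be correlated with the probability of response in extinction for each stimulus.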

More recent theoretical arguments also find it difficult to account for the

emerging pattern of results from spaced-trial experiments with pigeons. For

example, Pearce et al. (1997), elaborating on Capaldi's (1994) sequential theory, suggested that the conditions of reinforcement and nonreinforcement

induce an internal state that accompanies the presentation of cues thus providing an internal context. In a between-subject situation, the internal context changes more abruptly in extinction for animals exposed to continuous

reinforcement, than for animals trained with partial reinforcement, thus giving rise to the conventional bPREE. However, a within-subject design implies a common internal context thus equating generalization from

acquisition to extinction for both the continuous and the partial cues. Under

these conditions, this account predicts that performance in extinction would

reflect the associative strength of each cue. Given that the partial cue has

been frequently nonreinforced, it is expected to have less strength than the

continuous cue hence leading to a reversed wPREE. On the assumption,

mentioned previously, that reinforcement frequency and magnitude have

similar effects, then a cue paired with a small reward should acquire less

strength than one paired with a large reward. Pearce et al.'s (1997) account

works well with the results of the present experiments, but it fails to predict

the reversed bMREE reported in previous articles (Papini, 1997; Papini &

Thomas, 1997). The bPREE and reversed bMREE were also obtained within the same experiment, measuring running performance (Thomas, 2001).

The results of spaced-trial experiments with pigeons are also inconsistent

with other contemporary proposals. First, Daly and Daly's (1982) model, a

set of linear equations combining Rescorla-Wagner's formal account of conditioning and Amsel's frustration theory, accounts for the bPREE observed

in Experiment 1, but predicts conventional wPREE and wMREE for Experiment 3, rather than the reversed effects that were actually obtained. Second,

Eisenberger (1992) suggested that partial reinforcement induces a type of

generalized persistence, called learned industriousness, which transfers from

the original training context to new situations. This account leads to the

expectation that extinction should be equivalent in the within-subject

situation; presumably, the persistence induced by the partially reinforced

cue should transfer to all other cues extinguished in the same or in a different

situation. Precisely the opposite was found in the present Experiment 3.

Third, Rescorla (1999), elaborating on Amsel's frustration theory, argued

that performance in within-subject conditions would be under the control of

a configuration of the external cue plus an internal condition such as anticipatory frustration (see also Pearce et al., 1997). A configural control of

this type is at variance with the reversed wPREE and wMREE reported

in Experiment 3.

An explanation of the apparent dissociation between PREE and MREE

found in pigeons would seem to require a mechanism that applies to reinforcer schedule but not to reinforcer magnitude. Ideally, such a mechanism

would also have to be sensitive to the between-subject versus within-subject

dimension to successfully deal with the second dissociation mentioned previously (i.e., between-subject versus within-subject effects). Following Pearce

et al.'s (1997) suggestion, one could argue that when this putative mechanism is engaged, it takes control of behavior away from the basic S–W process but, when it is not engaged, the S–W mechanism operates freely. Thus,

the bPREE would be produced by a mechanism engaged by reinforcement

uncertainty that overlies the S–W process, whereas the reversed MREE and

reversed wPREE would be produced by the simple S–W mechanism. There

are at least three hypotheses that may potentially fit this scenario.

The first is referred to as the response-unit hypothesis. Mowrer and Jones

(1945) suggested that partial reinforcement shapes a larger behavioral unit

than that shaped by continuous reinforcement. For example, an animal receiving one reinforcer per response develops a unit involving one response,

whereas another receiving, on average, one reinforcer every other response

develops a unit involving two responses. When shifted to extinction, the second animal will emit twice as many responses as the first, but the same

number of units. There is some evidence that reinforcement can induce

the formation of response patterns that exhibit a unified organization. For

example, pigeons required to peck four times, in any order, on two response

keys tend to develop a stereotyped sequence. When such sequences are

exposed to fixed-interval or fixed-ratio schedules, the sequence as a whole

exhibits properties that are typical of individual responses, such as a postreinforcement pause (Schwartz, 1982). The response-unit hypothesis

predicts that the bPREE should disappear (i.e., partial and continuous

groups exhibit the same extinction performance) when response units are

matched across groups. Although this hypothesis was not explicitly postulated to explain the effects of reinforcer magnitude on extinction, it could

be easily extended to such a case. Applied to the case of reinforcer magnitude, the response-unit hypothesis would plausibly predict that the response

unit will be established faster in the large-reinforcer condition than in the

small-reinforcer condition. This follows from the application of the S–W

rule to the response unit. In turn, this difference would lead to the expectation that the large-reinforcer group would extinguish more slowly than the

small-reinforcer group when extinction is measured in terms of individual

responses. This prediction fits the available evidence for pigeons (Papini,

1997; Papini & Thomas, 1997; present Experiment 3). Furthermore, the

response-unit hypothesis predicts that the reversed MREE should diminish

or disappear after extended acquisition practice, a prediction that is both

counterintuitive and easy to test. The failure of the response-unit hypothesis

to explain the absence of dissociation in the within-subject condition, which

yielded a reversed wPREE and a reversed wMREE, could be accommodated in the following manner. In a within-subject situation, response units

could only develop if they can fall under stimulus control. It seems plausible

that the intermixing of trial types in a within-subject situation would introduce substantial levels of proactive interference that would tend to disrupt

the development of response units. Proactive interference has been demonstrated in pigeons in situations involving exposure to multiple stimuli (e.g.,

Roberts, 1980). The response-unit hypothesis can be accommodated to

explain the entire pattern of results described in Table 1 and therefore merits

a more direct testing.

The second may be referred to as the timing hypothesis. Gibbon, Farrell,

Locurto, Duncan, and Terrace (1980) suggested that trial responding depends on a comparison between the food expectancy induced by trial stimuli

versus that induced by the context in which trials take place. They further

assumed that these expectancies are inversely related to the duration of

the trial stimulus and directly related to the average interreinforcement interval, respectively. Thus, since the animal receives, on average, twice as

many trials per reinforcement at twice the interreinforcement interval in a

50% schedule than in a 100% schedule, the ratio of trial to interreinforcement durations is equal in both situations. The timing hypothesis predicts

that the bPREE would disappear when extinction performance is measured

as a function of expected reinforcements, rather than trials, a prediction

which Gibbon et al. (1980) confirmed in an extensive reanalysis of published

evidence from Pavlovian conditioning experiments (see also Gallistel & Gibbon, 2000; Gibbon & Balsam, 1981). This timing hypothesis was developed

to a large extent on the basis of data collected in autoshaping studies with

pigeons. Unlike in the present procedure (based on the delivery of discrete

pellets), autoshaping studies have typically varied reinforcer magnitude in

terms of hopper duration, the time during which a hopper containing grain

is made accessible to the pigeon (e.g., Balsam & Payne, 1979). With this duration procedure, magnitude either has no effect on acquisition rate, or it

produces faster acquisition with small rewards. As a result, timing theorists

tend to assume that reinforcer magnitude does not affect acquisition or extinction. As applied to the data summarized in Table 1, this conclusion

works well for acquisition data, which generally show no magnitude effects,

but it does not apply to extinction results. Extinction rates are typically

clearly different across groups; pigeons trained with large reinforcers extinguish relatively more slowly than pigeons trained with small reward (e.g.,

Papini, 1997; Papini & Thomas, 1997). This rate difference suggests that a

transformation of extinction data in terms of expected reinforcers (cf. Gibbon et al., 1980) would not eliminate the effect of reinforcer magnitude on

extinction. Timing theory offers, therefore, no clear explanation of the magnitude effects reported in spaced-trial experiments with pigeons. Also unclear is the way in which the timing hypothesis would deal with the

interreinforcement interval. Exposure to the training apparatus during this

interval is assumed to lead to contextual conditioning. However, in the

spaced-trial procedure used in the present experiments, the pigeon was in

the training context during a relatively small fraction of the interreinforcement interval; pigeons were in their cages during most of this interval. Thus,

the timing hypothesis is so tied to particular training procedures that it is difficult to apply when, as in the present experiments, training involves somewhat unusual circumstances.
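
The equal-ratio argument at the core of the timing hypothesis can be made concrete with a small calculation. The sketch below is an illustration rather than Gibbon et al.'s (1980) model: the durations are hypothetical, the function name is an assumption, and the computation covers only the step stated in the text, namely that halving the reinforcement probability doubles both the cumulative trial time and the interreinforcement interval per reinforcer.

```python
def time_per_reinforcer(trial_s, cycle_s, p_reinforcement):
    """Return (cumulative trial-stimulus time, interreinforcement interval) per reinforcer.

    trial_s: duration of the trial stimulus; cycle_s: time from one trial
    onset to the next; p_reinforcement: probability that a trial is reinforced.
    """
    trials_per_reinforcer = 1 / p_reinforcement
    return trial_s * trials_per_reinforcer, cycle_s * trials_per_reinforcer


# Hypothetical durations in seconds; not taken from the present experiments.
TRIAL_S, CYCLE_S = 10.0, 100.0

continuous = time_per_reinforcer(TRIAL_S, CYCLE_S, 1.0)
partial = time_per_reinforcer(TRIAL_S, CYCLE_S, 0.5)

for label, (trial_time, interval) in (("100%", continuous), ("50%", partial)):
    print(f"{label}: trial time={trial_time}, interval={interval}, ratio={interval / trial_time}")
```

Both quantities double under the 50% schedule, so their ratio is unchanged. The calculation treats the whole interval between trials as time in the training context, which is the assumption questioned above for spaced-trial procedures.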

A third theoretical possibility, referred to as the attentional hypothesis, is

particularly relevant to the second dissociation mentioned previously, the

different effects of partial reinforcement in between-subject versus within-subject designs. Bouton and Sunsay (2001) suggested that partial reinforcement encourages attention to both the trial stimulus and the contextual

cues in which trials occur because of the surprising nature of the R and N

outcomes. In contrast, continuous reinforcement encourages attention only

to the trial stimulus. Consistent with this attentional hypothesis, Bouton and

Sunsay (2001) reported that responding to a partial stimulus is more sensitive to contextual change than responding to a continuously reinforced stimulus, in a within-subject, appetitive conditioning experiment with rats. A

change from acquisition to extinction leads to a greater attentional shift

away from the trial stimulus and to the contextual stimuli in the 100% reinforcement condition than in the 50% reinforcement condition, thus leading

to the PREE. In a within-subject situation such as that used in Experiment

3, the presentation of the partial stimulus in the same context as the other

two stimuli would tend to match the amount of attention to contextual cues

across stimuli. In the absence of differential attention to the context, and applying Pearce et al.'s (1997) rule, the S–W mechanism takes priority, producing a reversed PREE. The attentional hypothesis has not been explicitly

applied to the reinforcer magnitude case. However, it seems plausible to expect that attention to the context would be inversely related to reinforcer

magnitude. A testable prediction suggests that a context shift should be

more detrimental to a stimulus paired with a small reinforcer than to one

paired with a large reinforcer. In this case, then, the attentional hypothesis

predicts that a shift to extinction should be more disrupting in the large-reinforcer group than in the small-reinforcer group (i.e., an MREE). Thus,

this attentional hypothesis cannot explain the reversed bMREE (Papini,

1997; Papini & Thomas, 1997) and wMREE (Experiment 3).

The results of this series of spaced-trial experiments with pigeons are

difficult to explain for a wide variety of theoretical accounts, both classic

and contemporary. The merits of the response-unit, timing, and attentional

hypotheses need to be established in experiments explicitly designed to test

their application to the present conditions of training. A consideration of all

the available evidence suggests that it may be premature to conclude that the

same mechanisms explain the adjustment to reinforcement uncertainty in pigeons and rats, despite the behavioral similarities in the bPREE. The first

piece of evidence is provided by the dissociation of bPREE and bMREE

in spaced-trial runway and key-pecking experiments with pigeons (Papini,

1997; Papini & Thomas, 1997; Roberts et al., 1963; Thomas, 2001). Therefore, the same training conditions that yield the spaced-trial, bPREE lead to

a reversed bMREE in pigeons. This dissociation of the bPREE and bMREE

is inconsistent with evidence from experiments with rats, for which these effects co-vary under analogous conditions of training (Hulse, 1958; Wagner,

1961). The second piece of evidence is provided by the drug profile of the

pigeon's bPREE, which appears to differ from that of the rat. For example,

haloperidol (mainly a dopamine antagonist) eliminates the bPREE in pigeons (Thomas, 2001), but has no effect in rats (Feldon, Katz, & Weiner,

1988); by contrast, chlordiazepoxide (a benzodiazepine anxiolytic) delays

the emergence of the bPREE without affecting its size in pigeons (Thomas,

2001), but it eliminates the bPREE in rats (Feldon & Gray, 1981; McNaughton, 1984). These results suggest that different underlying mechanisms contribute to the PREE in pigeons and rats.

Acknowledgments

Some of the results in this paper were presented at the 10th Annual Meeting of the American Psychological Society, Washington D.C., May 1998.

We thank John Capaldi, Roger Mellgren, and Steven Stout for comments

on a previous version of the manuscript.

References

Amsel, A. (1992). Frustration theory. An analysis of dispositional learning and memory. New

York: Cambridge University Press.

Amsel, A., Rashotte, M. E., & MacKinnon, J. R. (1966). Partial reinforcement effects within

subject and between subjects. Psychological Monographs, 80(628).

Anderson, N. H. (1963). Comparison of different populations: Resistance to extinction and

transfer. Psychological Review, 70, 162–179.

Balsam, P. D., & Payne, D. (1979). Intertrial interval and unconditioned stimulus durations in

autoshaping. Animal Learning and Behavior, 7, 477–482.

Boitano, J. J., & Foskett, M. D. (1968). Effects of partial reinforcement on speed of approach

responses in goldfish (Carassius auratus). Psychological Reports, 22, 741–744.

Bouton, M. E., & Sunsay, C. (2001). Contextual control of appetitive conditioning: Influence of

a contextual stimulus generated by a partial reinforcement procedure. Quarterly Journal of

Experimental Psychology, 54B, 109–125.

Capaldi, E. J. (1994). The sequential view: From rapidly fading stimulus traces to the

organization of memory and the abstract concept of number. Psychonomic Bulletin and

Review, 1, 156–181.

Couvillon, P. A., & Bitterman, M. E. (1985). Analysis of choice in honeybees. Animal Learning

and Behavior, 13, 246–254.

Crawford, L. L., Steirn, J. N., & Pavlik, W. B. (1985). Within- and between-subjects partial

reinforcement effects with an autoshaped response using Japanese quail (Coturnix coturnix

japonica). Animal Learning and Behavior, 13, 85–92.

Daly, H. B., & Daly, J. T. (1982). A mathematical model of reward and aversive nonreward:

Its application in over 30 appetitive learning situations. Journal of Experimental Psychology:

General, 111, 441–480.

Eisenberger, R. (1992). Learned industriousness. Psychological Review, 99, 248–267.

Feldon, J., & Gray, J. A. (1981). The partial reinforcement extinction effect after treatment with

chlordiazepoxide. Psychopharmacology, 73, 269–275.

Feldon, J., Katz, Y., & Weiner, I. (1988). The effects of haloperidol on the partial reinforcement

extinction effect (PREE): Implications for neuroleptic drug action on reinforcement and

nonreinforcement. Psychopharmacology, 95, 528–533.

Gallistel, C. R., & Gibbon, J. (2000). Time, rate, and conditioning. Psychological Review, 107,

289–344.

Gellermann, L. W. (1933). Chance orders of alternating stimuli in visual discrimination

experiments. Journal of Genetic Psychology, 42, 206–208.

Gibbon, J., & Balsam, P. D. (1981). Spreading association in time. In C. M. Locurto, H. S.

Terrace, & J. Gibbon (Eds.), Autoshaping and conditioning theory (pp. 219–253). New York:

Academic.

Gibbon, J., Farrell, L., Locurto, C. M., Duncan, H. J., & Terrace, H. S. (1980). Partial

reinforcement in autoshaping with pigeons. Animal Learning and Behavior, 8, 45–59.

Gonzalez, R. C., & Bitterman, M. E. (1962). A further study of partial reinforcement in the

turtle. Quarterly Journal of Experimental Psychology, 14, 109–112.

Gonzalez, R. C., & Bitterman, M. E. (1967). Partial reinforcement effect in the goldfish as a function

of amount of reward. Journal of Comparative and Physiological Psychology, 64, 163–167.

Goodrich, K. P. (1959). Performance in different segments of an instrumental response chain as

a function of reinforcement schedule. Journal of Experimental Psychology, 57, 57–63.

Graf, C. L. (1972). Spaced-trial partial reward in the lizard. Psychonomic Science, 27, 153–154.

Hulse, S. H., Jr. (1958). Amount and percentage of reinforcement and duration of goal

confinement in conditioning and extinction. Journal of Experimental Psychology, 56, 48–57.

McNaughton, N. (1984). Effects of anxiolytic drugs on the partial reinforcement effect in

runway and Skinner box. Quarterly Journal of Experimental Psychology, 36B, 319–330.

Mellgren, R. L., & Dyck, D. G. (1974). Reward magnitude in differential conditioning: Effects of

sequential variables in acquisition and extinction. Journal of Comparative and Physiological

Psychology, 86, 1141–1148.

Mellgren, R. L., & Seybert, J. A. (1973). Resistance to extinction at spaced trials using the

within-subject procedure. Journal of Experimental Psychology, 100, 151–157.

Mowrer, O. H., & Jones, H. M. (1945). Habit strength as a function of the pattern of

reinforcement. Journal of Experimental Psychology, 35, 293–311.

Muzio, R. N., Segura, E. T., & Papini, M. R. (1992). Effect of schedule and magnitude of

reinforcement on instrumental learning in the toad, Bufo arenarum. Learning and

Motivation, 23, 406–429.

Muzio, R. N., Segura, E. T., & Papini, M. R. (1994). Learning under partial reinforcement in

the toad (Bufo arenarum): Effects of lesions in the medial pallium. Behavioral and Neural

Biology, 61, 36–46.

Papini, M. R. (1997). Role of reinforcement in spaced-trial operant learning in pigeons

(Columba livia). Journal of Comparative Psychology, 111, 275–285.

Papini, M. R. (2002). Pattern and process in the evolution of learning. Psychological Review,

109, 186–201.

Papini, M. R., Muzio, R. N., & Segura, E. T. (1995). Instrumental learning in toads (Bufo

arenarum): Reinforcer magnitude and the medial pallium. Brain, Behavior, and Evolution,

46, 61–71.

Papini, M. R., & Thomas, B. L. (1997). Spaced-trial operant learning with purely instrumental

contingencies in pigeons (Columba livia). International Journal of Comparative Psychology,

10, 128–136.

Pavlik, W. B., & Carlton, P. L. (1965). A reversed partial-reinforcement effect. Journal of

Experimental Psychology, 70, 417–423.

Pavlik, W. B., Carlton, P. L., & Hughes, R. A. (1965). Partial reinforcement effects in a runway:

Between- and within-Ss. Psychonomic Science, 3, 203–204.

Pearce, J. M., Redhead, E. S., & Aydin, A. (1997). Partial reinforcement in appetitive Pavlovian

conditioning with rats. Quarterly Journal of Experimental Psychology, 50B, 273–294.

Pert, A., & Bitterman, M. E. (1970). Reward and learning in the turtle. Learning and

Motivation, 1, 121–128.

Rescorla, R. A. (1999). Within-subject partial reinforcement extinction effect in autoshaping.

Quarterly Journal of Experimental Psychology, 52B, 75–87.

Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the

effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy

(Eds.), Classical conditioning II: Current research and theory (pp. 64–99). New York:

Appleton-Century-Crofts.

Roberts, W. A. (1980). Distribution of trials and intertrial retention in delayed matching to sample

with pigeons. Journal of Experimental Psychology: Animal Behavior Processes, 6, 217–237.

Roberts, W. A., Bullock, D. H., & Bitterman, M. E. (1963). Resistance to extinction in the

pigeon after partially reinforced instrumental training under discrete-trials conditions.

American Journal of Psychology, 76, 353–365.

Sadler, E. W. (1968). A within- and between-subjects comparison of partial reinforcement in

classical salivary conditioning. Journal of Comparative and Physiological Psychology, 66,

695–698.

Schmajuk, N. A. (1997). Animal learning and cognition. A neural network approach. Cambridge,

UK: Cambridge University Press.

Schutz, S. L., & Bitterman, M. E. (1969). Spaced-trials partial reinforcement and resistance to

extinction in the goldfish. Journal of Comparative and Physiological Psychology, 68, 126–128.

Schwartz, B. (1982). Interval and ratio reinforcement of a complex sequential operant in

pigeons. Journal of the Experimental Analysis of Behavior, 37, 349–357.

Sheffield, V. F. (1949). Extinction as a function of partial reinforcement and distribution of

practice. Journal of Experimental Psychology, 39, 511–526.

Stout, S. C., Muzio, R. N., Boughner, R., & Papini, M. R. (2002). After effects of the surprising

presentation and omission of appetitive reinforcers on key pecking performance in pigeons.

Journal of Experimental Psychology: Animal Behavior Processes, 28, 242–256.

Svartdal, F. (2000). Persistence during extinction: Conventional and reversed PREE under

multiple schedules. Learning and Motivation, 31, 21–40.

Thomas, B. L. (2001). Determinants of the spaced-trial partial reinforcement extinction effect in

pigeons. Unpublished Doctoral Dissertation, Texas Christian University.

Thorndike, E. L. (1911). Animal intelligence. New York: Macmillan.

Wagner, A. R. (1961). Effects of amount and percentage of reinforcement and number of

acquisition trials on conditioning and extinction. Journal of Experimental Psychology, 62,

234–242.

Weinstock, S. (1954). Resistance to extinction of a running response following partial

reinforcement under widely spaced trials. Journal of Comparative and Physiological

Psychology, 47, 318–322.