SourceCode - Department of Computer and Information Science


Source Code

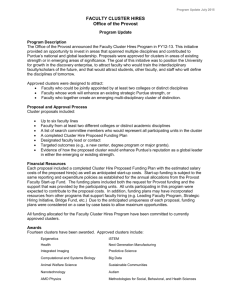

Project Report: Comparing Clustering Algorithms

Participants:

Joyesh Mishra

Vasanth Prabhu Sundararaj

Gnana Sundar Rajendiran

CIS 6930 Data Mining Fall 2007

Department of Computer and Information Science & Engineering

University of Florida

Index

Source Code:

1. K-Means

2. Agglomerative Clustering

3. DBSCAN Using KD Trees

4. CURE

1. K-Means

2. Agglomerative

Single Link

load data1.dat;

X = data1';

%X = [0.4 0.22 0.35 0.26 0.08 0.45;0.53 0.38 0.32 0.19 0.41 0.30];

[D,N] = size(X);

%MAKE_SET

tempVec(1,1:N) = 1:N;

parentVec(1,1:N) = tempVec;

rankVec(1,1:N) = 0;

X2 = sum(X.^2,1);

dist = repmat(X2,N,1) + repmat(X2',1,N) - 2*X'*X;

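% Mask the diagonal and the upper triangle with Inf so that each unordered

% pair (i,j) with i > j is considered exactly once and masked entries sort last

for i = 1:N

dist(i,i:N) = Inf;

end

% Clamp tiny negative round-off values before taking the square root

dist = sqrt(max(dist,0));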

vect = reshape(dist,1,N*N);

[sortVect,IX] = sort(vect);

iterNum = 0;

k = 0;

tic

while(length(find(~(parentVec - tempVec)))~=1)

iterNum = iterNum + 1;

pos2 = floor((IX(iterNum)-1)/N)+1;

pos1 = IX(iterNum) - (pos2-1)*N;

%fprintf('Iternation Number..%d...Closest data points %d %d\n',iterNum,pos1,pos2);

%Find operation%

[parentVal1,parentVec] = findOperation(pos1,parentVec);

[parentVal2,parentVec] = findOperation(pos2,parentVec);


if(parentVal1~=parentVal2)

k = k + 1;

mergingDataPoints(k,1) = pos1;

mergingDataPoints(k,2) = pos2;

%Union Operation (union by rank)%

if(rankVec(parentVal1) > rankVec(parentVal2))

parentVec(parentVal2) = parentVal1;

elseif(rankVec(parentVal2) > rankVec(parentVal1))

parentVec(parentVal1) = parentVal2;

else

% Equal ranks: attach tree 2 under root 1 and increase root 1's rank

parentVec(parentVal2) = parentVal1;

rankVec(parentVal1) = rankVec(parentVal1) + 1;

end

else

fprintf('%d and %d have the same parent %d...\n',pos1,pos2,parentVal1);

end

if(mod(iterNum,2000000)==0)

for i = 1:N

[parentVal,parentVec] = findOperation(i,parentVec);

end

clusterHeads = find(~(parentVec-tempVec));

for i = 1:length(clusterHeads)

dataPts = find(parentVec==clusterHeads(i));

fileName = strcat('clusters/',num2str(iterNum),'_Cluster_',num2str(i),'.txt');

fid = fopen(fileName,'w');

for pt = 1:length(dataPts)

fprintf(fid,'%8.4f\t%8.4f\n',X(1,dataPts(pt)),X(2,dataPts(pt)));

end

fclose(fid);

fprintf('Cluster %d data points...\n',i);

disp(X(:,dataPts)');

end

%pause();

end

end

t = toc

for i = 1:N

[parentVal,parentVec] = findOperation(i,parentVec);

end

fprintf('Elapsed Time - %.4f seconds\n',t);

clusterHeads = find(~(parentVec-tempVec));

for i = 1:length(clusterHeads)

dataPts = find(parentVec==clusterHeads(i));

%disp(dataPts);

fileName = strcat('clusters/',num2str(iterNum),'_Cluster_',num2str(i),'.txt');

fid = fopen(fileName,'w');

for pt = 1:length(dataPts)

fprintf(fid,'%8.4f\t%8.4f\n',X(1,dataPts(pt)),X(2,dataPts(pt)));

end

fclose(fid);

end

disp(mergingDataPoints);

% plot(X(1,:),X(2,:),'k*','MarkerSize',3);

% hold on;

% for i = 1:k

%     dPt1 = X(:,mergingDataPoints(i,1));

%     dPt2 = X(:,mergingDataPoints(i,2));

%     line([dPt1(1,1) dPt2(1,1)],[dPt1(2,1) dPt2(2,1)],'Color','r');

% end
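The single-link script builds the whole distance matrix in one vectorised step using the identity ‖x − y‖² = ‖x‖² + ‖y‖² − 2xᵀy: `X2` holds the squared norms and `X'*X` the dot products. The Java sketch below (class and method names are illustrative, not part of the project) computes the same matrix entry by entry so the identity can be checked on small inputs, and clamps small negative values caused by floating-point round-off before taking the square root. In Java the loop form brings no speed advantage; the identity pays off in MATLAB because `X'*X` is a single optimised matrix product.

```java
// Sketch: Euclidean distance matrix via ||x||^2 + ||y||^2 - 2 x.y (hypothetical names).
class PairwiseDistance {
    /** Euclidean distance matrix for points[i] in any dimension, via the norm/dot-product identity. */
    static double[][] distances(double[][] points) {
        int n = points.length;
        double[] sq = new double[n];                  // sq[i] = ||x_i||^2, the MATLAB X2 vector
        for (int i = 0; i < n; i++) {
            for (double v : points[i]) sq[i] += v * v;
        }
        double[][] dist = new double[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                double dot = 0;                       // entry (i,j) of X'*X
                for (int d = 0; d < points[i].length; d++) dot += points[i][d] * points[j][d];
                double d2 = sq[i] + sq[j] - 2 * dot;  // ||x_i - x_j||^2
                // Round-off can push d2 slightly below zero for (near-)identical points.
                dist[i][j] = Math.sqrt(Math.max(d2, 0.0));
            }
        }
        return dist;
    }

    public static void main(String[] args) {
        double[][] d = distances(new double[][] {{0, 0}, {3, 4}, {6, 8}});
        System.out.println(d[0][1] + " " + d[0][2] + " " + d[1][2]); // prints "5.0 10.0 5.0"
    }
}
```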

Complete Link

% Loads the data file into a matrix named sample1

load sample1.dat;

% Taking the transpose of the matrix and storing it in X

X = sample1';

% Sentinel distance for the diagonal and for rows/columns of merged-away clusters; it must exceed every real distance (note: this name shadows MATLAB's built-in inf)

inf = 1000;

% Getting the dimensions of the matrix X in D and N

[D,N] = size(X);

% Holds the initial number of clusters (singleton)

tempVec(1,1:N) = 1:N;

parentVec(1,1:N) = tempVec;

rankVec(1,1:N) = 0;

X2 = sum(X.^2,1);

dist = repmat(X2,N,1) + repmat(X2',1,N) - 2*X'*X;

dist = sqrt(max(dist,0)); % clamp round-off negatives before the square root

dist = dist + inf*eye(N);

iterNum = 0;

k = 0;

tic

while(length(find(~(parentVec - tempVec)))~=1)

iterNum = iterNum + 1;

[minVals argmin] = min(dist);

[minDist pt1] = min(minVals);

pt2 = argmin(pt1);

%Find operation%

[parentVal1,parentVec] = findOperation(pt1,parentVec);

[parentVal2,parentVec] = findOperation(pt2,parentVec);

if(parentVal1~=parentVal2)

k = k + 1;

mergingDataPoints(k,1) = pt1;

mergingDataPoints(k,2) = pt2;

fprintf('Merging clusters %d %d\n',parentVal1,parentVal2);

%Union Operation (union by rank): dPt1 becomes the root, dPt2 is absorbed%

if(rankVec(parentVal2) > rankVec(parentVal1))

dPt1 = parentVal2;

dPt2 = parentVal1;

else

dPt1 = parentVal1;

dPt2 = parentVal2;

if(rankVec(parentVal1) == rankVec(parentVal2))

rankVec(parentVal1) = rankVec(parentVal1) + 1;

end

end

parentVec(dPt2) = dPt1;

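% Complete link: the distance from the merged cluster to any other cluster

% is the larger of the two old distances (element-wise max of the two rows)

tempMat = [dist(dPt1,:);dist(dPt2,:)];

maxVals = max(tempMat);

dist(dPt1,:) = maxVals;

dist(:,dPt1) = maxVals';

% Retire the absorbed cluster so it is never selected again

dist(dPt2,:) = inf;

dist(:,dPt2) = inf;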

else

fprintf('%d and %d have the same parent %d...\n',pt1,pt2,parentVal1);

end

if(mod(iterNum,250)==0)

for i = 1:N

[parentVal,parentVec] = findOperation(i,parentVec);

end

clusterHeads = find(~(parentVec-tempVec));

for i = 1:length(clusterHeads)

dataPts = find(parentVec==clusterHeads(i));


fileName = strcat('clusters/',num2str(iterNum),'_Cluster_',num2str(i),'.txt');

fid = fopen(fileName,'w');

for pt = 1:length(dataPts)

fprintf(fid,'%8.4f\t%8.4f\n',X(1,dataPts(pt)),X(2,dataPts(pt)));

end

fclose(fid);

fprintf('Cluster %d data points...\n',i);

disp(X(:,dataPts)');

end

%pause();

end

end

t = toc

for i = 1:N

[parentVal,parentVec] = findOperation(i,parentVec);

end

fprintf('Elapsed Time - %.4f seconds\n',t);

clusterHeads = find(~(parentVec-tempVec));

for i = 1:length(clusterHeads)

dataPts = find(parentVec==clusterHeads(i));

%disp(dataPts);

fileName = strcat('clusters/',num2str(iterNum),'_Cluster_',num2str(i),'.txt');

fid = fopen(fileName,'w');

for pt = 1:length(dataPts)

fprintf(fid,'%8.4f\t%8.4f\n',X(1,dataPts(pt)),X(2,dataPts(pt)));

end

fclose(fid);

end

disp(mergingDataPoints);

% plot(X(1,:),X(2,:),'k*','MarkerSize',3);

% hold on;

% for i = 1:k

%     dPt1 = X(:,mergingDataPoints(i,1));

%     dPt2 = X(:,mergingDataPoints(i,2));

%     line([dPt1(1,1) dPt2(1,1)],[dPt1(2,1) dPt2(2,1)],'Color','r');

% end
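In the complete-link script the row of the surviving root `dPt1` becomes the element-wise maximum of the two merged rows, and the absorbed row and column are set to a sentinel. The following is a minimal Java sketch of that loop, assuming 2-D points; the names are hypothetical, and it uses `Double.POSITIVE_INFINITY` as the sentinel instead of 1000. Unlike the MATLAB code, it always keeps the lower index as the surviving row rather than choosing it by union-find rank, and it returns a cluster label per point directly.

```java
import java.util.Arrays;

// Sketch of agglomerative complete-link clustering on an explicit distance matrix (hypothetical names).
class CompleteLink {
    // Sentinel for the diagonal and for retired rows/columns (the MATLAB script uses 1000).
    static final double INF = Double.POSITIVE_INFINITY;

    /** Absorbs cluster b into cluster a using the complete-link (maximum) update rule. */
    static void merge(double[][] dist, int a, int b) {
        for (int k = 0; k < dist.length; k++) {
            // New distance to k is the larger of the two old ones; retired entries stay INF.
            double m = Math.max(dist[a][k], dist[b][k]);
            dist[a][k] = m;
            dist[k][a] = m;
        }
        for (int k = 0; k < dist.length; k++) {
            // Retire b so it is never picked as part of a closest pair again.
            dist[b][k] = INF;
            dist[k][b] = INF;
        }
    }

    /** Merges the closest pair until k clusters remain (assumes 1 <= k <= n). */
    static int[] cluster(double[][] pts, int k) {
        int n = pts.length;
        double[][] dist = new double[n][n];
        int[] label = new int[n];
        for (int i = 0; i < n; i++) {
            label[i] = i;
            for (int j = 0; j < n; j++) {
                dist[i][j] = (i == j) ? INF : Math.hypot(pts[i][0] - pts[j][0], pts[i][1] - pts[j][1]);
            }
        }
        for (int remaining = n; remaining > k; remaining--) {
            int a = -1, b = -1;
            double best = INF;
            for (int i = 0; i < n; i++) {
                for (int j = i + 1; j < n; j++) {
                    if (dist[i][j] < best) { best = dist[i][j]; a = i; b = j; }
                }
            }
            if (a < 0) break;                 // no finite pair left
            merge(dist, a, b);
            for (int p = 0; p < n; p++) {
                if (label[p] == b) label[p] = a;   // relabel every point of the absorbed cluster
            }
        }
        return label;
    }

    public static void main(String[] args) {
        int[] labels = cluster(new double[][] {{0, 0}, {0, 1}, {10, 0}, {10, 1}}, 2);
        System.out.println(Arrays.toString(labels)); // prints "[0, 0, 2, 2]"
    }
}
```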

Find Operation function

% Returns the root of pos's tree and compresses the path on the way back (save as findOperation.m so both scripts can call it)

function [parentVal parentVec] = findOperation(pos,parentVec)

parentVal = parentVec(pos);

if(parentVal~=pos)

[parentVal parentVec] = findOperation(parentVec(pos),parentVec);

parentVec(pos) = parentVal;

end

end
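`findOperation`, together with `parentVec` and `rankVec`, implements a disjoint-set (union-find) structure with path compression and union by rank. The Java sketch below shows the same structure; the class and method names are hypothetical. Its `find` is written iteratively rather than recursively, a stylistic choice that avoids deep call stacks, and `countSets` corresponds to the MATLAB loop condition that counts entries with `parentVec == tempVec`.

```java
// Sketch of a disjoint-set forest with path compression and union by rank (hypothetical names).
class DisjointSet {
    private final int[] parent;  // parent[i] == i means i is a root (cf. parentVec == tempVec)
    private final int[] rank;    // upper bound on tree height (cf. rankVec)

    DisjointSet(int n) {
        parent = new int[n];
        rank = new int[n];
        for (int i = 0; i < n; i++) parent[i] = i;   // MAKE_SET: every element is its own root
    }

    /** Returns the root of x's tree and points every node on the path straight at it. */
    int find(int x) {
        int root = x;
        while (parent[root] != root) root = parent[root];
        while (parent[x] != root) {      // path compression
            int next = parent[x];
            parent[x] = root;
            x = next;
        }
        return root;
    }

    /** Union by rank; returns false when a and b were already in the same set. */
    boolean union(int a, int b) {
        int ra = find(a), rb = find(b);
        if (ra == rb) return false;
        if (rank[ra] < rank[rb]) { int t = ra; ra = rb; rb = t; }  // make ra the taller tree
        parent[rb] = ra;
        if (rank[ra] == rank[rb]) rank[ra]++;
        return true;
    }

    /** Number of roots, i.e. clusters (the MATLAB while loop stops when this reaches 1). */
    int countSets() {
        int roots = 0;
        for (int i = 0; i < parent.length; i++) if (parent[i] == i) roots++;
        return roots;
    }

    public static void main(String[] args) {
        DisjointSet ds = new DisjointSet(5);
        ds.union(0, 1);
        ds.union(3, 4);
        ds.union(1, 4);
        System.out.println(ds.countSets()); // prints 2
    }
}
```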

3. DBSCAN Algorithm

Point.java

package dbscan;

/**

* Class Point - Stores a Point in the 2 Dimensional Space

* @version 1.0

* @author jmishra

*/

public class Point {

/** The following are the member variables for a Point **/

public double x;

public double y;

public int index;

public Cluster cluster;

public boolean isCorePoint = false;

public Point() {

}

public Point(double x, double y, int index) {

this.x = x;

this.y = y;

this.index = index;

}

public Point(Point point) {

this.x = point.x;

this.y = point.y;

this.index = point.index;

}

/**

* Calculate Distance from a Point t

* @param t Point

* @return

* double Euclidean Distance from t

*/

public double calcDistanceFromPoint(Point t) {

return Math.sqrt(Math.pow(x-t.x, 2) + Math.pow(y-t.y, 2));

}

public boolean equals(Point t) {

return (x == t.x) && (y == t.y);

}

public void setCluster(Cluster cluster) {

this.cluster = cluster;

}

public Cluster getCluster() {

return cluster;

}

public double[] toDouble() {

double[] xy = {x,y};

return xy;

}

}

Cluster.java

package dbscan;

import java.util.ArrayList;

import java.util.Iterator;

/**

* Class Cluster - Represents a Cluster in DBSCAN

* @version 1.0

* @author jmishra

*/

public class Cluster {

/**

* Points Contained in the Cluster

*/

public ArrayList points = new ArrayList();

/**

* Returns the Size of Cluster

* @return

* int Size of Cluster

*/

public int size() {

return points.size();

}

/**

* Calculates if the Point P is in EPS Neighborhood of the Cluster

* @param p Point p

* @param eps EPS Distance

* @return

* boolean <b>true</b> if the point lies in the EPS neighborhood of all points of the cluster,

*         <b>false</b> otherwise

*/

public boolean isInEPSProximityFromAllPoints(Point p, double eps) {

boolean result = true;

Iterator iter = points.iterator();

while(iter.hasNext()) {

Point t = (Point)iter.next();

if(t.calcDistanceFromPoint(p) > eps) {

result = false;

break;

}

}

return result;

}

}

DBSCAN.java

package dbscan;

import java.io.BufferedReader;

import java.io.BufferedWriter;

import java.io.FileReader;

import java.io.FileWriter;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.StringTokenizer;

import edu.wlu.cs.levy.CG.KDTree;

/**

* DBSCAN Algorithm

* Input Parameters: DataFile NumberOfPointsInFile MinPointThreshHoldToClassifyAsCorePoint EPS

*

* The Algorithm uses KD Trees to store the data points and for searching Nearest Neighbors

* @version 1.0

* @author jmishra

*/

public class DBSCAN {

/** Stores the input arguments to the algorithm **/

private String dataFile;

private int totalNumberOfPoints;

private double eps;

private int minPointThreshHold;

private Point[] dataPoints;

private HashMap dataPointsMap = new HashMap();

private KDTree kdtree = new KDTree(2);

/** Stores the points in three categories based on the number of points in their neighborhood within EPS **/

ArrayList corePoints = new ArrayList();

ArrayList borderPoints = new ArrayList();

ArrayList noisePoints = new ArrayList();

/** Stores the final list of clusters **/

ArrayList clusters = new ArrayList();

/**

* DBSCAN Algorithm Execution Begins Here

* @param args The Initialization Parameters passed through Command Line Arguments

*/

public DBSCAN(String[] args) {

System.out.println("DBSCAN Clustering Algorithm");

System.out.println("---------------------------\n");

initializeParameters(args);

readDataPoints();

long beginTime = System.currentTimeMillis();

initializeKDTree();

classifyPoints();

buildClustersFromCorePoints();

assignBorderPointsToClusters();

long time = System.currentTimeMillis() - beginTime;

System.out.println(clusters.size() + " Clusters Found.");

System.out.println("\nThe Algorithm took " + time + " milliseconds to complete.");

System.out.println("\nPlease use GNUPlot to show the clusters by rendering the file using " +

"\"load plotdbscan.txt\" on the GNUPlot console");

showClusters();

}

private void initializeParameters(String[] args) {

dataFile = args[1];

totalNumberOfPoints = Integer.parseInt(args[2]);

minPointThreshHold = Integer.parseInt(args[3]);

if(args.length > 4) eps = Double.parseDouble(args[4]); // args[4] exists only when there are at least 5 arguments

else calculateEPS();

dataPoints = new Point[totalNumberOfPoints];

System.out.println("FileName: " + dataFile + "\t\tNumber of Points to be Clustered: " +

totalNumberOfPoints + "\n");

}

private void calculateEPS() {

/* A method could be implemented here to estimate EPS efficiently from the MinPointThreshHold

* specified by the user.

* This procedure has not been implemented, so the user is expected to pass EPS as one of the

* parameters.

* Finding EPS would require pre-processing the data and calculating an estimated value of

* EPS.

*/

}

/**

* Read and Initialize the Data Points from User Specified Input File

*/

private void readDataPoints() {

int pointIndex = 0;

FileReader fr = null;

try {

fr = new FileReader(dataFile);

BufferedReader in = new BufferedReader(fr);

String data = in.readLine();

while(data != null) {

StringTokenizer st = new StringTokenizer(data);

double x = Double.parseDouble(st.nextToken());

double y = Double.parseDouble(st.nextToken());

dataPoints[pointIndex] = new Point(x,y,pointIndex);

dataPointsMap.put(pointIndex, dataPoints[pointIndex]);

pointIndex++;

data = in.readLine();

}

in.close();

} catch(Exception e){

debug(e);

}

}

/**

* Initialize and build the KD Tree for the data points

*/

private void initializeKDTree() {

for(int i = 0; i < totalNumberOfPoints ; i++) {

try {

kdtree.insert(dataPoints[i].toDouble(), dataPoints[i].index);

} catch(Exception e) { debug(e); } // report failures (e.g. duplicate coordinates) instead of silently skipping the point

}

}

/**

* Returns the n nearest neighbors for a Point by looking up in the KD Tree where n = MinPoints

* @param point Point P

* @return

* Object[] Index of the Nearest Points to Point P

*/

private Object[] getNearestNeighbours(Point point) {

Object[] nearestPoints = null;

try {

nearestPoints = kdtree.nearest(point.toDouble(), minPointThreshHold);

} catch(Exception e) {

debug(e);

}

return nearestPoints;

}

/**

* Classify the data points into Core Points, Border Points and Noise Points based on the number of neighbors within EPS radius

*/

private void classifyPoints() {

for(int index = 0; index < totalNumberOfPoints; index++) {

Object[] nearestPoints = getNearestNeighbours(dataPoints[index]);

// Count how many of the MinPts nearest neighbours (the point itself included) lie within EPS

int satisfyingPoints = 0;

for(int i = 0; i < nearestPoints.length ; i++) {

int nearestPointIndex = (Integer)nearestPoints[i];

if(dataPoints[nearestPointIndex].calcDistanceFromPoint(dataPoints[index]) < eps) {

satisfyingPoints++;

}

else if(satisfyingPoints != 1) break;

}

if(satisfyingPoints == 1) noisePoints.add(dataPoints[index]);

else if(satisfyingPoints == minPointThreshHold) {

corePoints.add(dataPoints[index]);

dataPoints[index].isCorePoint = true;

}

else borderPoints.add(dataPoints[index]);

}

}

/**

* Creates a new Cluster and adds the point P

* When a core point cannot join any existing cluster, a new cluster is formed for it.

* @param p Point P

*/

private void createNewClusterAndAddPoint(Point p) {

Cluster cluster = new Cluster();

cluster.points.add(p);

p.cluster = cluster;

clusters.add(cluster);

}

/**

* Builds all possible clusters from the list of Core Points

*/

private void buildClustersFromCorePoints() {

for(int i = 0; i < corePoints.size() ; i++) {

Point p = (Point)corePoints.get(i);

if(i == 0) {

createNewClusterAndAddPoint(p);

continue;

}

boolean isAssigned = false;

for(int index = 0; index < clusters.size() ; index++) {

Cluster c = (Cluster)clusters.get(index);

if(c.isInEPSProximityFromAllPoints(p, eps)) {

c.points.add(p);

p.cluster = c;

isAssigned = true;

break;

}

}

if(isAssigned == false) {

createNewClusterAndAddPoint(p);

}

}

}

/**

* Returns the KD Tree Index of Nth Nearest Neighbor for a Point P

* @param point Point P

* @param n Nth Nearest Neighbor

* @return

* int KD Tree Index of Nth Nearest Neighbor for a Point P

*/

private int getNthNearestNeighbour(Point point, int n) {

int nearestPointIndex = 0;

try {

Object[] nearestNeighbours = kdtree.nearest(point.toDouble(), n);

nearestPointIndex = (Integer)nearestNeighbours[n-1];

} catch(Exception e) {

debug(e);

}

return nearestPointIndex;

}

/**

* Assigns all border points to the clusters formed from the list of core points in the buildClustersFromCorePoints method

*/

private void assignBorderPointsToClusters() {

for(int i = 0; i < borderPoints.size() ; i++) {

Point p = (Point)borderPoints.get(i);

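int j = 2;

Point q = dataPoints[getNthNearestNeighbour(p,j)];

// Walk outwards until a core point is found; stop once all points have been tried

while(!q.isCorePoint && j < totalNumberOfPoints){

j++;

q = dataPoints[getNthNearestNeighbour(p,j)];

}

if(!q.isCorePoint) continue; // no core point exists: leave p unassigned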

q.cluster.points.add(p);

p.cluster = q.cluster;

}

}

/**

* Shows all clusters generated

*/

private void showClusters() {

for(int i = 0; i < clusters.size() ; i++) {

Cluster c = (Cluster)clusters.get(i);

logCluster(c, "cluster" + i);

}

logOutliers("outliers");

logPlotScript(clusters.size());

}

/**

* Logs the cluster to a file

*/

private void logCluster(Cluster c, String filename) {

BufferedWriter out = getWriterHandle(filename);

try {

out.write("#\tX\tY\n");

for(int i = 0; i < c.size() ; i++) {

Point p = (Point)c.points.get(i);

out.write("\t" + p.x + "\t" + p.y + "\n");

}

out.flush();

out.close();

} catch(Exception e) {

debug(e);

}

}

/**

* Logs the Set of Outliers to the File

*/

private void logOutliers(String filename) {

BufferedWriter out = getWriterHandle(filename);

try {

out.write("#\tX\tY\n");

for(int i = 0; i < noisePoints.size() ; i++) {

Point p = (Point)noisePoints.get(i);

out.write("\t" + p.x + "\t" + p.y + "\n");

}

out.flush();

out.close();

} catch(Exception e) {

debug(e);

}

}

/**

* Writes a GNUPlot Script for the DBSCAN result to be shown.

*/

private void logPlotScript(int totalClusters) {

BufferedWriter out = getWriterHandle("plotdbscan.txt");

try {

setPlotStyle(out);

out.write("plot");

for(int i = 0; i < totalClusters ; i++) {

out.write(" \"cluster" + i + "\",");

}

out.write(" \"outliers\"");

out.flush();

out.close();

} catch(Exception e){

debug(e);

}

}

/**

* Returns a file writer handle given the filename

*/

private BufferedWriter getWriterHandle(String filename) {

BufferedWriter out = null;

try {

// Overwrite (append = false) so that re-running does not mix in results from earlier runs

FileWriter fw = new FileWriter(filename, false);

out = new BufferedWriter(fw);

} catch(Exception e) {

debug(e);

}

return out;

}

/**

* Sets the Plot Style for the GNUPlot Script

*/

private void setPlotStyle(BufferedWriter out) {

try {

out.write("reset\n");

out.write("set size ratio 2\n");

out.write("unset key\n");

out.write("set title \"DBSCAN\"\n");

} catch(Exception e) {

debug(e);

}

}

/**

* Prints the Exception to standard output.

* @param e Exception thrown

*/

private void debug(Exception e) {

e.printStackTrace(System.out);

}

}
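The DBSCAN code above is a variant: a point is core only if all of its MinPts nearest neighbours lie within EPS, a core point joins an existing cluster only if it is within EPS of every point already in that cluster, and each border point is attached to its nearest core point. For comparison, here is a brute-force Java sketch of the textbook DBSCAN of Ester et al. (1996), which grows a cluster from each unvisited core point through all density-reachable points. It is not the project's implementation; the class name, the O(n²) neighbour search (no KD Tree) and the `<= eps` test are choices made for this sketch.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

// Sketch of textbook DBSCAN with a brute-force region query (hypothetical names).
class TextbookDbscan {
    static final int NOISE = -1;
    static final int UNVISITED = -2;

    /** All points within eps of point i, including i itself (O(n) per query). */
    static List<Integer> regionQuery(double[][] pts, int i, double eps) {
        List<Integer> neighbours = new ArrayList<>();
        for (int j = 0; j < pts.length; j++) {
            if (Math.hypot(pts[i][0] - pts[j][0], pts[i][1] - pts[j][1]) <= eps) neighbours.add(j);
        }
        return neighbours;
    }

    /** Returns a cluster id (0, 1, ...) per point, or NOISE. */
    static int[] run(double[][] pts, double eps, int minPts) {
        int[] label = new int[pts.length];
        Arrays.fill(label, UNVISITED);
        int clusterId = 0;
        for (int i = 0; i < pts.length; i++) {
            if (label[i] != UNVISITED) continue;
            List<Integer> seeds = regionQuery(pts, i, eps);
            if (seeds.size() < minPts) {      // not a core point; may later be claimed as a border point
                label[i] = NOISE;
                continue;
            }
            label[i] = clusterId;
            Deque<Integer> queue = new ArrayDeque<>(seeds);
            while (!queue.isEmpty()) {
                int q = queue.poll();
                if (label[q] == NOISE) label[q] = clusterId;   // border point: joins but does not expand
                if (label[q] != UNVISITED) continue;
                label[q] = clusterId;
                List<Integer> qNeighbours = regionQuery(pts, q, eps);
                if (qNeighbours.size() >= minPts) queue.addAll(qNeighbours);  // q is core: keep growing
            }
            clusterId++;
        }
        return label;
    }

    public static void main(String[] args) {
        double[][] pts = {{0, 0}, {0, 1}, {1, 0}, {10, 10}, {10, 11}, {11, 10}, {50, 50}};
        System.out.println(Arrays.toString(run(pts, 1.5, 3))); // prints "[0, 0, 0, 1, 1, 1, -1]"
    }
}
```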

4. CURE Algorithm

Point.java

package cure;

import java.util.StringTokenizer;

/**

* Represents a point in 2-D space. Also stores the point's KD Tree index used for search.

* @version 1.0

* @author jmishra

*/

public class Point {

public double x;

public double y;

public int index;

public Point() {

}

public Point(double x, double y, int index) {

this.x = x;

this.y = y;

this.index = index;

}

public Point(Point point) {

this.x = point.x;

this.y = point.y;

this.index = point.index;

}

public double[] toDouble() {

double[] xy = {x,y};

return xy;

}

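public static Point parseString(String str) {

// Expects two whitespace-separated coordinates, "x y"; the index is left at 0 for the caller to set

StringTokenizer st = new StringTokenizer(str);

double x = Double.parseDouble(st.nextToken());

double y = Double.parseDouble(st.nextToken());

return new Point(x, y, 0);

}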

/**

* Calculates the Euclidean Distance from a Point t

* @param t Point t

* @return

* double Euclidean Distance from a Point t

*/

public double calcDistanceFromPoint(Point t) {

return Math.sqrt(Math.pow(x-t.x, 2) + Math.pow(y-t.y, 2));

}

public String toString() {

return "{" + x + "," + y + "}";

}

public boolean equals(Point t) {

return (x == t.x) && (y == t.y);

}

}

CompareCluster.java

package cure;

import java.util.Comparator;

/**

* Defines a class CompareCluster which helps the MinHeap (implemented using a PriorityQueue)

* to compare two clusters and order them in the heap.

*

* Two clusters are compared by the distance to their closest cluster. The cluster whose closest-pair

* distance is the lowest is stored at the root of the min heap.

* @version 1.0

* @author jmishra

*/

public class CompareCluster implements Comparator{

public int compare(Object CLUSTER1, Object CLUSTER2) {

Cluster cluster1 = (Cluster)CLUSTER1;

Cluster cluster2 = (Cluster)CLUSTER2;

if(cluster1.distanceFromClosest < cluster2.distanceFromClosest) {

return -1;

}

else if(cluster1.distanceFromClosest == cluster2.distanceFromClosest) {

return 0;

}

else return 1;

}

}
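`CompareCluster` turns `java.util.PriorityQueue` into a min heap keyed on `distanceFromClosest`. One property matters for `adjustHeap`: `PriorityQueue` does not reorder an element whose key is changed in place, which is why that method empties the heap and re-adds every cluster. The minimal Java sketch below uses a hypothetical `Node` class standing in for `Cluster`; it writes the same ordering with `Comparator.comparingDouble` and shows the remove, update, re-insert pattern for changing a single key.

```java
import java.util.Comparator;
import java.util.PriorityQueue;

// Sketch: min heap ordered by distanceFromClosest, with a safe key update (hypothetical names).
class HeapKeyUpdate {
    /** Hypothetical stand-in for cure.Cluster that keeps only the heap key. */
    static final class Node {
        final String name;
        double distanceFromClosest;

        Node(String name, double distanceFromClosest) {
            this.name = name;
            this.distanceFromClosest = distanceFromClosest;
        }
    }

    // Same ordering as CompareCluster: the smallest distanceFromClosest sits at the root.
    static final Comparator<Node> BY_CLOSEST =
            Comparator.comparingDouble(n -> n.distanceFromClosest);

    /** PriorityQueue does not re-sift an element mutated in place, so remove it, update, re-insert. */
    static void updateKey(PriorityQueue<Node> heap, Node node, double newDistance) {
        heap.remove(node);                   // linear search; Node does not override equals, so identity
        node.distanceFromClosest = newDistance;
        heap.add(node);                      // sift-up restores the heap order
    }

    /** Polls every element and returns their names in heap order. */
    static String drain(PriorityQueue<Node> heap) {
        StringBuilder order = new StringBuilder();
        while (!heap.isEmpty()) order.append(heap.poll().name);
        return order.toString();
    }

    public static void main(String[] args) {
        PriorityQueue<Node> heap = new PriorityQueue<>(BY_CLOSEST);
        Node a = new Node("a", 3.0);
        heap.add(a);
        heap.add(new Node("b", 1.0));
        heap.add(new Node("c", 2.0));
        updateKey(heap, a, 0.5);
        System.out.println(drain(heap)); // prints "abc"
    }
}
```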

Cluster.java

package cure;

import java.util.ArrayList;

/**

* Class Cluster represents a collection of points and its set of representative points.

* It also stores the distance from its closest neighboring cluster.

*

* @version 1.0

* @author jmishra

*/

public class Cluster {

public ArrayList rep = new ArrayList();

public ArrayList pointsInCluster = new ArrayList();

public double distanceFromClosest = 0;

public Cluster closestCluster;

public ArrayList closestClusterRep = new ArrayList();

public double computeDistanceFromCluster(Cluster cluster) {

double minDistance = Double.MAX_VALUE; // larger than any real distance

for(int i = 0; i<rep.size(); i++) {

for(int j = 0; j<cluster.rep.size() ; j++) {

Point p1 = (Point)rep.get(i);

Point p2 = (Point)cluster.rep.get(j);

double distance = p1.calcDistanceFromPoint(p2);

if(minDistance > distance) minDistance = distance;

}

}

return minDistance;

}

public int getClusterSize() {

return pointsInCluster.size();

}

public ArrayList getPointsInCluster() {

return pointsInCluster;

}

}

ClusterSet.java

package cure;

import java.io.*;

import java.util.*;

import edu.wlu.cs.levy.CG.KDTree;

/**

* Creates a set of clusters for a given number of data points, or reduces an existing set of clusters to a

* specified number of clusters, using CURE's hierarchical clustering algorithm.

*

* The ClusterSet uses 2 data structures. A KD Tree is initialized and used to store points across clusters.

* A min heap (java.util.PriorityQueue) stores the clusters and is used to merge them repeatedly. The min heap is

* rearranged at every step to bring the closest pair of clusters to the root and to update the closest-distance

* measures of all clusters.

*

* Please refer to the CURE hierarchical clustering algorithm for more details. This class works only with

* sampled, partitioned data or with an already formed set of clusters. The set of clusters can be computed

* on a remote machine, adding concurrency to the overall algorithm.

*

* @version 1.0

* @author jmishra

*/

public class ClusterSet {

int numberOfPoints;

Point[] points;

CompareCluster cc;

PriorityQueue heap;

KDTree kdtree;

int clustersToBeFound;

int numberofRepInCluster;

double shrinkFactor;

int newPointCount;

HashMap dataPointMap;

/**

* Initialize the Containers

* @param numberOfPoints Number of Data Points

* @param clustersToBeFound Clusters to be found after clustering

* @param numberOfRepInCluster Number of Representative Points for every Cluster

* @param shrinkFactor Shrink Factor for a Cluster

*/

public void initializeContainers(int numberOfPoints, int clustersToBeFound, int numberOfRepInCluster,

double shrinkFactor) {

this.numberOfPoints = numberOfPoints;

points = new Point[numberOfPoints];

cc = new CompareCluster();

heap = new PriorityQueue(1000,cc);

kdtree = new KDTree(2);

this.clustersToBeFound = clustersToBeFound;

this.numberofRepInCluster = numberOfRepInCluster;

this.shrinkFactor = shrinkFactor;

newPointCount = numberOfPoints;

}

/**

* Reduce the number of clusters to the specified numberOfClusters

*

* @param clusters Set of Clusters

* @param numberOfClusters Number of Clusters to be found

* @param numberOfRepInCluster Number of Representative Points in a Cluster

* @param shrinkFactor Shrink Factor for Representative Points in a new Cluster formed

* @param dataPointMap Data Point Map

* @param clusterMerge If true, the points of the given clusters are collected and the CURE parameters are stored for merging

*/

public ClusterSet(ArrayList clusters, int numberOfClusters, int numberOfRepInCluster, double

shrinkFactor, HashMap dataPointMap, boolean clusterMerge) {

numberOfPoints = 0;

for(int i = 0; i < clusters.size(); i++) {

numberOfPoints += ((Cluster)clusters.get(i)).getClusterSize();

}

points = new Point[numberOfPoints];

cc = new CompareCluster();

heap = new PriorityQueue(1000,cc);

kdtree = new KDTree(2);

int pointIndex = 0;

if(clusterMerge) {

for(int i = 0; i<clusters.size(); i++) {

Cluster cluster = (Cluster)clusters.get(i);

for(int j = 0; j<cluster.getClusterSize(); j++) {

points[pointIndex] = (Point)cluster.pointsInCluster.get(j);

pointIndex++;

}

}

clustersToBeFound = numberOfClusters;

this.numberofRepInCluster = numberOfRepInCluster;

this.shrinkFactor = shrinkFactor;

this.dataPointMap = dataPointMap;

}

buildKDTree();

buildHeapForClusters(clusters);

}

/**

* Build the heap for set of clusters specified

* @param clusters Set of Clusters

*/

public void buildHeapForClusters(ArrayList clusters) {

for(int i=0; i<clusters.size(); i++) {

heap.add((Cluster)clusters.get(i));

}

}

/**

* Creates a set of clusters from the given number of data points and other CURE parameters

* @param dataPoints Data Points to be clustered

* @param numberOfClusters Number of Clusters to be formed

* @param numberOfRepInCluster Number of Representative points in a new cluster

* @param shrinkFactor Shrink factor for Representative points

* @param dataPointMap The HashMap to store the data points

*/

public ClusterSet(ArrayList dataPoints, int numberOfClusters, int numberOfRepInCluster, double

shrinkFactor, HashMap dataPointMap) {

initializeContainers(dataPoints.size(), numberOfClusters, numberOfRepInCluster,

shrinkFactor);

initializePoints(dataPoints,dataPointMap);

buildKDTree();

buildHeap();

startClustering();

}

/**

* Initialize the data points

* @param dataPoints Data Points List

* @param dataPointMap Map of Data Points

*/

public void initializePoints(ArrayList dataPoints, HashMap dataPointMap) {

this.dataPointMap = dataPointMap;

Iterator iter = dataPoints.iterator();

int index = 0;

while(iter.hasNext()) {

Point point = (Point)iter.next();

points[index] = point;

index++;

}

}

/**

* Merge the given set of clusters using CURE's hierarchical clustering algorithm

* @return

* ArrayList Set of Merged Clusters

*/

public ArrayList mergeClusters() {

ArrayList mergedClusters = new ArrayList();

startClustering();

while(heap.size() != 0) {

mergedClusters.add(heap.remove());

}

return mergedClusters;

}

/**

* Gets all clusters present in the Min Heap

* @return

* Cluster[] Set of Clusters

*/

public Cluster[] getAllClusters() {

Cluster clusters[] = new Cluster[heap.size()];

int i = 0;

while(heap.size() != 0) {

clusters[i] = (Cluster)heap.remove();

i++;

}

return clusters;

}

/**

* Builds the KD Tree to store the data points

*/

public void buildKDTree() {

for(int i=0; i<numberOfPoints; i++) {

try {

kdtree.insert(points[i].toDouble(), points[i].index);

} catch(Exception e) {

debug(e);

}

}

}

/**

* Builds the initial min heap. Each point represents a cluster when the algorithm begins; this method creates each cluster and adds it to the heap.

*/

public void buildHeap() {

ArrayList clusters = new ArrayList();

HashMap pointCluster = new HashMap();

for(int i=0; i<numberOfPoints; i++) {

Cluster cluster = new Cluster();

cluster.rep.add(points[i]);

cluster.pointsInCluster.add(points[i]);

int nearestPoint = getNearestNeighbour(points[i]);

Point nearest = (Point)dataPointMap.get(nearestPoint);

cluster.distanceFromClosest = points[i].calcDistanceFromPoint(nearest);

//changed here from indexing to hashmap

cluster.closestClusterRep.add(nearestPoint);

clusters.add(cluster);

pointCluster.put(points[i].index,cluster);

}

for(int i = 0; i<clusters.size(); i++) {

Cluster cluster = (Cluster)clusters.get(i);

int closest = (Integer)cluster.closestClusterRep.get(0);

//cluster.closestCluster = (Cluster)clusters.get(closest);

cluster.closestCluster = (Cluster)pointCluster.get((Integer)closest);

heap.add(cluster);

}

}

/**

* Get the nearest neighbor for a given point

* @param point Point point

* @return

* int KD Tree index of the nearest neighbor

*/

public int getNearestNeighbour(Point point) {

int result = 0;

try {

Object[] nearestPoint = kdtree.nearest(point.toDouble(), 2);

result = (Integer)nearestPoint[1];

} catch(Exception e) {

debug(e);

result = -1;

}

return result;

}

/**

* Initiates the clustering. The stopping condition is reached when the size of the heap equals the number of clusters to be found.

* At every step two clusters are merged and the heap is rearranged. The representative points of the old clusters are deleted from

* the KD Tree and the representative points of the new cluster are added to it.

*/

public void startClustering() {

while(heap.size() > clustersToBeFound) {

Cluster minCluster = (Cluster)heap.remove();

Cluster closestCluster = minCluster.closestCluster;

heap.remove(closestCluster);

Cluster newCluster = merge(minCluster,closestCluster);

deleteAllRepPointsForCluster(minCluster);

deleteAllRepPointsForCluster(closestCluster);

insertAllRepPointsForCluster(newCluster);

newCluster.closestCluster = minCluster;

heap.add(newCluster);

adjustHeap(newCluster, minCluster, closestCluster);

}

}

/**

* Adjust the heap after the new merged cluster has been added

* @param newCluster The merged cluster

* @param oldcluster1 The Cluster 1 which was merged

* @param oldCluster2 The Closest Cluster, Cluster2, to Cluster 1 which was merged

*/

public void adjustHeap(Cluster newCluster, Cluster oldcluster1, Cluster oldCluster2) {

ArrayList clusters = new ArrayList();

int initialHeapSize = heap.size();

for(int i=0; i<initialHeapSize; i++) {

clusters.add(heap.remove());

}

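for(int i=0; i<clusters.size(); i++) {

Cluster cluster1 = (Cluster)clusters.get(i);

if(cluster1 != newCluster && cluster1.closestCluster != oldcluster1 && cluster1.closestCluster != oldCluster2) {

// Its closest cluster still exists, so it can only change if the new cluster is closer

double distance = cluster1.computeDistanceFromCluster(newCluster);

if(distance < cluster1.distanceFromClosest) {

cluster1.distanceFromClosest = distance;

cluster1.closestCluster = newCluster;

}

heap.add(cluster1);

continue;

}

// Its closest cluster was merged away (or it is the new cluster): rescan all other clusters

cluster1.distanceFromClosest = Double.MAX_VALUE;

for(int j=0; j<clusters.size(); j++) {

if(i==j) continue;

Cluster cluster2 = (Cluster)clusters.get(j);

double distance = cluster1.computeDistanceFromCluster(cluster2);

if(distance < cluster1.distanceFromClosest) {

cluster1.distanceFromClosest = distance;

cluster1.closestCluster = cluster2;

}

}

heap.add(cluster1);

}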

}

/**

* Insert all representative points of the cluster to the KD Tree

* @param cluster Merged Cluster

*/

public void insertAllRepPointsForCluster(Cluster cluster) {

ArrayList repPoints = cluster.rep;

for(int i = 0; i<repPoints.size(); i++) {

Point point = (Point)repPoints.get(i);

try {

kdtree.insert(point.toDouble(),point.index);

} catch(Exception e) {

//debug(e);

}

}

}

/**

* Delete all representative points of the cluster from the KD Tree

* @param cluster Cluster which got merged

*/

public void deleteAllRepPointsForCluster(Cluster cluster) {

ArrayList repPoints = cluster.rep;

for(int i = 0; i<repPoints.size(); i++) {

Point point = (Point)repPoints.get(i);

try {

kdtree.delete(point.toDouble());

} catch(Exception e) {

//debug(e);

}

}

}

/**

* Computes the mean point of the cluster

* @param cluster Cluster

* @return

* Point The Mean Point of the Cluster

*/

public Point computeMeanOfCluster(Cluster cluster) {

Point point = new Point();

for(int i=0; i<cluster.pointsInCluster.size(); i++) {

point.x += ((Point)cluster.pointsInCluster.get(i)).x;

point.y += ((Point)cluster.pointsInCluster.get(i)).y;

}

point.x /= cluster.pointsInCluster.size();

point.y /= cluster.pointsInCluster.size();

return point;

}

/**

* Merge two clusters. Calculate the new representative points and shrink them

* @param cluster1 Cluster 1 to be merged

* @param cluster2 Cluster 2 to be merged

* @return

* Cluster The Merged Cluster

*/

public Cluster merge(Cluster cluster1, Cluster cluster2) {

Cluster newCluster = new Cluster();

for(int i=0; i<cluster1.pointsInCluster.size(); i++) {

newCluster.pointsInCluster.add(cluster1.pointsInCluster.get(i));

}

for(int i=0; i<cluster2.pointsInCluster.size(); i++) {

newCluster.pointsInCluster.add(cluster2.pointsInCluster.get(i));

}

Point mean = computeMeanOfCluster(newCluster);

ArrayList tempset = new ArrayList();

for(int i=0; i<numberofRepInCluster; i++) {

double maxDist = 0;

double minDist = 0;

Point maxPoint = null;

for(int j=0; j<newCluster.pointsInCluster.size(); j++) {

Point p = (Point)newCluster.pointsInCluster.get(j);

// Skip points already chosen as scattered points

if(tempset.contains(p)) continue;

if(i==0) {

minDist = p.calcDistanceFromPoint(mean);

}

else {

minDist = computeMinDistanceFromGroup(p, tempset);

}

if(minDist >= maxDist) {

maxDist = minDist;

maxPoint = p;

}

}

// Fewer points than representatives: every point has already been chosen

if(maxPoint == null) break;

tempset.add(maxPoint);

}

for(int i=0; i<tempset.size(); i++) {

Point p = (Point) tempset.get(i);

Point rep = new Point();

rep.x = p.x + shrinkFactor*(mean.x - p.x);

rep.y = p.y + shrinkFactor*(mean.y - p.y);

//rep.index = newPointCount++;

rep.index = Cure.getCurrentRepCount();

newCluster.rep.add(rep);

}

return newCluster;

}

/**

* Computes the min distance of a point from the group of points

* @param p Point p

* @param group Group of points

* @return

* double The Minimum Euclidean Distance

*/

public double computeMinDistanceFromGroup(Point p, ArrayList group) {

double minDistance = Double.MAX_VALUE;

for(int i = 0; i< group.size(); i++) {

Point q = (Point)group.get(i);

if(p.equals(q)) continue;

double distance = p.calcDistanceFromPoint(q);

if(minDistance > distance) {

minDistance = distance;

}

}

// No other point in the group: report a distance of 0

if(minDistance == Double.MAX_VALUE) return 0;

else return minDistance;

}

/**

* Show the clusters formed

*/

public void showClusters() {

for(int i=0; i<clustersToBeFound; i++) {

Cluster cluster = (Cluster)heap.remove();

logCluster(cluster, "cluster" + i);

}

}

/**

* Debug hook for exceptions. Printing is currently disabled; uncomment the body to print the stack trace

* @param e Exception e

*/

public void debug(Exception e) {

//e.printStackTrace(System.out);

}

/**

* Logs the cluster to a file

* @param cluster Cluster

* @param filename Name of the file

*/

public void logCluster(Cluster cluster, String filename) {

FileWriter fw = null;

try {

fw = new FileWriter(filename, true);

BufferedWriter out = new BufferedWriter(fw);

out.write("#\tX\tY\n");

for(int j=0; j<cluster.pointsInCluster.size(); j++) {

Point p = (Point)cluster.pointsInCluster.get(j);

out.write("\t" + p.x + "\t" + p.y + "\n");

}

out.flush();

fw.close();

} catch(Exception e){

debug(e);

}

}

}
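The merge() method above chooses well-scattered representative points for a merged cluster and shrinks them toward its mean. The following is a minimal standalone sketch of that step, not the project's code: the RepresentativeSketch class and its Pt record are hypothetical. It tracks already-chosen points by index, so no point is picked twice (even with duplicate coordinates), and it caps the number of representatives at the cluster size.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical standalone sketch of CURE-style representative selection (not the project's code). */
final class RepresentativeSketch {

    /** Minimal 2-D point used only by this sketch. */
    record Pt(double x, double y) {
        double dist(Pt o) { return Math.hypot(x - o.x, y - o.y); }
    }

    /**
     * Picks up to c well-scattered points and shrinks each toward the mean by alpha.
     * The first point is the one farthest from the mean; each later point is the one
     * whose distance to the nearest already-chosen point is largest.
     */
    static List<Pt> representatives(List<Pt> points, int c, double alpha) {
        int n = points.size();
        double sx = 0, sy = 0;
        for (Pt p : points) { sx += p.x(); sy += p.y(); }
        Pt mean = new Pt(sx / n, sy / n);

        boolean[] chosen = new boolean[n];   // track by index, so duplicate points are handled too
        List<Pt> scattered = new ArrayList<>();
        for (int round = 0; round < Math.min(c, n); round++) {
            int best = -1;
            double bestDist = -1;
            for (int j = 0; j < n; j++) {
                if (chosen[j]) continue;
                Pt p = points.get(j);
                double d = Double.POSITIVE_INFINITY;
                if (scattered.isEmpty()) {
                    d = p.dist(mean);
                } else {
                    for (Pt q : scattered) d = Math.min(d, p.dist(q));
                }
                if (d > bestDist) { bestDist = d; best = j; }
            }
            chosen[best] = true;             // always found: fewer than n points are chosen so far
            scattered.add(points.get(best));
        }

        List<Pt> reps = new ArrayList<>();
        for (Pt p : scattered) {
            // rep = p + alpha * (mean - p): alpha = 0 keeps p, alpha = 1 collapses onto the mean
            reps.add(new Pt(p.x() + alpha * (mean.x() - p.x()), p.y() + alpha * (mean.y() - p.y())));
        }
        return reps;
    }
}
```

With alpha set to shrinkFactor, the final loop mirrors the rep.x / rep.y update in merge(). For the four corners of the square from (0,0) to (2,2) with c = 2 and alpha = 0.5, the sketch picks (0,0) and then the opposite corner (2,2), and shrinks them to (0.5,0.5) and (1.5,1.5).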

Cure.java

package cure;

import java.io.*;

import java.util.*;

/**

* CURE Clustering Algorithm

* The algorithm follows the six steps specified in the original paper by Guha et al.

*

* @version 1.0

* @author jmishra

*/

public class Cure {

/** The Input Parameters to the algorithm **/

private String dataFile;

private int totalNumberOfPoints;

private int numberOfClusters;

private int minRepresentativeCount;

private double shrinkFactor;

private double requiredRepresentationProbablity;

private int numberOfPartitions;

private int reducingFactorForEachPartition;

private Point[] dataPoints;

private ArrayList outliers;

private HashMap dataPointsMap;

private static int currentRepAdditionCount;

private Hashtable integerTable;

public Cure(String[] args) {

System.out.println("CURE Clustering Algorithm");

System.out.println("-------------------------\n");

initializeParameters(args);

readDataPoints();

long beginTime = System.currentTimeMillis();

int sampleSize = calculateSampleSize();

ArrayList randomPointSet = selectRandomPoints(sampleSize);

ArrayList[] partitionedPointSet = partitionPointSet(randomPointSet);

ArrayList subClusters = clusterSubPartitions(partitionedPointSet);

if(reducingFactorForEachPartition >= 10) {

eliminateOutliersFirstStage(subClusters, 1);

}

else {

eliminateOutliersFirstStage(subClusters, 0);

}

ArrayList clusters = clusterAll(subClusters);

clusters = labelRemainingDataPoints(clusters);

long time = System.currentTimeMillis() - beginTime;

System.out.println("The Algorithm took " + time + " milliseconds to complete.");

System.out.println("\nPlease use gnuplot to show the clusters by entering \"load 'plotcure.txt'\" at the gnuplot console.");

showClusters(clusters);

}

/**

* Initializes the Parameters

* @param args The Command Line Argument

*/

private void initializeParameters(String[] args) {

if(args.length == 0) {

dataFile = "spaeth2_05.txt";

totalNumberOfPoints = 59;

numberOfClusters = 5;

minRepresentativeCount = 6;

shrinkFactor = 0.5;

requiredRepresentationProbablity = 0.1;

numberOfPartitions = 2;

reducingFactorForEachPartition = 2;

}

else {

dataFile = args[1];

totalNumberOfPoints = Integer.parseInt(args[2]);

numberOfClusters = Integer.parseInt(args[3]);

minRepresentativeCount = Integer.parseInt(args[4]);

shrinkFactor = Double.parseDouble(args[5]);

requiredRepresentationProbablity = Double.parseDouble(args[6]);

numberOfPartitions = Integer.parseInt(args[7]);

reducingFactorForEachPartition = Integer.parseInt(args[8]);

}

dataPoints = new Point[totalNumberOfPoints];

dataPointsMap = new HashMap();

currentRepAdditionCount = totalNumberOfPoints;

integerTable = new Hashtable();

outliers = new ArrayList();

}

/**

* Reads the data points from file

*/

private void readDataPoints() {

int pointIndex = 0;

FileReader fr = null;

try {

fr = new FileReader(dataFile);

BufferedReader in = new BufferedReader(fr);

String data = in.readLine();

while(data != null) {

StringTokenizer st = new StringTokenizer(data);

double x = Double.parseDouble(st.nextToken());

double y = Double.parseDouble(st.nextToken());

dataPoints[pointIndex] = new Point(x,y,pointIndex);

dataPointsMap.put(pointIndex, dataPoints[pointIndex]);

pointIndex++;

data = in.readLine();

}

in.close();

} catch(Exception e){

debug(e);

}

}

/**

* Calculates the sample size from the Chernoff bound given in the CURE paper, with f = 0.5 and all clusters assumed to have the equal size N/k

* @return

* int The Sample Data Size to be worked on

*/

private int calculateSampleSize() {

return (int)((0.5 * totalNumberOfPoints) + (numberOfClusters *

Math.log10(1/requiredRepresentationProbablity)) + (numberOfClusters *

Math.sqrt(Math.pow(Math.log10(1/requiredRepresentationProbablity), 2) +

(totalNumberOfPoints/numberOfClusters) * Math.log10(1/requiredRepresentationProbablity))));

}

/**

* Select random points from the data set

* @param sampleSize The sample size selected

* @return

* ArrayList The Selected Random Points

*/

private ArrayList selectRandomPoints(int sampleSize) {

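ArrayList randomPointSet = new ArrayList();

// Never draw more distinct points than exist, otherwise the rejection loop below never terminates

sampleSize = Math.min(sampleSize, totalNumberOfPoints);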

Random random = new Random();

for(int i=0; i<sampleSize; i++) {

int index = random.nextInt(totalNumberOfPoints);

if(integerTable.containsKey(index)) {

i--; continue;

}

else {

Point point = dataPoints[index];

randomPointSet.add(point);

integerTable.put(index, "");

}

}

return randomPointSet;

}

/**

* Partition the sampled data points to p partitions (p = numberOfPartitions)

* @param pointSet Sample data point set

* @return

* ArrayList[] Data Set Partitioned Sets

*/

private ArrayList[] partitionPointSet(ArrayList pointSet) {

ArrayList partitionedSet[] = new ArrayList[numberOfPartitions];

Iterator iter = pointSet.iterator();

for(int i = 0; i < numberOfPartitions - 1 ; i++) {

partitionedSet[i] = new ArrayList();

int pointIndex = 0;

while(pointIndex < pointSet.size() / numberOfPartitions) {

partitionedSet[i].add(iter.next());

pointIndex++;

}

}

partitionedSet[numberOfPartitions - 1] = new ArrayList();

while(iter.hasNext()) {

partitionedSet[numberOfPartitions - 1].add(iter.next());

}

return partitionedSet;

}

/**

* Clusters each partition down to n/(pq) clusters, where n = totalNumberOfPoints, p = numberOfPartitions and q = reducingFactorForEachPartition

* @param partitionedSet Data Point Set

* @return

* ArrayList Clusters formed

*/

private ArrayList clusterSubPartitions(ArrayList partitionedSet[]) {

ArrayList clusters = new ArrayList();

int numberOfClusterInEachPartition = totalNumberOfPoints / (numberOfPartitions *

reducingFactorForEachPartition);

for(int i=0 ; i<partitionedSet.length; i++) {

ClusterSet clusterSet = new

ClusterSet(partitionedSet[i],numberOfClusterInEachPartition, minRepresentativeCount, shrinkFactor,

dataPointsMap);

Cluster[] subClusters = clusterSet.getAllClusters();

for(int j=0; j<subClusters.length; j++) {

clusters.add(subClusters[j]);

}

}

return clusters;

}

/**

* Eliminates outliers after pre-clustering

* @param clusters Clusters present

* @param outlierEligibilityCount Clusters with at most this many points are treated as outliers

*/

private void eliminateOutliersFirstStage(ArrayList clusters, int outlierEligibilityCount) {

Iterator iter = clusters.iterator();

ArrayList clustersForRemoval = new ArrayList();

while(iter.hasNext()) {

Cluster cluster = (Cluster) iter.next();

if(cluster.getClusterSize() <= outlierEligibilityCount) {

updateOutlierSet(cluster);

clustersForRemoval.add(cluster);

}

}

while(!clustersForRemoval.isEmpty()) {

Cluster c = (Cluster)clustersForRemoval.remove(0);

clusters.remove(c);

}

}

/**

* Merges all remaining clusters using CURE's hierarchical clustering algorithm until the specified number of clusters remain.

* @param clusters Pre-clusters formed

* @return

* ArrayList Set of clusters

*/

private ArrayList clusterAll(ArrayList clusters) {

ClusterSet clusterSet = new ClusterSet(clusters, numberOfClusters, minRepresentativeCount,

shrinkFactor, dataPointsMap, true);

return clusterSet.mergeClusters();

}

/**

* Assigns every data point that was not part of the sample to the cluster owning the nearest representative point

* @param clusters Set of clusters

* @return

* ArrayList Modified clusters

*/

private ArrayList labelRemainingDataPoints(ArrayList clusters) {

for(int index = 0; index < dataPoints.length; index++) {

if(integerTable.containsKey(index)) continue;

Point p = dataPoints[index];

double smallestDistance = Double.MAX_VALUE;

int nearestClusterIndex = -1;

for(int i = 0; i < clusters.size(); i++) {

ArrayList rep = ((Cluster)clusters.get(i)).rep;

for(int j=0; j<rep.size(); j++) {

double distance = p.calcDistanceFromPoint((Point)rep.get(j));

if(distance < smallestDistance) {

smallestDistance = distance;

nearestClusterIndex = i;

}

}

}

if(nearestClusterIndex != -1) {

((Cluster)clusters.get(nearestClusterIndex)).pointsInCluster.add(p);

}

}

return clusters;

}

/**

* Update the outlier set for the clusters which have been identified as outliers

* @param cluster Outlier Cluster

*/

private void updateOutlierSet(Cluster cluster) {

ArrayList outlierPoints = cluster.getPointsInCluster();

Iterator iter = outlierPoints.iterator();

while(iter.hasNext()) {

outliers.add(iter.next());

}

}

private void debug(Exception e) {

//e.printStackTrace(System.out);

}

/**

* Returns the next representative index, so that newly added representative points do not conflict with the indices already stored in the KD tree

* @return

* int Next representative count

*/

public static int getCurrentRepCount() {

return ++currentRepAdditionCount;

}

public void showClusters(ArrayList clusters) {

for(int i=0; i<clusters.size(); i++) {

Cluster cluster = (Cluster)clusters.get(i);

logCluster(cluster, "cluster" + i);

}

logOutlier();

logPlotScript(clusters.size());

}

private BufferedWriter getWriterHandle(String filename) {

BufferedWriter out = null;

try {

// Overwrite instead of appending, so that output from a previous run is not mixed in

FileWriter fw = new FileWriter(filename, false);

out = new BufferedWriter(fw);

} catch(Exception e) {

debug(e);

}

return out;

}

private void closeWriterHandle(BufferedWriter out) {

try {

out.flush();

out.close();

} catch(Exception e) {

debug(e);

}

}

private void logCluster(Cluster cluster, String filename) {

BufferedWriter out = getWriterHandle(filename);

try {

out.write("#\tX\tY\n");

for(int j=0; j<cluster.pointsInCluster.size(); j++) {

Point p = (Point)cluster.pointsInCluster.get(j);

out.write("\t" + p.x + "\t" + p.y + "\n");

}

} catch(Exception e){

debug(e);

}

closeWriterHandle(out);

}

private void logOutlier() {

BufferedWriter out = getWriterHandle("outliers");

try {

out.write("#\tX\tY\n");

for(int j=0; j<outliers.size(); j++) {

Point p = (Point)outliers.get(j);

out.write("\t" + p.x + "\t" + p.y + "\n");

}

} catch(Exception e){

debug(e);

}

closeWriterHandle(out);

}

private void logPlotScript(int totalClusters) {

BufferedWriter out = getWriterHandle("plotcure.txt");

try {

setPlotStyle(out);

out.write("plot");

for(int i = 0; i< totalClusters; i++) {

out.write(" \"cluster" + i + "\",");

}

out.write(" \"outliers\"\n");

} catch(Exception e){

debug(e);

}

closeWriterHandle(out);

}

private void setPlotStyle(BufferedWriter out) {

try {

out.write("reset\n");

out.write("set size ratio 2\n");

out.write("unset key\n");

out.write("set title \"CURE\"\n");

} catch(Exception e) {

debug(e);

}

}

}
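calculateSampleSize() above hard-codes one instance of the Chernoff-bound sample size from the CURE paper, which as we read it is s = fN + (N/u) log(1/delta) + (N/u) sqrt(log(1/delta)^2 + 2fu log(1/delta)), where u is the size of the cluster that must be represented and f is the fraction of it that should appear in the sample. The following is a minimal sketch with f and u exposed as parameters. The SampleSizeSketch class is hypothetical, and it deliberately differs from the project code in three ways: it uses the natural logarithm of the bound's derivation where the listing uses Math.log10, it rounds up instead of truncating, and it clamps the result to N so that the sample can never exceed the data set.

```java
/** Hypothetical standalone version of the CURE sample-size bound (not the project's code). */
final class SampleSizeSketch {

    /**
     * Minimum sample size so that, with probability at least 1 - delta, a cluster of
     * u points contributes at least f * u points to the sample:
     * s = f*n + (n/u)*L + (n/u)*sqrt(L^2 + 2*f*u*L), where L = ln(1/delta).
     * The result is rounded up and clamped to n, because a sample cannot contain
     * more distinct points than the data set has.
     */
    static int sampleSize(int n, double u, double f, double delta) {
        double l = Math.log(1.0 / delta);   // natural logarithm
        double ratio = n / u;               // equals the number of clusters if all have size u
        double s = f * n + ratio * l + ratio * Math.sqrt(l * l + 2.0 * f * u * l);
        return (int) Math.min(n, Math.ceil(s));
    }
}
```

With f = 0.5, u = N/k and Math.log10 in place of Math.log, the unclamped expression reduces to the one in calculateSampleSize(). For the default parameters (59 points, 5 clusters, delta = 0.1), the natural-log version already asks for roughly 70 points, more than the 59 available, which is why the clamp matters.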

KD – Tree Implementation

An open-source KD-tree implementation, distributable under the GNU General Public License, has been used.

Please refer to http://www.cs.wlu.edu/~levy/software/kd/ for the source and executable files.

Please add kd.jar to the classpath to run the CURE and DBSCAN packages.

The Java documentation for the KD-tree implementation is available at http://www.cs.wlu.edu/~levy/software/kd/doc/.
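The CURE and DBSCAN packages rely on kd.jar for spatial lookups. That library's API is not reproduced in this report, so the following is only a generic sketch of how a 2-D KD-tree answers a nearest-neighbour query. The KdTree2D class and its insert/nearest methods are hypothetical and are not the library's interface.

```java
/** Hypothetical minimal 2-D KD-tree (not the API of kd.jar). */
final class KdTree2D {

    private static final class Node {
        final double[] key;
        final int id;
        Node left, right;
        Node(double[] key, int id) { this.key = key; this.id = id; }
    }

    private Node root;
    private Node best;        // best match found during the current query
    private double bestDist;  // its squared distance to the query point

    /** Inserts a point; the splitting axis alternates x, y, x, ... with depth. */
    void insert(double[] key, int id) { root = insert(root, key, id, 0); }

    private Node insert(Node node, double[] key, int id, int depth) {
        if (node == null) return new Node(key, id);
        int axis = depth % 2;
        if (key[axis] < node.key[axis]) node.left = insert(node.left, key, id, depth + 1);
        else node.right = insert(node.right, key, id, depth + 1);   // ties go right
        return node;
    }

    /** Returns the id of the stored point nearest to target, or -1 if the tree is empty. */
    int nearest(double[] target) {
        best = null;
        bestDist = Double.POSITIVE_INFINITY;
        search(root, target, 0);
        return best == null ? -1 : best.id;
    }

    private void search(Node node, double[] target, int depth) {
        if (node == null) return;
        double dx = node.key[0] - target[0], dy = node.key[1] - target[1];
        double d = dx * dx + dy * dy;
        if (d < bestDist) { bestDist = d; best = node; }
        int axis = depth % 2;
        double diff = target[axis] - node.key[axis];
        Node near = diff < 0 ? node.left : node.right;
        Node far = diff < 0 ? node.right : node.left;
        search(near, target, depth + 1);
        // The far side can only hold a closer point if the splitting line is nearer than the best match.
        if (diff * diff < bestDist) search(far, target, depth + 1);
    }
}
```

labelRemainingDataPoints() in Cure.java scans every representative of every cluster for each unsampled point; a tree such as this one, built over the representative points, is one way that lookup could be sped up.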