Title III Grant Application

advertisement

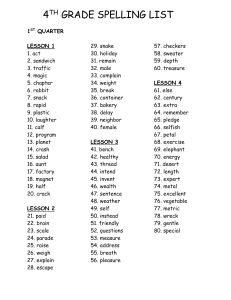

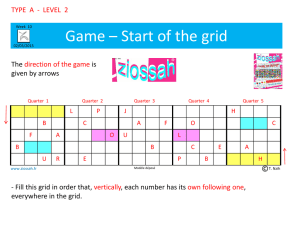

Proposed by Susan Stearns

Course Description:

The course under proposal is our undergraduate research methods course (CMST470), but the project will also apply to our graduate quantitative research methods course (CMST521). The undergraduate course is required of all our senior Bachelor of Science students and is an option for Bachelor of Arts students. It is typically taught once in one year (in the Spring) and twice the next year, alternating between one and two sections from year to year. There are 30 to 33 students per section. I have taught the course once or twice a year for the last thirteen years, excluding my sabbatical year.

This course focuses on research methods terminology and on two methods: survey and experimental design. The aim of the course is for students to become critical consumers of survey and experimental research and to be capable of conducting basic survey and experimental research. I have chosen this course because our class sizes have grown in recent years, and I’ve had to change the course to accommodate the sheer number of students. I think there are more effective ways to change the course so that my students can develop better critical thinking skills. Specifically, I think offering students more opportunities to practice and apply research methods will allow this.

My students need more opportunities for application in the following three areas. After each area I give examples of the type of activities I would like to create:

1. Being able to check their vocabulary, and going beyond this to understanding the interconnections among all the new words (182 as of now) introduced in this course.

a. The course is currently structured into four units, with a vocabulary list for each unit.
I would like to have basic questions for each vocabulary list that combine two multiple choice formats. In the first, students are given a definition and asked which term fits it. In the second, students are given a term and asked to choose the best definition. With both formats, I would like the student to be told whether the answer is right or wrong. If the answer is wrong, several definitions of the term will appear. At that point, I would like the program to prevent students from continuing until a few seconds have passed, so that they are forced to read the various definitions of the term. A timed format like this would discourage students from skipping past a word they don’t truly know. It would also expose them to the many ways a term can be defined. I don’t believe in teaching one “book definition” of a term. I want my students to know the “gist” of a term, not to memorize it by rote. Basically, this activity is based on redundancy theory, in an attempt to help my students learn these vocabulary terms. I’m hoping this is possible with the branching abilities of RoboDemo.

b. I think this second option may somehow be possible, although Patrick Lordan has let me know that he thinks RoboDemo probably cannot do it. I’m not giving up hope that we might find a way, so I will go ahead and explain an activity I’ve used to get students to work at a higher cognitive level. This quarter I experimented with flash cards and poker. Students created their own flash cards, and then we played “poker” with them the day before the exam. With this method, I am after higher-order cognitive demands. First, students must know the definitions of terms to be able to play this type of poker. The object of the game is to create anything from two of a kind to five of a kind (students are dealt five flash cards).
They may also create full houses (e.g., a two of a kind along with a three of a kind). The key is that they must show how the cards they are dealt are connected. In class, they do this by explaining the connections aloud to their group peers. If the group accepts the explanation, their hand stands. Online, I’m thinking of two levels of “poker.” Level one is the easier of the two. Students are dealt a predetermined hand of five terms with their definitions, and then they can create different options and see the responses I have written for them. Level two is harder because students are dealt only five terms, with no definitions, and then have to see what options they can create. I’m hoping this kind of branching will be possible with some other program, either one the TLC staff become aware of or a new one that comes on the market.

c. Later, I would like to design something that helps students see how some of the terms from the four sections of the course are interconnected. I’m thinking this could be used as a review before the final exam. This idea is still rather vague in my mind. The issue is that terms in each section of the course revolve around particular concepts. For example, the concept of validity is extremely important in all forms of quantitative research methods. It asks, “Are you studying what you really think you are studying?” Many other specific terms also address this concept. Somehow I would like to develop something through which students begin to see these interconnections across all four units of the course.

2. Experimental design research scenarios that let students know whether their analyses are correct on three points. The first is what the independent and dependent variables are. The second is the potential problems with the research (internal and external invalidity). The third is how they could “fix” those problems by redesigning the research.

a. This first type is something I currently do with my students during class time.
They go home with a prepared scenario (see the scenario at the end of this proposal) and respond to particular research methodological questions. The difference would be that I would put the questions into multiple choice format and have the students select the correct option. Again, if they choose an incorrect option, there would be an educational moment with an explanation of why the answer is incorrect. And again, I would love a timed device that forces students to remain on the wrong answer for a moment or two. That way they would read the explanations of why it is incorrect and learn from them.

b. The second type of scenario is an activity I used to do with students, but the packets of information became too worn from use to keep using. Also, putting them online would make every packet available to all students at the same time. I would like to scan articles from the Spokesman Review and from magazines familiar to the students (e.g., Shape, Prevention, etc.). Students would read an article and then choose, from a list of terms, a term that the article addresses (I’ve already started collecting articles for this purpose!). The branching option in RoboDemo will work well here. Whichever term students choose will lead them to a list of explanations of why that term is relevant to the article. They will then be returned to the original list of terms to see how some of the other terms may or may not be appropriately associated with the article. This will allow students to see how research methodological terms are constantly being addressed in the everyday press.

3. Survey design research scenarios that let students know whether their analyses are correct on three points. The first is what the independent and dependent variables are. The second is the potential problems with the research (internal and external invalidity). The third is how they could “fix” those problems by redesigning the research.

a. Same as 2a, but the focus would be on a sample survey. See the example survey at the end of this proposal. This example already has multiple choice questions, but the branching has not been done (it is taken from my current exam in the course).

b. Same as 2b above, but the focus would be on survey research as its results are presented in newspaper articles and magazines. For example, last week the Spokesman Review reported that Robinson Research, here in Spokane, had just surveyed a little over 400 subjects about issues regarding the Spokane Transit System. They reported a 4.9% error term (margin of error) for the study. My students have been taught about error terms and surveys. If they apply that knowledge to the results reported in the paper, they would quickly see that Robinson was paid a lot of money to tell us nothing. Once the margin of error is applied to each percentage, the resulting ranges for the categories all overlap. So there is actually no demonstrable difference among the percentages reported as “opinions.” Again, I would walk students through the math using multiple choice and branching techniques. But the key, once again, is to show students how this information changes the way they interpret the article. The article’s writer suggests that the reported opinions differ, because the writer clearly doesn’t understand how to interpret the margin of error.

My students need this additional “homework” to better understand the course material. Specifically, they need the redundancy of multiple examples of each type mentioned above, and of other types the Title III staff may help me with.

Also, since my original meeting with Patrick Lordan, I have asked the students in this quarter’s research methods course whether they thought this idea would enhance their learning. They were eager. At first there was a misunderstanding: my students thought I already had this developed, and they wanted access immediately.
I clarified that I meant this was for future students, and they still thought it would be great. They specifically wanted more examples to work with and ponder. They wanted to “challenge” their knowledge. They asked whether I could create more advanced levels for students who were ready for them, while keeping more standard options for those who struggle with this type of critical thinking. They also liked the fact that it would be available to them 24 hours a day, 7 days a week. I was surprised by how overwhelmingly enthusiastic they were, even when I reiterated that this would take me until next year’s class to develop. They thought it would be very beneficial to future students.

Project Description:

I am proposing the creation of a number of activities (see the descriptions above) for students to respond to and learn from. I have some basic ideas for what I am proposing, but I also want to learn about options that I probably don’t even know exist at this time. I plan for my students to use this technology as something like a “lab.” If I use it in class, it will be to show students how to progress through a unit before they work with the programs. I do not see myself working with this outside of class, other than to respond to student email questions and keep track of how successful students are with the programs. I would like students with slow modems to be able to have a CD of these activities, but I will not be developing one in the initial stages of this project. Patrick has informed me that Blackboard’s ability to track students who use an associated CD is minimal. During the initial assessment of these activities, being able to track how my students use them matters more to me than giving students faster interaction with their computers.
Later, however, after revisions, a CD version may make the activities more enjoyable for students to use (because of its faster speed).

I plan for students to use this material outside of class. My past work with Blackboard has shown me that students value having access 24 hours a day, seven days a week, and my current research methods students also mentioned this as a benefit. I’m hoping to develop enough scenarios and vocabulary programs that students will have required homework and also the option of additional applications. These would improve their knowledge and critical thinking skills specifically related to research methods. After my initial discussion with Patrick Lordan, I’m thinking that RoboDemo may be the tool I want to use. When students answer a question correctly, they would be told so. When they do not, it would enter them into a mini tutorial or educational moment.

Goals and Objectives for the Project:

My goals are for students:
1. To better learn the course vocabulary.
2. To better understand the connections between the vocabulary terms.
3. To better understand survey design.
4. To more effectively evaluate survey design.
5. To better understand experimental design.
6. To more effectively evaluate experimental design.
7. To understand how valuable their new knowledge is for evaluating research as presented in everyday media such as newspapers and magazines.
8. To be somewhat comfortable with their research methodological knowledge.

Please note that in the goals and objectives above, I have listed everything I want to do. My overall goals and objectives have not changed. Through discussions with Patrick Lordan, however, I am now aware that I may need to scale back my objectives to fit the amount of time I have for this project. I think I am capable of doing the vocabulary for numbers 1, 3, & 5 and a limited number of activities for numbers 4 & 6 above.
Number 2 is more difficult, depending on the ideas that come up when I brainstorm further with TLC staff and others. At this time, activities like the poker game may not be possible to put in this format. I do not yet have another idea for reaching the higher-level cognition that #2 demands, so I imagine this will be more time consuming. Number 8 is the next most demanding, simply because students need many examples to respond to before “comfortability” occurs, which means many activities for numbers 4 & 6 above. Students need multiple examples of each type before they have enough repetition with each type of concept for this to occur.

But #7 might be doable in the time available, depending on how my Winter quarter Chair duties go. I’m already keeping a file of articles, so that part of the work will be done by Winter. Much time remains to be spent, however, developing questions that focus students on particular aspects of the articles so they work out the methodological issues, and those questions and their answers still have to be written. So if my Chair duties go smoothly, I will also be able to do this.

I note this because I have been cautioned that this is a large task, but I have wanted to do it for years. So, first, my motivation is high. Second, I teach only 18 credits a year; the other half of my load is Chairing. I teach 8 credits in the Fall and 5 in both Winter and Spring. I have requested this grant so that I can be released from all 5 credits in Winter. My time not spent Chairing (and Winter is a slow quarter for Chairing) can then be devoted to this project. Additionally, I will spend the Spring quarter teaching the course in which I propose to implement this technology, though the end of Spring is a very busy time for Chairs. Though I’m busy in Spring, I will be focused on this course.
Finally, the revision stage is proposed for the following Fall. I will again be Chairing then, but I will teach only a 3-credit Introduction to Graduate Studies course. So I am doing my best to lighten my teaching load to make time for this project.

Assessment Plan:

My primary plan for assessing the objectives above is to compare student test scores with those of past classes. This is the one class in which I use the same, or nearly the same, exams each time I teach the course. I think this would allow me to assess objectives one through six. Objectives seven and eight need to be assessed separately. Objective seven could be evaluated with an essay question built around a newspaper or magazine article. Objective eight needs to be assessed with a qualitative question or series of questions. A student satisfaction survey would also be useful. It would assess how comfortable students are with their knowledge of research methods and how satisfied they are with the interactive format for learning. Since objectives one through seven will be assessed through the exams, I don’t think additional faculty members are necessary. But if the TLC staff would like to help assess objective eight (to reduce possible bias), that would be fine.

From a technological perspective, I also think it would be valuable to let Blackboard track how often these scenarios are used. The optional scenarios and optional vocabulary applications may be particularly important to track. A few qualitative questions about why students did or did not use the optional applications may provide additional information to guide redesign. Another good idea (from Patrick) is to begin assessment early, with a measure of how students are using the activities (in addition to Blackboard tracking).
I think this measure should also ask students what would help them and whether anything is hindering their interaction with these activities.

______________________________

Example One – Experimental Research Design Scenario (Questions to be changed to multiple choice for this proposed project)

S. A. STEARNS

Covert-Tower, I. V. (1999). Teaching methods for apprehensives: Conventional wisdom isn't always wise. Unpublished manuscript, Pennsylvania State University.

ABSTRACT

A major communication problem faced by many college students is communication apprehension, or fear of public speaking. Two methods of helping people to overcome communication apprehension have been proposed: one method ("desensitization") involves creating a comfortable atmosphere in which students can be led painlessly through a series of small steps toward public speaking; the other method ("forced confrontation") involves forcing students to confront their fears all at once by requiring them to give a series of formal speeches to large audiences, a total of 6 speeches in 10 weeks. The Communication Department, in an effort to provide the best possible instruction in public speaking, designed a study to compare the two proposed methods of instruction, as well as the traditional method of teaching the course: lectures on the principles of public speaking combined with moderate practice, 3 speeches over 10 weeks. 150 students who enrolled for Comm 115 were randomly assigned to one of three sections, all meeting at the same time of day. The 50 students in Section A got the desensitization training. The 50 students in Section B got forced confrontation. And the 50 students in Section C got the traditional course. On the first day of class, students in all three sections filled out the 24-question version of the Personal Report of Communication Apprehension (PRCA-24); average scores for the three groups were nearly identical.
By the end of the term, when students again filled out the PRCA-24, average scores for the three sections were significantly different. The 48 students in Section A had the highest average score (the most anxiety). The 40 students who completed Section C had the next highest average, but showed a good bit of improvement for the quarter. The 30 students in Section B had the lowest average by far (the least anxiety). Based on these results, the Communication Department decided that all sections would use the forced confrontation method, since it was obviously the most effective in eliminating communication apprehension. (Example acquired from S. A. Jackson)

Questions:
1. State the hypothesis or research question.
2. Name the independent variable(s) and dependent variable(s); also name the levels of these variables.
3. What is the research design being used? Draw it too!
4. Are there any internal validity problems?
5. Who should this study be generalized to? (This is a question dealing with external validity.)

____________________________

Example Number Two – Survey Example (Note: Branching and explanations of incorrect answers still to be done)

The following mini-survey applies to questions 21-24. This survey was given to people who claimed to be TV viewers.

1. What type of TV programming do you prefer?
( ) realistic drama (e.g., Judging Amy)
( ) unrealistic situation comedy (e.g., The Simpsons)

2. Why do you watch television?

3. When do you watch television?
6 a.m. - 9 a.m.      often   occasionally   rarely   never
9 a.m. - 6 p.m.      often   occasionally   rarely   never
8 p.m. - 11 p.m.     often   occasionally   rarely   never
11 p.m. - 6 a.m.     often   occasionally   rarely   never

21. Item #3 is an example of:
a. a contingency question.
b. a matrix format.
c. a double barreled question.
d. an open response format.

22. Item #3 is an example of:
a. a poor question because it is not mutually exclusive.
b. a poor question because it is not exhaustive.
c. both "a" and "b."
d. a good question.

23. Item #2 is an example of:
a. a closed response format.
b. an open response format.
c. a double-barreled question.
d. a leading question.

24. Item #1 is:
a. a poor question because it is double-barreled.
b. a poor question because of biased terms (i.e., loaded language).
c. a good question.
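The survey example notes that branching and explanations of incorrect answers are still to be done. Items 1a and 2a call for right/wrong feedback, an explanation when a student chooses a wrong option, and a short forced pause before the student can move on. That flow can be sketched in a few lines.

The following is a minimal, hypothetical Python sketch, not RoboDemo or Blackboard code. The names `Item`, `Option`, `grade`, `run_item`, and `MIN_READ_SECONDS` are illustrative assumptions, as are the five-second delay and the explanation wording. It encodes only options a and b of survey question 23.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Hypothetical minimum reading time after a wrong answer; the proposal
# asks only for "a few seconds", so 5.0 is an illustrative choice.
MIN_READ_SECONDS = 5.0


@dataclass
class Option:
    text: str
    correct: bool
    explanation: str  # feedback shown when this option is chosen


@dataclass
class Item:
    stem: str
    options: Dict[str, Option]  # keyed by option letter: "a", "b", ...


def grade(item: Item, choice: str) -> Tuple[bool, str]:
    """Return (is_correct, feedback) for the chosen option letter."""
    option = item.options[choice]
    verdict = "Correct." if option.correct else "Incorrect."
    return option.correct, f"{verdict} {option.explanation}"


def run_item(
    item: Item,
    ask: Callable[[str], str] = input,
    show: Callable[[str], None] = print,
    sleep: Callable[[float], None] = time.sleep,
    min_seconds: float = MIN_READ_SECONDS,
) -> int:
    """Present one item until it is answered correctly.

    A wrong answer shows that option's explanation, holds the student on
    it for min_seconds, then branches back to the question. Returns the
    number of valid attempts, which could feed per-student tracking.
    """
    show(item.stem)
    for letter, option in item.options.items():
        show(f"  {letter}. {option.text}")
    attempts = 0
    while True:
        choice = ask("Your answer: ").strip().lower()
        if choice not in item.options:
            show("Please enter one of: " + ", ".join(item.options))
            continue
        attempts += 1
        correct, feedback = grade(item, choice)
        show(feedback)
        if correct:
            return attempts
        sleep(min_seconds)  # forced reading time before retrying
        show("Try again.")


# Survey question 23 (options a and b only); explanation wording is illustrative.
ITEM_23 = Item(
    stem='Item #2 ("Why do you watch television?") is an example of:',
    options={
        "a": Option(
            "a closed response format.", False,
            "A closed item supplies fixed answer choices; Item #2 supplies none.",
        ),
        "b": Option(
            "an open response format.", True,
            "Item #2 lets respondents answer in their own words.",
        ),
    },
)
```

In a terminal, `run_item(ITEM_23)` presents the item and waits for typed answers. The input, output, and delay are passed in as parameters (`ask`, `show`, `sleep`) so the same branching logic can be checked without a keyboard or a real pause. A RoboDemo or Blackboard version would be built very differently.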