Branch Prediction using a Correlating Feature Selector / Dynamic

advertisement

Branch Prediction using a Correlating Feature Selector /

Dynamic Decision Tree

Cindy Jen, Steven Beigelmacher

Electrical and Computer Engineering Department

Carnegie Mellon University

Pittsburgh, PA 15213 USA

{cdj, steven7}@andrew.cmu.edu

Abstract

As the accuracy of branch prediction becomes an increasingly

important factor in modern processor performance and storage

requirements of current branch predictors continue to rise, it is

important that we develop an accurate branch predictor whose

storage requirements do not explode as more branch prediction

features are considered. The storage requirement for current

table-based hardware predictors grows exponentially with the

number of features used in making branch prediction. We seek a

branch prediction method that can consider a large number of

features, without exhibiting that exponential growth in size. In

this paper, we describe our implementation of a correlating

feature selector for branch prediction. The correlating feature

selector is implemented in the form of a dynamic decision tree,

which is a binary tree of decision-maker nodes. We use tracedriven simulation (SimpleScalar’s sim-bpred) results to show that

the CFS/DDT predictor is the most efficient, in terms of accuracy

for a given storage requirement, when correlating on local

history, global history, and the lower eight bits of each of the 32

architectural integer registers. The ability of the dynamic

decision tree to use more features for prediction without a gross

increase in size is what led us to be able to correlate on register

values, which turned out to be quite useful in predicting branch

outcomes. We also use out-of-order processor simulation

(SimpleScalar’s sim-outorder) to evaluate the CFS/DDT’s

accuracy when instructions are executed out of order, rather than

in a sequential, non-overlapping manner. CFS/DDT accuracy

results are compared to the accuracy of the commonly used

tournament predictor, modeled after that in the Alpha EV6. We

investigate and discuss why the CFS/DDT predicts significantly

better with trace-driven simulation than with out-of-order

simulation.

Modern branch prediction implementations rely on a two-level

table, maintaining a limited history of previous local and global

branch outcomes to predict the result of the current branch.

Because these table-based approaches do not know which history

bits of the entire set are strongly tied to a given branch, the entire

history vector must be considered for a given branch. As a result,

storage requirements grow exponentially with each bit of

additional history. Due to the explosive growth in storage

requirements, most table based branch predictors neglect to take

into account other factors (such as register values) that are likely

strongly correlated to the branch outcome. We seek a branch

prediction implementation that selectively pays attention to

relevant feature bits for each branch, thereby reducing storage

requirements as the total number of feature bits grow, in turn

allowing us to consider more features.

We have implemented a correlating feature selector using a

dynamic decision tree that dynamically determines which features

correlate more strongly to each branch and uses those features for

future predictions. We show that the use of this CFS/DDT saves

storage space, allowing the use of other features in addition to

local and global history bits. We then show that the addition of

register values as features to consider in branch prediction

increases accuracy over local/global tournament predictors of the

same size. Next, we consider the implications of stale data in an

out of order processor, and investigate ways to overcome this

problem and achieve the benefits of the CFS/DDT’s extra feature

storage space.

This paper consists of five sections. Section 2 examines previous

work in branch predictors. Section 3 introduces CFS/DDT and

discusses its implementation. Section 4 presents our experimental

methodology and results. Section 5 offers concluding remarks.

2. Previous Related Work

1. Introduction

Branch prediction is an essential component of modern highperformance microprocessors.

As pipelines grow deeper,

branches do not get resolved until further into the pipeline. As a

result, mispredictions become more costly since a greater number

of wasted cycles occur in between the branch prediction and

branch resolution. Additionally, as superscalar processors grow

wider and integer programs on average continue to show branches

once every six instructions, more speculative execution is needed

to keep functional units busy. As speculative paths branch to

deeper speculative paths, the prediction accuracy drops off

exponentially, making the problem more severe. These trends

motivate development of an accurate branch prediction scheme.

The two-level adaptive training branch predictor, proposed by

Yeh and Patt[1], uses two levels of branch history information to

make predictions. The first level contains the history of the last n

branches, while the second level contains the branch outcome for

the last s occurrences of the branch matching the global pattern.

Variations exist regarding whether the two levels are unique to

each branch instruction or shared. Per-address structures were

found to be most accurate due to the removal of interference

between different branches, but at the cost of storage

requirements. The most efficient implementation for a target

branch accuracy was found to be PAg, where the two major

structures are a per-address history register table, which contains

the most recent global history bits, and a global pattern table,

which contains the most recent branch results for the content of

the history register. Several finite state machines are used for

updating pattern history bits, and allow the predictor to adjust to

the current branch behavior of the program. The predictor

achieved an average prediction accuracy of 97 percent on nine

SPEC benchmarks. Other techniques were simulated in that study

as well, including static training predictors, branch target buffer

designs, always taken, backward taken, etc, and the two-level

adaptive training branch predictor achieved about 4 percent better

prediction accuracy than other predictors.

Heil, Z. Smith and J. Smith[2] examined correlation between

branch outcome and data values in their paper on improving

branch predictions. After examining a sampling of branches that

exhibited unusually high mispredict rates, the authors determined

that the local and global history alone was not enough to yield a

good prediction for certain types of branches. The authors

believed that these branches could be predicted relatively easily if

the values tested by the branch instruction could be included as

part of the prediction. To that end, they implemented a branch

difference predictor (BDP), which stores the difference of the two

branch source register operands (the authors included a special

case for handling MIPS instructions like “set”). This value is then

used as part of their prediction mechanism along with the branch

address and global history. Additionally, the authors devised a

method of decrementing counters used to measure the “staleness”

of a stored difference value, taking into account that a branch may

be issued in an out of order processor before the data difference

on which it depends has been resolved. These counters were a

way of letting the predictor know how far beyond the last known

difference value the current branch instruction was. In comparing

their BDP implementation against gshare and Bi-Mode predictors

running selected benchmarks from SPEC95, Heil et al found a

mispredict improvement of up to 33% over gshare and 15% over

Bi-Modal.

While much work has been spent on improving the basic twolevel prediction scheme mentioned above, there has also been

research in applying new techniques to branch prediction.

Numerous solutions have been suggested that are based off of

extensions to the field of machine learning.

Jimenez and Lin[3] borrowed from neural networks when they

proposed using perceptrons in dynamic branch predictions. These

perceptrons essentially consisted of a vector of input units

connected to a single output unit by weighted edges. In their

implementation, the individual inputs are driven by the global

branch history shift register, and each input is either a –1 (for

branch not taken) or 1 (for branch taken). The weighting factors

for each input indicate the strength of correlation between that

input bit and the output prediction. The output is the result of

summing the weighted input bits. A negative output results in a

prediction of not taken; otherwise the branch is predicted to be

taken. Once the branch is resolved, the perceptrons are said to be

trained, whereby the input bits that predicted correctly have their

weight incremented, and mispredicting input bits have their

weights decremented. The authors showed that their perceptron

predictor scaled linearly with the history size, thereby enabling

more history to be considered than a table-based approach for a

given hardware budget. In addition, they improved misprediction

rates by 10.1% over a gshare predictor running SPEC 2000

benchmarks.

Previous work by Loh and Henry[4] to improve branch prediction

involved the idea of ensemble branch prediction, where a master

expert uses individual predictions made by a collection of

different algorithms to make a final prediction. The master expert

uses a weighted majority algorithm to analyze the individual

predictions and decide what outcome to predict. As individual

predictors make correct predictions, their weight increases, and as

they make incorrect predictions, their weight decreases. In

addition, each branch address corresponds to its own set of

predictor weights. This method was found to reduce branch

mispredictions by about 5%-11%.

3. Design and Implementation Details

3.1 Overview

In this section we will present the algorithm used in our

correlating feature selector/dynamic decision tree, which was first

proposed by Fern et. al.[5]. Correlating feature selection is

capable of keeping track of a large number of features, while

selecting the most relevant features from a feature set to use in

predicting the outcome of each branch. We use a dynamic

decision tree (DDT) predictor, borrowed from the idea of decision

trees used in machine learning, to implement correlating feature

selection. The predictor operates in two modes: an update mode

and a prediction mode. In the update mode, a feature vector/target

outcome pair is used to update internal state. The feature vector is

an array of bits representing all the possible features to use in

predicting branch outcomes. The target outcome is simply the

branch outcome corresponding to the feature vector it is paired

with. These data are fed to the predictor so that it may learn from

each branch, and become more accurate with time and training.

In the prediction mode, an input feature vector is used along with

the dynamic decision tree to predict the outcome of the current

branch.

The number of trees used in the algorithm is

parameterizable, and the lower order bits of each branch address

are used to map each branch to its tree. The root node of the

decision tree uses the most highly predictive feature (feature that

correlates most strongly to the branch outcome), and nodes at

each subsequent level of the tree use the most highly predictive

feature of all the remaining features. The features used in the Fern

et. al. predictor were 64 history bits, composed of 32 local and 32

global bits. We propose to build upon the DDT predictor idea by

using additional predictive information in the form of register

values. In Section 4, we describe in detail the need to use register

bit values in addition to branch history bit values. The rest of this

section provides implementation details for our correlating feature

selector/dynamic decision tree.

3.2 Feature Selection

A predictor that forms rules based on the principle of using only a

small set of carefully selected features is said to perform feature

selection [5]. For each branch, the CFS/DDT selectively uses the

most relevant features to predict the branch outcome, while

ignoring irrelevant features. The ability of our branch predictor to

dynamically determine which features correlate most strongly to

branch outcomes for each particular branch is the key behind its

relatively low storage requirements. Feature selection is carried

out within the dynamic decision tree, whose implementation is

described below.

3.3 DDT Updating

The update mode is the more complex of the two DDT modes. In

this mode, the values of all the features used at the time of a

branch’s outcome prediction are used in combination with the

actual branch outcome result to update the necessary nodes in the

tree for that particular branch address. Each node contains an 8bit counter for each feature. The magnitude of each counter

represents the strength of the correlation between the feature and

the true branch outcome (more positive for strong positive

correlation, more negative for strong negative correlation) at its

particular node. In update mode, all nodes used to make the

branch prediction are updated. The feature bits are compared to

the branch outcome, and counters at the updated nodes are

incremented for a match, and decremented for a mismatch. If any

counter saturates, all counters are halved to preserve relative

strengths. In addition to updating its own feature counters, each

updated node must also activate its selected child node to update

the child’s feature counters as well. In this manner, the path of

selected prediction nodes for the particular branch address is

updated from the root node down.

Special features to note are the constant feature and the subtree

feature. These features each have a correlation counter as well.

The constant feature is just a ‘1’ bit, and it exists to account for

the case where a branch heavily favors a particular constant

outcome. It is important for the case where a node encounters a

stream of feature values/target outcome pairs with nearly uniform

branch outcomes. The subtree bit represents the prediction of the

selected child node. It is not used for prediction, but is used to

learn whether a prediction should be made locally at a node, or

the prediction should be made based on the selected child node’s

prediction.

At each updated node, the updated feature counters are compared,

and the feature counter (excluding constant and subtree) with the

largest magnitude is set as the splitting variable for the node,

among the features that have not been selected as the splitting

variable by any of the parent nodes. This splitting variable is the

most predictive of all the features at that node that may be used as

the splitting variable. If the constant or subtree features have

larger magnitudes than the splitting feature, a special use-constant

or use-subtree bit is set (use of these bits described further in

Section 3.4).

3.4 DDT Predicting

In predict mode, node values set in the update mode are used in

combination with a set of input features to predict a branch

outcome. Prediction starts at the root node of the branch

address’s tree, and continues down the tree as necessary. At each

node, if the use-constant bit is set, a prediction is made for taken

or for not taken, based upon the sign of the constant feature

counter (positive for taken, negative for not taken). If the usesubtree bit is set, the prediction of the child node (selected based

on the splitting feature value) is used. If neither of these bits are

set, the splitting feature value is used to make a branch outcome

prediction (1 for taken, 0 for not taken).

3.5 Implementation Parameters

In our implementation, the number of dynamic decision trees and

the maximum depth of each tree are parameterizable. Increasing

either value raises prediction accuracy, but at the cost of increased

storage requirements. We map a branch instruction to a decision

tree based on the lower-order bits of the branch instruction’s

address.

4. Experimental Methodology and Results

4.1 Initial Evaluation Environment

For our initial evaluation of branch prediction schemes we used

the sim-bpred simulator from SimpleScalar. Sim-bpred has the

advantage of being a fast trace-driven simulator. Furthermore it

operates on one instruction at a time to completion, so that an

instruction is read, executes, and updates system state without

overlapping with other instructions. Thus, when any instruction

begins, all previous instructions have updated system state.

This environment provides a best case scenario with respect to

known system state in which we could evaluate different branch

predictors, without the artifacts of out-of-order processing. Our

goal was to use sim-bpred to determine what aspects of system

state correlated most strongly to branch outcomes for a simplified

processor model.

4.2 Tournament Predictor

The first step in our investigation was to employ a commonly used

branch predictor to provide us with accuracy measurements to

compare our results against. We used a tournament predictor,

modeled after the predictor used in the Alpha EV6 [6]. The

tournament predictor chooses between a local and global predictor

on a per-branch basis. The tournament predictor was evaluated in

SimpleScalar using the built in “combined” branch prediction

scheme.

4.3 CFS/DDT Features -- Local and Global

History Bits

The CFS/DDT proposed by Fern et. al. [5] used only history bits

as features on which to correlate branch outcomes. They used 32

bits of local history and 32 bits of global history. The local

history represents the last 32 branch outcomes for a specific

branch. The global history represents the last 32 branch outcomes

on all branches in the program. Our first step in creating a

CFS/DDT implementation was to recreate the predictor

implemented by Fern et. al., and thus only correlate on local and

global history bits.

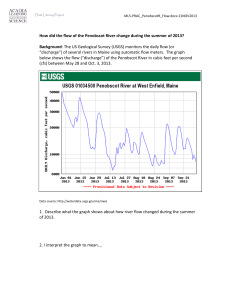

Our initial comparison is between a tournament predictor and the

CFS/DDT predictor operating on only 64 features (32 bits global

history, 32 bits local history). Simulations were run on the SPEC

2000 benchmarks gcc, twolf and parser. We chose integer

benchmarks rather than floating point benchmarks because their

branch behavior tends to be more unpredictable.

Our initial CFS/DDT was obviously outperformed by the

tournament predictor, so we set out to find more features to

correlate upon.

0.1000

0.0900

0.0800

Mispredict Rate

0.0700

4.4 CFS/DDT Features -- Register Values

0.0600

gcc CFS/DDT (hist)

0.0500

gcc TOURN

0.0400

0.0300

0.0200

0.0100

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor Size (#Trees_Depth)

0.1400

0.1200

Mispredict Rate

0.1000

0.0800

twolf CFS/DDT (hist)

twolf TOURN

0.0600

0.0400

0.0200

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor Size (#Trees_Depth)

Next, we investigated adding additional features to see how well

they would improve the performance of the baseline CFS/DDT

predictor. Since branches operate based on comparisons of

register values, correlating on the values of the registers seemed

like a natural choice. We initially added the lower 8 bits of each

of the 32 general purpose integer registers to the CFS/DDT

feature set. We feel that the majority of branches are based on

very small numbers, especially compare to zero or compare to

one. Hence, we decided to try correlating on the lower bits of the

register. However, loop termination branches often compare

against some moderately larger values, and thus the lowest bit

alone would not be enough. We felt 8 bits would be large enough

to capture the correlation on most of these compares.

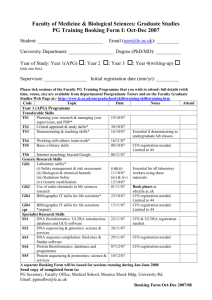

The graphs in Figure 2 show simulations of the same three integer

SPEC 2000 benchmarks used in Figure 1, where the previous

results are compared to the performance of the new CFS/DDT

implementation that correlates on 64 bits of history and 8 bits of

data per register. It should be noted that for the different

configurations of maximum number of trees and maximum tree

depth, the total storage requirement of the CFS/DDT

implementation changes. For each configuration the tournament

predictor is sized such that the total storage requirement is

comparable to the size of the CFS/DDT predictor it is being

compared to.

0.0700

0.1000

0.0900

0.0600

0.0800

0.0500

parser CFS/DDT (hist)

parser TOURN

0.0300

0.0200

Mispredict Rate

Mispredict Rate

0.0700

0.0400

0.0600

gcc CFS/DDT (hist)

0.0500

gcc CFS/DDT (reg8)

gcc TOURN

0.0400

0.0300

0.0100

0.0200

0.0100

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor Size (#Trees_Depth)

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor Size

(#Trees_Depth)

Figure 1 shows the mispredict rates of the tournament predictor

and our baseline CFS/DDT predictor for our three integer

benchmarks. Five CFS/DDT configurations are run for a different

number of trees and a different maximum tree depth.

Figure 1

We see that in all benchmarks across all configurations the

tournament predictor performs better than the baseline CFS/DDT

implementation. The difference in mispredict rate ranges from

5.62% to 1.66%, with the tournament predictor on average

mispredicting 3.08% fewer times.

4.5 Correlating On Entire Registers

0.1400

We next investigated what would happen if we correlated on 32

bits of each of the 32 integer registers rather than just the lower 8

bits.

0.1200

Mispredict Rate

0.1000

0.1000

0.0800

twolf CFS/DDT (hist)

twolf CFS/DDT (reg8)

0.0900

twolf TOURN

0.0600

0.0800

0.0700

Mispredict Rate

0.0400

0.0200

0.0000

256_0

256_1

256_3

256_5

0.0600

gcc CFS/DDT (hist)

0.0500

gcc CFS/DDT (reg8)

gcc CFS/DDT (reg32)

0.0400

512_5

0.0300

Equivalent Tree Configuration for Predictor Size

(#Trees_Depth)

0.0200

0.0100

0.0700

0.0000

256_0

0.0500

256_3

256_5

512_5

0.1400

0.0400

parser CFS/DDT (hist)

0.1200

parser CFS/DDT (reg8)

parser TOURN

0.0300

0.1000

Mispredict Rate

Mispredict Rate

256_1

Tree Configuration (#Trees_Depth)

0.0600

0.0200

0.0100

0.0000

0.0800

twolf CFS/DDT (hist)

twolf CFS/DDT (reg8)

twolf CFS/DDT (reg32)

0.0600

0.0400

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor Size

(#Trees_Depth)

0.0200

0.0000

256_0

Figure 2

In examining the results of the gcc and parser benchmarks, we see

that the tournament predictor initially performs better than the

new CFS/DDT implementation. However as total storage size

used by the branch predictor increases, we see that the difference

between CFS/DDT and the tournament predictor decreases. By

512 trees with a maximum depth of 5 nodes, the CFS/DDT

implementation outperforms the tournament predictor for these

two benchmarks as well. The CFS/DDT branch predictor appears

to gain more accuracy as storage size increases than does the

tournament predictor. Thus, we conclude that the CFS/DDT

predictor is able to store more relevant information and predict

branch outcomes more accurately than the tournament predictor

with a moderate amount of predictor storage available.

256_3

256_5

512_5

Tree Configuration (#Trees_Depth)

For all benchmarks and all configurations the CFS/DDT

correlating on history and 8 bits of data per register performs

better than the baseline CFS/DDT implementation.

The

difference in mispredict rates range from 6.79% to 1.76%, with an

average difference of 3.78%.

0.0700

0.0600

0.0500

Mispredict Rate

The enhanced CFS/DDT implementation also outperformed the

tournament predictor across all configurations of the twolf

benchmark. The difference in mispredict rates range from 4.13%

to 2.49% with an average difference of 3.26%.

256_1

0.0400

parser CFS/DDT (hist)

parser CFS/DDT (reg8)

parser CFS/DDT (reg32)

0.0300

0.0200

0.0100

0.0000

256_0

256_1

256_3

256_5

512_5

Tree Configuration (#Trees_Depth)

Figure 3

Figure 3 shows a comparison of the three flavors of CFS/DDT

predictors (history only, history and lower 8 bits of each register,

history and full 32 bits of each register). The simulation results

reveal that adding the lower 8 bits of each register value to the

feature set increases prediction accuracy on average by 3.78%

(over a feature set of only history bits). Adding all 32 bits of each

register value to the feature set increases prediction accuracy on

4.6 Evaluating CFS/DDT in an Out of Order

Simulation Environment

To this point, we have only simulated our CFS/DDT using

SimpleScalar’s sim-bpred, which is a trace-driven simulation

environment with no real notion of a CPU. However, if we want

to test our branch predictor in a realistic processor simulation

environment, sim-bpred is not sufficient.

0.1800

0.1600

0.1400

Misprediction Rate

0.1200

0.1000

twolf CFS/DDT (bpred)

twolf CFS/DDT (outorder)

0.0800

0.0600

0.0400

0.0200

0.0000

256_0

256_1

We first ported over a naive version of the branch prediction code

over to sim-outorder, with minimal changes to the code. As stated

previously, we used the CFS/DDT whose feature set consists of

64 bits of history and the lower 8 bits of each register value.

Figure 4 compares the accuracy of our CFS/DDT in sim-bpred vs.

in sim-outorder.

0.1000

0.0800

parser CFS/DDT (bpred)

parser CFS/DDT (outorder)

0.0600

0.0400

0.0200

0.0000

512_5

We simulated the tournament predictor in sim-outorder as well, to

see if the tournament predictor would lose as much accuracy as

our CFS/DDT predictor. We also wanted to compare the

tournament predictor’s accuracy to our branch predictor in the

out-of-order execution environment.

0.1600

Mispredict Rate

Misprediction Rate

gcc CFS/DDT (outorder)

256_5

Our CFS/DDT implementation in sim-outorder performed worse

than the sim-bpred implementation in every simulation. Accuracy

differences ranged from 13.98% to 8.94% with an average of

10.7%.

0.1200

gcc CFS/DDT (bpred)

256_3

Figure 4

0.1400

0.0800

256_1

Tree Configuration (#Trees_Depth)

0.1400

0.1000

512_5

0.1200

0.1600

0.1200

256_5

0.1400

256_0

Our next step was to investigate if our branch predictor could

achieve accuracy comparable to or better than a tournament

predictor of the same size, for a processor that uses out-of-order

execution. Sim-outorder, another part of the SimpleScalar suite,

simulates an out-of-order processor, and met our testing needs.

Since we previously already verified that register values do in fact

correlate to branch outcomes, we needed to port our CFS/DDT

branch predictor over to sim-outorder to provide realistic

simulations involving CPU timing.

256_3

Tree Configuration (#Trees_Depth)

Misprediction Rate

average by 4.72% (over a feature set of only history bits). While

adding 32 bits of register values increases prediction accuracy by

more than adding lower 8 bits of register values, we must also

consider the increase in storage size resulting from these

additions. Adding the lower 8 bits of the register values increases

storage size requirements by 256 bytes (one 8 bit counter per

feature, 256 additional features) per dynamic decision tree node,

while adding 32 bits of register values increases storage size

requirements by 1024 bytes per dynamic decision tree node.

Thus, we feel that the ~1% increase in accuracy of the CFS/DDT

with 32 bits of register values over the CFS/DDT with 8 bits of

register values, is outweighed by the four times greater increase in

storage size required by the 32 bits CFS/DDT. It would be worth

examining in a future study how an increase in the number of

register bits used might decrease the number of trees or maximum

tree depth needed to achieve a target prediction accuracy.

However, time constraints prevented us from examining that in

this study. For our remaining analysis we will consider CFS/DDT

predictors that use 64 history bits and the lower 8 bits of each of

the 32 integer register values.

0.1000

gcc CFS/DDT (reg8)

0.0800

gcc TOURN

0.0600

0.0400

0.0600

0.0200

0.0400

0.0000

0.0200

256_0

0.0000

256_0

256_1

256_3

256_5

Tree Configuration (#Trees_Depth)

512_5

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for

Predictor Size (#Trees_Depth)

0.1800

0.1600

Mispredict Rate

0.1400

0.1200

0.1000

tw olf CFS/DDT (reg8)

0.0800

tw olf TOURN

0.0600

overlapping execution of instructions and out of order processing.

Essentially the state of the registers could change from the time a

branch is predicted to the time it is resolved. To compensate for

this problem, we modified our implementation so that in the

predict mode we save the current state of all the register feature

bits. In the update stage, this saved register state for that

prediction is used for node updating, rather than the current

register state at the time of update. Simulations were run to see

how using saved register state in the update phase would affect

accuracy.

0.0400

0.0200

0.1600

0.0000

0.1400

256_0

256_1

256_3

256_5

512_5

0.1200

Mispredict Rate

Equivalent Tree Configuration for

Predictor Size (#Trees_Depth)

0.1400

0.1000

gcc CFS/DDT (reg8)

0.0800

gcc TOURN

gcc CFS/DDT (saveregs)

0.0600

0.0400

0.1200

0.0200

Mispredict Rate

0.1000

0.0000

0.0800

256_0

parser CFS/DDT (reg8)

parser TOURN

0.0600

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for

Predictor Size (#Trees_Depth)

0.0400

0.1800

0.0200

0.1600

0.0000

256_1

256_3

256_5

0.1400

512_5

Equivalent Tree Configuration for

Predictor Size (#Trees_Depth)

Mispredict Rate

256_0

Figure 5

We believe that the CFS/DDT is so sensitive to out-of-order

execution because it correlates on and relies heavily on register

values. This reliance is evidenced by the significant increase in

prediction accuracy from correlating solely on history bits to

correlating on register bits as well in Section 4.4. These register

values may have different values in trace-driven simulation than

in out-of-order simulation.

4.7 Feature Set Differences in Update and

Lookup Mode

We predicted that part of the large increase in misprediction rate

was due to the fact that the register values changed in between the

predict and update phase of branch prediction due to the

tw olf CFS/DDT (reg8)

0.1000

tw olf TOURN

0.0800

tw olf CFS/DDT (saveregs)

0.0600

0.0400

0.0200

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for

Predictor Size (#Trees_Depth)

0.1400

0.1200

0.1000

Mispredict Rate

Figure 5 shows our simulation results after porting our branch

predictor over to sim-outorder. Our CFS/DDT consistently

performs worse than the tournament predictor in sim-outorder.

The accuracy rate difference of the tournament predictor over the

CFS/DDT ranges from 7.69% to 3.29% with an average

difference of 5.42%. Our CFS/DDT performed comparably with

the tournament predictor in sim-bpred, yet performed quite worse

than the tournament predictor in sim-outorder. Thus, we reasoned

that the CFS/DDT is more sensitive to out-of-order execution than

the tournament predictor.

0.1200

parser CFS/DDT (reg8)

0.0800

parser TOURN

0.0600

parser CFS/DDT (saveregs)

0.0400

0.0200

0.0000

256_0

256_1

256_3

256_5

512_5

Equivalent Tree Configuration for Predictor

Size (#Trees_Depth)

We see that saving register state before updating yielded a

Figure 6

performance improvement over the standard CFS/DDT

implementation with history bits and the lower 8 bits of each

register. Improvement in mispredict ranged from 2.43% to

1.32%, with an average improvement of 1.94%. However, the

tournament predictor still beats the modified CFS/DDT

implementation. In the next section we discuss some possible

reasons for why we were unable to match the performance of the

tournament predictor and consider possible ways of closing the

performance gap.

4.8 Analysis of Out of Order Execution

Results

Clearly there exists a significant performance gap between the

tournament predictor and variations of the CFS/DDT predictor

when both are modeled in an out of order execution environment.

Since the predictors were more evenly matched when executed in

sim-bpred, with the CFS/DDT predictor besting the tournament

predictor in certain configurations, we must consider what it is

about simulating in sim-outorder that would result in CFS/DDT

taking such a performance hit.

At this point we examined simulation traces to see if the large

difference in accuracy might have been caused by some error in

recording branch prediction statistics or if the porting of our code

from the sim-bpred to the sim-outorder model had otherwise

failed. In sim-outorder branch statistics are recorded during calls

to the branch update function. After careful analysis we

determined that the update function was only being called on

correct-path executions. Our worry that wrong-path executions

were making the statistics appear worse was unfounded. To be

sure we checked the addresses of branch instructions for which

the update function was called and confirmed it was the same

sequence of addresses generated by sim-bpred. Next we printed

the state of register values at the time of calls to the branch update

functions. We confirmed that in some cases the values of

registers during a call to predict in sim-outorder would differ from

the values of registers during the call to predict for the same

branch in sim-bpred. This difference in register values then

caused different splitting variables to be selected in some cases,

and thus we saw a difference in accuracy. We believe this shows

the drop in accuracy is an artifact of the difference in the register

file as a result of the overlapped and out of order execution of

instructions in sim-outorder. We then attempted to discern how

differences in the register file might cause a loss in accuracy.

The tournament predictor bases its prediction only on branch

history, while the CFS/DDT gets much of its prediction accuracy

from correlating on register values that are available at the time of

prediction. We expect then that the increased performance gap

will have to do with the register values. There are two scenarios

that might result in CFS/DDT making a prediction based off of

data different from what it saw in sim-bpred. Because of

CFS/DDT’s reliance on register values, it takes a significant

performance hit.

The first scenario is when a branch outcome depends on an

instruction that has been executed but not yet committed. In such

a case the needed register value is sitting inside the physical

register file but is not yet mapped to the architectural register the

branch will be looking at. As a result, performance degrades.

This case can be taken care of if CFS/DDT is expanded to

correlate on the entire physical register file and knows which

physical register the architectural register identified in the

instruction will eventually point to. We were unable to implement

this feature, but certainly further investigation warrants seeing

what performance can be reclaimed in this case.

The second scenario does not have as straightforward a solution.

In an out of order core, we may expect there are times when a

branch instruction is fetched while a previous instruction the

branch outcome depends on is still waiting to be issued and

executed. As a result, the data value that the branch will depend

on has not even be computed yet, let along committed. Although

the branch itself will also wait to be issued due to the true

dependency, the branch prediction must still occur immediately in

order to fetch new instructions the next cycle. Thus, branch

prediction may occur using a register file with stale data values.

We know of no good ways to predict accurately based off of data

values that do not yet exist.

5. Conclusion

In this paper we have examined a new approach to dynamic

branch prediction, the Correlating Feature Selector/Dynamic

Decision Tree predictor. By using techniques from machine

learning to dynamically select which features each branch should

base a prediction on, CFS/DDTs scale linearly with added

features, not exponentially. As a result we have the ability to

explore the use of additional features without the exponential

increase in storage size.

We have shown that a tournament predictor modeled off of the

EV6 branch predictor outperforms a CFS/DDT predictor using

only global and local history bits. However, by adding the lowest

8 bits of all integer registers to the feature set, we have shown that

the new CFS/DDT predictor gains more accuracy as more storage

is devoted to the branch predictor (used up by additional trees or

deeper trees) than the tournament predictor, so that at moderate

storage sizes the expanded CFS/DDT predictor outperforms the

tournament predictor.

We next evaluated the CFS/DDT predictor in sim-outorder to

measure the predictor's sensitivity to register values and the

effects of out of order execution. We found a significant loss in

the predictor's accuracy compared to the tournament predictor,

and suggested two situations that might cause such a performance

loss. The first occurs when the instructions writing to the

registers on which a branch's outcome depends have executed but

not committed, so that the register values are sitting in physical

but not architectural registers. We have suggested a workaround

for this scenario, but have not yet explored what sort of gains it

will yield. The second situation occurs when the instructions

writing to the registers on which a branch's outcome depends are

waiting in the issue queue, whether it is due to some dependency,

structural hazard, or something else. In this case although the

branch instruction will also wait in the issue queue, a prediction is

still necessary right away in order to fetch instructions for the next

cycle. When this occurs prediction is based off of a stale register

file, and accuracy is reduced. Due to time constraints, we leave

this at future work to determine and examine workarounds for this

situation.

It has been shown that the CFS/DDT predictor has an enormous

amount of potential as a branch prediction scheme. This is

especially true in future designs where more transistors may be

dedicated to branch prediction storage, permitting a greater

number of trees and greater maximum depth to each tree.

However, simulations in an out-of-order processor model show

that techniques must first be developed to account for register

values not updated in time for a branch prediction before

CFS/DDT will be feasible in a modern out-of-order processor.

6. Acknowledgements

The authors wish to acknowledge with gratitude the instructor,

TAs, and fellow students of 18-741 for providing immeasurable

insight. The authors would also like to thank the not so

anonymous referees (course instructor and TAs) for their

guidance.

7. References

[1] Tse-Yu Yeh and Yale N. Patt, “Two-Level Adaptive

Training Branch Prediction”.

[2] Timothy H. Heil, Zak Smith, and J.E. Smith, “Improving

Branch Predictors by Correlating on Data Values”.

[3] Daniel A. Jimenez and Calvin Lin, “Dynamic Branch

Prediction with Perceptrons”.

[4] Gabriel H. Loh and Dana S. Henry, “Applying Machine

Learning for Ensemble Branch Predictors”.

[5] Alan Fern, Robert Givan, Babak Falsafi, and T.N.

Vijaykumar, “Dynamic Feature Selection for Hardware

Prediction”.

[6] R.E. Kessler, E.J. McLellan, and D.A. Webb, “The Alpha

21264 Microprocessor Architecture”.