Mod_Spec_P2_TestGuide_v1.0c


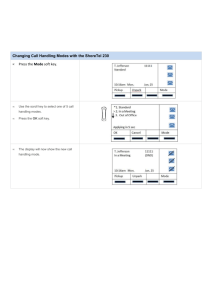

Modular Specifications Project Phase 2 - Direct

The Office of the National Coordinator for Health Information Technology

Direct Test Guide
Version 1.0c
February 2, 2012

Prepared by: ONC Standards and Interoperability Test Team

Referenced Documents

- Direct Transport and Security Specification SMTP and SMIME Requirements Traceability Matrix
- Direct Message Conversion Specification XDR and XDM Requirements Traceability Matrix
- Mod_Spec_P2_Transport_and_Security_Test_Package
- Mod_Spec_P2_XD_Test_Package

Document Change History

| Version | Date | Items Changed Since Previous Version |
|---|---|---|
| 0.8 | August 25, 2011 | Initial draft based on Mod Spec P1 wrapper document and new validation test package template. |
| 0.9 | October 26, 2011 | Added Testing Prep, Testing Materials, Summary of Specifications sections |
| 1.0 | November 1, 2011 | Incorporated feedback from Test Team |
| 1.1 | November 8, 2011 | Incorporated feedback from Spec Team, added Section 2 |
| 1.2 | November 10, 2011 | Added Executive Summary |
| 1.3 | November 18, 2011 | Reworked Test Environment and Test Coverage sections, updated per feedback |
| 1.4 | January 13, 2012 | Added section 6.2.6 which explains test cases that are subflows of other test cases. Fixed referenced documents. |
| 1.5 (external 1.0c) | February 2, 2012 | Clarified the description of the XDR Testing Tool in Section 3. Updated the test coverage in Section 4. Updated Section 6 to reflect the latest test packet structure and the assigned ID blocks for test items. |
Changed By (all versions): Testing Team

Table of Contents

1 Executive Summary
2 Project Background: Modular Specification Phase 2
  2.1 Document Audience & Sections
3 Test Environment
  3.1 Introduction
  3.2 Terminology
  3.3 Actors in the Test Environment - Mail
  3.4 Actors in the Test Environment - Mail to/from XDR
4 Test Coverage Overview
  4.1 Key Test Scope Decisions
    4.1.1 Out of scope: Document payload
    4.1.2 Out of scope: Mail – Certificate discovery and publication
    4.1.3 Out of scope: XDR-XDR messaging
    4.1.4 Out of scope: Internal details of specific Mail deployment models
  4.2 Test Coverage Areas
    4.2.1 Mail – Trust verification
    4.2.2 Mail – Basic messaging
    4.2.3 Mail - Signatures and encryption
    4.2.4 Mail - XDM
    4.2.5 Conversion: Mail to XDR
    4.2.6 Conversion: Mail - XDM to XDR
    4.2.7 Conversion: XDR to Mail
    4.2.8 XD*
  4.3 Testing Roadmap
    4.3.1 Initial connectivity testing
    4.3.2 Interoperability testing
5 Testing Prep
  5.1 Stand Up Testing Tool
  5.2 Identify relevant Test Cases
  5.3 Compile Test Data
  5.4 General Test Case Guidance
6 Testing Materials
  6.1 Test Packet Contents
  6.2 Navigating the Test Cases Sheet
  6.3 Test Data
7 Executing Testing
  7.1 Testing Steps
  7.2 Analysis
    7.2.1 Prepare for Analysis
    7.2.2 Checklist Verification
    7.2.3 Reporting Test Case Outcomes
8 Summary of Specifications
  8.1 Spec Tree
  8.2 Spec Summaries
9 Appendix A – Glossary of Terms

Table of Figures

Figure 1: Actors for Mail (Direct messaging)
Figure 2: Example test environment: Mail sender only
Figure 3: Dual versions of the same System
Figure 4: Two different Systems
Figure 5: System to Endpoint Simulator
Figure 6: Actors for Conversion: Mail to/from XDR
Figure 7: Example test environment: Mail + Mail to XDR
Figure 8: Test Data Index – sample test Message description
Figure 9: Test Packet sheets
Figure 10: Test cases using XD* checklists
Figure 11: Test Cases Sheet - Columns
Figure 12: Test Cases – System Under Test – Mail
Figure 13: Test Cases – System Under Test – Mail-XDR Conversion
Figure 14: Test Cases Sheet - Preconditions
Figure 15: Test Cases – multiple Testing Tools
Figure 16: Test Cases Sheet – Test Steps verification steps
Figure 17: Test Cases Sheet – Test Steps verification steps
Figure 18: Test Cases Sheet – Test Data ID
Figure 19: Test Data Index – Test Data ID
Figure 20: Checklist Sheet
Figure 21: Spec Tree

1 Executive Summary

This Direct Test Guide is a companion artifact to the Direct Test Packets, which contain Test Cases, Test Data, and inspection Checklists, and the Conformance Questionnaire. Together, the Test Guide and Test Packets comprise a self-testing kit that enables implementers to verify conformance to the Direct specifications.

It is recommended that implementers review the Test Guide before examining the Test Packet. The Test Guide includes project background information, an overview of our test coverage, and instructions for identifying Testing Tools and executing Test Cases. A detailed walk-through of the Test Packet artifacts is also provided.

2 Project Background: Modular Specification Phase 2

This section provides an introduction to the Modular Specification Phase 2 project that drove the production of the Direct Test Packet and this Testing Guide.

The overall goal of the Modular Specification Phase 2 project is to extract the specifications currently used in the Direct pilot implementation (http://wiki.directproject.org) and develop implementable, testable specifications along with a conformant test implementation and high quality Test Cases that can be used to verify conformance. Within this project are four collaborative workstreams and groups, working under direction from ONC:

1. The Specifications Team: Focused on converting the existing specifications into a matrix format, designed to clearly delineate normative content into traceable and atomic requirements.
2. Test Implementation Team: Focused on standing up and expanding existing test implementation code into a demonstration System.
3. Testing Team: Focused on creating vendor neutral Test Cases, data, and conformance checklists to serve as a written test kit for verifying conformance and/or testing interoperability.
4. NIST Tools Team: Focused on utilizing and expanding existing and potential tools for executing the test suite.

This document was produced by the Testing Team and reviewed by the entire Project Team, and represents the main project deliverable of the Testing Team’s workstream.

2.1 Document Audience & Sections

The Test Packet is meant to act as a stand-alone and platform-neutral test kit that can be used to test systems implementing the Direct specifications. It targets three main audiences:

- A test team intending to perform an IV&V task,
- A product vendor or developer for rolling into their internal testing process, or
- A pilot System going through a pre-validation or self-attestation model for joining some kind of exchange network.

This document does not refer to or contain actual execution scripts or assumptions on the physical architecture of the test environment (such as the programming platform/framework, server components, or testing tools used to simulate message exchange). It does assume some high-level basics about aspects of the test environment, which are detailed below.

This document is intended to be used in tandem with the Test Packet, which contains the Test Cases, Test Data, and inspection Checklists.

3 Test Environment

This section describes the test environment in which the Test Cases are executed. It outlines the components of the test environment, and some common variations of the testing platform.

3.1 Introduction

The following core work components are required for completion of any Test Cases:

1. Set up a test platform to simulate the user or System activity to be tested.
2. Perform the steps to simulate the type of user or System activity.
3. Note the results at each step and compare them against expected results and communicate the delta to the appropriate audience (development team, product owners, governing body, etc.).
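
As a rough illustration of the third work component, the comparison of observed against expected results can be sketched in a few lines of Python. This is only a sketch under stated assumptions: the `StepResult` structure, its field names, and the sample step descriptions are hypothetical and are not part of the Test Packet.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    """One executed test step. All field names here are hypothetical."""
    step: str
    expected: str
    actual: str


def find_deltas(results):
    """Return the steps whose actual result differs from the expected one."""
    return [r for r in results if r.actual != r.expected]


# Hypothetical outcomes recorded while executing a Test Case.
results = [
    StepResult("Send message to Receiver", "message accepted", "message accepted"),
    StepResult("Receive MDN response", "MDN received", "no MDN received"),
]

# The delta is what gets communicated to the appropriate audience.
for delta in find_deltas(results):
    print(f"{delta.step}: expected {delta.expected!r}, got {delta.actual!r}")
```

In messaging-oriented testing, a single logical step may feed many such comparisons, one per inspection item, so keeping the expected value next to each observation makes the delta straightforward to report.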
It is important to note that many people (including professional testers) are familiar with systems using heavy graphical user interfaces (GUIs) as opposed to messaging systems or integration programming, which generally have a very light interface or none at all. One of the key differences in testing between these types of systems is the degree of emphasis on the three work areas defined above.

For most GUI-heavy Systems, the weight is firmly in #2 above: running test procedures or scenarios, which generally have a 1-to-1 relationship to #3 (one step, one comparison to expected results). As such, when testing a typical shopping cart application there might be a dozen steps, and each step would have an expected result for comparison. For testing message or integration-biased Systems, there might be a single logical task (“Send a message from gateway 1 to gateway 2 with this structure.”), which then might have 100+ specific inspection tasks to follow.

In addition, in messaging-biased testing the tester often has to perform additional work in #1 (adjusting the test platform) to simulate a particular test. For example, to perform a basic negative test in a shopping cart the tester might simply enter an incorrect credit card number. In a messaging System, they might have significant work (programming or configuration) to simulate a similar basic negative Test Case (such as leaving out a security header element).

3.2 Terminology

The following terms are used in the test artifacts (additional terms are defined in Appendix A: Glossary of Terms):

- System, or SUT: in the context of a test case, the system under test.
- Testing Tool, or TT: in the context of a test case, something that is capable of operating as the control end of the message exchange with the system under test.
- Mail: a shorthand for the primary mechanism for Direct messaging: RFC5322+S/MIME messages over SMTP, including the MDN response.
- Mail+XDM: a shorthand for RFC5322+S/MIME messages over SMTP, containing XDM-compliant files.
- XDR: a shorthand for a secondary mechanism for Direct messaging: SOAP+XDR messages over HTTP, including the SOAP+XDR response.
- Initiator: the initiator of a message exchange. In the context of the “push” protocols used in the Direct project (Mail and XDR), this is equivalent to the Sender.
- Receiver: the receiver of a message exchange.
- Converter: receives messages from one implementation type, converts, and sends to another implementation type. Although this is called out as a specific actor in test cases, it is not required to be a distinct implementation component from the Initiator/Sender or the Receiver. What is required is for the test subject to know where in their system conversion occurs – in other words, which are native interfaces and which are converted interfaces.

3.3 Actors in the Test Environment - Mail

The test environment for Mail transactions is intentionally simplified, to accommodate different deployment models (as shown in http://wiki.directproject.org/Deployment+Models). We begin by abstracting two actors: a Mail sender and a Mail receiver.
[Figure 1: Actors for Mail (Direct messaging). The Mail sender communicates with the Mail receiver over SMTP + S/MIME.]

The Mail sender (“source” in the Deployment Model terminology) aggregates all behavior expected of the source in a black-box fashion, regardless of how that behavior is distributed among system components:

- Locating the destination address
- Locating and verifying the destination certificate
- Signing and encrypting the message
- Sending the SMTP-RFC5322-S/MIME message
- Optionally sending XDM-compliant files

Likewise, the Mail receiver aggregates all behavior expected of the destination:

- Receiving the SMTP-RFC5322-S/MIME message
- Verifying the sender certificate
- Verifying the signature and decrypting the message
- Handling XDM-compliant files

Internal behavior/messaging within the Mail sender and receiver (for example, between an email client and a full service HISP) is not tested.

We create the test environment by mapping the System under test and one or more Testing Tools to these actors, as appropriate. The physical systems or tools/code playing either side of this exchange are often quite similar, and sometimes identical. How Testers fill the two roles depends on what they are trying to accomplish with their testing. The architecture of the System and Testing Tools is not specified.

For example, assume a system under test that can only send Direct Mail, without XDM attachments. First the tester would determine which test cases apply (this is explained in section 5.2). These test cases would have a Test Focus of “Mail…” and a System Under Test of “Initiator”, meaning they test the Initiator (i.e. the Mail Sender) in a Mail transaction.
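
The selection just described can be sketched as a simple filter over rows of the Test Cases sheet. This is a minimal illustration only: the row layout, the test case IDs, and the choice to exclude the XDM focus area by name are assumptions made for the example, and the actual complement of tests is determined by the questionnaire explained in section 5.2.

```python
# Hypothetical rows from the Test Cases sheet; IDs and key names are
# illustrative and do not come from the Test Packet.
test_cases = [
    {"id": "TC-1", "test_focus": "Mail - Basic messaging", "sut": "Initiator"},
    {"id": "TC-2", "test_focus": "Mail - Basic messaging", "sut": "Receiver"},
    {"id": "TC-3", "test_focus": "Conversion: Mail to XDR", "sut": "Converter"},
    {"id": "TC-4", "test_focus": "Mail - XDM", "sut": "Initiator"},
]


def applicable(cases, focus_prefix, sut, excluded_focus=()):
    """Keep cases whose Test Focus starts with focus_prefix, whose System
    Under Test matches sut, and whose focus is not explicitly excluded."""
    return [
        case
        for case in cases
        if case["test_focus"].startswith(focus_prefix)
        and case["sut"] == sut
        and case["test_focus"] not in excluded_focus
    ]


# A system that can only send Direct Mail, without XDM attachments.
selected = applicable(
    test_cases, "Mail", "Initiator", excluded_focus=("Mail - XDM",)
)
print([case["id"] for case in selected])
```

Matching on a prefix mirrors the “Mail…” wording above, while the explicit exclusion list stands in for the conditional features that the questionnaire captures.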
The abstract test environment would look like this (we would still need to choose what physical system would play the role of the Testing Tool):

[Figure 2: Example test environment: Mail sender only. The SUT (Mail sender) communicates with the TT (Mail receiver) over SMTP + S/MIME.]

Since the Testing Tool role can be filled multiple ways, this leads to some common variants to the test platform:

1. Dual versions of the same System. In this model, Testers set up two instances of the System they want to test and then trigger a message from one System to the other. Depending on the Test Case being executed, each instance is designated the role of Initiator (i.e. sender) or Receiver. When the Systems are identical, the focus is almost entirely on conformance.

[Figure 3: Dual versions of the same System. One instance of System A (SUT: Mail sender/receiver) is connected over SMTP + S/MIME to a second instance of System A (TT: Mail sender/receiver).]

2. Two different Systems. In this model, Testers set up two different Systems, for example one Test Implementation (TI) and another System based on or compatible with the specifications. The instance playing the role of the Initiator versus the Receiver depends on the Test Case being executed. This type of testing still contains conformance testing, but now includes interoperability as well.

[Figure 4: Two different Systems. System A (SUT: Mail sender/receiver) is connected over SMTP + S/MIME to a Test Implementation (TT: Mail sender/receiver).]

3. A single System using a program to simulate an endpoint. This model is typically used to send a message to the System. The easiest way to do this is to simply set up a second System (as in #1 or #2 above) and trigger a message from one to the other through its API. The problem with this approach arises when the Tester wants to do alternative flows or negative testing. Without modifying the System being used as the Testing Tool, Testers often cannot do things like not include a field or load it incorrectly.
Some products get around this by continuing to expand the Application Programming Interface (API) to allow Testers to modify these elements. But if/when Testers cannot, they can create or use a program to simulate an endpoint entirely.

[Figure 5: System to Endpoint Simulator. System A (SUT: Mail sender/receiver) is connected over SMTP + S/MIME to an Endpoint simulator (TT: Mail sender/receiver).]

As shown in the Test Cases spreadsheet, there are base flows that are sent across the physical environment through the roles previously described. The majority of this type of testing focuses on the observable messages between these Systems. They are analyzed to determine both message conformance as well as actual versus expected behavior. Actually sending messages and analyzing the resultant messages (or faults) is the foundation of this test packet.

3.4 Actors in the Test Environment - Mail to/from XDR

When the system under test will be involved with conversion between Mail and XDR, there are additional actors defined.

[Figure 6: Actors for Conversion: Mail to/from XDR. First chain: Mail sender, SMTP + S/MIME, Mail to XDR converter, XDR, XDR receiver. Second chain: XDR sender, XDR, XDR to Mail converter, SMTP + S/MIME, Mail receiver.]

The additional actors have the following behavior:

- The Mail to XDR converter is responsible for all conversions needed to translate a Mail message to an XDR message:
  o Transport conversion from SMTP to SOAP
  o Packaging conversion from RFC5322/MIME to XDR
  o Packaging conversion from XDM to XDR
  o Ensuring metadata conformance
- The XDR to Mail converter is responsible for conversions in the opposite direction.
- The XDR receiver is responsible for handling XDR messages that have been converted from Mail, including:
  o Handling Direct-specific SOAP headers
  o Handling full or minimal XDS metadata
- The XDR sender is responsible for sending XDR messages that will be converted to Mail.
  Note that there are no Direct-specific requirements for this actor, so there are no XDR to Mail test cases where the System Under Test is the Initiator (i.e. XDR sender).

As with basic Mail messaging, the System under test and Testing Tool(s) will map to these actors, to create the test environment. Let us look at an example: System A has the ability to send and receive Mail messages, and also the ability to convert outgoing Mail messages to XDR messages, but not the reverse. The physical test environment may look like the following:

[Figure 7: Example test environment: Mail + Mail to XDR. System A (SUT/TT: Mail sender/receiver) connects over SMTP + S/MIME to System A's Mail to XDR converter (SUT), which connects over XDR to a Test Implementation (TT: XDR receiver). System A also connects over SMTP + S/MIME to a Test Implementation (TT: Mail sender/receiver).]

In this example, the following test areas would be executed:

- Mail Initiator tests: System A’s Mail sender would be the System under test, and a Test Implementation capable of receiving Mail would be the Mail Testing Tool.
- Mail Receiver tests: System A’s Mail receiver would be the System under test, and a Test Implementation capable of sending Mail would be the Mail Testing Tool.
- Mail to XDR tests: System A’s Mail sender would function as the Mail Testing Tool, System A’s Mail to XDR converter would be the System under test, and a Test Implementation capable of receiving converted XDR messages would be the XDR Testing Tool.

Note that the exact complement of tests to be executed is determined by a questionnaire, since there are a number of conditional tests based on features implemented by the System. This questionnaire is explained in section 5.2.

4 Test Coverage Overview

This section gives an overview of the coverage of the Test Cases, grouped into logical test coverage areas.
The descriptions in this section serve as a high level guide to the more detailed Test Cases, test data, and inspection Checklists contained in the Test Cases spreadsheet. Note that in some cases we have chosen to add scope clarification in the form of key requirements or clarification from the Spec Team before deciding if or how we will test a given functional area.

4.1 Key Test Scope Decisions

The following are key decisions made on the scope of testing.

4.1.1 Out of scope: Document payload

The Direct specifications are a messaging platform – they focus on transport, security, and the message envelope – but do not specify the type or format of the actual files transferred (the “payload”). The associated Test Cases were written to allow any valid payload to be used, which matches the intent of the specifications to be flexible to a wide variety of possible payloads. The only exception to this is the special case of XDM files sent to/from an XDR endpoint, which is covered in the conversion areas.

4.1.2 Out of scope: Mail – Certificate discovery and publication

The current specifications do not include a deterministic mechanism for publishing and discovering certificates, although using DNS to do so is specified as an optional method. As efforts are underway to fill this gap, we have decided not to test this area thoroughly at this time (i.e. multiple variants and positive and negative testing). Rather, we test this feature implicitly (i.e. positive testing, no explicit variants), via our “Mail – Trust verification” tests, which require that systems have some way to obtain certs. Note: support for certificate discovery will be added in a future version.

4.1.3 Out of scope: XDR-XDR messaging

While the Direct messaging conversion matrix shows the possibility of an XDR-capable endpoint as the sender and an XDR-capable endpoint as the receiver, the Direct project has defined its scope to require a Mail link somewhere in the communication chain.
XDR messaging with no conversion to/from Mail is adequately tested by existing IHE testing artifacts or, in the case of the NwHIN exchange, Document Submission artifacts.

4.1.4 Out of scope: Internal details of specific Mail deployment models

The Direct Deployment models wiki page shows a number of possible deployment models. While variations in these models may be employed for interoperability testing (explained later in the document), the models are non-normative examples, and their internal details (for example, messaging between an email client and a full-service HISP) are not specified. For this reason, these details are not tested.

4.2 Test Coverage Areas

We have identified the following areas for test coverage. We approach each area with a coverage philosophy that includes testing both success and failure variants, as well as verifying conformance of any products of the test, such as messages. In the test packet, the Test Cases and Checklists that primarily support a given test coverage area will list that area in the “Test Focus” column. Note that some areas are not tested directly, but rather through the test cases of some related area.

The following test coverage areas are addressed:

4.2.1 Mail – Trust verification

As the security foundation for Direct messaging, certificates are tested thoroughly:

- Systems under test: Initiator and Receiver
- Variants of binding: address-bound and organization-bound
- Variants of trust path: same cert, common trust anchor, etc.
- Variants of certificates: cert extensions
- Verification of certificate path
- Negative testing: rejected messaging for variants of trust failure (e.g. expired/revoked certs, trust path cannot be established)

4.2.2 Mail – Basic messaging

In this test coverage area, we explore the Mail messaging common to all SMTP + S/MIME implementations:

- Systems under test: Initiator and Receiver
- Nominal sender and receiver cases for all Mail messaging areas
  o Note that because all tests are system-level, these nominal cases also cover the nominal cases for other Mail-related areas: certificate lookup, trust verification, and signatures and encryption.
- Variants of Internet Mail Format: mail headers – CC, BCC, etc.
- Variants of MIME: attachments, content type, transfer encodings, etc.
- Verification of message conformance: Mail message, MDN response
- Negative testing
  o Applicant as Receiver returns failure MDN in case of invalid incoming message
  o Applicant as Initiator handles failure MDN or no MDN returned by Receiver (no requirements for this, so this is considered informative testing)

4.2.3 Mail - Signatures and encryption

In this test coverage area, we explore the way that security is implemented in Mail messages using S/MIME:

- Systems under test: Initiator and Receiver
- Variants of signatures and algorithms
- Variants of encryption
- Negative testing
  o Invalid signature algorithms, unencrypted messages, corrupt signatures, etc.

4.2.4 Mail - XDM

This test area covers initiating and receiving in the XDM format across the basic Mail transport only. Conversion to/from XDR transport is addressed separately.

- Systems under test: Initiator and Receiver
- Variants of MIME: attachments besides XDM, multiple XDM attachments
- Variants of XDM content: multiple submission sets, minimal/full metadata
- Verification of Message conformance: XDM structure and format
- Negative testing: none

4.2.5 Conversion: Mail to XDR

This test area covers sending from the Mail transport and converting to the SOAP-XDR transport.
- Systems under test: Converter and Receiver (Initiator does not have functionality specific to this transaction)
- Variants of Internet Mail Format: Mail headers – CC, BCC, etc.
- Variants of MIME: attachments, content type, document encoding.
- Variants of XDS content: minimal metadata
- Verification of Message conformance: Direct-specific XDR message, XDR message, XDS metadata – Direct-specific requirements
- Verification of metadata conversion
- Negative testing: converter’s ability to handle SOAP faults or XDR error responses

4.2.6 Conversion: Mail - XDM to XDR

This test area covers sending from the Mail transport and converting to the SOAP-XDR transport, where the sending system has the ability to construct XDM formatted content.

- Systems under test: Converter and Receiver (Initiator does not have functionality specific to this transaction)
- Variants of MIME: attachments, content type
- Variants of XDM: number of submission sets
- Variants of XDS content: minimal/full metadata
- Variants of network architecture: number of SOAP endpoints
- Verification of message conformance: Direct-specific XDR message, XDR message, XDS metadata – Direct-specific requirements
- Verification of metadata conversion
- Negative testing: none

4.2.7 Conversion: XDR to Mail

This test area covers sending from the SOAP-XDR transport and converting to the Mail transport.
• Systems under test: Converter (Initiator does not have functionality specific to this transaction, and Receiver of XDM is covered in area Mail – XDM)
• Variants of MIME: attachments, content type: multipart
• Variants of Direct: Direct addressing block
• Variants of XDM: number of submission sets
• Variants of XDR: folders, document links, references to existing objects
• Verification of message conformance: Mail message, XDS metadata – Direct-specific requirements
• Verification of message conformance: XDM structure and format
• Verification of metadata conversion
• Negative testing: none

4.2.8 XD*
This test area covers the underlying IHE XD* specifications: XDR/XDS/XDM. Because these specifications are used in a number of other contexts (e.g. NwHIN Query for Documents), we have created modular testing artifacts that may be reused in multiple testing contexts. The following is not the entire test coverage available in this test area. Rather, it is the test coverage utilized in the context of Direct testing.
• Systems under test: Converter
• Verification of message conformance: XDR message
• Verification of message conformance: XDS metadata, in the context of XDR, XDM, and Metadata-Limited Document Sources
• Test cases: none (in other words, all test cases utilizing XD* functionality are Direct-specific)

4.3 Testing Roadmap
While the Test Cases can be executed in any order, Testers may find it advantageous to execute them within a larger roadmap, building up from simple messaging to interoperability:
• First, perform initial connectivity testing of each of the supported transports.
• Next, perform functional testing by executing each of the applicable Test Cases against a single complement of Testing Tools.
• Finally, perform interoperability testing by repeating some or all of the Test Cases against other systems acting in the role of the Testing Tool, varying the implementations and deployment models of these other systems.
The first and last steps are outlined below.

4.3.1 Initial connectivity testing
Initial connectivity testing means running a subset of end-to-end test cases that exercise the key functionality of the system. We have categorized each test case by “flow” as follows:
• Basic success: this is a nominal “happy path” case, which tests the simplest and/or most common way to exercise the functionality.
• Variant success: these are the tests that exercise all the variations in functionality, and still expect a successful result.
• Error: these are the tests that ensure the system under test can detect and/or handle error conditions appropriately.
While testers may differ on what constitutes initial connectivity, a good starting point is to exercise all Basic success cases that apply to the system under test.

4.3.2 Interoperability testing
The conformance Test Cases that accompany this Guide can be used to test interoperability between systems using the following approach:
• Test your system according to your business use cases and expected partners
• Test your system against different deployment models
• Test your system against different implementations (i.e. based on different COTS products)
First, determine the business use case(s) that require the systems to communicate. Interoperability encompasses a spectrum of communication capabilities, and the level of interoperability required by a use case depends on its goals.
For example, sending a PDF from one system to another for a human being to print out and act on requires a less intensive level of system interoperability than sending a medical record that is to be parsed by the receiving system, automatically analyzed for certain conditions, then used to trigger clinical decisions affecting the well-being of the patient involved. Based on the level of interoperability required for each use case, determine the corresponding technical capabilities that must be expressed to demonstrate interoperability. Use the accompanying Test Cases to create test scenarios that demonstrate those technical capabilities. Two disparate systems that execute these scenarios successfully can be said to be interoperable at the required level.
Data exchange partners may use the accompanying Test Cases to set up their own test beds. These can be used to vet the systems of new partners. Such test beds will be most effective if they host a set of systems representative of those in production.
Second, test your system against systems built around different deployment models, as available. There are a number of such models identified on the Direct Project’s Deployment Models wiki page.
Finally, test your system against different implementations, as available. For example, test against a system that is built from different components than yours: using different email clients, email servers, messaging stacks, etc.

5 Testing Prep
This section describes the steps that must be taken in preparation for executing Test Cases. A Tester must stand up a Testing Tool, identify relevant Test Cases, and compile Test Data for use in the Test Cases.

5.1 Stand Up Testing Tool
The first step in preparing for testing is to stand up a Testing Tool as described in section 3.2. In some Test Cases multiple Testing Tools are used.

5.2 Identify relevant Test Cases
A Conformance Questionnaire is included with this Test Packet to help Testers identify which Test Cases they need to execute. The Questionnaire elicits information about the System implementation type and about which optional functionality is supported. This information is mapped to the Test Case IDs found in the Test Case spreadsheet. Use the Questionnaire to determine which Test Cases are Required or Optional for your system.

5.3 Compile Test Data
Each Test Case references test data or message(s) that reside in the Test Case spreadsheet, in the Test Data Index sheet. These sheets provide examples and descriptions of the test data or messages that will be transmitted during the test. The Tester should use the reference numbers provided in each Test Case to trace to the correct test data or message description, and compile their own test data and messages based on these descriptions. See Section 6.3 for more on these reference numbers.
Figure 8: Test Data Index – sample test Message description

5.4 General Test Case Guidance
The Direct specifications include requirements that indicate varying degrees of conformance precision, such as MAY, SHOULD, or MUST. The following guidance provides some insight into how the Test Cases were written to address these variants.
1. Test Cases may be based on a function that MAY be or SHOULD be implemented, rather than a function that MUST be implemented. This is reflected directly in the test optionality (i.e. required, optional, or conditional). If your System supports the requirement, execute those test cases. If your System does not support the requirement, you may want to make a note of this when recording your testing outcomes (see Section 7.2.3).
2. Some of the requirements delineated in the RTM are not testable, either because they are operational requirements levied on the STA, or because they legislate activities in back-end systems. Example: RTM Requirement 10. “An organization that maintains Organizational Certificates MUST vouch for the identity of all Direct Addresses at the Health Domain Name tied to the certificate(s).”
3. There are some requirements in the RTM that dictate an action, but the method is not specified, or sample methods are suggested. In some instances, there are Test Cases that test the recommended method, although any method may be used. Elsewhere, Test Cases test the end-to-end behavior that is expected, without specifying the method.

6 Testing Materials
This Section serves as a guide to each Test Packet artifact, which contains the Test Cases, Test Data instructions, and message verification tools. The Test Cases sheet is your starting point, and the Test Steps contained in each Test Case will direct you to the appropriate Test Data and verification tools.
There are two test packets:
• Mod_Spec_P2_Transport_and_Security_Test_Package: this contains testing materials for the basic Mail transactions, including sending and receiving XDM-compliant attachments.
• Mod_Spec_P2_XD_Test_Package: this contains testing materials for Mail-XDR conversion transactions.
The structure of each packet is nearly identical and is detailed below.

6.1 Test Packet Contents
Each Test Packet contains sheets (tabs) that include Test Cases, Test Data, and verification and Checklist tools. Following is a description of each of these sheets.
Figure 9: Test Packet sheets
Each test packet starts with the following common sheets:
• Revision History: Cover Sheet with document history.
• Test Cases: Test Cases for all implementation types. Serves as a step-by-step guide for executing Test Cases. Each Test Case directs the Tester to test data in the Test Data Index or XDR Test Messages that is used for that Test Case. Each Test Case also directs the Tester to the appropriate checklists for conformance verification.
• Test Data Index: Detailed descriptions of the test data utilized by the Test Cases. Users should compile test data based on this Index.
Following this are verification checklists. The Mail related checklists are:
• RFC5322 Checklist: Checklist for verifying conformance of an RFC5322 Message.
• Certificate Checklist: Checklist for verifying conformance of X.509 certificates.
• MDN Checklist: Checklist for verifying conformance of an MDN.
The tests for both XDM attachments to Mail messages and Mail-XDR conversions use a number of additional checklists that cover IHE XD* areas, for example XDS, XDM and XDR, and converted messages. The following diagram shows the way these XD* checklists relate, and how they are referenced by test cases. We have introduced the idea of the “context” in which a checklist is used. Think of a context as a variable you can pass into a checklist that dictates some aspect of how it is executed.
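
The idea of a context as a variable passed into a checklist can be sketched in code. The following is a minimal illustration only, not part of the Test Packet: the function names are hypothetical, and each "checklist" is reduced to a function that returns the names of the checklists a Tester would work through. The context names (PRDS, PRDSML, DDSM, RSQR) and the rules for choosing between them are taken from Figure 10.

```python
from enum import Enum
from typing import List, Optional


class XdsContext(Enum):
    """Contexts of the XDS Metadata Checklist, as named in Figure 10."""
    PRDS = "Provide and Register Document Set-b"
    PRDSML = "Provide and Register Document Set-b, Metadata Limited Document Source actor"
    DDSM = "Distribute Document Set on Media"
    RSQR = "Registry Stored Query response"


def xds_metadata_checklist(context: XdsContext) -> List[str]:
    # The context selects which flavor of the metadata checks applies.
    return [f"XDS Metadata Checklist [{context.name}]"]


def xdr_request_checklist(override: Optional[XdsContext] = None) -> List[str]:
    # No context of its own: uses PRDS for the metadata unless overridden.
    context = XdsContext.PRDS if override is None else override
    return ["XDR Request Checklist"] + xds_metadata_checklist(context)


def direct_xdr_request_checklist(metadata: str) -> List[str]:
    # Contexts: "minimal" or "full" metadata (hypothetical string encoding).
    if metadata == "minimal":
        xds = XdsContext.PRDSML
    elif metadata == "full":
        xds = XdsContext.PRDS
    else:
        raise ValueError(f"unknown context: {metadata!r}")
    first = f"Direct XDR Request Checklist [{metadata} metadata]"
    return [first] + xdr_request_checklist(override=xds)


def xdm_zip_file_checklist() -> List[str]:
    # No context of its own: always uses DDSM for the metadata checks.
    return ["XDM Zip File Checklist"] + xds_metadata_checklist(XdsContext.DDSM)


if __name__ == "__main__":
    for step in direct_xdr_request_checklist("minimal"):
        print(step)
```

Read this way, a Mail to XDR conversion test case passes the minimal metadata context into the Direct XDR Request Checklist, which in turn passes PRDSML down to the XDS Metadata Checklist.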

Test cases and the checklists they reference:
• Mail - XDM (verify sending and receiving XDM Zip Files in Direct messages): uses the XDM Zip File Checklist to verify XDM files.
• Conversion: XDR to Mail (verify converting XDR messages to Direct Mail messages): uses the XDM Zip File Checklist to verify XDM files converted from XDR messages.
• Conversion: Mail to XDR (verify converting Direct Mail messages to XDR messages): uses the Direct XDR Request Checklist to verify XDR requests converted from Mail; use the minimal metadata context.
• Conversion: Mail - XDM to XDR (verify converting Direct Mail messages with XDM Zip Files to XDR messages): uses the Direct XDR Request Checklist to verify XDR requests converted from Mail+XDM; the context is test-case-specific and depends on the XDM content.

Checklists:
• XDM Zip File Checklist: verify an XDM Zip File. Contexts: none.
  o Verify lcm:SubmitObjectsRequest in each METADATA.XML
  o Use DDSM for XDS Metadata Checklist
• Direct XDR Request Checklist: verify a Direct XDR request message that has been converted from Mail. Contexts: Minimal metadata, Full metadata.
  o Verify XDR Request
  o If minimal metadata, use PRDSML for XDS Metadata Checklist; if full, use PRDS
• XDR Request Checklist: verify an XDR request. Contexts: none.
  o Verify rim:RegistryObjectList
  o Use PRDS unless overridden
• XDS Metadata Checklist: verify a collection of XDS metadata in a rim:RegistryObjectList. Contexts:
  o PRDS: Provide and Register Document Set-b
  o PRDSML: Provide and Register Document Set-b, Metadata Limited Document Source actor
  o DDSM: Distribute Document Set on Media
  o RSQR: Registry Stored Query response

Figure 10: Test cases using XD* checklists

6.2 Navigating the Test Cases Sheet
The Test Cases sheet is the Tester’s starting point for preparing and executing Test Cases. Each Test Case contains the following columns. Use the steps below to navigate through the Test Cases and Test Data.
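
As a reading aid for the columns described in this section, a Test Case row can be thought of as a small record. The sketch below is illustrative only and makes several assumptions: the class and field names are hypothetical, only a few of the columns are modeled, and the sample rows are invented rather than taken from the Test Case spreadsheet. It shows how the Prefix and ID combine into a unique identifier and how the Flow column can be used to pick out Basic success cases for initial connectivity testing.

```python
from dataclasses import dataclass
from typing import List

# The three flow values named in this Guide.
FLOWS = ("Basic success", "Variant success", "Error")


@dataclass(frozen=True)
class CaseRow:
    """A simplified model of one row of the Test Cases sheet."""
    prefix: str   # Prefix column, e.g. "DTS" or "XD"
    number: int   # ID column: unique only within the prefix
    flow: str     # Flow column
    sut: str      # SUT column, e.g. "I" or "R"

    def __post_init__(self) -> None:
        if self.flow not in FLOWS:
            raise ValueError(f"unknown flow: {self.flow!r}")

    @property
    def identifier(self) -> str:
        # Prefix + ID together form the unique identifier, e.g. "DTS 8".
        return f"{self.prefix} {self.number}"


def basic_success(rows: List[CaseRow]) -> List[str]:
    """Identifiers of the Basic success cases among the given rows."""
    return [row.identifier for row in rows if row.flow == "Basic success"]


# Invented rows for illustration; not taken from the Test Case spreadsheet.
SAMPLE_ROWS = [
    CaseRow("DTS", 1, "Basic success", "I"),
    CaseRow("DTS", 2, "Error", "R"),
    CaseRow("XD", 1, "Basic success", "R"),
]

if __name__ == "__main__":
    print(basic_success(SAMPLE_ROWS))
```

Note that the same number can appear under two prefixes, which is why the identifier always includes the prefix.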
Figure 11: Test Cases Sheet - Columns

Test Cases Sheet Columns (Column: Description)
• Prefix: A unique prefix to be used for a group of test cases. Combined with the ID, this yields a unique identifier. Note: the Prefix+ID identification scheme is also used to identify specific checks within Checklist sheets.
• ID: Unique number (within the prefix) for the Test Case (or Checklist check). Numbers are assigned in blocks which correspond to an area in the test package, which assists in quickly finding a given test item traced from an RTM. Note that within a given block, the ID is only used for identification and traceability; it has no inherent meaning. For example, consecutive numbering of a group of test cases should not be assumed.
  o ID blocks (Module Prefix, Block Start–Block End): DTS 1–99; DTS 100–199; DTS 200–399; DTS 400–449; DTS 450–499; CERT 1000–1399; XD 1–99; XD 100–299; XD 300–349
  o Areas in Test Package: Direct T&S: test cases except Mail Trust Verification; RFC5322 Checklist; Direct XD Test Cases; Direct XDR Message Checklist; MDN Checklist; Direct T&S: Mail - Trust Verification test cases; XDR Request Checklist; XDS metadata checklist; XDM Zip File Checklist
• Test Focus: Area of functionality being tested.
• Flow: The type of flow being tested. Can be one of: Basic success, Variant success, or Error.
• SUT: Initiator/Receiver/Converter: Whether the System under test is the message Initiator, Receiver, or Converter from one implementation type to another.
• Source: Implementation type of the Source of the end-to-end message. This can be one of these types: Mail, Mail+XDM, or XDR (see Terminology section).
• Dest: Implementation type of the Destination of the end-to-end message. For conversion test cases, this will be different from the Source.
• Purpose/Description: Brief description of what is being tested.
• Test Steps: Test execution steps. May also contain Preconditions, reference to Test Data, reference to Checklists and other verification instructions.
• Required/Conditional: Indicates whether this Test Case is Required or Conditional for Systems that support the functionality under test.
• Additional Info/Comments: Additional information, descriptions, context.
• RTM: Traceability to the RTM.
• Underlying Spec: Traceability to the underlying specification.
• Test Data IDs: Reference to the Test Data artifact to be used for the Test Case. The Test Data descriptions reside in the Test Data Index and are referenced by these unique IDs.

6.2.1 By using the Questionnaire, you should already know the Test Cases that apply to your system. The SUT (System Under Test) column identifies the role/actor in the transaction (roles/actors are explained in section 3) that is being tested in each test case. The other actors in the transaction will be represented by Testing Tools. For example, in a basic Mail transaction test case, if SUT is I (Initiator), it is testing the Mail Sender. The Mail Receiver is a Testing Tool.
Figure 12: Test Cases – System Under Test – Mail

6.2.2 In the XD conversion test packet, you will also need to look at the Source and Dest columns to understand the specific conversion and thus the role of the SUT. For example, if SUT is R, Source is Mail, and Dest is XDR, then the System under Test is the XDR Receiver in a Mail to XDR conversion. Both the Mail Sender and Mail to XDR Converter actors are considered Testing Tools.
Figure 13: Test Cases – System Under Test – Mail-XDR Conversion

6.2.3 Look in the Test Data ID column for the ID that references the test data or message to be used for this Test Case, per Section 6.3 below.

6.2.4 In some Test Cases, the Test Steps column defines Preconditions for the Test Data, System or Testing Tool.
Figure 14: Test Cases Sheet - Preconditions

6.2.5 Some Test Cases require the use of two or more Testing Tools.
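
The way the SUT, Source, and Dest columns determine which actor is under test, and which actors must be played by Testing Tools, can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the one-letter code "C" for Converter is assumed by analogy with the "I" and "R" codes used in the examples above, and the actor names are simply composed from the Source and Dest values.

```python
from typing import Dict, List, Tuple


def actors(source: str, dest: str) -> Dict[str, str]:
    """Actors in an end-to-end message, keyed by SUT code.

    "I" and "R" are the codes used in this Guide's examples;
    "C" for Converter is an assumption made for this sketch.
    """
    chain = {"I": f"{source} Sender"}
    if source != dest:
        # A Converter only takes part when Source and Dest differ.
        chain["C"] = f"{source} to {dest} Converter"
    chain["R"] = f"{dest} Receiver"
    return chain


def split_roles(sut: str, source: str, dest: str) -> Tuple[str, List[str]]:
    """Return (system under test, actors represented by Testing Tools)."""
    chain = actors(source, dest)
    if sut not in chain:
        raise ValueError(f"SUT {sut!r} does not apply to {source} -> {dest}")
    tools = [name for code, name in chain.items() if code != sut]
    return chain[sut], tools


if __name__ == "__main__":
    # The Mail to XDR example from section 6.2.2.
    print(split_roles("R", "Mail", "XDR"))
```

In the Mail to XDR example, the XDR Receiver is under test and two other actors remain, which is one reason a Test Case may need two or more Testing Tools.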
Figure 15: Test Cases – multiple Testing Tools

6.2.6 Following the test execution steps, the Test Steps column directs the Tester to verify test outcomes through visual inspection or verification against a Checklist. This may include several steps.
Figure 16: Test Cases Sheet – Test Steps verification steps

6.2.7 Some of the test cases are “Subflows” of another “basic flow” test case. These subflows replace a step in the basic flow but then refer back to the basic flow for any remaining steps. In the following case, Step 1 of DTS 8 would be replaced with Step 1 of this Test Case. After Step 1, the test case would resume with Step 2 of DTS 8.
Figure 17: Test Cases Sheet – Test Steps verification steps

6.3 Test Data
6.3.1 In each Test Case, Test Data IDs are provided for the Test Data and Messages to be used for that Test Case. These IDs reference the Test Data Index sheet as indicated.
6.3.2 The Tester will use the descriptions provided in the Test Data Index to compile their own Test Data and Messages for executing the Test Case.
Figure 18: Test Cases Sheet – Test Data ID
Figure 19: Test Data Index – Test Data ID

7 Executing Testing
Once you have completed the Testing Prep activities and are familiar with the contents of the Test Packet, you are ready to execute Test Cases. This section provides step-by-step instructions for executing Test Cases and conducting analysis of the resulting messages / outcomes.

7.1 Testing Steps
After completing the testing prep activities described in section 5, the Tester must execute the following steps once for each Test Case.
1. Define which of your two endpoint nodes will act as the Initiator or Receiver for the case being run. If required, identify additional Testing Tools as instructed by the Test Case.
2. Compile the correct Test Data or Message(s) for the Test Case being run, as described in Section 6.3.
3. Configure the System, Testing Tool, and Test Data to meet Preconditions, if required by the Test Case.
4. Execute the test by following the Test Steps.
5. Collect logs or messages from the System or Testing Tool endpoint(s).
6. Perform verification inspection and analysis as directed by the Test Steps.

7.2 Analysis
7.2.1 Prepare for Analysis
After each Test Case has been executed, use the appropriate Checklist or the Converted Messages tab to verify conformance of the Message, as directed by the Test Case.
1. Extract the Messages from the server logs.
2. For XML messages (i.e. SOAP envelopes containing XDR requests/responses), open the Messages using an editor that renders XML correctly.
3. As directed by the Test Case, use the appropriate Checklist to verify that each of the elements in the Message conforms to the inspections in the checklist. Or, for converted messages, check the Message against the elements in the Converted Messages tab.

7.2.2 Checklist Verification
To use the Checklist, follow these basic steps:
1. Element: Use the path provided in XPath notation or plain text in the "Element" column to navigate to the appropriate message element.
2. Verify Presence: Use this column to confirm that all required or conditionally required elements are present. If the value in the column is:
  o "R" - the element is required and must be present in the message.
  o "R2" - if known, the element is required and must be present in the message.
  o "C" - the element is conditional and must be present if the message meets the conditions specified.
  o "O" - the element is optional and may be present. If present, it must conform to the checks listed in the checklist.
3. Multiple Possible: Some elements may be repeated multiple times in the message.
If this is allowed, it will be noted with an “X” in the "Multiple Possible" column. In those cases, each instance of the element must pass the checks listed in the Checklist for the element.
4. Additional Verification, Description, Example: Visually inspect the message element to confirm that it meets each of the checks listed in this column.
5. RTM: For traceability, references to the RTM requirement(s) and underlying specifications are provided for each line item.
Figure 20: Checklist Sheet

7.2.3 Reporting Test Case Outcomes
It is recommended that Testers record the outcomes of the Test Cases that were executed. A suggested method is to record the Test Case ID, the log file names, and any relevant notes for each Test Case in a single report. Notes might include discrepancies in expected outcomes, failures, optional functionality not supported, etc. To resolve discrepancies in expected outcomes, a Tester might submit a question to the appropriate specification body or to the maintainers of the test package, or modify the System(s) under test and then rerun the Test Case.

8 Summary of Specifications
This section contains an introduction to the Direct specifications and the underlying specifications that are incorporated by them. All of the Test Cases provide traceability to both the RTM and underlying specs.

8.1 Spec Tree
The following graphic represents all of the specifications that are included in the Direct specs.
Figure 21: Spec Tree (graphic showing 1 Direct Transport and Security RTM, 2 Applicability Statement for Secure Health Transport, and 3 XDR and XDM for Direct Messaging, each with the underlying specifications it incorporates)

8.2 Spec Summaries
All of the specifications incorporated by the Direct specs are summarized below. The reference number refers to the spec tree in section 8.1.

Spec Summaries (Ref #, Specification, Summary & Notes)

Ref # 1
Specification: Direct Transport and Security Requirements and Traceability Matrix (RTM)
http://modularspecs.siframework.org/Phase+2+Homepage
Summary & Notes: This document describes how to use SMTP, S/MIME, and X.509 certificates to securely transport health information over the Internet. Participants in exchange are identified using standard e-mail addresses associated with X.509 certificates. The data is packaged using standard MIME content types. Authentication and privacy are obtained by using Cryptographic Message Syntax (S/MIME), and confirmation of delivery is accomplished using encrypted and signed Message Disposition Notification. Optionally, certificate discovery of endpoints is accomplished through the use of the DNS. Advice is given for specific processing for ensuring security and trust validation on behalf of the ultimate message originator or receiver.

Ref # 2
Specification: Applicability Statement for Secure Health Transport
http://wiki.directproject.org/Applicability+Statement+for+Secure+Health+Transport
Summary & Notes: This document is intended as an applicability statement providing constrained conformance guidance on the interoperable use of a set of RFCs describing methods for achieving security, privacy, data integrity, authentication of sender and receiver, and confirmation of delivery consistent with the data transport needs for health information exchange.
This document describes the following REQUIRED capabilities of a Security/Trust Agent (STA), which is a Message Transfer Agent, Message Submission Agent or Message User Agent supporting security and trust for a transaction conforming to this specification:
• Use of Domain Names, Addresses, and Associated Certificates
• Signed and encrypted Internet Message Format documents
• Message Disposition Notification
• Trust Verification
This document also describes the following OPTIONAL components of a transaction conforming to this specification:
• Certificate Discovery Through the DNS

Ref # 3
Specification: XDR and XDM for Direct Messaging
http://wiki.directproject.org/XDR+and+XDM+for+Direct+Messaging
Summary & Notes: This specification addresses use of XDR and XDM zipped packages in e-mail in the context of directed messaging to fulfill the key user stories of the Direct Project. This specification defines:
1. Use of XD* Metadata with XDR and XDM in the context of directed messaging
2. Additional attributes for XDR and XDM in the context of directed messaging
3. Issues of conversion when endpoints using IHE XDR or XDM specifications interact with endpoints utilizing SMTP for delivering healthcare content.

Ref # 2.A.1
Specification: RFC 1034
http://www.ietf.org/rfc/rfc1034.txt
Summary & Notes: This RFC introduces domain style names, their use for Internet mail and host address support, and the protocols and servers used to implement domain name facilities.

Ref # 2.A.2
Specification: RFC 2045
http://www.ietf.org/rfc/rfc2045.txt
Summary & Notes: This is the first of a set of documents, collectively called Multipurpose Internet Mail Extensions (MIME), that redefine the format of messages to allow for
1. textual message bodies in character sets other than US-ASCII,
2. an extensible set of different formats for non-textual message bodies,
3. multi-part message bodies, and
4. textual header information in character sets other than US-ASCII.
This initial document specifies the various headers used to describe the structure of MIME messages. 2.A.3 RFC 3370 http://tools.ietf.org/html/rfc3370 2.A.4 RFC 3798 http://tools.ietf.org/html/rfc3798 February 2, 2012 Note: To best understand this RFC, read the introduction and read the http://en.wikipedia.org/wiki/MIME article. Pay special attention to the "multipart" section as every Direct message needs to be multipart in order to be signed per RTM requirement 40. This document describes the conventions for using several cryptographic algorithms with the Cryptographic Message Syntax (CMS). The CMS is used to digitally sign, digest, authenticate, or encrypt arbitrary message contents. Message Disposition Notifications are messages that are returned to the sender of the original message as a sort of acknowledgement of the receipt of the message. This spec defines the format and syntax of these MDN Page 32 Modular Specification Phase 2 Direct Version 1.0c 2.A.5 RFC 4510 http://tools.ietf.org/html/rfc4510 2.A.6 RFC 5280 http://www.ietf.org/rfc/rfc5280.txt 2.A.7 RFC 5751 http://tools.ietf.org/html/rfc5751 2.B.1 RFC 768 http://tools.ietf.org/html/rfc768 2.B.2 RFC 2046 http://tools.ietf.org/html/rfc2046 2.B.3 RFC 2560 http://www.ietf.org/rfc/rfc2560.txt February 2, 2012 messages. The Lightweight Directory Access Protocol (LDAP) is an Internet protocol for accessing distributed directory services that act in accordance with X.500 data and service models. This document provides a road map of the LDAP Technical Specification. This memo profiles the X.509 v3 certificate and X.509 v2 certificate revocation list (CRL) for use in the Internet. An overview of this approach and model is provided as an introduction. The X.509 v3 certificate format is described in detail, with additional information regarding the format and semantics of Internet name forms. Standard certificate extensions are described and two Internet-specific extensions are defined. 
A set of required certificate extensions is specified. The X.509 v2 CRL format is described in detail along with standard and Internet-specific extensions. An algorithm for X.509 certification path validation is described. This document describes a protocol for adding cryptographic signature and encryption services to MIME data. This specification defines how to create a MIME body part that has been cryptographically enhanced according to the Cryptographic Message Syntax (CMS) RFC 5652 , which is derived from PKCS #7 [PKCS-7]. This specification also defines the application/pkcs7-mime media type that can be used to transport those body parts. This document also discusses how to use the multipart/signed media type defined in [MIME-SECURE] to transport S/MIME signed messages. multipart/signed is used in conjunction with the application/pkcs7-signature media type, which is used to transport a detached S/MIME signature. This User Datagram Protocol (UDP) is defined to make available a datagram mode of packet-switched computer communication in the environment of an interconnected set of computer networks. This protocol provides a procedure for application programs to send messages to other programs with a minimum of protocol mechanism. This is the second of a set of documents, collectively called Multipurpose Internet Mail Extensions (MIME) (see RFC 2045, above). This second document defines the general structure of the MIME media typing System and defines an initial set of media types. This document specifies a protocol useful in determining the current revocation status of a digital certificate Page 33 Modular Specification Phase 2 Direct Version 1.0c 2.B.4 RFC 3565 http://tools.ietf.org/html/rfc3565 2.B.5 RFC 4034 2.B.6 RFC 4398 http://tools.ietf.org/html/rfc4398 2.B.7 RFC 5321 http://tools.ietf.org/html/rfc5321 2.B.8 RFC 5322 http://tools.ietf.org/html/rfc5322 without requiring CRLs. 
This document specifies the conventions for using Advanced Encryption Standard (AES) content encryption algorithm with the Cryptographic Message Syntax [CMS] enveloped-data and encrypted-data content types. This document is part of a family of documents that describe the DNS Security Extensions (DNSSEC). The DNS Security Extensions are a collection of resource records and protocol modifications that provide source authentication for the DNS. This document defines the public key (DNSKEY), delegation signer (DS), resource record digital signature (RRSIG), and authenticated denial of existence (NSEC) resource records. The purpose and format of each resource record is described in detail, and an example of each resource record is given. Note: This RFC assumes knowledge of RFCs 4033, 4035, 1034, 1035, 2181, 2308 Cryptographic public keys are frequently published, and their authenticity is demonstrated by certificates. A CERT resource record (RR) is defined so that such certificates and related certificate revocation lists can be stored in the Domain Name System (DNS). This document is a specification of the basic protocol for Internet electronic mail transport. It consolidates, updates, and clarifies several previous documents, making all or parts of most of them obsolete. It covers the SMTP extension mechanisms and best practices for the contemporary Internet, but does not provide details about particular extensions. Although SMTP was designed as a mail transport and delivery protocol, this specification also contains information that is important to its use as a "mail submission" protocol for "split-UA" (User Agent) mail reading Systems and mobile environments. This document specifies the Internet Message Format (IMF), a syntax for text messages that are sent between computer users, within the framework of "electronic mail" messages. Notes: This specification specifies all of the header fields in an internet message. 
Also, unlike RFC 2045 (MIME), this specification does not allow any content type other than text, so on its own it cannot be used for images, documents, etc.

2.B.9 RFC 5652
http://tools.ietf.org/html/rfc5652
This document describes the Cryptographic Message Syntax (CMS). This syntax is used to digitally sign, digest, authenticate, or encrypt arbitrary message content.
Notes: This RFC describes the format and content of the encrypted MIME part and/or the signed MIME part. These parts contain information such as algorithms, keys, and certificates. This information is used in RFC 5751 (S/MIME). S/MIME explains how to use CMS in an RFC 5322 message.

3.A.1 IHE ITI Vol 1 – Sec 15, 16, E, J, K
http://www.ihe.net/Technical_Framework/upload/IHE_ITI_TF_Rev80_Vol1_FT_2011-08-19.pdf
Vol 1: Section 1 of Volume 1 describes the general nature, purpose and function of the Technical Framework. Section 2 introduces the concept of IHE Integration Profiles that make up the Technical Framework. Section 3 and the subsequent sections of this volume provide detailed documentation on each integration profile, including the IT Infrastructure problem it is intended to address and the IHE actors and transactions it comprises. The appendices following the main body of the document provide a summary list of the actors and transactions, detailed discussion of specific issues related to the integration profiles and a glossary of terms and acronyms used.
Direct Transport and Security: Only deals with Zip file attachments to email.

3.A.2 IHE ITI Vol 2b – Sec 3.32
http://www.ihe.net/Technical_Framework/upload/IHE_ITI_TF_60_Vol2b_FT_2009-08-10.pdf
Volumes 2a, 2b, and 2x of the IT Infrastructure Technical Framework provide detailed technical descriptions of each IHE transaction used in the IT Infrastructure Integration Profiles.
Volume 3 contains content specification and specifications used by multiple transactions. These volumes are consistent and can be used in conjunction with the Integration Profiles of other IHE domains.

3.A.3 IHE ITI Vol 2x – Appx T and V
http://www.ihe.net/Technical_Framework/upload/IHE_ITI_TF_Rev80_Vol2x_FT_2011-08-19.pdf
Notes: The Direct RTM req 52 changes the MDN return priorities.

3.A.4 IHE ITI Vol 3 – Sec 4.1
http://www.ihe.net/Technical_Framework/upload/IHE_ITI_TF_Rev80_Vol3_FT_2011-08-19.pdf
Section 4.1 includes mapping of XDS concepts to ebRS and ebRIM semantics, metadata definitions, XDS Registry Adaptor.

3.A.1.a 3.A.1.b 3.A.1.c
DICOM PS 3.12 Annex F, R, V, W
http://medical.nema.org/dicom/2004/04_12PU.PDF
DICOM PS 3.10
http://medical.nema.org/dicom/2004/04_10PU.PDF
XHTML 1.0
http://www.w3.org/TR/xhtml1/
XHTML Basic
http://www.w3.org/TR/xhtml-basic/
Media formats

This specification defines the Second Edition of XHTML 1.0, a reformulation of HTML 4 as an XML 1.0 application, and three DTDs corresponding to the ones defined by HTML 4. The semantics of the elements and their attributes are defined in the W3C Recommendation for HTML 4. These semantics provide the foundation for future extensibility of XHTML. Compatibility with existing HTML user agents is possible by following a small set of guidelines.

The XHTML Basic document type includes the minimal set of modules required to be an XHTML host language document type, and in addition it includes images, forms, basic tables, and object support. It is designed for Web clients that do not support the full set of XHTML features; for example, Web clients such as mobile phones, PDAs, pagers, and set top boxes. The document type is rich enough for content authoring.
3.A.1.d ebRIM v3.0
http://docs.oasis-open.org/regrep/v3.0/specs/regrep-rim-3.0-os.pdf
This document defines the types of metadata and content that can be stored in an ebXML Registry.

3.A.1.e ZIP Format
http://www.pkware.com/support/zip-app-note/

3.A.1.f RFC 3798
http://tools.ietf.org/html/rfc3798

3.B HL7
http://www.hl7.org/

3.C WS Addressing Core 1.0
http://www.w3.org/TR/ws-addr-core/

3.D RFC 4648
http://tools.ietf.org/html/rfc4648#section-4
Base 16, 32 and 64 encoding.

3.E RFC 5321

3.F RFC 5322

9 Appendix A – Glossary of Terms

A brief list of terms referred to in this document or within the associated Test Cases follows.

Glossary of Terms

Term: Description

Checklist: Inspection criteria for verifying conformance of a Message to the specifications. May contain data/fields, formats, values, required/optional, etc.

Implementation Type: Characterization of the type of Direct messages a System can create and receive.

Test Implementation (TI): A benchmark System used to provide a definitive interpretation of the specifications. It is developed in concert with specifications and the test suite to collectively prove that the specifications are implementable and clear.

Requirements Traceability Matrix (RTM): The document that correlates the requirements, Test Cases, and functionality of a System. In this project’s context, it also contains a flattened version of the requirements in one place (not merely a table showing relationships).

Reviewer: The person (or automated tool) looking at the resultant message from an execution script, often performing inspections according to an associated conformance checklist. The Test Steps direct the Reviewer through the necessary inspection steps.

System: System being tested by a Test Case.

Test Case: One logical path through a System and/or Testing Tool pair.
The Test Case includes a description of its test goal(s), Testing Steps, test data, and verification steps.

Tester: The person (or automated tool) executing the Test Case.

Testing Tool: An endpoint that is capable of operating as the control end of the message exchange specified by a given Test Case, where the “System” is the side being tested. The Testing Tool can be a copy of the System under test, a separate System, or any other endpoint simulator.
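The glossary describes a Checklist as inspection criteria that a Reviewer (a person or an automated tool) applies to a resulting message. As a rough illustration only, the sketch below shows how an automated Reviewer might run a small checklist over a message using Python's standard `email` package. The checklist items, function names, and sample message are hypothetical and are not taken from the Direct test packages; the checks look only at message structure (RFC 5322 headers and the multipart/signed and application/pkcs7-signature media types mentioned in the referenced documents) and do not verify any signature cryptographically.

```python
from email import message_from_string

# Hypothetical checklist predicates (illustrative assumptions, not the
# project's actual conformance checklist).
def has_required_headers(msg):
    # RFC 5322 requires the Date and From header fields.
    return all(msg.get(name) for name in ("Date", "From"))

def is_multipart_signed(msg):
    # S/MIME signed messages are carried as multipart/signed.
    return msg.get_content_type() == "multipart/signed"

def has_detached_signature(msg):
    # The detached signature travels in an application/pkcs7-signature part.
    return any(
        part.get_content_type() == "application/pkcs7-signature"
        for part in msg.walk()
    )

CHECKLIST = [
    ("RFC 5322 required headers present", has_required_headers),
    ("Top-level type is multipart/signed", is_multipart_signed),
    ("Detached application/pkcs7-signature part present", has_detached_signature),
]

def review(raw_message):
    """Apply every checklist item and return {item label: passed}."""
    msg = message_from_string(raw_message)
    return {label: check(msg) for label, check in CHECKLIST}

# Made-up sample message; the signature payload is a placeholder.
SAMPLE = """\
From: tester@direct.example.org
To: system@direct.example.com
Date: Thu, 02 Feb 2012 10:00:00 -0500
MIME-Version: 1.0
Content-Type: multipart/signed; protocol="application/pkcs7-signature"; micalg=sha1; boundary="b1"

--b1
Content-Type: text/plain

Hello
--b1
Content-Type: application/pkcs7-signature; name="smime.p7s"
Content-Transfer-Encoding: base64

AAAA
--b1--
"""

if __name__ == "__main__":
    for label, passed in review(SAMPLE).items():
        print(("PASS" if passed else "FAIL"), label)
```

Keeping each checklist item as a labeled predicate mirrors the glossary's separation between the Checklist (what is inspected) and the Reviewer (who or what performs the inspection); a real checklist would draw its items from the Requirements Traceability Matrix.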