Appendix A: CAM Solution Assessment Report Template

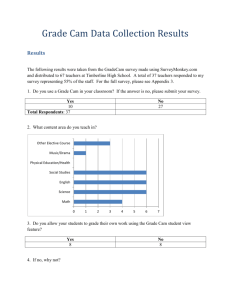
