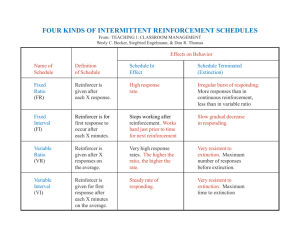

Terms and Definitions - FIT ABA Materials: Eb Blakely
