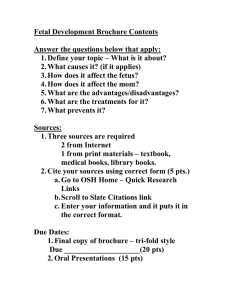

Algorithms

advertisement

Advanced Computer Architecture

Midterm Exam., Nov. 12, 2012

1. (16 pts) Consider enhancing a machine by adding a vector mode to it. When a

computation is run in vector mode it is 10 times faster than the normal mode of

execution. We call the percentage of time that could be spent using vector mode

the percentage of vectorization.

a. (6 pts) Draw a graph that plots the speedup as a percentage of the

computation performed in vector mode. Label the y axis “Net speedup” and

label the x axis “Percent vectorization.”

b. (3 pts) What percentage of vectorization is needed to achieve a speedup of 2?

c. (3 pts) What percentage of vectorization is needed to achieve one-half the

maximum speedup attainable from using vector mode?

d. (4 pts) Suppose you have measured the percentage of vectorization for

programs to be 70%. The hardware design group says they can double the

speed of the vector rate with a significant additional engineering investment.

You wonder whether the compiler crew could increase the use of vector

mode as another approach to increasing performance. How much of an

increase in the percentage of vectorization (relative to current usage) would

you need to obtain the same performance gain? Which investment would you

recommend?

2. (18 pts) Your company has a benchmark that is considered representative of your

typical applications. One of the older-model workstations does not have a

floating-point unit and must emulate each floating-point instruction by a sequence

of integer instructions. This older-model workstation is rated at 120 MIPS on this

benchmark. A third-party vendor offers an attached processor that executes each

floating-point instruction on a dedicated coprocessor (i.e., no emulation is

necessary). The workstation/attached processor rates 80 MIPS on the same

benchmark. The following symbols are used:

I—Number of integer instructions executed on the benchmark.

F—Number of floating-point instructions executed on the benchmark.

Y—Number of integer instructions to emulate a floating-point instruction.

W—Time to execute the benchmark on the workstation alone.

B—Time to execute the benchmark on the workstation/attached processor

combination.

a. (5 pts) Write an equation for the MIPS rating of each configuration using the

symbols above.

b. (3 pts) For the configuration without the coprocessor, we measure that F = 8

× 106, Y = 50, and W = 4. Find I.

c. (3 pts) What is the value of B?

d. (3 pts) What is the MFLOPS rating of the system with the attached processor

board?

e. (4 pts) Your colleague wants to purchase the attached processor board even

though the MIPS rating for the configuration using the board is less than that

of the workstation alone. Is your colleague’s evaluation correct? Defend your

answer.

3. (6 pts) It is usually the case that a set-associative or fully-associative cache has a

higher hit rate than a direct-mapped cache. But this is not always true. To illustrate

this, show a memory reference trace for a program that has a higher hit rate with a

2-block direct-mapped cache than a fully-associative cache with 2 blocks.

4. (20 pts) Consider a processor with 32-bit virtual addresses, 4KB pages and 36-bit

physical addresses. Assume memory is byte-addressable (i.e. the 32-bit VA

specifies a byte in memory).

a. L1 instruction cache: 64 Kbytes, 128 byte blocks, 4-way set associative,

indexed and tagged with virtual address.

b. L1 data cache: 32 Kbytes, 64 byte blocks, 2-way set associative, indexed and

tagged with physical address, write-back.

c. 4-way set associative TLB with 128 entries in all. Assume the TLB keeps a

dirty bit, a reference bit, and 3 permission bits (read, write, execute) for each

entry.

Answer the following questions:

a. (12 pts) Specify the number of offset, index, and tag bits for each of these

structures in the table below. Also, compute the total size in number of bit

cells for each of the tag and data arrays.

b. (4 pts) Explain why accesses to the data cache would take longer than accesses

to the instruction cache. Suggest a lower-latency data cache design with the

same capacity and describe how the organization of the cache would have to

change to achieve the lower latency.

c. (4 pts) Assume the architecture requires writes that modify the instruction text

(i.e. self-modifying code) to be reflected immediately if the modified

instructions are fetched and executed. Explain why it may be difficult to

support this requirement with this instruction cache organization.

5. (12 pts) Given the following benchmark code, and assume that all variables except

array locations reside in registers, a double variable occupies 8 bytes, and that

arrays A, B, and C are placed consecutively in memory, answer the following

questions:

double A[1024], B[1024], C[1024];

for (int i = 0; i < 1000; i += 2) {

A[i] = 35.0 * B[i] + C[i+1];

}

a. Assuming a virtually-addressed two-way set associative cache of capacity

8KB and 64 byte blocks, compute the overall miss rate (number of misses

divided by number of references).

b. Assuming a virtually-addressed direct-mapped cache of capacity 8KB and 64

byte blocks, compute the overall miss rate.

c. Assuming a virtually-addressed fully-associative cache with infinite capacity

and 64 byte blocks, compute the overall miss rate.

6. (28 pts) Below is a non-pipelined implementation of a simple microprocessor that

executes only ALU instructions with no data hazards. Given that pipeline registers

have the following timing requirements: 0.5 ns Setup time and 1 ns Delay time,

answer the following questions:

a. (12 pts) Generate a pipelined implementation that minimizes internal

fragmentation. Each sub-block in the diagram is a primitive unit that cannot

be further partitioned into smaller ones. Show the diagram of your pipelined

implementation.

b. (3 pts) Compute the latencies (in ns) of the instruction cycle of the

non-pipelined and the pipelined implementations.

c. (3 pts) Compute the machine cycle times (in ns) of the non-pipelined and the

pipelined implementations.

d. (4 pts) Compute the (potential) speedup of your pipelined implementation

over the original non-pipelined implementation.

e. (6 pts) Draw a simplified diagram of the pipeline stages that includes all the

necessary data forwarding paths.