Lcog read ch 4

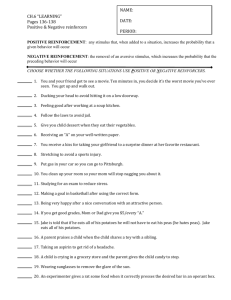

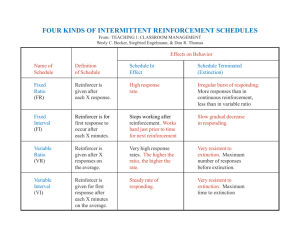

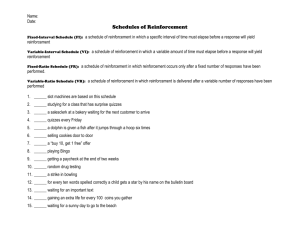

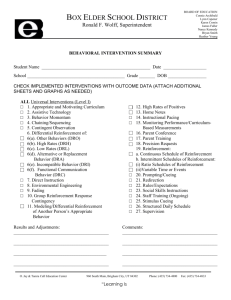

1. Key concepts:

- behavior modification: applying the principles of operant conditioning in applied settings (mental health facilities, classrooms, etc.) in order to control or change behavior.
- contingency management: see behavior modification; controlling the consequences of a behavior in order to change the frequency of that behavior.
- continuous reinforcement: the schedule of reinforcement that reinforces the organism every time it produces the desired response. Produces the fastest learning, but also the most easily extinguished behavior. Responding can also decline because of satiation.
- cumulative recorder: machine used by Skinner and other Skinner-box users; it plots the cumulative number of responses over time. (I know this one)
- depression effect: a phenomenon in contrast studies where organisms usually reinforced with large amounts are shifted to small amounts. Their rate of responding drops below the normal rate for organisms that have always been reinforced at the small amount. The converse also holds: see elation effect. The same thing happens with the delay of reinforcement, not only its amount or size. In all cases, the depression effect wears off and the organisms then respond at the baseline for that level of reinforcement.
- deprivation: removing any contact with the reinforcer (e.g., not feeding a rat before training). This is done to increase "motivation".
- discriminative stimulus (SD): the stimulus that signals to an organism that certain response-reinforcer contingencies are currently in effect (e.g., a green light may signal to a rat that bar presses will be reinforced, and the absence of the light signals that they will not be).
- elation effect: the converse of the depression effect. Organisms reinforced at a small amount are shifted to a large amount, and their rate of responding rises above the baseline rate of organisms that have always received the large amount. This effect gradually wears off and the organisms return to the baseline rate for that amount of reinforcement.
- fixed interval: a schedule of reinforcement where a fixed period of time must pass after a reinforced response before the target behavior will be reinforced again. Produces a "scallop" pattern on a cumulative recorder because organisms learn they will not be reinforced until x minutes have passed, so they only bother responding when that time is close. Overall rate of responding is slow.
- fixed ratio: a schedule of reinforcement where an organism must perform a fixed number of responses in order to receive reinforcement. Produces the post-reinforcement pause. Fast rates of responding.
- instrumental conditioning: refers to discrete-trial learning procedures (e.g., running a maze, the puzzle box); the organism is removed from the situation once the response occurs.
- law of effect: stated by Thorndike; when a pleasurable experience follows a behavior, that behavior becomes more likely to occur again.
- maintenance: the process, following behavioral acquisition, of keeping a trained responder responding.
- matching law: proposed by Herrnstein (1961); a phenomenon that occurs with concurrent schedules. An organism trained on concurrent schedules will distribute its responses across the alternatives in proportion to the reinforcement each one provides.
- negative reinforcement: the type of reinforcement where an aversive stimulus is removed as a result of a behavior, thus increasing the likelihood of the behavior.
- operant conditioning: the process of learning a relationship between a voluntary behavior and its consequences (rewards or punishments), as opposed to respondent conditioning, which involves only reflexes.
- partial reinforcement extinction effect: the phenomenon where an organism trained on a partial reinforcement schedule is more resistant to extinction than one trained on a continuous schedule. There are several explanations for this effect, including frustration tolerance; the most accepted is sequential theory (Capaldi, 1966): a non-reinforced trial followed by a reinforced trial teaches the subject to keep responding despite not being reinforced.
- positive reinforcement: increasing the likelihood of a behavior by presenting an appetitive stimulus after its occurrence.
- post-reinforcement pause: occurs in fixed ratio schedules; the organism stops responding for a brief period after being reinforced. This pause gets longer as the number of responses required for reinforcement increases.
- punisher: any consequence of a behavior that decreases that behavior (either presenting an aversive stimulus or removing an appetitive stimulus).
- punishment: see punisher.
- rate of response: the measure used in most operant conditioning experiments (number of responses per unit of time).
- satiation: when an organism no longer desires the reinforcer; the organism is "full".
- schedule:
- shaping: the process of reinforcing successively closer approximations of the target behavior.
- trial-and-error learning: what Thorndike called the learning exhibited by his cats and his maze animals. They exhibit a wide variety of behaviors the first time in the situation, then, through "trial and error", learn which behavior allows them to receive reinforcement.
- variable interval: a reinforcement schedule where the amount of time that must pass between reinforced responses varies randomly around a given average. This schedule produces a steady, slow rate of responding that is very persistent.
- variable ratio: a reinforcement schedule where the number of responses required for reinforcement varies randomly around a given average. This schedule produces rapid, stable responding.

2. Identify the scenario:

- Response: cutting in line
- Reinforcer: great seat
- Type of reinforcement: positive reinforcement
- Schedule: continuous
- SD: public event with a queue

3. Analyze:

- Response: book open on desk
- Reinforcer: no nag
- Type of reinforcement: negative reinforcement
- Schedule: continuous
- SD: school night

4. Analyze:

- Response: Friday night partying
- Reinforcer: sit out quarter
- Type of reinforcement: punishment type II
- Schedule: fixed interval
- SD: Friday night
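The four partial schedules in the key concepts (fixed ratio, variable ratio, fixed interval, variable interval) are easier to keep apart when written as rules that decide whether a given response gets reinforced. The Python below is only a rough study sketch, not anything from the chapter: the function names, the use of seconds as the time unit, and the choice of a uniform distribution for the "varies randomly around a given average" part are all assumptions made for illustration.

```python
import random


def make_fixed_ratio(n):
    """FR-n: reinforce every n-th response (hypothetical helper)."""
    count = 0

    def respond(t):
        nonlocal count
        count += 1
        if count >= n:
            count = 0
            return True
        return False

    return respond


def make_variable_ratio(mean_n, rng):
    """VR: required number of responses varies around mean_n.

    Assumption: the requirement is drawn uniformly from 1..2*mean_n-1,
    which averages out to mean_n.
    """
    count = 0
    required = rng.randint(1, 2 * mean_n - 1)

    def respond(t):
        nonlocal count, required
        count += 1
        if count >= required:
            count = 0
            required = rng.randint(1, 2 * mean_n - 1)
            return True
        return False

    return respond


def make_fixed_interval(seconds):
    """FI: reinforce the first response made at least `seconds`
    after the last reinforcer (timing starts at t = 0)."""
    last = 0.0

    def respond(t):
        nonlocal last
        if t - last >= seconds:
            last = t
            return True
        return False

    return respond


def make_variable_interval(mean_seconds, rng):
    """VI: like FI, but the required wait varies around mean_seconds.

    Assumption: the wait is drawn uniformly from 0..2*mean_seconds.
    """
    last = 0.0
    wait = rng.uniform(0, 2 * mean_seconds)

    def respond(t):
        nonlocal last, wait
        if t - last >= wait:
            last = t
            wait = rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return respond


if __name__ == "__main__":
    # One response per second for 20 seconds on each fixed schedule.
    fr5 = make_fixed_ratio(5)
    fi10 = make_fixed_interval(10)
    print("FR-5 reinforcers:", sum(fr5(t) for t in range(1, 21)))
    print("FI-10 reinforcers:", sum(fi10(t) for t in range(1, 21)))
```

Each `respond(t)` call stands for one response at time `t` and returns whether it was reinforced. On the ratio schedules, responding faster earns reinforcers sooner; on the interval schedules, extra responses before the interval is up earn nothing, which fits the slower response rates noted in the definitions.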