Definition List 132KB Nov 30 2014 05:36:14 PM


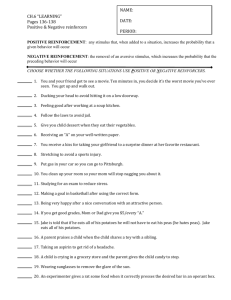

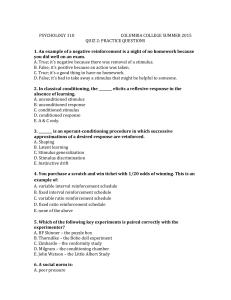

Definition List Objective: After completing the two weeks of training, participants will be able to define and state examples as intraverbal behavior as well as tact examples within their own behavior and the behavior of others for all of the basic concepts and terms listed below. Basic Concepts and Terms: 1. Determinism 2. Empiricism 3. Experiment 4. Independent Variable (IV) 5. Dependent Variable (DV) 6. Functional Relationship (FR) 7. Confounding Variable 8. Validity (of Measurement) 9. Internal Validity 10. External Validity 11. Reliability (of Measurement) 12. Replication 13. Parsimony 14. Philosophical Doubt 15. Mentalism 16. Explanatory Fiction 17. Science 18. Behaviorism 19. Behavior Analysis 20. Experimental Analysis of Behavior (EAB) 21. Applied Behavior Analysis (ABA) 22. Applied 23. Behavioral 24. Analytic 25. Technological 26. Conceptually Systematic 27. Effective 28. Generality 29. Social Validity 30. Methodological Behaviorism 31. Radical Behaviorism 32. Learning 33. Behavior 34. Response 35. Repertoire 36. Topography 37. Topographical Response Class 38. Function 39. Functional Response Class 40. Overt Behavior 41. Covert (Private) Behavior 42. Environment 43. Stimulus 44. Antecedent 45. Consequence 46. Contingency 47. Single Subject (Within Subject) Design 48. Cumulative Record 49. Visual Analysis 50. Acquisition 51. Acceleration 52. Deceleration 53. Target Behavior 54. Frequency 55. Rate 56. Percentage 57. Duration 58. Latency 59. Interresponse Time (IRT) 60. Intertrial Interval (ITI) 61. Continuous Measurement 62. Discontinuous Measurement 63. Data 64. Reflex 65. Respondent Behavior 66. Respondent (Pavlovian/Classical) Conditioning 67. Elicit 68. Unconditioned Stimulus (US) 69. Neutral Stimulus (NS) 70. Conditioned Stimulus (CS) 71. Unconditioned Response (UR) 1 72. Conditioned Response (CR) 73. Respondent Extinction 74. Operant Behavior 75. Operant Conditioning 76. Three-term Contingency 77. Emit 78. Reinforcement 79. Reinforcer 80. 
Unconditioned (Unlearned/Primary) Reinforcer 81. Conditioned (Learned/Secondary) Reinforcer 82. Generalized Conditioned Reinforcer 83. Backup Reinforcer 84. Positive Reinforcement 85. Positive Reinforcer 86. Negative Reinforcement 87. Negative Reinforcer 88. Aversive Stimulus 89. Escape Behavior 90. Avoidance Behavior 91. Socially Mediated Reinforcement 92. Automatic Reinforcement 93. Schedule of Reinforcement 94. Contingent Reinforcement 95. Non-contingent Reinforcement (NCR) 96. Continuous Reinforcement 97. Intermittent Reinforcement 98. Fixed Ratio (FR) Schedule 99. Variable Ratio (VR) Schedule 100. Fixed Interval (FI) Schedule 101. Variable Interval (VI) Schedule 102. Differential Reinforcement 103. Differential Reinforcement of Alternative Behavior (DRA) 104. Extinction (Operant) 105. Escape Extinction 106. Extinction Burst 107. Spontaneous Recovery 108. Resistance to Extinction 109. Punishment 110. Punisher 111. Unconditioned (Unlearned/Primary) Punisher 112. Conditioned (Learned/Secondary) Punisher 113. Positive (Type I) Punishment 114. Negative (Type II) Punishment 115. Time Out (from Positive Reinforcement) 116. Response Cost 117. Functional Behavior Assessment (FBA) 118. Functional Analysis (FA) 119. Stimulus Control 120. Stimulus Discrimination 121. Discriminative Stimulus (SD) 122. S-delta (S∆) 123. Stimulus Discrimination Training 124. Transfer of Stimulus Control 125. Stimulus Generalization 126. Response Generalization 127. Stimulus Class 128. Prompting 129. Prompt 130. Fading (Prompt Fading) 131. Response Prompt 132. Verbal Prompt 133. Modeling Prompt 134. Gestural Prompt 135. Physical Prompt 136. Stimulus Prompt 137. Within Stimulus Prompt 138. Extra-stimulus Prompt 139. Prompt Delay 140. Stimulus Fading 141. Motivation 142. Motivating Operation (MO) 143. Value Altering Effect 144. Establishing Operation (EO) 145. Abolishing Operation (AO) 146. Behavior Altering Effect 147. Evocative Effect 148. Abative Effect 149. Unconditioned MO (UMO) 150. 
Conditioned MO (CMO) 151. Transitive MO (CMO-T) 152. Reflexive MO (CMO-R) 153. Surrogate MO (CMO-S) 154. Verbal Behavior (VB) 155. Speaker 156. Listener 157. Audience 158. Verbal Operant 159. Formal Similarity 160. Point-to-point Correspondence 161. Duplic 162. Codic 163. Mand 164. Tact 165. Echoic 166. Mimetic 167. Copying Text 2 168. 169. 170. 171. 172. 173. Intraverbal Textual Transcription Autoclitic Listener Responding Listener Responding by Feature, Function, and Class (LRFFC) 174. ABLLS 175. VB-MAPP 176. 177. 178. 179. 180. 181. 182. 183. 184. Shaping Successive Approximations Terminal Behavior Behavior Chain Task Analysis Stimulus-Response Chain Backward Chaining Forward Chaining Total-Task Presentation 1. Determinism – an assumption of science that the universe is a lawful and orderly place in which phenomena (e.g., behavior) occur in relation to other events, not in some accidental fashion. 2. Empiricism – an assumption of science that involves the objective observation of the phenomena of interest. 3. Experiment – a carefully controlled comparison of some measure of the phenomenon of interest (the dependent variable) under two or more different conditions in which only one factor at a time (the independent variable) differs. 4. Independent Variable (IV) – the variable that is systematically manipulated by the researcher in an experiment; typically referred to as intervention or treatment. 5. Dependent Variable (DV) – the variable in an experiment that is measured to see if it changes as a result of manipulations in the independent variable; typically referred to as the target behavior. 6. Functional Relationship (FR) – a statement summarizing the results of an experiment that describes how a specific change in the variable of interest (the dependent variable) can be produced by manipulating another event (the independent variable). 7. 
Confounding Variable – an uncontrolled factor, which is not part of the independent variable, that is known or suspected to exert influence upon the dependent variable; this weakens internal validity and the ability to establish a functional relationship between the independent and dependent variables. 8. Validity (of Measurement) – the extent to which the data obtained through measurement are directly related to the target behavior of interest. 9. Internal Validity – how convincingly an experiment demonstrates that changes in the dependent variable are a function of the independent variable and not attributable to unknown or uncontrolled variables. 10. External Validity –the degree to which the findings of a study have generality across other subjects, settings, and/or behaviors. 11. Reliability (of Measurement) – the degree to which repeated measurement of the same event results in the same values; the consistency of measurement or data collection. 12. Replication – repeating conditions within an experiment, or repeating entire experiments. 3 13. Parsimony – an assumption of science that involves ruling out all simple, logical explanations first before considering more complex, abstract explanations. 14. Philosophical Doubt – an assumption of science in which conclusions of science are tentative and can be revised as new data comes to light; an attitude that the truthfulness of all scientific theory should be continually questioned. 15. Mentalism – an approach to explaining behavior that assumes there is an inner dimension that causes or mediates behavior. 16. Explanatory Fiction – a fictitious or hypothetical variable that is given as a reason for the phenomenon it claims to explain, but often just puts another name to the phenomenon without identifying a distinct cause or reason for it; also called circular reasoning. 17. 
Science – a systematic approach to the understanding of natural phenomena; incorporates determinism, empiricism, experimentation, replication, parsimony, and philosophical doubt. 18. Behaviorism – a philosophy of a science of behavior. 19. Behavior Analysis – the science of behavior. 20. Experimental Analysis of Behavior (EAB) – the basic research into the principles of behavior; typically conducted in controlled laboratory settings where the science of behavior is the subject matter of interest. 21. Applied Behavior Analysis (ABA) – the application of the experimental analysis of behavior to human affairs with the goal of improving socially significant behaviors. 22. Applied – a dimension of applied behavior analysis that involves addressing socially significant behaviors in ways that produce meaningful improvements in people’s lives. 23. Behavioral – a dimension of applied behavior analysis that involves studying behaviors that are in need of improvement and that can be objectively defined and empirically observed. 24. Analytic – a dimension of applied behavior analysis that involves demonstrating a functional relationship between the events that were manipulated (the independent variable) and a reliable change in some measurable dimension of a behavior (the dependent variable). 25. Technological – a dimension of applied behavior analysis that involves describing all procedures with enough detail and clarity that they could be replicated with the same results. 26. Conceptually Systematic – a dimension of applied behavior analysis in which all of the procedures used for behavior change are derived from and described in terms relevant to the basic principles of the science. 27. Effective – a dimension of applied behavior analysis that involves improving the behavior of interest to a practical degree (as measured by clinical or social significance). 4 28. 
Generality – a dimension of applied behavior analysis that is evidenced when behavior change lasts over time, across different environments, or spreads to other behaviors not directly targeted. 29. Social Validity – as determined by the individual and his/her community, the extent to which target behaviors are appropriate, intervention procedures are acceptable, and outcomes are significant, thereby improving the individual’s quality of life. 30. Methodological Behaviorism – a philosophical stance that views events that are not publicly observable as outside of the realm of the science of behavior. 31. Radical Behaviorism – a philosophical stance that attempts to understand all human behavior, including private events. 32. Learning – a behavioral process that can be observed and directly measured. 33. Behavior – activity of living organisms; everything that a person does; typically used to refer to an operant, or a class of responses. 34. Response – a single instance or occurrence of a type of behavior. 35. Repertoire – all of the behaviors a person can do relevant to a set of skills or knowledge. 36. Topography – physical shape or form of the behavior; how the behavior looks. 37. Topographical Response Class – a set of responses that all share the same form or appearance. 38. Function – the effect a behavior has on the environment; defined by the antecedents and consequences relevant to behavior. 39. Functional Response Class – a group of responses that produce the same effect on the environment, regardless of their form. 40. Overt Behavior – behavior that is publicly observable. 41. Covert (Private) Behavior – behavior that cannot be directly observed by others. 42. Environment – the circumstances in which the organism exists; includes both the world outside of the skin and the world inside the skin. 43. Stimulus – change to the environment that can be detected through sensory receptor cells. 44. 
Antecedent – environmental condition or stimulus change that existss or occur just prior to the behavior of interest. 45. Consequence – a stimulus change that occurs following the behavior of interest. 46. Contingency – a dependent relationship between a behavior and its controlling variables (i.e., antecedent and consequence stimuli). 5 47. Single Subject (Within Subject) Design – a type of research design where an individual subject serves as its own control; single subject designs are the standard within the field of behavior analysis. 48. Cumulative Record – a type of graph developed by B. F. Skinner that shows the cumulative number of responses emitted over time. 49. Visual Analysis – visually inspecting graphed data to look for changes in level, trend, and variability so as to interpret the results of an experiment or treatment program. 50. Acquisition – the development of a new behavior through reinforcement. 51. Acceleration – an increasing rate of acquisition or performance. 52. Deceleration – a decreasing rate of acquisition or performance. 53. Target Behavior – the behavior to be changed; the response class selected for intervention. 54. Frequency – count per unit of time (e.g., number of responses per minute); used synonymously with rate. 55. Rate – a ratio of count per period of observation time (e.g., number of responses per minute); used synonymously with frequency. 56. Percentage – a ratio of the number of responses of a certain type per total number of responses; expressed as number of parts per 100. 57. Duration – a measure of the total amount of time for which a behavior occurs. 58. Latency – a measure of the amount of time from the onset of a stimulus to the initiation of a response. 59. Interresponse Time (IRT) – a measure of the amount of time between the end of one response and the initiation of the next response. 60. 
Intertrial Interval (ITI) – a measure of the amount of time between the delivery of a consequence for one response and the presentation of the SD for the next response. 61. Continuous Measurement – recording data in such a way that all occurrences of the response class are accounted for during the observation period. 62. Discontinuous Measurement – recording data in such a way that some, but not all, occurrences of the response class are accounted for during the observation period. 63. Data – the results of measuring some quantifiable dimension of behavior. 64. Reflex – a stimulus-response relation that is made up of an antecedent stimulus and the respondent behavior it elicits. 65. Respondent Behavior – the response in a reflex; behavior that is elicited by an antecedent stimulus. 6 66. Respondent (Pavlovian/Classical) Conditioning – a stimulus-stimulus pairing procedure where a neutral stimulus (NS) is paired with an unconditioned stimulus (US), which elicits an unconditioned response (UR), until the neutral stimulus becomes a conditioned stimulus (CS) whereby it elicits the same response, now called a conditioned response (CR). 67. Elicit – the term used to describe the antecedent stimulus’ control over behavior in respondent conditioning. 68. Unconditioned Stimulus (US) – in respondent conditioning, a stimulus that elicits a respondent behavior in an unconditioned reflex without any prior learning history. 69. Neutral Stimulus (NS) – in respondent conditioning, a stimulus that initially does not elicit any respondent behavior; the stimulus targeted to be paired with the US. 70. Conditioned Stimulus (CS) – in respondent conditioning, a stimulus that elicits a respondent behavior after a history of having been paired with a US. 71. Unconditioned Response (UR) – in respondent conditioning, the response in an unconditioned reflex that is elicited by the US. 72. 
Conditioned Response (CR) – in respondent conditioning, the response that is elicited by the CS after it has been paired with a US. 73. Respondent Extinction – repeatedly presenting the CS without pairing it with the US so that the CS gradually loses its ability to elicit the CR. 74. Operant Behavior – behavior that is selected, maintained, and brought under stimulus control as a function of its consequences; behavior that is a product of one’s history with the environment; learned behavior. 75. Operant Conditioning – the process and selective effects of consequences on behavior. 76. Three-term Contingency – the basic unit of analysis in operant behavior; encompasses the relationships among an antecedent stimulus, behavior, and a consequence. 77. Emit – the term used in operant conditioning to describe the occurrence of behavior in relation to its consequences as the main controlling variables. 78. Reinforcement – a principle of behavior demonstrated when a stimulus change immediately follows a response and increases the future frequency of that type of behavior under similar conditions. 79. Reinforcer – the stimulus that increases the future frequency of the behavior that immediately precedes it. 80. Unconditioned (Unlearned/Primary) Reinforcer – stimulus that functions as a reinforcer regardless of any prior learning history; the same across all members of a species. 81. Conditioned (Learned/Secondary) Reinforcer – stimulus that functions as a reinforcer as a result of some prior learning history (i.e., pairing with other reinforcers); specific to the individual. 7 82. Generalized Conditioned Reinforcer – reinforcer, such as a token or money, that has been paired with many other reinforcers. 83. Backup Reinforcer – tangible object, activity, or privilege that serves as a reinforcer and can be purchased by exchanging tokens for it (as in a token economy). 84. 
Positive Reinforcement – process that occurs when a behavior is followed immediately by the presentation of a stimulus that increases the future frequency of the behavior under similar conditions. 85. Positive Reinforcer – a stimulus whose presentation functions as reinforcement by increasing the future frequency of the behavior that immediately preceded it. 86. Negative Reinforcement – process that occurs when a behavior is followed immediately by the removal of a stimulus that increases the future frequency of the behavior under similar conditions. 87. Negative Reinforcer – a stimulus whose removal functions as reinforcement by increasing the future frequency of the behavior that immediately preceded it. 88. Aversive Stimulus – a generally unpleasant stimulus; a stimulus that typically functions as a negative reinforcer when it is removed or as a positive punisher when it is presented; also a stimulus whose presentation evokes behaviors that have terminated it in the past. 89. Escape Behavior – behavior which terminates an ongoing stimulus. 90. Avoidance Behavior – behavior which prevents or postpones the presentation of a stimulus. 91. Socially Mediated Reinforcement – reinforcement that is mediated through another person (e.g., verbal praise). 92. Automatic Reinforcement – reinforcement that occurs through an individual’s direct interaction with the environment and independent of social mediation (e.g., scratching an itch). 93. Schedule of Reinforcement – a description of the contingency of reinforcement, including environmental arrangements and response requirements. 94. Contingent Reinforcement – reinforcement that is delivered only after the target behavior has occurred. 95. Non-contingent Reinforcement – reinforcement that is delivered without relation to the occurrence of the target behavior. 96. Continuous Reinforcement – a schedule of reinforcement in which reinforcement is delivered for every occurrence of the target behavior. 97. 
Intermittent Reinforcement – a schedule of reinforcement in which some, but not all, instances of the target behavior produce reinforcement. 8 98. Fixed Ratio (FR) Schedule – a schedule of reinforcement that requires a set number of responses to be emitted before reinforcement is delivered (e.g., FR5 means reinforcement will be delivered following every 5th response). 99. Variable Ratio (VR) Schedule – a schedule of reinforcement that requires an average number of responses to be emitted before reinforcement is delivered (e.g., VR5 means reinforcement will be delivered following an average of 5 responses). 100. Fixed Interval (FI) Schedule – a schedule of reinforcement in which reinforcement will be delivered for the first response emitted following a specified period of time (e.g., FI2’ means that reinforcement will be delivered for the first response emitted after the passage of 2 minutes since the last delivery of reinforcement). 101. Variable Interval (VI) Schedule – a schedule of reinforcement in which reinforcement will be delivered for the first response emitted following an average period of time (e.g., VI2’ means that reinforcement will be delivered for the first response emitted after the passage of an average of 2 minutes since the last delivery of reinforcement). 102. Differential Reinforcement – within a response class, reinforcing only those responses that meet a specific criterion and placing all other responses on extinction. 103. Differential Reinforcement of Alternative Behavior (DRA) – procedure for reducing problem behavior that involves delivering reinforcement for a behavior that serves as a desirable replacement for the behavior targeted for reduction and treating the targeted behavior with extinction. 104. Extinction (Operant) – a principle of behavior demonstrated when, for a behavior previously maintained by reinforcement, reinforcement is discontinued or withheld, thereby producing a decrease in the future frequency of the behavior. 105. 
Escape Extinction – placing behaviors previously maintained by escape (negative reinforcement) on extinction by no longer following those behaviors with the termination of the aversive stimulus. 106. Extinction Burst – an increase in some dimension of responding (i.e., rate, magnitude, etc.) seen when an extinction procedure is first implemented. 107. Spontaneous Recovery – a behavioral phenomenon associated with extinction where the behavior suddenly begins to occur again after its frequency has been decreased or stopped. 108. Resistance to Extinction – frequency with which a behavior is emitted when treated with extinction; behaviors maintained on intermittent schedules are more resistant to extinction and therefore will persist longer than those maintained on continuous schedule. 109. Punishment – a principle of behavior demonstrated when a stimulus change immediately follows a response and decreases the future frequency of that type of behavior under similar conditions. 110. Punisher – the stimulus that decreases the future frequency of the behavior that immediately precedes it. 9 111. Unconditioned (Unlearned/Primary) Punisher – stimulus that functions as a punisher regardless of any prior learning history; the same across all members of a species. 112. Conditioned (Learned/Secondary) Punisher – stimulus that functions as a punisher as a result of some prior learning history (i.e., pairing with other punishers); specific to the individual. 113. Positive (Type I) Punishment – process that occurs when a behavior is followed immediately by the presentation of a stimulus that decreases the future frequency of the behavior under similar conditions. 114. Negative (Type II) Punishment – process that occurs when a behavior is followed immediately by the removal of a stimulus that decreases the future frequency of the behavior under similar conditions. 115. 
Time Out (from Positive Reinforcement) – contingent withdrawal of the opportunity to earn positive reinforcement or loss of access to positive reinforcers for a specified period of time that decreases the future frequency of behavior; form of negative reinforcement. 116. Response Cost – contingent loss of a prespecified amount of a reinforcer (i.e., a fine) resulting in a decrease in the future frequency of behavior; form of negative reinforcement. 117. Functional Behavior Assessment (FBA) – systematic method of assessment for obtaining information about the purposes (functions) of problem behavior for an individual; can include indirect, direct, and experimental assessment methods. 118. Functional Analysis (FA) – experimentally assessing the relationships between problem behavior and the antecedents and consequences suspected to maintain the problem behavior so as to determine the function of the problem behavior. 119. Stimulus Control – a principle of behavior demonstrated when the rate, latency, duration, or amplitude of a response is altered in the presence of an antecedent stimulus; when a behavior is more likely to occur in the presence of a particular stimulus, the SD, because there is a history of that behavior having been reinforced only when that stimulus was present and less likely to occur in the presence of another stimulus, the S∆, because there is a history of that behavior not having been reinforced when that stimulus was present; the outcome of stimulus discrimination training. 120. Stimulus Discrimination – when different antecedent stimuli do not evoke the same response or evoke different responses. 121. Discriminative Stimulus (SD) – stimulus in the presence of which the current frequency of a behavior is momentarily increased due to a history of that behavior having occurred and been reinforced in the presence of this stimulus and having occurred and not been reinforced in the absence of this stimulus. 122. 
S-delta (S∆) – a stimulus in the presence of which a behavior has occurred and not been reinforced. 10 123. Stimulus Discrimination Training – procedure that leads to the development of stimulus control through the occurrence of two antecedent stimulus conditions – one in which a behavior is reinforced (SD) and one in which behavior is not reinforced (S∆). 124. Transfer of Stimulus Control – a process that involves transferring stimulus control from one antecedent stimulus to another; typically used to refer to the process of removing prompts once the target behavior is occurring in the presence of the SD. 125. Stimulus Generalization – when untrained antecedent stimuli sharing similar properties with the trained SD evoke the same response. 126. Response Generalization – emitting novel responses that are functionally equivalent to a previously trained response in the presence of the same antecedent stimulus. 127. Stimulus Class – a group of stimuli that share specified common elements along formal (e.g., size, color), temporal (e.g., antecedent or consequence), and/or functional (e.g., discriminative stimulus) dimensions. 128. Prompting – the procedure that involves the use of prompts (i.e., response or stimulus) to increase the likelihood that a person will engage in the correct behavior at the correct time. 129. Prompt – something that is done to increase the likelihood that a person will emit the correct behavior at the correct time; can be response or stimulus. 130. Fading (Prompt Fading) – the gradual removal of prompts as the behavior continues to occur in the presence of the SD with the goal of transferring stimulus control to the naturally occurring SD. 131. Response Prompt – a behavior that the trainer engages in just prior to or during the performance of a target behavior to induce the client to engage in the correct response; major forms include verbal instructions, modeling, and physical guidance. 132. 
Verbal Prompt – a type of response prompt that includes using vocal verbal instruction, written words, manual signs, or pictures to describe the target behavior. 133. Modeling Prompt – a type of response prompt that involves demonstrating the target behavior. 134. Gestural Prompt – a type of response prompt that involves modeling a portion of the target behavior or using some other physical movement (e.g., point to the correct card to be selected) to evoke the target behavior. 135. Physical Prompt – a type of response prompt that involves partially or fully physically guiding the learner through the movements of a target behavior. 136. Stimulus Prompt – a change in an antecedent stimulus, or the addition or removal of an antecedent stimulus, with the goal of making a correct response more likely. 137. Within Stimulus Prompt – a type of stimulus prompt in which some aspect of the SD or S∆ is changed to help a person make a correct discrimination. 11 138. Extra-stimulus Prompt – a type of stimulus prompt in which a stimulus is added to help a person make a correct discrimination. 139. Prompt Delay – an antecedent response prompt that involves varying the time intervals between the presentation of the natural SD and the presentation of the response prompt; can be a constant time delay or progressive time delay. 140. Stimulus Fading – a procedure for transferring stimulus control in which the features of an antecedent stimulus (e.g., shape, size, position, color) controlling a behavior are gradually changed to a new stimulus while maintaining the current behavior; features can be faded in (enhanced) or faded out (reduced). 141. Motivation – a principle of behavior demonstrated when conditions of deprivation, satiation, or aversion momentarily alter the value of consequences (reinforcers or punishers) and therefore momentarily alter some dimension of behaviors that have previously produced those consequences (reinforcers or punishers). 142. 
Motivating Operation (MO) – a stimulus, event, or set of environmental conditions that a) momentarily alters the value of another stimulus and b) alters the current frequency of all behaviors that have previously produced that consequence. 143. Value Altering Effect – the effect an MO has on the value of another stimulus as a reinforcer or punisher; can be an increase in effectiveness (Establishing Operation; EO) or a decrease in effectiveness (Abolishing Operation; AO). 144. Establishing Operation (EO) – an increase in the effectiveness of some other stimulus as a reinforcer or punisher; e.g., food deprivation is an EO for food as a reinforcer. 145. Abolishing Operation (AO) – a decrease in the effectiveness of some other stimulus as a reinforcer or punisher; e.g., food satiation is an AO for food as a reinforcer. 146. Behavior Altering Effect – the effect an MO has on the current frequency of a behavior; can be an increase (evocative effect) or a decrease (abative effect). 147. Evocative Effect – an increase in the current frequency of all behaviors that have previously led to the consequence for which the value has been altered by an MO; e.g., food deprivation has an evocative effect on all behaviors previously reinforced by food. 148. Abative Effect – a decrease in the current frequency of all behaviors that have previously led to the consequence for which the value has been altered by an MO; e.g., food satiation has an abative effect on all behaviors previously reinforced by food. 149. Unconditioned MO (UMO) – an antecedent stimulus that momentarily alters the value (effectiveness) of other stimuli as reinforcers (or punishers) without any prior learning history; an MO for which the value-altering effects are unlearned; these are the same across all members of a species; e.g., food deprivation and painful stimulation. 150. 
Conditioned MO (CMO) – an antecedent stimulus that momentarily alters the value (effectiveness) of other stimuli as reinforcers (or punishers), but only as a result of the organism’s learning history; an MO for which the value-altering effects are learned; individually specific. 12 151. Transitive MO (CMO-T) – a set of stimulus conditions, where there is a motivating operation for a stimulus but access to that stimulus is blocked, interrupted, or denied, that momentarily establishes (or abolishes) the value of some other stimulus as a reinforcer (or punisher) and evokes (or abates) all behaviors that have produced that have in the past produced that reinforcer (or punisher). 152. Reflexive MO (CMO-R) – any stimulus that systematically precedes (i.e., is positively correlated with) the onset of a worsening set of conditions and thereby the presentation of that stimulus establishes its own removal/termination/offset as a reinforcer and evokes any behavior that has been so reinforced. 153. Surrogate MO (CMO-S) – a stimulus that becomes capable of the same value-altering and behavior-altering effects as a UMO due to a history of having been paired with that UMO. 154. Verbal Behavior – any behavior for which reinforcement is mediated by another person (i.e., a listener); can be vocal or nonvocal. 155. Speaker – in a social exchange of verbal behavior, the person who gains access to reinforcement and controls his/her own environment through the behavior of listeners. 156. Listener – in a social exchange of verbal behavior, the person who responds to or reinforces the behavior of the speaker. 157. Audience – another person (or other people) the presence of whom is a necessary condition for verbal behavior to take place; the presence of the listener. 158. 
Verbal Operant – the unit of analysis in verbal behavior; the functional relation between behavior and the independent variables that control that behavior; includes motivating variables and discriminative stimuli, behavior, and consequences.
159. Formal Similarity – when the antecedent stimulus and the response product are both of the same sense modality (e.g., both are visual stimuli, both are auditory stimuli) and resemble each other topographically (e.g., look alike, sound alike); all duplics (echoic, mimetic, copying text) demonstrate formal similarity.
160. Point-to-point Correspondence – when the beginning, middle, and end of the antecedent stimulus match the beginning, middle, and end of the response product (e.g., seeing the textual stimulus cat and saying “cat” or finger spelling “c-a-t”); all duplics (echoic, mimetic as it relates to sign language, copying text) and all codics (transcription and textual) demonstrate point-to-point correspondence.
161. Duplic – any verbal operant that is defined as a verbal antecedent stimulus, a verbal response with both point-to-point correspondence and formal similarity with the SD, and non-specific reinforcement; consists of echoic, mimetic as it relates to sign language, and copying text.
162. Codic – any verbal operant that is defined as a verbal antecedent stimulus, a verbal response with point-to-point correspondence but without formal similarity with the SD, and non-specific reinforcement; consists of textual and transcription.
163. Mand – a primary verbal operant that is defined as an MO, a verbal response under the functional control of the MO, and reinforcement specific to the MO; commonly referred to as a request.
164. Tact – a primary verbal operant that is defined as a nonverbal discriminative stimulus, a verbal response, and non-specific reinforcement; commonly referred to as a label.
165.
Echoic – a primary verbal operant that is defined as a vocal, verbal discriminative stimulus, a vocal, verbal response with point-to-point correspondence and formal similarity with the SD, and non-specific reinforcement; commonly referred to as vocal imitation.
166. Mimetic – an operant that is defined as a discriminative stimulus in the form of demonstrating a motor movement, a physical response that has point-to-point correspondence and formal similarity with the SD (i.e., imitating the motor movement), and non-specific reinforcement; can be a verbal operant if the motor movement is in the form of sign language or a non-verbal operant if the motor movement is any other physical movement.
167. Copying Text – a primary verbal operant that is defined as a textual (written) verbal discriminative stimulus, a textual (written) verbal response that has point-to-point correspondence and formal similarity with the SD, and non-specific reinforcement.
168. Intraverbal – a primary verbal operant that is defined as a verbal discriminative stimulus, a verbal response that does not have point-to-point correspondence with the SD, and non-specific reinforcement; commonly referred to as conversational exchanges.
169. Textual – a primary verbal operant that is defined as a textual (written) verbal discriminative stimulus (visual or tactual), a verbal response that has point-to-point correspondence but no formal similarity with the SD, and non-specific reinforcement; commonly referred to as reading.
170. Transcription – a primary verbal operant that is defined as a verbal discriminative stimulus (visual or auditory), a written verbal response that has point-to-point correspondence but no formal similarity with the SD, and non-specific reinforcement; commonly referred to as taking dictation.
171.
Autoclitic – a secondary verbal operant defined as the speaker’s own verbal behavior functioning as an SD or an MO, a response of additional verbal behavior by the speaker, and differential reinforcement by the listener; commonly referred to as verbal behavior about one’s own verbal behavior.
172. Listener Responding – a non-verbal operant that is defined as a verbal discriminative stimulus, a non-verbal response in the form of compliance with the SD, and non-specific reinforcement; commonly referred to as receptive language.
173. Listener Responding by Feature, Function, and Class (LRFFC) – a type of listener behavior in which the SD specifies some feature, function, or class of another non-verbal stimulus in the environment; commonly referred to as receptive by feature, function, or class (RFFC).
174. ABLLS – Assessment of Basic Language and Learning Skills; an assessment tool developed by Partington and Sundberg that assesses 25 domains of language and learning skills; language skills are described and assessed according to a behavioral analysis of language.
175. VB-MAPP – Verbal Behavior Milestones Assessment and Placement Program; an assessment tool developed by Mark Sundberg that is based upon Skinner’s analysis of verbal behavior; it assesses language and learning milestones across 16 domains, assesses 24 barriers that interfere with learning, and can assist with determining appropriate educational placements and instructional objectives.
176. Shaping – differentially reinforcing approximations that more closely resemble a terminal behavior while treating all poorer approximations with extinction; produces a series of gradually changing response classes that are successive approximations to the terminal behavior.
177.
Successive Approximations – the sequence of gradually changing response classes that are produced during the shaping process as a result of differential reinforcement; each successive response class targeted for differential reinforcement more closely resembles the target behavior than the previous response class.
178. Terminal Behavior – the target behavior that is the end product of the shaping process.
179. Behavior Chain – a sequence of responses in which each response produces a change that functions as conditioned reinforcement for that response and as an SD for the next response.
180. Task Analysis – the process of breaking a complex skill into a series of smaller, teachable units of behavior; also refers to the outcome of this process.
181. Stimulus-Response Chain – a chain that specifies relations between SDs, behaviors, and consequences for all of the component responses within a behavior chain.
182. Backward Chaining – a teaching procedure in which the learner is initially required to complete the last response in the chain independently, and is then taught to independently complete the steps in the chain in reverse order until the learner is completing the entire chain independently.
183. Forward Chaining – a teaching procedure in which the learner is initially required to complete the first step in the chain independently, and is then taught to independently complete all remaining steps in sequential order until the learner is completing the entire chain independently.
184. Total-Task Presentation – a teaching procedure in which the learner receives training on all steps in a behavior chain during every teaching session.