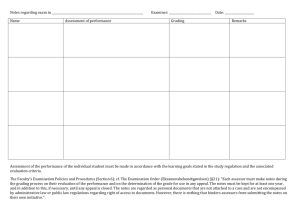

Assessment and grading at the University of Alberta: policies
