(FA2386-10-1-4127) PI: John E. Laird (University of Michigan)


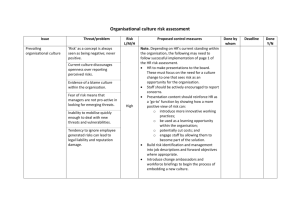

EXTENDING SEMANTIC AND EPISODIC MEMORY TO SUPPORT ROBUST DECISION MAKING
(FA2386-10-1-4127)
PI: John E. Laird (University of Michigan)
Graduate Students: Nate Derbinsky, Mitchell Bloch, Mazin Assanie

AFOSR Program Review:
Mathematical and Computational Cognition Program
Computational and Machine Intelligence Program
Robust Decision Making in Human-System Interface Program
(Jan 28 – Feb 1, 2013, Washington, DC)

EXTENDING SEMANTIC AND EPISODIC MEMORY (JOHN LAIRD)

Objective: Develop algorithms that support effective, general, and scalable long-term memory:
1. Effective: retrieves useful knowledge
2. General: effective across a variety of tasks
3. Scalable: supports large amounts of knowledge and long agent lifetimes, with manageable growth in memory and computational requirements

DoD Benefit: Develop science and technology to support:
• Intelligent knowledge-rich autonomous systems that have long-term existence, such as autonomous vehicles (ONR, DARPA: ACTUV).
• Large-scale, long-term cognitive models (AFRL)

Technical Approach:
1. Analyze multiple tasks and domains to determine exploitable regularities.
2. Develop algorithms that exploit those regularities.
3. Embed within a general cognitive architecture.
4. Perform formal analyses and empirical evaluations across multiple domains.

Budget, Actual/Planned ($K):
FY11    FY12    FY13
$99     $195    $205
$158    $165    $176
Annual Progress Report Submitted? Y (FY11), Y (FY12), N (FY13)

Project End Date: June 29, 2013

LIST OF PROJECT GOALS
1. Episodic memory (experiential & contextualized)
– Expand functionality
– Improve efficiency of storage (memory) and retrieval (time)
2. Semantic memory (context independent)
– Enhance retrieval
– Automatic generalization
3. Cognitive capabilities that leverage episodic and semantic memory functionality
– Reusing prior experience, noticing familiar situations, …
4. Evaluate on real world domains
5. Extended Goal
– Competence-preserving selective retention across multiple memories

PROGRESS TOWARDS GOALS
1. Episodic memory
– Expand functionality (recognition)
  • [AAAI 2012b]
– Improve efficiency of storage (memory) and retrieval (time)
  • Exploits temporal contiguity, structural regularity, high cue structural selectivity, high temporal selectivity, low cue feature co-occurrence
  • For many different cues and many different tasks, no significant slowdown with experience: runs for days of real time (tens of millions of episodes), faster than real time.
  • [ICCBR 2009; BRIMS 2011; AAMAS 2012]
2. Semantic memory
– Enhance retrieval
  • Evaluated multiple bias functions: conclude base-level (exponential) activation works best
  • Developed efficient approximate algorithm that maintains high (>90%) validity
    – 30-100x as fast as prior retrieval algorithms (non base-level activation) (for 3x larger data set)
    – sublinear slowdown as memory size increases
  • Exploits small node outdegree, high selectivity, not low co-occurrence of cue features.
  • [ICCM 2010; AISB 2011; AAAI 2011]
  • Current research: how to use context
    – collaboration with Braden Phillips, University of Adelaide, on special purpose hardware to support spreading activation in semantic memory
– Automatic generalization
  • Current research: Leverage data maintained for episodic memory

PROGRESS TOWARDS GOALS
3. Cognitive capabilities that leverage episodic and semantic memory functionality
– Episodic memory
  • Seven distinct capabilities: recognition, prospective memory, virtual sensing, action modeling, …
  • [BRIMS 2011; ACS 2011b; AAAI 2012a]
– Semantic memory
  • Support reconstruction of forgotten working memory
  • [ACS 2011; ICCM 2012a]
4. Evaluate on real world domains
– Episodic memory
  • Multiple domains including mobile robotics, games, planning problems, linguistics
  • [BRIMS 2011; AAAI 2012a]
– Semantic memory
  • Word sense disambiguation, mobile robotics
  • [ICCM 2010; BRIMS 2011; AAAI 2011]
5. Competence-preserving retention/forgetting
– Working memory
  • Automatic management of working memory to improve the scalability of episodic memory, utilizing semantic memory [ACS 2011; ICCM 2012b; Cog Sys 2013]
– Procedural memory
  • Automatic management of procedural memory using the same algorithms as in working-memory management [ICCM 2012b; Cog Sys 2013]

NEW GOALS
• Dynamic determination of value-functions for reinforcement learning to support robust decision making.
– [ACS 2012; AAAI submitted]

OVERVIEW
• Goal:
– Online learning and decision making in novel domains with very large state spaces.
– No a priori knowledge of which features are most important
• Approach:
– Reinforcement learning with adaptive value function determination using hierarchical tile coding
– Only online, incremental methods need apply!
• Hypothesis:
– Will lead to more robust decision making and learning over small changes to environment and task

REINFORCEMENT LEARNING FOR ACTION SELECTION
• Choose action based on the expected (Q) value stored in a value function
– Value function maps from situation-action to expected value.
• Value function updated based on reward received and expected future reward (Q Learning: off policy)
[Diagram: value function (si, aj) → qij, with states S1 and S2 (perception & internal structures), candidate actions a1 to a5, and reward]

VALUE-FUNCTION FOR LARGE STATE SPACES
• (si, aj) → qij
• si = (f1, f2, f3, f4, f5, f6, … fn)
• Usually only a subset of features is relevant
• If irrelevant features are included: slow learning
• If relevant features are left out: suboptimal asymptotic performance
• How to get the best of both?
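The value-function lookup and off-policy update described on the REINFORCEMENT LEARNING FOR ACTION SELECTION slide can be sketched as follows. This is a minimal tabular illustration, not the project's implementation: the class name `QLearner`, the epsilon-greedy action choice, and the parameter values (`alpha`, `gamma`, `epsilon`) are assumptions introduced here for the example.

```python
import random
from collections import defaultdict


class QLearner:
    """Tabular Q-learning sketch: the value function maps (state, action) -> q.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.alpha = alpha        # learning rate
        self.gamma = gamma        # discount on expected future reward
        self.epsilon = epsilon    # exploration probability (assumed policy)
        self.q = defaultdict(float)
        self.rng = random.Random(seed)

    def choose(self, state):
        """Pick an action: explore with probability epsilon, else act greedily."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state, terminal=False):
        """Off-policy update: bootstrap from the best action in next_state."""
        if terminal:
            best_next = 0.0
        else:
            best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


if __name__ == "__main__":
    learner = QLearner(["a1", "a2"], alpha=0.5, epsilon=0.0)
    learner.update("s1", "a1", reward=1.0, next_state="s2", terminal=True)
    print(learner.choose("s1"), learner.q[("s1", "a1")])
```

With a flat table like this, every distinct state needs its own entries, which is the scaling problem the large-state-space slide raises.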
• First step: hierarchical tile coding (Sutton & Barto, 1998)
• Initial results for propositional representations in Puddle World and Mountain Car

PUDDLE WORLD
[Figure: Puddle World domain]

PUDDLE WORLD
[Figure: Puddle World overlaid with 2x2, 4x4, and 8x8 tilings]
• Q-value for (si, aj) = ∑t (sit, aj), summed over the tilings t [as opposed to averaged]
• More abstract tilings (2x2) get more updates, which form the baseline for subtilings
• Update is distributed across all tiles that contribute to the Q-value
– Explored a variety of distributions: 1/sqrt(updates), even, 1/updates, …

[Figure: Puddle World: Single Level Tilings. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 200; series: 4x4, 8x8, 16x16, 32x32, 64x64]
[Figure: Puddle World: Single Level Tilings Expanded. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 50; series: 4x4, 8x8, 16x16]
[Figure: Puddle World: Includes Static Hierarchical Tiling 1-64. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 50; series: 4x4, 8x8, 16x16, 1-64 static]

MOUNTAIN CAR
[Figure: Mountain Car domain]
[Figure: Mountain Car: Static Tilings. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 1000; series: 16x16, 32x32, 64x64, 128x128, 256x256]
[Figure: Mountain Car: Static Tilings Expanded. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 100; series: 16x16, 32x32, 64x64, 128x128, 256x256]
[Figure: Mountain Car: Includes Static Hierarchical Tiling. Cumulative Reward/Episodes vs. Actions (thousands), 0 to 100; series: 16x16, 32x32, 64x64, 128x128, 256x256, 1-256 static]

WHY DOES HIERARCHICAL TILING WORK?
• Abstract Q values serve as a starting point for learning more specific Q values, so the specific values require less learning
• Exploits a locality assumption
– There is continuity in the mapping from feature space to Q values at multiple levels of refinement

FOR LARGE STATE SPACES, HOW TO AVOID HUGE MEMORY COSTS?
• Hypothesis: non-uniform tiling is sufficient
• How to do this incrementally and online?
• Split a tile if its mean Cumulative Absolute Bellman Error (CABE) is half a standard deviation above the mean
– CABE is stored proportionally to the credit assignment and the learning rate.
– The mean and standard deviation of CABE are tracked 100% incrementally at low computational cost
• Incremental and online algorithm

PUDDLE WORLD
[Figure: non-uniform Puddle World tiling with regions labeled 1x1, 2x2, 4x4, and 8x8]

ANALYSIS AND EXPECTED RESULTS
• Might lose performance because it takes time to “grow” the tiling.
• Might gain performance because updates are not wasted on useless details.
• Expect many fewer “active” Q values

[Figure: Puddle World: Static Hierarchical Tiling Reward and Memory Usage. Cumulative Reward/Episodes (left axis) and Q values (right axis) vs. Actions (thousands), 0 to 20; series: Reward: 1-64 static, Memory: 1-64 static]
[Figure: Puddle World: Static and Dynamic Hierarchical Tiling Reward and Memory Usage. Same axes; series: Reward 1-64 static, Reward 1-64 dynamic, Memory: 1-64 static, Memory: 1-64 dynamic]
• Dynamic memory is 9% of static at 10,000 actions

[Figure: Tile decomposition for move(north), 10K actions]
[Figure: Tile decomposition for move(south), 10K actions]

[Figure: Mountain Car: Even Credit Assignment. Cumulative Reward/Episodes (left axis) and Q values (right axis) vs. Actions (thousands), 0 to 100; series: Reward: 1x256 static, Reward: 1x256 dynamic, Memory: 1x256 static, Memory: 1x256 dynamic]
[Figure: Mountain Car: Inverse Log Credit Assignment. Same axes; series: Reward: 1-256 static, Reward: 1-256 dynamic, Memory: 1-256 static, Memory: 1-256 dynamic]
• Dynamic memory is 6% of static at 50,000 actions

[Figures: Tile decomposition for move(right), move(left), and move(idle), each at 50K and 1,000K actions]

RELATED WORK
Not strict hierarchies, but similar motivation for relational representations
• (McCallum, 1996) Reinforcement Learning with Selective Perception and Hidden State
Two levels with independent updating and no adaptive splitting
• (Taylor & Stone, 2005) Behavior Transfer for Value-Function-Based Reinforcement Learning
• (Zheng, Luo & Lv, 2006) Control Double Inverted Pendulum by Reinforcement Learning with Double CMAC Network
• (Grzes, 2010) Improving Exploration in Reinforcement Learning through Domain Knowledge and Parameter Analysis

Maintain data on the fringe of the hierarchy
• (Munos & Moore, 1999) Variable Resolution Discretization in Optimal Control
– Splits periodically; maintains a fringe of the hierarchy and splits the top 'f'% of the cells to minimize the standard deviation of influence and variance; no time-based online performance data; requires an action model
• (Whiteson, Taylor, & Stone, 2007) Adaptive Tile Coding for Value Function Approximation
– Splits when Bellman error for any tile has not decreased in N steps; maintains the fringe of the hierarchy and makes the split that maximally reduces Bellman error (value-based) or maximally improves the policy (policy-based)

[Figure: Whiteson Policy and Value Results Compared to Our Results. Cumulative Reward/Episodes vs. Actions (Thousands), 0 to 100; series: Whiteson Value-based splitting, Whiteson Policy-based splitting, Our static hierarchy, Our dynamic hierarchy]

ROBUSTNESS
“A characteristic describing a model's, test's or system's ability to effectively perform while its variables or assumptions are altered.”
Puddle World
1. Change position of goal
2. Change the size of the puddles
3. Increase stochasticity of actions
Mountain Car
1. Change the force of actions
Hypotheses
– Hierarchical tiling should be robust for small changes
– Incremental tiling should have similar performance

CHANGES IN GOAL POSITION
[Figure: Puddle World with goal positions labeled 0 to 4]
[Figure: PW: Different Goals with Static Hierarchy. Cumulative Reward/Episodes vs. Actions (Thousands), 0 to 50; series: Static 1, Static 2, Static 3, Static 4]
[Figure: PW: Different Goals with Dynamic Hierarchy. Same axes; series: Dynamic 1, Dynamic 2, Dynamic 3, Dynamic 4]
[Figure, four panels: Different Goals with Static Hierarchy; Transfer from Original to New Goal with Static Hierarchy; Different Goals with Dynamic Hierarchy; Transfer from Original to New Goal with Dynamic Hierarchy. Each plots Cumulative Reward/Episodes vs. Actions (Thousands), 0 to 50, for goals 1 to 4]

FUTURE WORK
1. Short term
– Develop a criterion for stopping refinement
– More research on robustness
– Better understanding of different credit assignment policies
2. Research on choosing which dimensions should be expanded
3. Expand to relational representations
– No longer a strict hierarchy
– Must decide which relations/features should be included
  • What meta-data to maintain?
  • Can we use additional background knowledge?
4. Embed within a cognitive architecture (Soar)
– Already have prototype implementation for continuous features

LIST OF PUBLICATIONS ATTRIBUTED TO THE GRANT
1. [AAAI submitted] Bloch, M., Laird, J. E. (submitted) Incremental Hierarchical Tile Coding in Reinforcement Learning. AAAI.
2. [Cog Sys 2013] Derbinsky, N., Laird, J. E. (2013) Effective and Efficient Forgetting of Learned Knowledge in Soar’s Working and Procedural Memories. Cognitive Systems Research.
3. [ACS 2012] Laird, J. E., Derbinsky, N., Tinkerhess, M. (2012) Online Determination of Value-Function Structure and Action-value Estimates for Reinforcement Learning in a Cognitive Architecture. Advances in Cognitive Systems, Volume 2. Palo Alto, California.
4. [AAMAS 2012] Derbinsky, N., Li, J., Laird, J. E. (2012) Evaluating Algorithmic Scaling in a General Episodic Memory (Extended Abstract). Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems (AAMAS). Valencia, Spain.
5. [ICCM 2012a] Derbinsky, N., Laird, J. E. (2012) Efficient Decay via Base-Level Activation. Proceedings of the 11th International Conference on Cognitive Modeling (ICCM). Berlin, Germany. Best Poster.
6. [ICCM 2012b] Derbinsky, N., Laird, J. E. (2012) Competence-Preserving Retention of Learned Knowledge in Soar's Working and Procedural Memories. Proceedings of the 11th International Conference on Cognitive Modeling (ICCM). Berlin, Germany.
7. [AAAI 2012a] Derbinsky, N., Li, J., Laird, J. E. (2012) A Multi-Domain Evaluation of Scaling in a General Episodic Memory. Proceedings of the 26th AAAI Conference on Artificial Intelligence. Toronto, Canada.
8. [AAAI 2012b] Li, J., Derbinsky, N., Laird, J. E. (2012) Functional Interactions Between Encoding and Recognition of Semantic Knowledge. Proceedings of the 26th AAAI Conference on Artificial Intelligence. Toronto, Canada. [AFOSR & ONR]
9. [BRIMS 2011] Laird, J. E., Derbinsky, N., Voigt, J. (2011) Performance Evaluation of Declarative Memory Systems in Soar. Proceedings of the 20th Behavior Representation in Modeling & Simulation Conference (BRIMS), 33-40. Sundance, UT.
10. [AISB 2011] Derbinsky, N., Laird, J. E. (2011) A Preliminary Functional Analysis of Memory in the Word Sense Disambiguation Task. Proceedings of the 2nd Symposium on Human Memory for Artificial Agents, AISB, 25-29. York, England.
11. [AAAI 2011] Derbinsky, N., Laird, J. E. (2011) A Functional Analysis of Historical Memory Retrieval Bias in the Word Sense Disambiguation Task. Proceedings of the 25th National Conference on Artificial Intelligence (AAAI), 663-668. San Francisco, CA.
12. [ACS 2011] Derbinsky, N., Laird, J. E. (2011) Effective and Efficient Management of Soar's Working Memory via Base-Level Activation. Papers from the 2011 AAAI Fall Symposium Series: Advances in Cognitive Systems (ACS), 82-89. Arlington, VA.
13. [ICCM 2010] Derbinsky, N., Laird, J. E., Smith, B. (2010) Towards Efficiently Supporting Large Symbolic Declarative Memories. Proceedings of the 10th International Conference on Cognitive Modeling (ICCM), 49-54. Philadelphia, PA.
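
As a closing illustration, the tile-splitting rule from the slide on avoiding huge memory costs (split a tile when its mean CABE is half a standard deviation above the mean, with statistics tracked incrementally) might be sketched as below. This is a sketch under stated assumptions, not the authors' algorithm: the names `Tile` and `SplitTracker` are hypothetical, "mean CABE" is taken to be a tile's CABE divided by its update count, and the population mean and standard deviation are kept with running sums, which is one simple way to stay incremental.

```python
import math


class Tile:
    """One tile's bookkeeping (hypothetical structure for illustration)."""

    def __init__(self):
        self.cabe = 0.0     # cumulative absolute Bellman error
        self.updates = 0    # number of updates credited to this tile

    @property
    def mean_cabe(self):
        return self.cabe / self.updates if self.updates else 0.0


class SplitTracker:
    """Tracks mean and std of per-tile mean CABE with O(1) work per update."""

    def __init__(self, threshold_sd=0.5):
        self.threshold_sd = threshold_sd  # "half a standard deviation"
        self.n = 0            # number of registered tiles
        self.total = 0.0      # running sum of mean CABE over tiles
        self.total_sq = 0.0   # running sum of squared mean CABE over tiles

    def register(self, tile):
        """Start tracking a tile (for example, one created by a split)."""
        self.n += 1
        self.total += tile.mean_cabe
        self.total_sq += tile.mean_cabe ** 2

    def record(self, tile, bellman_error, credit, alpha):
        """Add error weighted by credit and learning rate (assumed weighting)."""
        old = tile.mean_cabe
        tile.cabe += abs(bellman_error) * credit * alpha
        tile.updates += 1
        new = tile.mean_cabe
        # Replace the tile's old contribution with its new one.
        self.total += new - old
        self.total_sq += new * new - old * old

    def should_split(self, tile):
        """True if the tile's mean CABE exceeds mean + threshold_sd * std."""
        if self.n < 2:
            return False
        mean = self.total / self.n
        variance = max(self.total_sq / self.n - mean * mean, 0.0)
        return tile.mean_cabe > mean + self.threshold_sd * math.sqrt(variance)


if __name__ == "__main__":
    tracker = SplitTracker()
    tiles = [Tile() for _ in range(3)]
    for t in tiles:
        tracker.register(t)
    for t, err in zip(tiles, [1.0, 0.1, 0.1]):
        tracker.record(t, bellman_error=err, credit=1.0, alpha=1.0)
    print([tracker.should_split(t) for t in tiles])
```

Running sums are less numerically stable than Welford-style updates over long runs; they are used here only because a tile's value changes in place, which makes replacing its old contribution straightforward.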